mirror of

https://github.com/crawlab-team/crawlab.git

synced 2026-01-23 17:31:11 +01:00

Merge branch 'develop'


9

.gitattributes

vendored

Normal file

@@ -0,0 +1,9 @@
*.md linguist-language=Go
*.yml linguist-language=Go
*.html linguist-language=Go
*.js linguist-language=Go
*.xml linguist-language=Go
*.css linguist-language=Go
*.sql linguist-language=Go
*.uml linguist-language=Go
*.cmd linguist-language=Go

24

.github/ISSUE_TEMPLATE/bug_report.md

vendored

Normal file

@@ -0,0 +1,24 @@
---
name: Bug report
about: Create a report to help us improve
title: ''
labels: 'bug'
assignees: ''

---

**Describe the bug**
A clear and concise description of what the bug is.

**To Reproduce**
Steps to reproduce the behavior:
1. Go to '...'
2. Click on '....'
3. Scroll down to '....'
4. See error

**Expected behavior**
A clear and concise description of what you expected to happen.

**Screenshots**
If applicable, add screenshots to help explain your problem.

23

.github/ISSUE_TEMPLATE/bug_report_zh.md

vendored

Normal file

@@ -0,0 +1,23 @@
---
name: Bug report
about: Create a bug report to help us improve the product
title: ''
labels: 'bug'
assignees: ''

---

**Bug description**
For example, when xxx, feature xxx does not work.

**Steps to reproduce**
The steps to reproduce this bug are as follows:
1.
2.
3.

**Expected result**
xxx works.

**Screenshots**


17

.github/ISSUE_TEMPLATE/feature_request.md

vendored

Normal file

@@ -0,0 +1,17 @@
---
name: Feature request
about: Suggest an idea for this project
title: ''
labels: 'enhancement'
assignees: ''

---

**Is your feature request related to a problem? Please describe.**
A clear and concise description of what the problem is. Ex. I'm always frustrated when [...]

**Describe the solution you'd like**
A clear and concise description of what you want to happen.

**Describe alternatives you've considered**
A clear and concise description of any alternative solutions or features you've considered.

17

.github/ISSUE_TEMPLATE/feature_request_zh.md

vendored

Normal file

@@ -0,0 +1,17 @@
---
name: Feature request
about: Suggestions for optimizations and new features
title: ''
labels: 'enhancement'
assignees: ''

---

**Please describe the problem this request tries to solve**
For example, when xxx, I am always frustrated by the current design of xxx.

**Please describe the solution you think would work**
For example, adding feature xxx would solve the problem.

**Alternatives considered**
For example, using xxx could also solve the problem.

3

.gitignore

vendored

@@ -121,4 +121,5 @@ _book/
.idea
*.lock

backend/spiders
backend/spiders
spiders/*.zip


190

CHANGELOG-zh.md

Normal file

@@ -0,0 +1,190 @@
# 0.4.5 (unknown)
### Features / Enhancement
- **Interactive Tutorial**. Guides users through the main features of Crawlab.
- **Global Environment Variables**. Global environment variables can be set and are passed into all spider programs. [#177](https://github.com/crawlab-team/crawlab/issues/177)
- **Project**. Allow users to link spiders to projects. [#316](https://github.com/crawlab-team/crawlab/issues/316)
- **Demo Spiders**. Demo spiders are added automatically on initialization. [#379](https://github.com/crawlab-team/crawlab/issues/379)
- **User Management Optimization**. Restrict the privileges of admin users. [#456](https://github.com/crawlab-team/crawlab/issues/456)
- **Settings Page Optimization**.
- **Task Results Page Optimization**.

### Bug Fixes
- **Unable to find spider file error**. [#485](https://github.com/crawlab-team/crawlab/issues/485)
- **Clicking the delete button results in a redirect**. [#480](https://github.com/crawlab-team/crawlab/issues/480)
- **Unable to create files in an empty spider**. [#479](https://github.com/crawlab-team/crawlab/issues/479)
- **Download results error**. [#465](https://github.com/crawlab-team/crawlab/issues/465)
- **crawlab-sdk CLI error**. [#458](https://github.com/crawlab-team/crawlab/issues/458)
- **Page refresh issue**. [#441](https://github.com/crawlab-team/crawlab/issues/441)
- **Results do not support JSON**. [#202](https://github.com/crawlab-team/crawlab/issues/202)
- **Fixed the "getting all spiders after deleting a spider" error**.
- **Fixed i18n warning**.


# 0.4.4 (2020-01-17)

|

||||

|

||||

### 功能 / 优化

|

||||

- **邮件通知**. 允许用户发送邮件消息通知.

|

||||

- **钉钉机器人通知**. 允许用户发送钉钉机器人消息通知.

|

||||

- **企业微信机器人通知**. 允许用户发送企业微信机器人消息通知.

|

||||

- **API 地址优化**. 在前端加入相对路径,因此用户不需要特别注明 `CRAWLAB_API_ADDRESS`.

|

||||

- **SDK 兼容**. 允许用户通过 Crawlab SDK 与 Scrapy 或通用爬虫集成.

|

||||

- **优化文件管理**. 加入树状文件侧边栏,让用户更方便的编辑文件.

|

||||

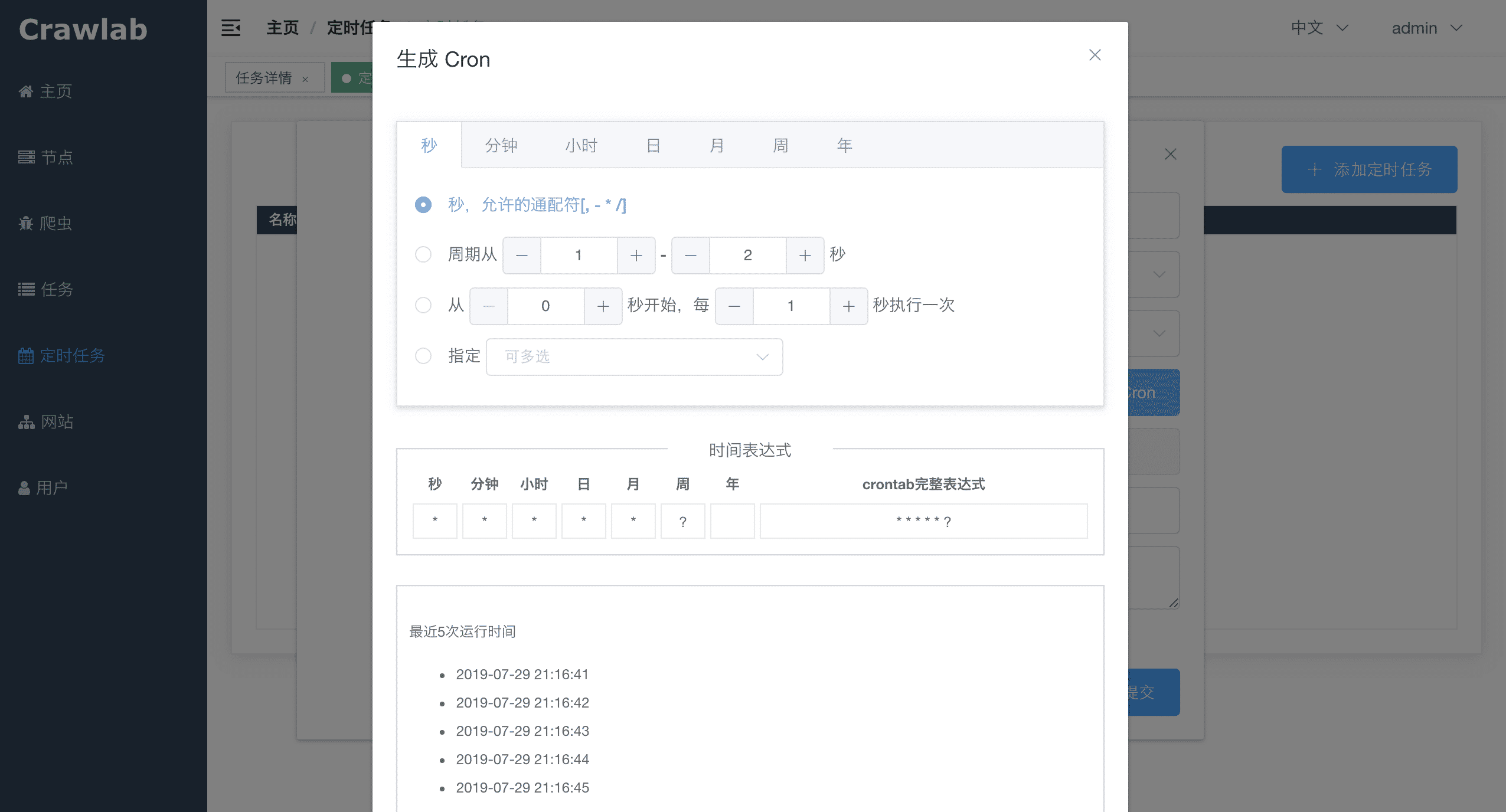

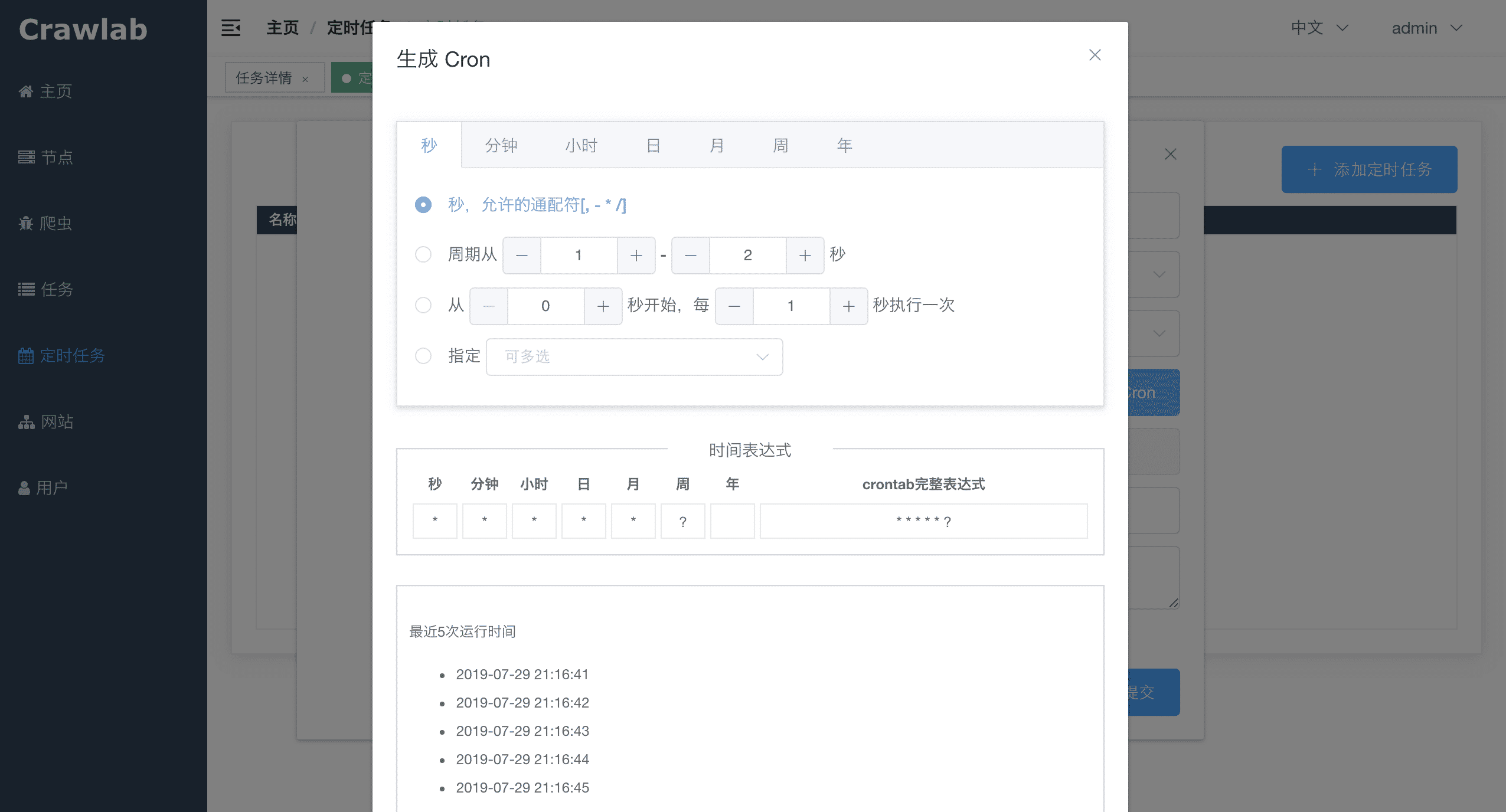

- **高级定时任务 Cron**. 允许用户通过 Cron 可视化编辑器编辑定时任务.

|

||||

|

||||

### Bug 修复

|

||||

- **`nil retuened` 错误**.

|

||||

- **使用 HTTPS 出现的报错**.

|

||||

- **无法在爬虫列表页运行可配置爬虫**.

|

||||

- **上传爬虫文件缺少表单验证**.

|

||||

|

||||

# 0.4.3 (2020-01-07)

### Features / Enhancement
- **Dependency Installation**. Allow users to install/uninstall dependencies and add programming languages (Node.js only for now) on the platform web interface.
- **Pre-install Programming Languages in Docker**. Allow Docker users to set `CRAWLAB_SERVER_LANG_NODE` to `Y` to pre-install the `Node.js` environment.
- **Schedule List in Spider Detail Page**. Allow users to view, add and edit schedules in the spider detail page. [#360](https://github.com/crawlab-team/crawlab/issues/360)
- **Cron Expression Aligned with Linux**. Changed the expression from 6 elements to 5 elements, consistent with Linux.
- **Enable/Disable Schedules**. Allow users to enable/disable schedules. [#297](https://github.com/crawlab-team/crawlab/issues/297)
- **Better Task Management**. Allow users to batch delete tasks. [#341](https://github.com/crawlab-team/crawlab/issues/341)
- **Better Spider Management**. Allow users to filter and sort spiders in the spider list page.
- **Added Chinese `CHANGELOG`**.
- **Added GitHub star button at the top**.

### Bug Fixes
- **Schedule issue**. [#423](https://github.com/crawlab-team/crawlab/issues/423)
- **Spider zip file upload issue**. [#403](https://github.com/crawlab-team/crawlab/issues/403) [#407](https://github.com/crawlab-team/crawlab/issues/407)
- **Crash caused by network problems**. [#340](https://github.com/crawlab-team/crawlab/issues/340)
- **Schedules not running correctly**
- **Schedule list columns misaligned**
- **Refresh button redirect error**


# 0.4.2 (2019-12-26)
### Features / Enhancement
- **Disclaimer**. Added a disclaimer.
- **Fetch version number through the API**. [#371](https://github.com/crawlab-team/crawlab/issues/371)
- **Allow user registration through configuration**. [#346](https://github.com/crawlab-team/crawlab/issues/346)
- **Allow adding new users**.
- **More Advanced File Management**. Allow users to add, edit, rename and delete code files. [#286](https://github.com/crawlab-team/crawlab/issues/286)
- **Optimized Spider Creation Process**. Allow users to create an empty custom spider before uploading a zip file.
- **Better Task Management**. Allow users to filter tasks by selected criteria. [#341](https://github.com/crawlab-team/crawlab/issues/341)

### Bug Fixes
- **Duplicated nodes**. [#391](https://github.com/crawlab-team/crawlab/issues/391)
- **"mongodb no reachable" error**. [#373](https://github.com/crawlab-team/crawlab/issues/373)


# 0.4.1 (2019-12-13)
### Features / Enhancement
- **Spiderfile Optimization**. Changed stages from an array to a dictionary. [#358](https://github.com/crawlab-team/crawlab/issues/358)
- **Baidu Tongji Update**.

### Bug Fixes
- **Unable to display schedules**. [#353](https://github.com/crawlab-team/crawlab/issues/353)
- **Duplicate node registration**. [#334](https://github.com/crawlab-team/crawlab/issues/334)


# 0.4.0 (2019-12-06)
### Features / Enhancement
- **Configurable Spider**. Allow users to add a `Spiderfile` to configure crawling rules.
- **Execution Mode**. Allow users to choose among 3 task execution modes: *All Nodes*, *Selected Nodes* and *Random*.

### Bug Fixes
- **Task accidentally killed**. [#306](https://github.com/crawlab-team/crawlab/issues/306)
- **Documentation fix**. [#301](https://github.com/crawlab-team/crawlab/issues/258) [#301](https://github.com/crawlab-team/crawlab/issues/258)
- **Direct deploy incompatible with Windows**. [#288](https://github.com/crawlab-team/crawlab/issues/288)
- **Log files lost**. [#269](https://github.com/crawlab-team/crawlab/issues/269)


# 0.3.5 (2019-10-28)
### Features / Enhancement
- **Graceful Shutdown**. [detail](https://github.com/crawlab-team/crawlab/commit/63fab3917b5a29fd9770f9f51f1572b9f0420385)
- **Node Info Optimization**. [detail](https://github.com/crawlab-team/crawlab/commit/973251a0fbe7a2184ac0da09e0404a17c736aee7)
- **Add System Environment Variables to Tasks**. [detail](https://github.com/crawlab-team/crawlab/commit/4ab4892471965d6342d30385578ca60dc51f8ad3)
- **Auto-refresh Task Logs**. [detail](https://github.com/crawlab-team/crawlab/commit/4ab4892471965d6342d30385578ca60dc51f8ad3)
- **Allow HTTPS Deployment**. [detail](https://github.com/crawlab-team/crawlab/commit/5d8f6f0c56768a6e58f5e46cbf5adff8c7819228)

### Bug Fixes
- **Unable to fetch the spider list in schedules**. [detail](https://github.com/crawlab-team/crawlab/commit/311f72da19094e3fa05ab4af49812f58843d8d93)
- **Unable to fetch worker node info**. [detail](https://github.com/crawlab-team/crawlab/commit/6af06efc17685a9e232e8c2b5fd819ec7d2d1674)
- **Unable to select nodes when running spider tasks**. [detail](https://github.com/crawlab-team/crawlab/commit/31f8e03234426e97aed9b0bce6a50562f957edad)
- **Unable to fetch the result count when the result set is very large**. [#260](https://github.com/crawlab-team/crawlab/issues/260)
- **Node issue in schedules**. [#244](https://github.com/crawlab-team/crawlab/issues/244)


# 0.3.1 (2019-08-25)
### Features / Enhancement
- **Docker Image Optimization**. Split the Docker image further into alpine-based master, worker and frontend images.
- **Unit Tests**. Cover part of the backend code with unit tests.
- **Frontend Optimization**. Login page, button size, upload UI hints.
- **More Flexible Node Registration**. Allow users to pass a variable as the registration key instead of the default MAC address.

### Bug Fixes
- **Large spider file upload error**. Memory crash when uploading large spider files. [#150](https://github.com/crawlab-team/crawlab/issues/150)
- **Unable to sync spiders**. Fixed the spider file sync problem by raising the write permission level. [#114](https://github.com/crawlab-team/crawlab/issues/114)
- **Spider page issue**. Fixed by removing the `Site` field. [#112](https://github.com/crawlab-team/crawlab/issues/112)
- **Node display issue**. Nodes were not displayed correctly when Docker containers ran on multiple machines. [#99](https://github.com/crawlab-team/crawlab/issues/99)

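The "More Flexible Node Registration" entry in 0.3.1 can be pictured with a minimal Python sketch. It is not Crawlab's implementation (the backend is written in Go); the variable name `DEMO_REGISTER_KEY` and the function name are hypothetical, chosen only to show the fallback from a user-supplied key to the machine's MAC address.

```python
import os
import uuid


def node_register_key(env=os.environ):
    """Return a user-supplied registration key, or fall back to the MAC address.

    DEMO_REGISTER_KEY is a hypothetical variable name used for illustration.
    """
    custom = env.get("DEMO_REGISTER_KEY")
    if custom:
        return custom
    mac = uuid.getnode()  # 48-bit hardware address as an integer
    # Format the six bytes, most significant first, as aa:bb:cc:dd:ee:ff.
    return ":".join(f"{(mac >> shift) & 0xff:02x}" for shift in range(40, -8, -8))


custom_key = node_register_key({"DEMO_REGISTER_KEY": "worker-1"})
default_key = node_register_key({})
```

A custom key is useful when several containers share a MAC address or when the address changes between restarts.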

# 0.3.0 (2019-07-31)

|

||||

### 功能 / 优化

|

||||

- **Golang 后端**: 将后端由 Python 重构为 Golang,很大的提高了稳定性和性能.

|

||||

- **节点网络图**: 节点拓扑图可视化.

|

||||

- **节点系统信息**: 可以查看包括操作系统、CPU数量、可执行文件在内的系统信息.

|

||||

- **节点监控改进**: 节点通过 Redis 来监控和注册.

|

||||

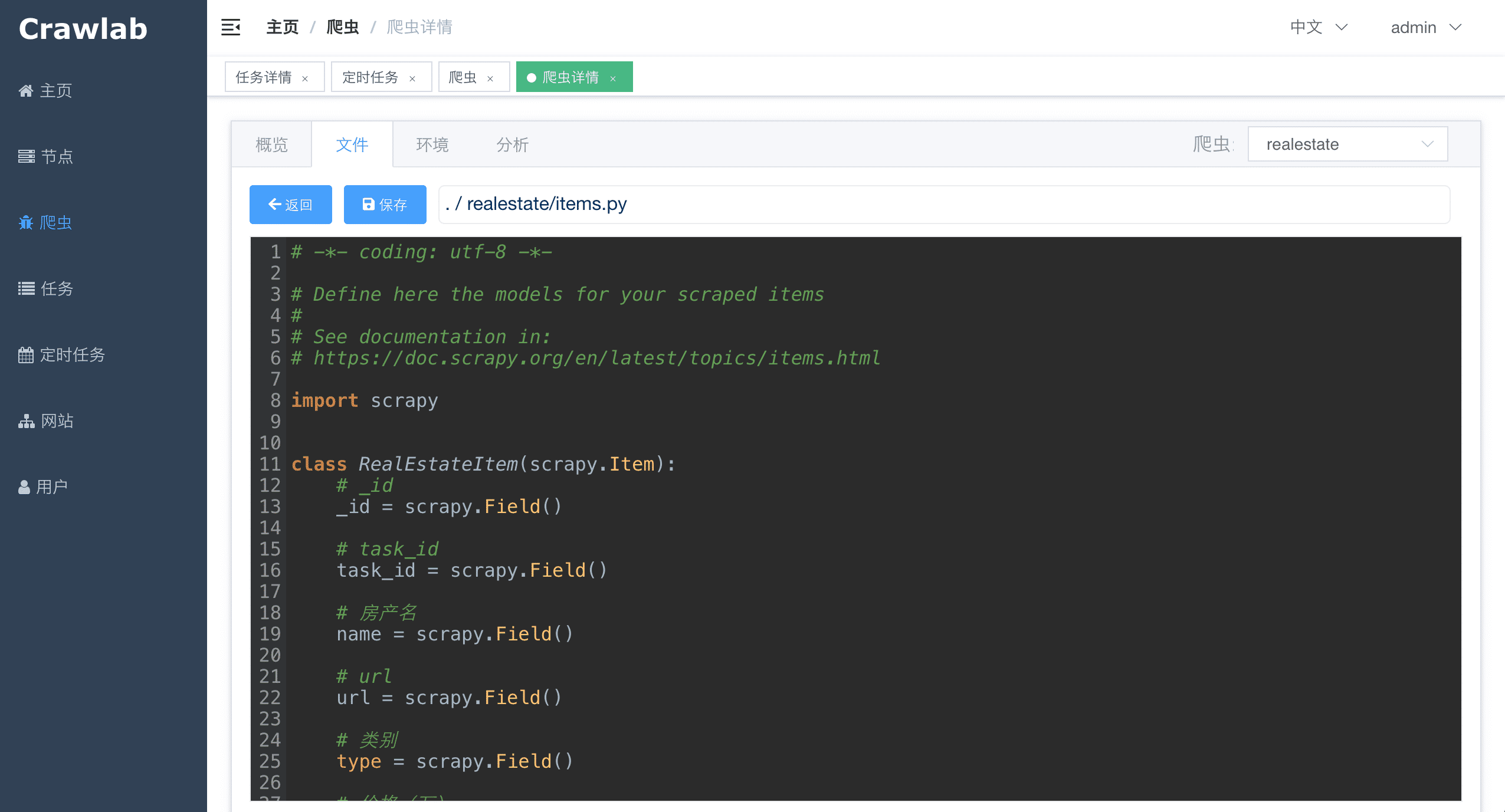

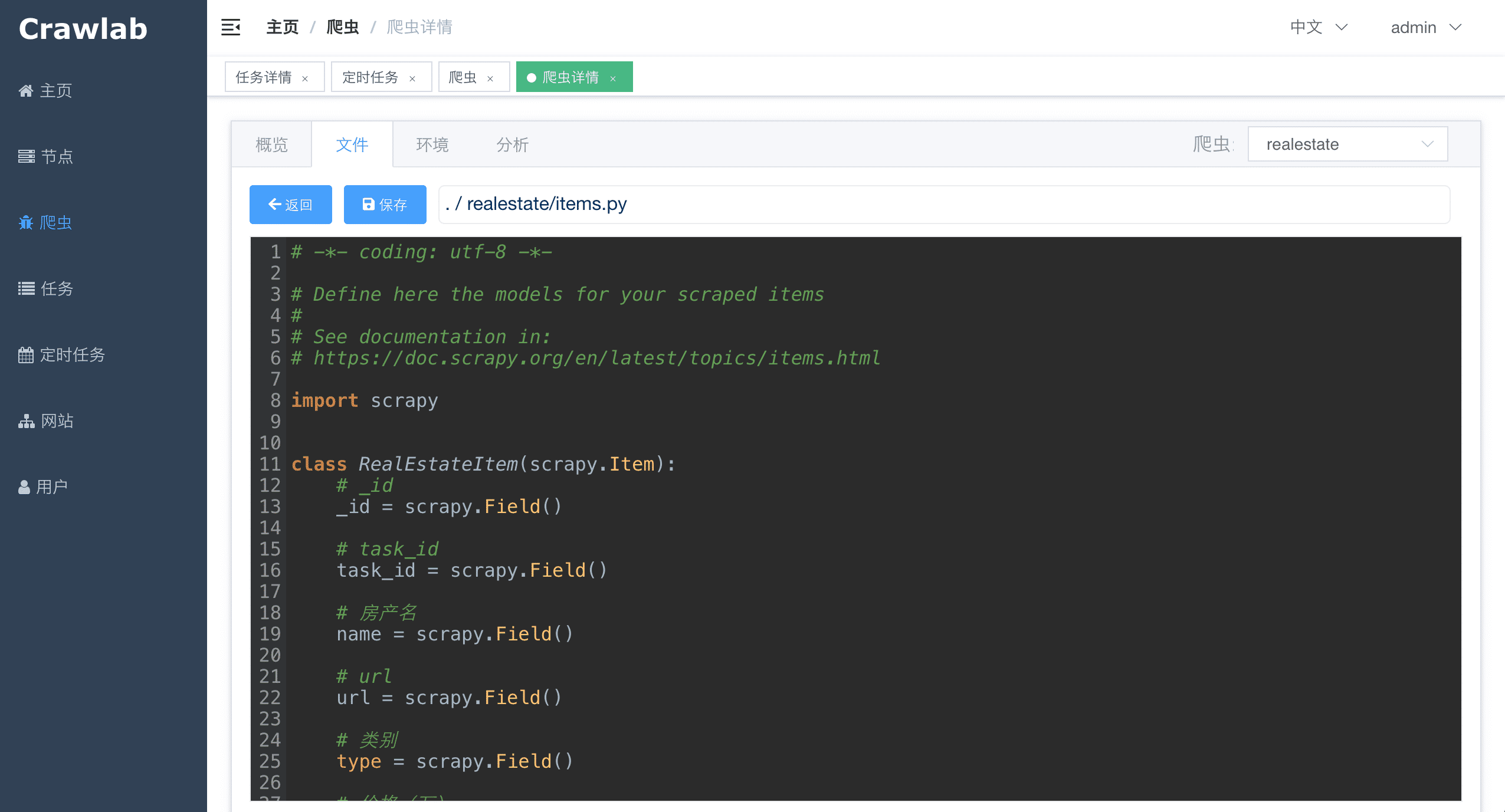

- **文件管理**: 可以在线编辑爬虫文件,包括代码高亮.

|

||||

- **登录页/注册页/用户管理**: 要求用户登录后才能使用 Crawlab, 允许用户注册和用户管理,有一些基于角色的鉴权机制.

|

||||

- **自动部署爬虫**: 爬虫将被自动部署或同步到所有在线节点.

|

||||

- **更小的 Docker 镜像**: 瘦身版 Docker 镜像,通过多阶段构建将 Docker 镜像大小从 1.3G 减小到 700M 左右.

|

||||

|

||||

### Bug 修复

|

||||

- **节点状态**. 节点状态不会随着节点下线而更新. [#87](https://github.com/tikazyq/crawlab/issues/87)

|

||||

- **爬虫部署错误**. 通过自动爬虫部署来修复 [#83](https://github.com/tikazyq/crawlab/issues/83)

|

||||

- **节点无法显示**. 节点无法显示在线 [#81](https://github.com/tikazyq/crawlab/issues/81)

|

||||

- **定时任务无法工作**. 通过 Golang 后端修复 [#64](https://github.com/tikazyq/crawlab/issues/64)

|

||||

- **Flower 错误**. 通过 Golang 后端修复 [#57](https://github.com/tikazyq/crawlab/issues/57)

|

||||

|

||||

# 0.2.4 (2019-07-07)
### Features / Enhancement
- **Documentation**: Better and more detailed documentation.
- **Better Crontab**: Generate Cron expressions through the UI.
- **Better Performance**: Switched from the native flask engine to `gunicorn`. [#78](https://github.com/tikazyq/crawlab/issues/78)

### Bug Fixes
- **Deleting spiders**. Deleting a spider should remove not only the database record but also the related folder, tasks and schedules. [#69](https://github.com/tikazyq/crawlab/issues/69)
- **MongoDB auth**. Allow users to specify `authenticationDatabase` to connect to `mongodb`. [#68](https://github.com/tikazyq/crawlab/issues/68)
- **Windows compatibility**. Added `eventlet` to `requirements.txt`. [#59](https://github.com/tikazyq/crawlab/issues/59)


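# 0.2.3 (2019-06-12)
### Features / Enhancement
- **Docker**: Users can run the Docker image to speed up deployment.
- **CLI**: Allow users to run Crawlab programs from the command line.
- **Upload Spiders**: Allow users to upload custom spiders to Crawlab.
- **Edit Fields on Preview**: Allow users to edit fields while previewing data in configurable spiders.

### Bug Fixes
- **Spider pagination**. Fixed the pagination problem in the spider list page.
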

# 0.2.2 (2019-05-30)
### Features / Enhancement
- **Automatic Field Extraction**: Automatically extract fields in the configurable spider list page.
- **Download Results**: Allow downloading results as a CSV file.
- **Baidu Tongji**: Allow users to choose whether to send statistics to Baidu Tongji.

### Bug Fixes
- **Results page pagination**. [#45](https://github.com/tikazyq/crawlab/issues/45)
- **Schedules triggered repeatedly**: Set Flask DEBUG to False so that schedules are not triggered more than once. [#32](https://github.com/tikazyq/crawlab/issues/32)
- **Frontend environment**: Added `VUE_APP_BASE_URL` as a production-mode environment variable so that the API address is not always `localhost`. [#30](https://github.com/tikazyq/crawlab/issues/30)


# 0.2.1 (2019-05-27)

|

||||

- **可配置爬虫**: 允许用户创建爬虫来抓取数据,而不用编写代码.

|

||||

|

||||

# 0.2 (2019-05-10)

|

||||

|

||||

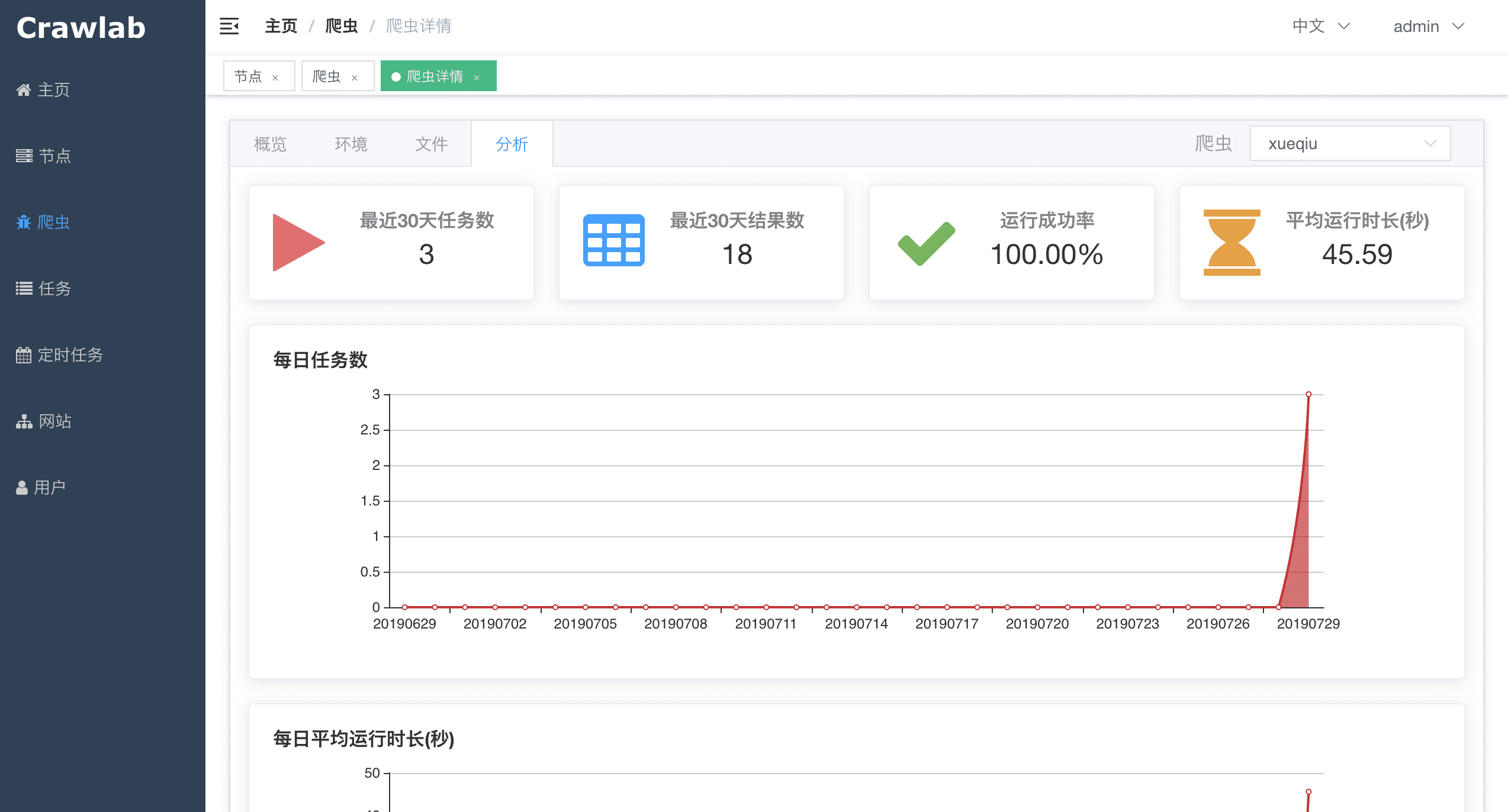

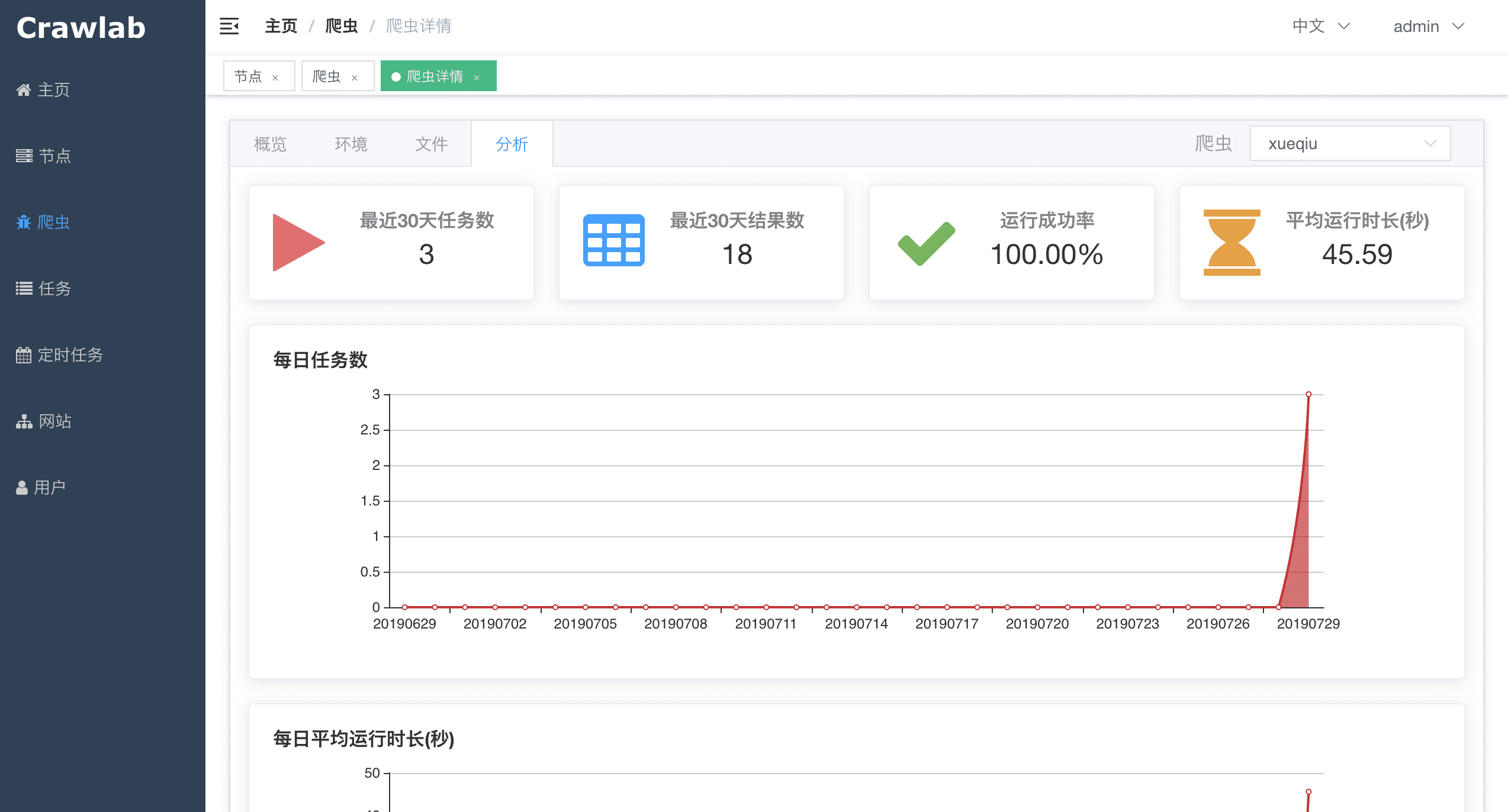

- **高级数据统计**: 爬虫详情页的高级数据统计.

|

||||

- **网站数据**: 加入网站列表(中国),允许用户查看 robots.txt、首页响应时间等信息.

|

||||

|

||||

# 0.1.1 (2019-04-23)

|

||||

|

||||

- **基础统计**: 用户可以查看基础统计数据,包括爬虫和任务页中的失败任务数、结果数.

|

||||

- **近实时任务信息**: 周期性(5 秒)向服务器轮训数据来实现近实时查看任务信息.

|

||||

- **定时任务**: 利用 apscheduler 实现定时任务,允许用户设置类似 Cron 的定时任务.

|

||||

|

||||
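The periodic polling described in the 0.1.1 entry can be sketched as follows. This is only an illustration in Python of the general pattern, not the project's own frontend code; `poll`, `fetch` and `is_done` are hypothetical names, and the 5-second default mirrors the interval mentioned above.

```python
import time


def poll(fetch, is_done, interval=5.0, max_polls=100, sleep=time.sleep):
    """Call fetch() repeatedly, waiting `interval` seconds between calls.

    Stops as soon as is_done(result) is true or after max_polls attempts,
    and returns the last result fetched.
    """
    result = None
    for _ in range(max_polls):
        result = fetch()
        if is_done(result):
            break
        sleep(interval)
    return result


# Usage with a fake server whose task finishes on the third poll.
# The sleep function is replaced so the example runs instantly.
states = iter(["pending", "running", "finished"])
final = poll(lambda: next(states), lambda s: s == "finished", sleep=lambda _: None)
```

Injecting `sleep` keeps the loop testable; a shorter interval gives fresher data at the cost of more requests to the server.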

# 0.1 (2019-04-17)

- **Initial release**

92

CHANGELOG.md

@@ -1,3 +1,95 @@
# 0.4.5 (2020-02-03)
### Features / Enhancement
- **Interactive Tutorial**. Guide users through the main functionalities of Crawlab.
- **Global Environment Variables**. Allow users to set global environment variables, which will be passed into all spider programs. [#177](https://github.com/crawlab-team/crawlab/issues/177)
- **Project**. Allow users to link spiders to projects. [#316](https://github.com/crawlab-team/crawlab/issues/316)
- **Demo Spiders**. Added demo spiders when Crawlab is initialized. [#379](https://github.com/crawlab-team/crawlab/issues/379)
- **User Admin Optimization**. Restrict privileges of admin users. [#456](https://github.com/crawlab-team/crawlab/issues/456)
- **Setting Page Optimization**.
- **Task Results Optimization**.

### Bug Fixes
- **Unable to find spider file error**. [#485](https://github.com/crawlab-team/crawlab/issues/485)
- **Clicking the delete button results in a redirect**. [#480](https://github.com/crawlab-team/crawlab/issues/480)
- **Unable to create files in an empty spider**. [#479](https://github.com/crawlab-team/crawlab/issues/479)
- **Download results error**. [#465](https://github.com/crawlab-team/crawlab/issues/465)
- **crawlab-sdk CLI error**. [#458](https://github.com/crawlab-team/crawlab/issues/458)
- **Page refresh issue**. [#441](https://github.com/crawlab-team/crawlab/issues/441)
- **Results do not support JSON**. [#202](https://github.com/crawlab-team/crawlab/issues/202)
- **Getting all spiders after deleting a spider**.
- **i18n warning**.

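The "Global Environment Variables" entry in 0.4.5 says the variables are passed into all spider programs. A minimal sketch of that idea, assuming a spider is started as a child process: the launcher merges the global variables into the child's environment, and the spider reads them like any other environment variable. The name `DEMO_PROXY_URL` and the helper `run_spider` are hypothetical and are not part of Crawlab.

```python
import os
import subprocess
import sys


def run_spider(cmd, global_env):
    """Run a spider command with global variables merged into its environment."""
    env = {**os.environ, **global_env}
    result = subprocess.run(cmd, env=env, capture_output=True, text=True, check=True)
    return result.stdout.strip()


# Hypothetical global variable, as it might be configured once on the platform.
global_env = {"DEMO_PROXY_URL": "http://proxy.example:3128"}

# A stand-in "spider": a child Python process that prints the variable it received.
spider_cmd = [sys.executable, "-c", "import os; print(os.environ['DEMO_PROXY_URL'])"]
output = run_spider(spider_cmd, global_env)
```

Because the values travel through the process environment, the same mechanism works for spiders written in any language.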

# 0.4.4 (2020-01-17)
### Features / Enhancement
- **Email Notification**. Allow users to send email notifications.
- **DingTalk Robot Notification**. Allow users to send DingTalk Robot notifications.
- **WeChat Robot Notification**. Allow users to send WeChat Robot notifications.
- **API Address Optimization**. Added relative URL path in frontend so that users don't have to specify `CRAWLAB_API_ADDRESS` explicitly.
- **SDK Compatibility**. Allow users to integrate Scrapy or general spiders with Crawlab SDK.
- **Enhanced File Management**. Added a tree-like file sidebar so that users can edit files much more easily.
- **Advanced Schedule Cron**. Allow users to edit schedule cron with a visual cron editor.

### Bug Fixes
- **`nil retuened` error**.
- **Error when using HTTPS**.
- **Unable to run Configurable Spiders on Spider List**.
- **Missing form validation before uploading spider files**.


# 0.4.3 (2020-01-07)

### Features / Enhancement
- **Dependency Installation**. Allow users to install/uninstall dependencies and add programming languages (Node.js only for now) on the platform web interface.
- **Pre-install Programming Languages in Docker**. Allow Docker users to set `CRAWLAB_SERVER_LANG_NODE` as `Y` to pre-install `Node.js` environments.
- **Add Schedule List in Spider Detail Page**. Allow users to view / add / edit schedule cron jobs in the spider detail page. [#360](https://github.com/crawlab-team/crawlab/issues/360)
- **Align Cron Expression with Linux**. Change the expression from 6 elements to 5 elements, as in Linux.
- **Enable/Disable Schedule Cron**. Allow users to enable/disable the schedule jobs. [#297](https://github.com/crawlab-team/crawlab/issues/297)
- **Better Task Management**. Allow users to batch delete tasks. [#341](https://github.com/crawlab-team/crawlab/issues/341)
- **Better Spider Management**. Allow users to sort and filter spiders in the spider list page.
- **Added Chinese `CHANGELOG`**.
- **Added GitHub Star Button at Nav Bar**.

### Bug Fixes
- **Schedule Cron Task Issue**. [#423](https://github.com/crawlab-team/crawlab/issues/423)
- **Upload Spider Zip File Issue**. [#403](https://github.com/crawlab-team/crawlab/issues/403) [#407](https://github.com/crawlab-team/crawlab/issues/407)
- **Exit due to Network Failure**. [#340](https://github.com/crawlab-team/crawlab/issues/340)
- **Cron Jobs not Running Correctly**
- **Schedule List Columns Mis-positioned**
- **Clicking Refresh Button Redirected to 404 Page**

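The 0.4.3 change from 6-element to 5-element cron expressions can be illustrated with a small conversion helper. This is a sketch under one stated assumption: that the extra field in the 6-element form is a leading seconds field, which is common in schedulers but is not stated in the changelog. The function name is hypothetical.

```python
def to_five_field_cron(expr: str) -> str:
    """Normalize a cron expression to the 5-field Linux form.

    Assumption: in a 6-field expression the first field is seconds,
    so it is dropped. A 5-field expression is returned unchanged.
    """
    fields = expr.split()
    if len(fields) == 5:
        return " ".join(fields)
    if len(fields) == 6:
        return " ".join(fields[1:])
    raise ValueError(f"expected 5 or 6 cron fields, got {len(fields)}")


legacy = "0 */5 * * * *"          # second 0 of every 5th minute
converted = to_five_field_cron(legacy)
```

With the 5-field form, the remaining fields are minute, hour, day of month, month and day of week, matching a Linux crontab entry.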

# 0.4.2 (2019-12-26)
### Features / Enhancement
- **Disclaimer**. Added page for Disclaimer.
- **Call API to fetch version**. [#371](https://github.com/crawlab-team/crawlab/issues/371)
- **Configure to allow user registration**. [#346](https://github.com/crawlab-team/crawlab/issues/346)
- **Allow adding new users**.
- **More Advanced File Management**. Allow users to add / edit / rename / delete files. [#286](https://github.com/crawlab-team/crawlab/issues/286)
- **Optimized Spider Creation Process**. Allow users to create an empty customized spider before uploading the zip file.
- **Better Task Management**. Allow users to filter tasks by selecting certain criteria. [#341](https://github.com/crawlab-team/crawlab/issues/341)

### Bug Fixes
- **Duplicated nodes**. [#391](https://github.com/crawlab-team/crawlab/issues/391)
- **"mongodb no reachable" error**. [#373](https://github.com/crawlab-team/crawlab/issues/373)


# 0.4.1 (2019-12-13)
### Features / Enhancement
- **Spiderfile Optimization**. Stages changed from dictionary to array. [#358](https://github.com/crawlab-team/crawlab/issues/358)
- **Baidu Tongji Update**.

### Bug Fixes
- **Unable to display schedule tasks**. [#353](https://github.com/crawlab-team/crawlab/issues/353)
- **Duplicate node registration**. [#334](https://github.com/crawlab-team/crawlab/issues/334)


# 0.4.0 (2019-12-06)
### Features / Enhancement
- **Configurable Spider**. Allow users to add spiders using *Spiderfile* to configure crawling rules.
- **Execution Mode**. Allow users to select 3 modes for task execution: *All Nodes*, *Selected Nodes* and *Random*.

### Bug Fixes
- **Task accidentally killed**. [#306](https://github.com/crawlab-team/crawlab/issues/306)
- **Documentation fix**. [#301](https://github.com/crawlab-team/crawlab/issues/258) [#301](https://github.com/crawlab-team/crawlab/issues/258)
- **Direct deploy incompatible with Windows**. [#288](https://github.com/crawlab-team/crawlab/issues/288)
- **Log files lost**. [#269](https://github.com/crawlab-team/crawlab/issues/269)

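The three execution modes named in 0.4.0 (*All Nodes*, *Selected Nodes*, *Random*) can be read as three ways of choosing target nodes from the set of online nodes. The sketch below is one plausible interpretation in Python, not the project's Go code; the mode strings and the function name `pick_nodes` are hypothetical.

```python
import random


def pick_nodes(mode, online_nodes, selected=None, rng=random):
    """Choose the nodes a task should run on for a given execution mode.

    mode: "all", "selected" or "random" (hypothetical identifiers).
    """
    nodes = list(online_nodes)
    if mode == "all":
        return nodes
    if mode == "selected":
        wanted = set(selected or [])
        # Keep only requested nodes that are actually online.
        return [n for n in nodes if n in wanted]
    if mode == "random":
        return [rng.choice(nodes)] if nodes else []
    raise ValueError(f"unknown execution mode: {mode}")


online = ["master", "worker-1", "worker-2"]
all_targets = pick_nodes("all", online)
chosen_targets = pick_nodes("selected", online, selected=["worker-2", "offline-node"])
random_target = pick_nodes("random", online)
```

In this reading, *Random* runs the task on a single node, while the other two modes may run it on several nodes.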

# 0.3.5 (2019-10-28)
### Features / Enhancement
- **Graceful Shutdown**. [detail](https://github.com/crawlab-team/crawlab/commit/63fab3917b5a29fd9770f9f51f1572b9f0420385)


12

DISCLAIMER-zh.md

Normal file

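@@ -0,0 +1,12 @@
# Disclaimer

This Disclaimer and Privacy Protection Statement (hereinafter "the Disclaimer" or "this Statement") applies to the series of software (hereinafter "Crawlab") developed by the Crawlab development team (hereinafter "the development team"). If, after reading this Statement, you do not agree with any of its terms or have doubts about it, please stop using our software immediately. If you have started or are using Crawlab, you are deemed to have read and agreed to all terms of this Statement.

1. General: by installing Crawlab and using the services and functions it provides, you agree to enter into this agreement with the development team. The development team may change the terms at any time at its sole discretion. The amended terms take effect automatically as soon as they are published on the GitHub disclaimer page.
2. This product is a Golang-based distributed crawler management platform that supports Python, NodeJS, Go, Java, PHP and other programming languages as well as a variety of crawler frameworks.
3. The Crawlab development team is not responsible for, and bears no legal liability for, any accident, negligence, breach of contract, defamation, or copyright or intellectual property infringement arising from the use of Crawlab, or for any resulting loss (including computer virus infection caused by downloading Crawlab from unofficial sites).
4. Users bear the risk of using Crawlab themselves. We make no guarantee of any kind, and we bear no legal liability if users cannot upgrade or update normally for technical reasons such as network conditions or communication lines.
5. When using Crawlab to crawl target websites, users must comply with the Cybersecurity Law and other laws and regulations related to crawlers. Do not use illegal means such as collecting citizens' personal information without authorization, paralyzing the target website through DDoS, or ignoring the target website's robots.txt protocol.
6. Crawlab respects and protects the personal privacy of all users and will not steal any information from users' computers.
7. Copyright of the system: the Crawlab development team owns the intellectual property rights, copyrights and usage rights of all products it develops or co-develops. These products are protected by applicable intellectual property, copyright, trademark, service mark, patent or other laws.
8. Distribution: any company or individual is allowed to publish and distribute our software on the Internet, but the Crawlab development team is not responsible for any legal or criminal matters that may result from such distribution.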

12

DISCLAIMER.md

Normal file

@@ -0,0 +1,12 @@
# Disclaimer

This Disclaimer and Privacy Protection Statement (hereinafter referred to as "the Disclaimer" or "this Statement") applies to the series of software (hereinafter referred to as "Crawlab") developed by the Crawlab development team (hereinafter referred to as "the development team"). If, after reading this Statement, you do not agree with any of its terms or have doubts about it, please stop using our software immediately. If you have started or are using Crawlab, you have read and agree to all terms of this Statement.

1. General: by installing Crawlab and using the services and functions provided by Crawlab, you have agreed to establish this agreement with the development team. The development team may change the terms at any time at its sole discretion. The amended terms shall take effect automatically as soon as they are published on the GitHub disclaimer page.
2. This product is a distributed crawler management platform based on Golang, supporting Python, NodeJS, Go, Java, PHP and other programming languages as well as a variety of crawler frameworks.
3. The Crawlab development team shall not be responsible for any accident, negligence, breach of contract, defamation, or copyright or intellectual property infringement caused by the use of Crawlab, or for any loss caused by it (including computer virus infection caused by downloading Crawlab from unofficial sites), and shall not bear any legal responsibility.
4. Users bear the risk of using Crawlab themselves. We do not make any form of guarantee, and we will not bear any legal responsibility for a user's failure to upgrade or update normally due to technical reasons such as network conditions and communication lines.
5. When users use Crawlab to crawl target websites, they need to comply with the laws and regulations related to crawlers, such as the Cybersecurity Law. Do not use illegal means such as collecting personal information of citizens without authorization, paralyzing the target website with DDoS, or failing to comply with the target website's robots.txt protocol.
6. Crawlab respects and protects the personal privacy of all users and will not steal any information from users' computers.
7. Copyright of the system: the Crawlab development team owns the intellectual property rights, copyrights and use rights for all developed or jointly developed products, which are protected by applicable intellectual property, copyright, trademark, service mark, patent or other laws.
8. Distribution: any company or individual is allowed to publish or distribute our software on the Internet, but the Crawlab development team shall not be responsible for any legal or criminal matters that may be caused by the company or individual distributing the software.

20

Dockerfile

@@ -15,34 +15,34 @@ WORKDIR /app

# install frontend
RUN npm config set unsafe-perm true
RUN npm install -g yarn && yarn install --registry=https://registry.npm.taobao.org
RUN npm install -g yarn && yarn install

RUN npm run build:prod

# images
FROM ubuntu:latest

ADD . /app

# set as non-interactive
ENV DEBIAN_FRONTEND noninteractive

# set CRAWLAB_IS_DOCKER
ENV CRAWLAB_IS_DOCKER Y

# install packages
RUN apt-get update \
    && apt-get install -y curl git net-tools iputils-ping ntp ntpdate python3 python3-pip \
    && apt-get install -y curl git net-tools iputils-ping ntp ntpdate python3 python3-pip nginx \
    && ln -s /usr/bin/pip3 /usr/local/bin/pip \
    && ln -s /usr/bin/python3 /usr/local/bin/python

# install backend
RUN pip install scrapy pymongo bs4 requests -i https://pypi.tuna.tsinghua.edu.cn/simple
RUN pip install scrapy pymongo bs4 requests crawlab-sdk scrapy-splash

# add files
ADD . /app

# copy backend files
COPY --from=backend-build /go/src/app .
COPY --from=backend-build /go/bin/crawlab /usr/local/bin

# install nginx
RUN apt-get -y install nginx

# copy frontend files
COPY --from=frontend-build /app/dist /app/dist
COPY --from=frontend-build /app/conf/crawlab.conf /etc/nginx/conf.d
@@ -57,4 +57,4 @@ EXPOSE 8080
EXPOSE 8000

# start backend
CMD ["/bin/sh", "/app/docker_init.sh"]
CMD ["/bin/bash", "/app/docker_init.sh"]


@@ -4,44 +4,43 @@ WORKDIR /go/src/app
COPY ./backend .

ENV GO111MODULE on
ENV GOPROXY https://mirrors.aliyun.com/goproxy/
ENV GOPROXY https://goproxy.io

RUN go install -v ./...

FROM node:8.16.0 AS frontend-build
FROM node:8.16.0-alpine AS frontend-build

ADD ./frontend /app
WORKDIR /app

# install frontend
RUN npm install -g yarn && yarn install --registry=https://registry.npm.taobao.org
RUN npm config set unsafe-perm true
RUN npm install -g yarn && yarn install --registry=https://registry.npm.taobao.org # --sass_binary_site=https://npm.taobao.org/mirrors/node-sass/

RUN npm run build:prod

# images
FROM ubuntu:latest

ADD . /app

# set as non-interactive
ENV DEBIAN_FRONTEND noninteractive

# install packages
RUN apt-get update \
    && apt-get install -y curl git net-tools iputils-ping ntp ntpdate python3 python3-pip \
RUN chmod 777 /tmp \
    && apt-get update \
    && apt-get install -y curl git net-tools iputils-ping ntp ntpdate python3 python3-pip nginx \
    && ln -s /usr/bin/pip3 /usr/local/bin/pip \
    && ln -s /usr/bin/python3 /usr/local/bin/python

# install backend
RUN pip install scrapy pymongo bs4 requests -i https://pypi.tuna.tsinghua.edu.cn/simple
RUN pip install scrapy pymongo bs4 requests crawlab-sdk scrapy-splash -i https://pypi.tuna.tsinghua.edu.cn/simple

# add files
ADD . /app

# copy backend files
COPY --from=backend-build /go/src/app .
COPY --from=backend-build /go/bin/crawlab /usr/local/bin

# install nginx
RUN apt-get -y install nginx

# copy frontend files
COPY --from=frontend-build /app/dist /app/dist
COPY --from=frontend-build /app/conf/crawlab.conf /etc/nginx/conf.d
@@ -56,4 +55,4 @@ EXPOSE 8080
EXPOSE 8000

# start backend
CMD ["/bin/sh", "/app/docker_init.sh"]
CMD ["/bin/bash", "/app/docker_init.sh"]


153

README-zh.md

@@ -1,39 +1,68 @@
# Crawlab

<p>
<a href="https://hub.docker.com/r/tikazyq/crawlab/builds" target="_blank">
<img src="https://img.shields.io/docker/cloud/build/tikazyq/crawlab.svg?label=build&logo=docker">
</a>
<a href="https://hub.docker.com/r/tikazyq/crawlab" target="_blank">
<img src="https://img.shields.io/docker/pulls/tikazyq/crawlab?label=pulls&logo=docker">
</a>
<a href="https://github.com/crawlab-team/crawlab/releases" target="_blank">
<img src="https://img.shields.io/github/release/crawlab-team/crawlab.svg?logo=github">
</a>
<a href="https://github.com/crawlab-team/crawlab/commits/master" target="_blank">
<img src="https://img.shields.io/github/last-commit/crawlab-team/crawlab.svg">
</a>
<a href="https://github.com/crawlab-team/crawlab/issues?q=is%3Aissue+is%3Aopen+label%3Abug" target="_blank">
<img src="https://img.shields.io/github/issues/crawlab-team/crawlab/bug.svg?label=bugs&color=red">
</a>
<a href="https://github.com/crawlab-team/crawlab/issues?q=is%3Aissue+is%3Aopen+label%3Aenhancement" target="_blank">
<img src="https://img.shields.io/github/issues/crawlab-team/crawlab/enhancement.svg?label=enhancements&color=cyan">
</a>
<a href="https://github.com/crawlab-team/crawlab/blob/master/LICENSE" target="_blank">
<img src="https://img.shields.io/github/license/crawlab-team/crawlab.svg">
</a>
</p>

Chinese | [English](https://github.com/crawlab-team/crawlab)

[Installation](#安装) | [Run](#运行) | [Screenshots](#截图) | [Architecture](#架构) | [Integration](#与其他框架的集成) | [Comparison](#与其他框架比较) | [Related Articles](#相关文章) | [Community & Sponsorship](#社区--赞助)
[Installation](#安装) | [Run](#运行) | [Screenshots](#截图) | [Architecture](#架构) | [Integration](#与其他框架的集成) | [Comparison](#与其他框架比较) | [Related Articles](#相关文章) | [Community & Sponsorship](#社区--赞助) | [Changelog](https://github.com/crawlab-team/crawlab/blob/master/CHANGELOG-zh.md) | [Disclaimer](https://github.com/crawlab-team/crawlab/blob/master/DISCLAIMER-zh.md)

A Golang-based distributed crawler management platform that supports Python, NodeJS, Go, Java, PHP and other programming languages as well as a variety of crawler frameworks.

[View Demo](http://crawlab.cn/demo) | [Documentation](https://tikazyq.github.io/crawlab-docs)
[View Demo](http://crawlab.cn/demo) | [Documentation](http://docs.crawlab.cn)


## Installation

Three methods:
1. [Docker](https://tikazyq.github.io/crawlab-docs/Installation/Docker.html) (recommended)
2. [Direct Deploy](https://tikazyq.github.io/crawlab-docs/Installation/Direct.html) (to understand the internals)
3. [Kubernetes](https://mp.weixin.qq.com/s/3Q1BQATUIEE_WXcHPqhYbA)
1. [Docker](http://docs.crawlab.cn/Installation/Docker.html) (recommended)
2. [Direct Deploy](http://docs.crawlab.cn/Installation/Direct.html) (to understand the internals)
3. [Kubernetes](https://juejin.im/post/5e0a02d851882549884c27ad) (multi-node deployment)

### Requirements (Docker)
- Docker 18.03+
- Redis
- Redis 5.x+
- MongoDB 3.6+
- Docker Compose 1.24+ (optional but recommended)

### Requirements (Direct Deploy)
- Go 1.12+
- Node 8.12+
- Redis
- Redis 5.x+
- MongoDB 3.6+


## 快速开始

|

||||

|

||||

请打开命令行并执行下列命令。请保证您已经提前安装了 `docker-compose`。

|

||||

|

||||

```bash

|

||||

git clone https://github.com/crawlab-team/crawlab

|

||||

cd crawlab

|

||||

docker-compose up -d

|

||||

```

|

||||

|

||||

接下来,您可以看看 `docker-compose.yml` (包含详细配置参数),以及参考 [文档](http://docs.crawlab.cn) 来查看更多信息。

|

||||

|

||||

## 运行

|

||||

|

||||

### Docker

|

||||

@@ -47,13 +76,11 @@ services:

|

||||

image: tikazyq/crawlab:latest

|

||||

container_name: master

|

||||

environment:

|

||||

CRAWLAB_API_ADDRESS: "http://localhost:8000"

|

||||

CRAWLAB_SERVER_MASTER: "Y"

|

||||

CRAWLAB_MONGO_HOST: "mongo"

|

||||

CRAWLAB_REDIS_ADDRESS: "redis"

|

||||

ports:

|

||||

- "8080:8080" # frontend

|

||||

- "8000:8000" # backend

|

||||

- "8080:8080"

|

||||

depends_on:

|

||||

- mongo

|

||||

- redis

|

||||

@@ -111,9 +138,9 @@ Docker部署的详情,请见[相关文档](https://tikazyq.github.io/crawlab-d

|

||||

|

||||

|

||||

|

||||

#### 爬虫文件

|

||||

#### 爬虫文件编辑

|

||||

|

||||

|

||||

|

||||

|

||||

#### 任务详情 - 抓取结果

|

||||

|

||||

@@ -121,13 +148,21 @@ Docker部署的详情,请见[相关文档](https://tikazyq.github.io/crawlab-d

|

||||

|

||||

#### 定时任务

|

||||

|

||||

|

||||

|

||||

|

||||

#### 依赖安装

|

||||

|

||||

|

||||

|

||||

#### 消息通知

|

||||

|

||||

<img src="http://static-docs.crawlab.cn/notification-mobile.jpeg" height="480px">

|

||||

|

||||

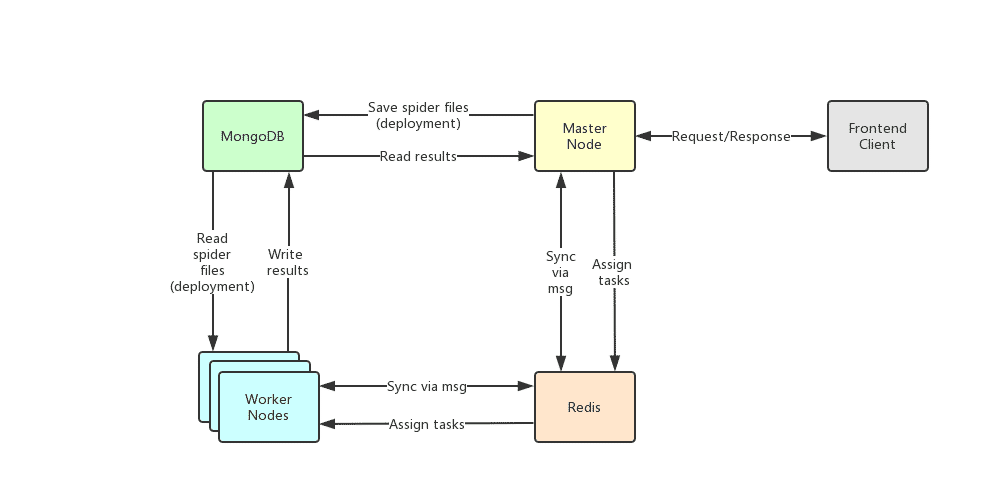

## 架构

|

||||

|

||||

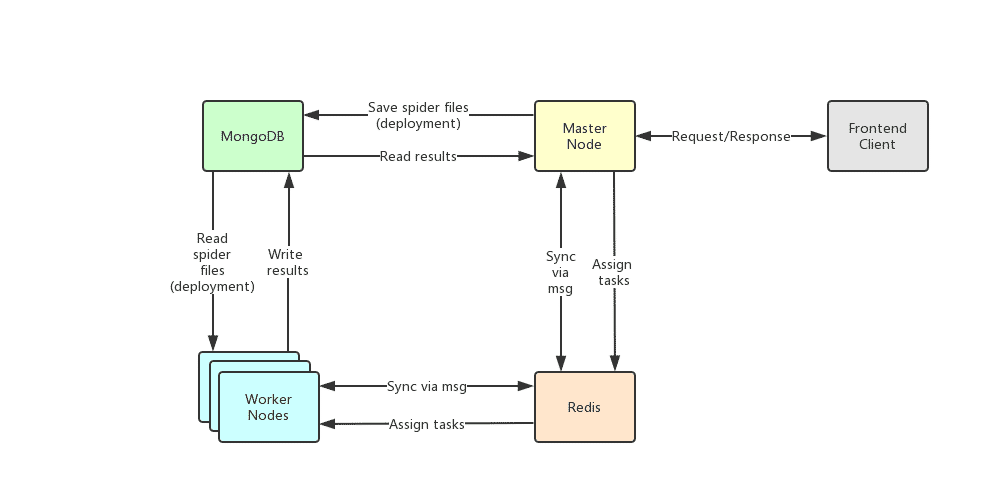

Crawlab的架构包括了一个主节点(Master Node)和多个工作节点(Worker Node),以及负责通信和数据储存的Redis和MongoDB数据库。

|

||||

|

||||

|

||||

|

||||

|

||||

前端应用向主节点请求数据,主节点通过MongoDB和Redis来执行任务派发调度以及部署,工作节点收到任务之后,开始执行爬虫任务,并将任务结果储存到MongoDB。架构相对于`v0.3.0`之前的Celery版本有所精简,去除了不必要的节点监控模块Flower,节点监控主要由Redis完成。

|

||||

|

||||

@@ -162,37 +197,43 @@ Redis是非常受欢迎的Key-Value数据库,在Crawlab中主要实现节点

|

||||

|

||||

## 与其他框架的集成

|

||||

|

||||

[Crawlab SDK](https://github.com/crawlab-team/crawlab-sdk) 提供了一些 `helper` 方法来让您的爬虫更好的集成到 Crawlab 中,例如保存结果数据到 Crawlab 中等等。

|

||||

|

||||

### 集成 Scrapy

|

||||

|

||||

在 `settings.py` 中找到 `ITEM_PIPELINES`(`dict` 类型的变量),在其中添加如下内容。

|

||||

|

||||

```python

|

||||

ITEM_PIPELINES = {

|

||||

'crawlab.pipelines.CrawlabMongoPipeline': 888,

|

||||

}

|

||||

```

|

||||

|

||||

然后,启动 Scrapy 爬虫,运行完成之后,您就应该能看到抓取结果出现在 **任务详情-结果** 里。

|

||||

|

||||

### 通用 Python 爬虫

|

||||

|

||||

将下列代码加入到您爬虫中的结果保存部分。

|

||||

|

||||

```python

|

||||

# 引入保存结果方法

|

||||

from crawlab import save_item

|

||||

|

||||

# 这是一个结果,需要为 dict 类型

|

||||

result = {'name': 'crawlab'}

|

||||

|

||||

# 调用保存结果方法

|

||||

save_item(result)

|

||||

```

|

||||

|

||||

然后,启动爬虫,运行完成之后,您就应该能看到抓取结果出现在 **任务详情-结果** 里。

|

||||

|

||||

### 其他框架和语言

|

||||

|

||||

爬虫任务本质上是由一个shell命令来实现的。任务ID将以环境变量`CRAWLAB_TASK_ID`的形式存在于爬虫任务运行的进程中,并以此来关联抓取数据。另外,`CRAWLAB_COLLECTION`是Crawlab传过来的所存放collection的名称。

|

||||

|

||||

在爬虫程序中,需要将`CRAWLAB_TASK_ID`的值以`task_id`作为可以存入数据库中`CRAWLAB_COLLECTION`的collection中。这样Crawlab就知道如何将爬虫任务与抓取数据关联起来了。当前,Crawlab只支持MongoDB。

|

||||

|

||||

### 集成Scrapy

|

||||

|

||||

以下是Crawlab跟Scrapy集成的例子,利用了Crawlab传过来的task_id和collection_name。

|

||||

|

||||

```python

|

||||

import os
from pymongo import MongoClient

MONGO_HOST = '192.168.99.100'
MONGO_PORT = 27017
MONGO_DB = 'crawlab_test'

# scrapy example in the pipeline
class JuejinPipeline(object):
    mongo = MongoClient(host=MONGO_HOST, port=MONGO_PORT)
    db = mongo[MONGO_DB]
    col_name = os.environ.get('CRAWLAB_COLLECTION')
    if not col_name:
        col_name = 'test'
    col = db[col_name]

    def process_item(self, item, spider):
        item['task_id'] = os.environ.get('CRAWLAB_TASK_ID')
        self.col.save(item)
        return item

|

||||

```

|

||||

|

||||

## 与其他框架比较

|

||||

|

||||

现在已经有一些爬虫管理框架了,因此为啥还要用Crawlab?

|

||||

@@ -201,13 +242,12 @@ class JuejinPipeline(object):

|

||||

|

||||

Crawlab使用起来很方便,也很通用,可以适用于几乎任何主流语言和框架。它还有一个精美的前端界面,让用户可以方便的管理和运行爬虫。

|

||||

|

||||

|框架 | 类型 | 分布式 | 前端 | 依赖于Scrapyd |

|

||||

|:---:|:---:|:---:|:---:|:---:|

|

||||

| [Crawlab](https://github.com/crawlab-team/crawlab) | 管理平台 | Y | Y | N

|

||||

| [ScrapydWeb](https://github.com/my8100/scrapydweb) | 管理平台 | Y | Y | Y

|

||||

| [SpiderKeeper](https://github.com/DormyMo/SpiderKeeper) | 管理平台 | Y | Y | Y

|

||||

| [Gerapy](https://github.com/Gerapy/Gerapy) | 管理平台 | Y | Y | Y

|

||||

| [Scrapyd](https://github.com/scrapy/scrapyd) | 网络服务 | Y | N | N/A

|

||||

|框架 | 技术 | 优点 | 缺点 | Github 统计数据 |

|

||||

|:---|:---|:---|-----| :---- |

|

||||

| [Crawlab](https://github.com/crawlab-team/crawlab) | Golang + Vue|不局限于 scrapy,可以运行任何语言和框架的爬虫,精美的 UI 界面,天然支持分布式爬虫,支持节点管理、爬虫管理、任务管理、定时任务、结果导出、数据统计、消息通知、可配置爬虫、在线编辑代码等功能|暂时不支持爬虫版本管理|   |

|

||||

| [ScrapydWeb](https://github.com/my8100/scrapydweb) | Python Flask + Vue|精美的 UI 界面,内置了 scrapy 日志解析器,有较多任务运行统计图表,支持节点管理、定时任务、邮件提醒、移动界面,算是 scrapy-based 中功能完善的爬虫管理平台|不支持 scrapy 以外的爬虫,Python Flask 为后端,性能上有一定局限性|   |

|

||||

| [Gerapy](https://github.com/Gerapy/Gerapy) | Python Django + Vue|Gerapy 是崔庆才大神开发的爬虫管理平台,安装部署非常简单,同样基于 scrapyd,有精美的 UI 界面,支持节点管理、代码编辑、可配置规则等功能|同样不支持 scrapy 以外的爬虫,而且据使用者反馈,1.0 版本有很多 bug,期待 2.0 版本会有一定程度的改进|   |

|

||||

| [SpiderKeeper](https://github.com/DormyMo/SpiderKeeper) | Python Flask|基于 scrapyd,开源版 Scrapyhub,非常简洁的 UI 界面,支持定时任务|可能有些过于简洁了,不支持分页,不支持节点管理,不支持 scrapy 以外的爬虫|   |

|

||||

|

||||

## Q&A

|

||||

|

||||

@@ -254,6 +294,9 @@ Crawlab使用起来很方便,也很通用,可以适用于几乎任何主流

|

||||

<a href="https://github.com/hantmac">

|

||||

<img src="https://avatars2.githubusercontent.com/u/7600925?s=460&v=4" height="80">

|

||||

</a>

|

||||

<a href="https://github.com/duanbin0414">

|

||||

<img src="https://avatars3.githubusercontent.com/u/50389867?s=460&v=4" height="80">

|

||||

</a>

|

||||

|

||||

## 社区 & 赞助

|

||||

|

||||

|

||||

145

README.md


@@ -1,39 +1,68 @@

|

||||

# Crawlab

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

<p>

|

||||

<a href="https://hub.docker.com/r/tikazyq/crawlab/builds" target="_blank">

|

||||

<img src="https://img.shields.io/docker/cloud/build/tikazyq/crawlab.svg?label=build&logo=docker">

|

||||

</a>

|

||||

<a href="https://hub.docker.com/r/tikazyq/crawlab" target="_blank">

|

||||

<img src="https://img.shields.io/docker/pulls/tikazyq/crawlab?label=pulls&logo=docker">

|

||||

</a>

|

||||

<a href="https://github.com/crawlab-team/crawlab/releases" target="_blank">

|

||||

<img src="https://img.shields.io/github/release/crawlab-team/crawlab.svg?logo=github">

|

||||

</a>

|

||||

<a href="https://github.com/crawlab-team/crawlab/commits/master" target="_blank">

|

||||

<img src="https://img.shields.io/github/last-commit/crawlab-team/crawlab.svg">

|

||||

</a>

|

||||

<a href="https://github.com/crawlab-team/crawlab/issues?q=is%3Aissue+is%3Aopen+label%3Abug" target="_blank">

|

||||

<img src="https://img.shields.io/github/issues/crawlab-team/crawlab/bug.svg?label=bugs&color=red">

|

||||

</a>

|

||||

<a href="https://github.com/crawlab-team/crawlab/issues?q=is%3Aissue+is%3Aopen+label%3Aenhancement" target="_blank">

|

||||

<img src="https://img.shields.io/github/issues/crawlab-team/crawlab/enhancement.svg?label=enhancements&color=cyan">

|

||||

</a>

|

||||

<a href="https://github.com/crawlab-team/crawlab/blob/master/LICENSE" target="_blank">

|

||||

<img src="https://img.shields.io/github/license/crawlab-team/crawlab.svg">

|

||||

</a>

|

||||

</p>

|

||||

|

||||

[中文](https://github.com/crawlab-team/crawlab/blob/master/README-zh.md) | English

|

||||

|

||||

[Installation](#installation) | [Run](#run) | [Screenshot](#screenshot) | [Architecture](#architecture) | [Integration](#integration-with-other-frameworks) | [Compare](#comparison-with-other-frameworks) | [Community & Sponsorship](#community--sponsorship)

|

||||

[Installation](#installation) | [Run](#run) | [Screenshot](#screenshot) | [Architecture](#architecture) | [Integration](#integration-with-other-frameworks) | [Compare](#comparison-with-other-frameworks) | [Community & Sponsorship](#community--sponsorship) | [CHANGELOG](https://github.com/crawlab-team/crawlab/blob/master/CHANGELOG.md) | [Disclaimer](https://github.com/crawlab-team/crawlab/blob/master/DISCLAIMER.md)

|

||||

|

||||

Golang-based distributed web crawler management platform, supporting various languages including Python, NodeJS, Go, Java, PHP and various web crawler frameworks including Scrapy, Puppeteer, Selenium.

|

||||

|

||||

[Demo](http://crawlab.cn/demo) | [Documentation](https://tikazyq.github.io/crawlab-docs)

|

||||

[Demo](http://crawlab.cn/demo) | [Documentation](http://docs.crawlab.cn)

|

||||

|

||||

## Installation

|

||||

|

||||

Three methods:

|

||||

1. [Docker](https://tikazyq.github.io/crawlab-docs/Installation/Docker.html) (Recommended)

|

||||

2. [Direct Deploy](https://tikazyq.github.io/crawlab-docs/Installation/Direct.html) (to understand the internals)

|

||||

3. [Kubernetes](https://mp.weixin.qq.com/s/3Q1BQATUIEE_WXcHPqhYbA)

|

||||

1. [Docker](http://docs.crawlab.cn/Installation/Docker.html) (Recommended)

|

||||

2. [Direct Deploy](http://docs.crawlab.cn/Installation/Direct.html) (to understand the internals)

|

||||

3. [Kubernetes](https://juejin.im/post/5e0a02d851882549884c27ad) (Multi-Node Deployment)

|

||||

|

||||

### Prerequisites (Docker)

|

||||

- Docker 18.03+

|

||||

- Redis

|

||||

- Redis 5.x+

|

||||

- MongoDB 3.6+

|

||||

- Docker Compose 1.24+ (optional but recommended)

|

||||

|

||||

### Prerequisites (Direct Deploy)

|

||||

- Go 1.12+

|

||||

- Node 8.12+

|

||||

- Redis

|

||||

- Redis 5.x+

|

||||

- MongoDB 3.6+

|

||||

|

||||

## Quick Start

|

||||

|

||||

Open a command line prompt and execute the commands below. Make sure you have installed `docker-compose` in advance.

|

||||

|

||||

```bash

|

||||

git clone https://github.com/crawlab-team/crawlab

|

||||

cd crawlab

|

||||

docker-compose up -d

|

||||

```

|

||||

|

||||

Next, you can look into the `docker-compose.yml` (with detailed config params) and the [Documentation (Chinese)](http://docs.crawlab.cn) for further information.

|

||||

|

||||

## Run

|

||||

|

||||

### Docker

|

||||

@@ -48,13 +77,11 @@ services:

|

||||

image: tikazyq/crawlab:latest

|

||||

container_name: master

|

||||

environment:

|

||||

CRAWLAB_API_ADDRESS: "http://localhost:8000"

|

||||

CRAWLAB_SERVER_MASTER: "Y"

|

||||

CRAWLAB_MONGO_HOST: "mongo"

|

||||

CRAWLAB_REDIS_ADDRESS: "redis"

|

||||

ports:

|

||||

- "8080:8080" # frontend

|

||||

- "8000:8000" # backend

|

||||

- "8080:8080"

|

||||

depends_on:

|

||||

- mongo

|

||||

- redis

|

||||

@@ -109,9 +136,9 @@ For Docker Deployment details, please refer to [relevant documentation](https://

|

||||

|

||||

|

||||

|

||||

#### Spider Files

|

||||

#### Spider File Edit

|

||||

|

||||

|

||||

|

||||

|

||||

#### Task Results

|

||||

|

||||

@@ -119,13 +146,21 @@ For Docker Deployment details, please refer to [relevant documentation](https://

|

||||

|

||||

#### Cron Job

|

||||

|

||||

|

||||

|

||||

|

||||

#### Dependency Installation

|

||||

|

||||

|

||||

|

||||

#### Notifications

|

||||

|

||||

<img src="http://static-docs.crawlab.cn/notification-mobile.jpeg" height="480px">

|

||||

|

||||

## Architecture

|

||||

|

||||

The architecture of Crawlab consists of a Master Node, multiple Worker Nodes, and Redis and MongoDB databases, which are mainly used for node communication and data storage.

|

||||

|

||||

|

||||

|

||||

|

||||

The frontend app makes requests to the Master Node, which assigns tasks and deploys spiders through MongoDB and Redis. When a Worker Node receives a task, it executes the crawling task and stores the results in MongoDB. The architecture is considerably simpler than in versions before `v0.3.0`: the Flower module, which previously provided node monitoring, has been removed, and node monitoring is now handled by Redis.

|

||||

|

||||

@@ -161,35 +196,43 @@ Frontend is a SPA based on

|

||||

|

||||

## Integration with Other Frameworks

|

||||

|

||||

A crawling task is actually executed through a shell command. The Task ID is passed to the task process as an environment variable named `CRAWLAB_TASK_ID`, so that the scraped data can be related to the task. Crawlab also passes a second environment variable, `CRAWLAB_COLLECTION`, containing the name of the collection in which to store the results.

|

||||

[Crawlab SDK](https://github.com/crawlab-team/crawlab-sdk) provides some `helper` methods to make it easier for you to integrate your spiders into Crawlab, e.g. saving results.

|

||||

|

||||

⚠️Note: make sure you have already installed `crawlab-sdk` using pip.

|

||||

|

||||

### Scrapy

|

||||

|

||||

Below is an example to integrate Crawlab with Scrapy in pipelines.

|

||||

In `settings.py` in your Scrapy project, find the variable named `ITEM_PIPELINES` (a `dict` variable) and add the content below.

|

||||

|

||||

```python

|

||||

import os
from pymongo import MongoClient

MONGO_HOST = '192.168.99.100'
MONGO_PORT = 27017
MONGO_DB = 'crawlab_test'

# scrapy example in the pipeline
class JuejinPipeline(object):
    mongo = MongoClient(host=MONGO_HOST, port=MONGO_PORT)
    db = mongo[MONGO_DB]
    col_name = os.environ.get('CRAWLAB_COLLECTION')
    if not col_name:
        col_name = 'test'
    col = db[col_name]

    def process_item(self, item, spider):
        item['task_id'] = os.environ.get('CRAWLAB_TASK_ID')
        self.col.save(item)
        return item

|

||||

ITEM_PIPELINES = {

|

||||

'crawlab.pipelines.CrawlabMongoPipeline': 888,

|

||||

}

|

||||

```

|

||||

|

||||

Then, start the Scrapy spider. Once it finishes, you should see the scraped results in **Task Detail -> Result**.

|

||||

|

||||

### General Python Spider

|

||||

|

||||

Add the content below to your spider files to save results.

|

||||

|

||||

```python

|

||||

# import result saving method

|

||||

from crawlab import save_item

|

||||

|

||||

# this is a result record, must be dict type

|

||||

result = {'name': 'crawlab'}

|

||||

|

||||

# call result saving method

|

||||

save_item(result)

|

||||

```

|

||||

|

||||

Then, start the spider. Once it finishes, you should see the scraped results in **Task Detail -> Result**.

|

||||

|

||||

### Other Frameworks / Languages

|

||||

|

||||

A crawling task is actually executed through a shell command. The Task ID is passed to the task process as an environment variable named `CRAWLAB_TASK_ID`, so that the scraped data can be related to the task. Crawlab also passes a second environment variable, `CRAWLAB_COLLECTION`, containing the name of the collection in which to store the results.
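As a rough illustration of the two environment variables described above, the sketch below tags a result record with the task ID and resolves the target collection name. The helper names `result_collection_name` and `tag_with_task`, and the fallback collection name `test`, are assumptions made for illustration; they are not part of Crawlab or its SDK. Writing the tagged record to MongoDB is left to whichever driver the spider already uses.

```python
import os


def result_collection_name(env=os.environ, default="test"):
    """Return the collection name passed in by Crawlab, or an assumed fallback."""
    return env.get("CRAWLAB_COLLECTION") or default


def tag_with_task(item, env=os.environ):
    """Return a copy of the result record with the task ID stored under 'task_id'."""
    tagged = dict(item)
    tagged["task_id"] = env.get("CRAWLAB_TASK_ID")
    return tagged
```

A spider in any language can follow the same pattern: read both variables at startup, add `task_id` to every record, and write the records to the named collection.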

|

||||

|

||||

## Comparison with Other Frameworks

|

||||

|

||||

There are existing spider management frameworks. So why use Crawlab?

|

||||

@@ -198,13 +241,12 @@ The reason is that most of the existing platforms are depending on Scrapyd, whic

|

||||

|

||||

Crawlab is easy to use and general enough to run spiders written in any language and any framework. It also has a polished frontend interface that makes managing spiders much easier.

|

||||

|

||||

|Framework | Type | Distributed | Frontend | Scrapyd-Dependent |

|

||||

|:---:|:---:|:---:|:---:|:---:|

|

||||

| [Crawlab](https://github.com/crawlab-team/crawlab) | Admin Platform | Y | Y | N

|

||||

| [ScrapydWeb](https://github.com/my8100/scrapydweb) | Admin Platform | Y | Y | Y

|

||||

| [SpiderKeeper](https://github.com/DormyMo/SpiderKeeper) | Admin Platform | Y | Y | Y

|

||||

| [Gerapy](https://github.com/Gerapy/Gerapy) | Admin Platform | Y | Y | Y

|

||||

| [Scrapyd](https://github.com/scrapy/scrapyd) | Web Service | Y | N | N/A

|

||||

|Framework | Technology | Pros | Cons | Github Stats |

|

||||

|:---|:---|:---|-----| :---- |

|

||||

| [Crawlab](https://github.com/crawlab-team/crawlab) | Golang + Vue|Not limited to Scrapy; runs spiders written in any programming language or framework. Beautiful UI. Native support for distributed spiders. Supports spider management, task management, cron jobs, result export, analytics, notifications, configurable spiders, online code editing, etc.|Does not yet support spider versioning|   |

|

||||

| [ScrapydWeb](https://github.com/my8100/scrapydweb) | Python Flask + Vue|Beautiful UI, built-in Scrapy log parser, statistics and charts for task execution; supports node management, cron jobs, mail notifications, and mobile. A full-featured spider management platform.|Does not support spiders other than Scrapy. Performance is limited by the Python Flask backend.|   |

|

||||

| [Gerapy](https://github.com/Gerapy/Gerapy) | Python Django + Vue|Gerapy is built by web crawler guru [Germey Cui](https://github.com/Germey). Simple installation and deployment. Beautiful UI. Supports node management, code editing, configurable crawl rules, etc.|Likewise does not support spiders other than Scrapy. According to user feedback, v1.0 has many bugs; improvements are expected in v2.0.|   |

|

||||

| [SpiderKeeper](https://github.com/DormyMo/SpiderKeeper) | Python Flask|Open-source Scrapyhub. Concise and simple UI. Supports cron jobs.|Perhaps too simplified: no pagination, no node management, and no support for spiders other than Scrapy.|   |

|

||||

|

||||

## Contributors

|

||||

<a href="https://github.com/tikazyq">

|

||||

@@ -219,6 +261,9 @@ Crawlab is easy to use, general enough to adapt spiders in any language and any

|

||||

<a href="https://github.com/hantmac">

|

||||

<img src="https://avatars2.githubusercontent.com/u/7600925?s=460&v=4" height="80">

|

||||

</a>

|

||||

<a href="https://github.com/duanbin0414">

|

||||

<img src="https://avatars3.githubusercontent.com/u/50389867?s=460&v=4" height="80">

|

||||

</a>

|

||||

|

||||

## Community & Sponsorship

|

||||

|

||||

|

||||

@@ -15,20 +15,35 @@ redis:

|

||||

log:

|

||||

level: info

|

||||

path: "/var/logs/crawlab"

|

||||

isDeletePeriodically: "Y"

|

||||

isDeletePeriodically: "N"

|

||||

deleteFrequency: "@hourly"

|

||||

server:

|

||||

host: 0.0.0.0

|

||||

port: 8000

|

||||

master: "N"

|

||||

master: "Y"

|

||||

secret: "crawlab"

|

||||

register:

|

||||

# MAC address or IP address; if "ip" is used, the IP must be specified manually

|

||||

type: "mac"

|

||||

ip: ""

|

||||

lang: # language environments to install: "Y" installs, "N" skips; only effective with Docker

|

||||

python: "Y"

|

||||

node: "N"

|

||||

spider:

|

||||

path: "/app/spiders"

|

||||

task:

|

||||

workers: 4

|

||||

other:

|

||||

tmppath: "/tmp"

|

||||

version: 0.4.5

|

||||

setting:

|

||||

allowRegister: "N"

|

||||

notification:

|

||||

mail:

|

||||

server: ''

|

||||

port: ''

|

||||

senderEmail: ''

|

||||

senderIdentity: ''

|

||||

smtp:

|

||||

user: ''

|

||||

password: ''

|

||||

@@ -28,7 +28,7 @@ func (c *Config) Init() error {

|

||||

}

|

||||

viper.SetConfigType("yaml") // set the config file format to YAML

|

||||

viper.AutomaticEnv() // read matching environment variables

|

||||

viper.SetEnvPrefix("CRAWLAB") // environment variable prefix is APISERVER

|

||||

viper.SetEnvPrefix("CRAWLAB") // environment variable prefix is CRAWLAB

|

||||

replacer := strings.NewReplacer(".", "_")

|

||||

viper.SetEnvKeyReplacer(replacer)

|

||||

if err := viper.ReadInConfig(); err != nil { // viper parses the config file

|

||||

|

||||
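Taken together, the viper calls in the hunk above (`AutomaticEnv`, `SetEnvPrefix("CRAWLAB")`, and the key replacer that turns `.` into `_`) mean that a dotted config key such as `server.master` can be overridden by the environment variable `CRAWLAB_SERVER_MASTER`, which is the form used in `docker-compose.yml`. The name mapping can be sketched in Python as follows; `env_var_for` is a hypothetical helper written only to illustrate the mapping, not a viper or Crawlab API.

```python
def env_var_for(key, prefix="CRAWLAB"):
    """Map a dotted config key to the environment variable name that overrides it."""
    # Prefix and key are joined with "_", dots become underscores,
    # and the whole name is upper-cased.
    return (prefix + "_" + key.replace(".", "_")).upper()
```

For example, `env_var_for("server.master")` yields `CRAWLAB_SERVER_MASTER`.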

8

backend/constants/anchor.go

Normal file


@@ -0,0 +1,8 @@

|

||||

package constants

|

||||

|

||||

const (

|

||||

AnchorStartStage = "START_STAGE"

|

||||

AnchorStartUrl = "START_URL"

|

||||

AnchorItems = "ITEMS"

|

||||

AnchorParsers = "PARSERS"

|

||||

)

|

||||

6

backend/constants/common.go

Normal file


@@ -0,0 +1,6 @@

|

||||

package constants

|

||||

|

||||

const (

|

||||

ASCENDING = "ascending"

|

||||

DESCENDING = "descending"

|

||||

)

|

||||

6

backend/constants/config_spider.go

Normal file


@@ -0,0 +1,6 @@

|

||||

package constants

|

||||

|

||||

const (

|

||||

EngineScrapy = "scrapy"

|

||||

EngineColly = "colly"

|

||||

)

|

||||

13

backend/constants/notification.go

Normal file


@@ -0,0 +1,13 @@

|

||||

package constants

|

||||

|

||||

const (

|

||||

NotificationTriggerOnTaskEnd = "notification_trigger_on_task_end"

|

||||

NotificationTriggerOnTaskError = "notification_trigger_on_task_error"

|

||||

NotificationTriggerNever = "notification_trigger_never"

|

||||

)

|

||||

|

||||

const (

|

||||

NotificationTypeMail = "notification_type_mail"

|

||||

NotificationTypeDingTalk = "notification_type_ding_talk"

|

||||

NotificationTypeWechat = "notification_type_wechat"

|

||||

)

|

||||

9

backend/constants/rpc.go

Normal file


@@ -0,0 +1,9 @@

|

||||

package constants

|

||||

|

||||

const (

|

||||

RpcInstallLang = "install_lang"

|

||||

RpcInstallDep = "install_dep"

|

||||

RpcUninstallDep = "uninstall_dep"

|

||||

RpcGetDepList = "get_dep_list"

|

||||

RpcGetInstalledDepList = "get_installed_dep_list"

|

||||

)

|

||||

10

backend/constants/schedule.go

Normal file


@@ -0,0 +1,10 @@

|

||||

package constants

|

||||

|

||||

const (

|

||||

ScheduleStatusStop = "stopped"

|

||||

ScheduleStatusRunning = "running"

|

||||

ScheduleStatusError = "error"

|

||||

|

||||

ScheduleStatusErrorNotFoundNode = "Not Found Node"

|

||||

ScheduleStatusErrorNotFoundSpider = "Not Found Spider"

|

||||

)

|

||||

5

backend/constants/scrapy.go

Normal file


@@ -0,0 +1,5 @@

|

||||

package constants

|

||||

|

||||

const ScrapyProtectedStageNames = ""

|

||||

|

||||

const ScrapyProtectedFieldNames = "_id,task_id,ts"

|

||||

@@ -3,4 +3,5 @@ package constants

|

||||

const (

|

||||

Customized = "customized"

|

||||

Configurable = "configurable"

|

||||

Plugin = "plugin"

|

||||

)

|

||||

|

||||

@@ -5,3 +5,9 @@ const (

|

||||

Linux = "linux"

|

||||

Darwin = "darwin"

|

||||

)

|

||||

|

||||

const (

|

||||

Python = "python"

|

||||

Nodejs = "node"

|

||||

Java = "java"

|

||||

)

|

||||

|

||||

@@ -19,3 +19,9 @@ const (

|

||||

TaskFinish string = "finish"

|

||||

TaskCancel string = "cancel"

|

||||

)

|

||||

|

||||

const (

|

||||

RunTypeAllNodes string = "all-nodes"

|

||||

RunTypeRandom string = "random"

|

||||

RunTypeSelectedNodes string = "selected-nodes"

|

||||

)

|

||||

|

||||

@@ -61,11 +61,46 @@ func InitMongo() error {

|

||||

dialInfo.Password = mongoPassword

|

||||

dialInfo.Source = mongoAuth

|

||||

}

|

||||

sess, err := mgo.DialWithInfo(&dialInfo)

|

||||

if err != nil {

|

||||

return err

|

||||

|

||||

// mongo session

|

||||

var sess *mgo.Session

|

||||

|

||||

// error count

|

||||

errNum := 0

|

||||

|

||||

// keep retrying the MongoDB connection

|

||||

for {

|

||||

var err error

|

||||

|

||||

// connect to MongoDB

|

||||

sess, err = mgo.DialWithInfo(&dialInfo)

|

||||

|

||||

if err != nil {

|

||||

// on a connection error, sleep for 1 second and increment the error count

|

||||

time.Sleep(1 * time.Second)

|

||||

errNum++

|

||||

|

||||

// once the error count reaches 30, return the error

|

||||

if errNum >= 30 {

|

||||

return err

|

||||

}

|

||||

} else {

|

||||

// no error: exit the loop

|

||||

break

|

||||

}

|

||||

}

|

||||

|

||||

// assign to the global mongo session

|

||||

Session = sess

|

||||

}

|

||||

// Add a unique index on 'key'

|

||||

keyIndex := mgo.Index{

|

||||

Key: []string{"key"},

|

||||

Unique: true,

|

||||

}

|

||||

s, c := GetCol("nodes")

|

||||

defer s.Close()

|

||||

c.EnsureIndex(keyIndex)

|

||||

|

||||

return nil

|

||||

}
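The retry loop added to `InitMongo` above keeps dialing MongoDB, pausing one second after each failure, and gives up once 30 failures have accumulated. The same control flow can be sketched in Python as shown below. The names `connect_with_retry` and `dial` are hypothetical and exist only for this illustration; the defaults of 30 failures and a 1-second pause mirror the Go code.

```python
import time


def connect_with_retry(dial, max_errors=30, delay=1.0, sleep=time.sleep):
    """Call dial() until it succeeds; re-raise the last error after max_errors failures."""
    errors = 0
    while True:
        try:
            # Success: hand the session back to the caller and stop retrying.
            return dial()
        except Exception:
            # Failure: pause first, then count the error, as the Go loop does.
            sleep(delay)
            errors += 1
            if errors >= max_errors:
                raise
```

Passing `sleep` as a parameter is a design choice of this sketch: it lets a test substitute a no-op so that retries do not actually wait.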

|

||||

|

||||

@@ -58,9 +58,9 @@ func (r *Redis) subscribe(ctx context.Context, consume ConsumeFunc, channel ...s

|

||||

}

|

||||

done <- nil

|

||||

case <-tick.C:

|

||||

//fmt.Printf("ping message \n")

|

||||

if err := psc.Ping(""); err != nil {

|

||||

done <- err

|

||||

fmt.Printf("ping message error: %s \n", err)

|

||||

//done <- err

|

||||

}

|

||||

case err := <-done:

|

||||

close(done)

|

||||

|

||||

@@ -4,10 +4,12 @@ import (

|

||||

"context"

|

||||

"crawlab/entity"

|

||||

"crawlab/utils"

|

||||

"errors"

|

||||

"github.com/apex/log"

|

||||

"github.com/gomodule/redigo/redis"

|

||||

"github.com/spf13/viper"

|

||||

"runtime/debug"

|

||||

"strings"

|

||||

"time"

|

||||

)

|

||||

|

||||

@@ -17,14 +19,36 @@ type Redis struct {

|

||||

pool *redis.Pool

|

||||

}

|

||||

|

||||

type Mutex struct {

|

||||

Name string

|

||||

expiry time.Duration

|

||||

tries int

|

||||

delay time.Duration

|

||||

value string

|

||||

}

|

||||

|

||||

func NewRedisClient() *Redis {

|

||||

return &Redis{pool: NewRedisPool()}

|

||||

}

|

||||

|

||||

func (r *Redis) RPush(collection string, value interface{}) error {

|

||||

c := r.pool.Get()

|

||||

defer utils.Close(c)

|

||||

|

||||

if _, err := c.Do("RPUSH", collection, value); err != nil {

|

||||

log.Error(err.Error())

|

||||

debug.PrintStack()

|

||||

return err

|

||||

}

|

||||

return nil

|

||||

}

|

||||

|

||||

func (r *Redis) LPush(collection string, value interface{}) error {

|

||||

c := r.pool.Get()

|

||||

defer utils.Close(c)

|

||||

|

||||

if _, err := c.Do("RPUSH", collection, value); err != nil {

|

||||

log.Error(err.Error())

|

||||

debug.PrintStack()

|

||||

return err

|

||||

}

|

||||

@@ -47,6 +71,7 @@ func (r *Redis) HSet(collection string, key string, value string) error {

|

||||

defer utils.Close(c)

|

||||

|

||||

if _, err := c.Do("HSET", collection, key, value); err != nil {

|

||||

log.Error(err.Error())

|

||||

debug.PrintStack()

|

||||

return err

|

||||

}

|

||||

@@ -58,7 +83,9 @@ func (r *Redis) HGet(collection string, key string) (string, error) {

|

||||

defer utils.Close(c)

|

||||

|

||||

value, err2 := redis.String(c.Do("HGET", collection, key))

|

||||

if err2 != nil {

|

||||

if err2 != nil && err2 != redis.ErrNil {

|

||||

log.Error(err2.Error())

|

||||

debug.PrintStack()

|

||||

return value, err2

|

||||

}

|