## Architecture

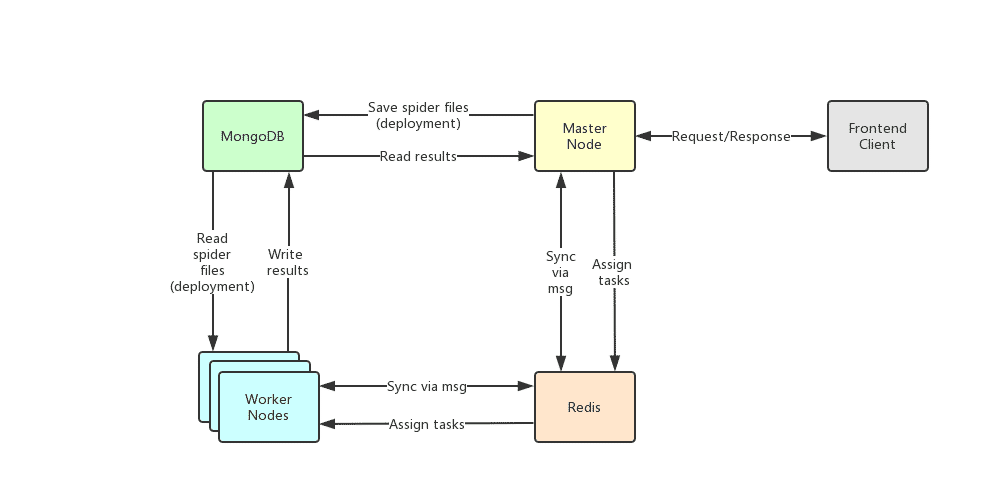

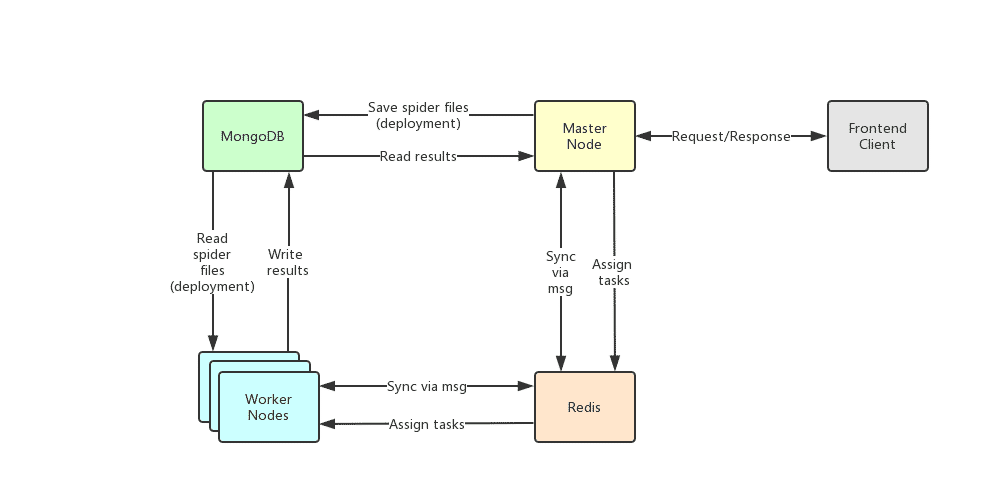

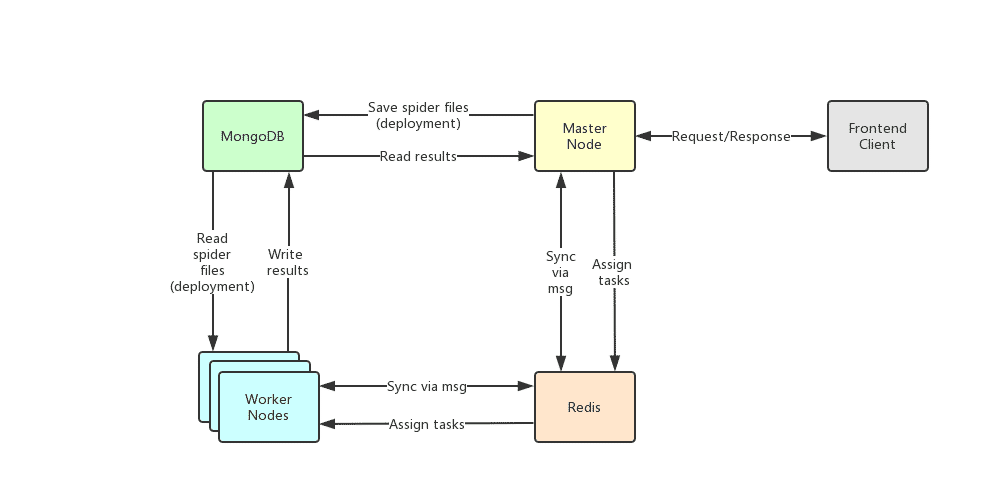

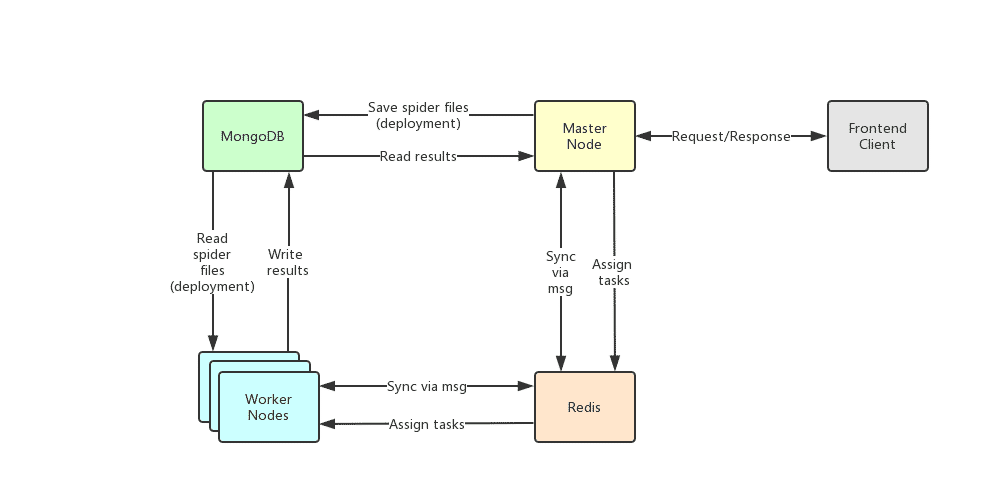

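Crawlab's architecture consists of one Master Node and multiple Worker Nodes, plus Redis and MongoDB databases that handle communication and data storage.

-

+

The frontend app requests data from the Master Node, which dispatches, schedules, and deploys tasks through MongoDB and Redis. Once a Worker Node receives a task, it runs the spider and stores the results in MongoDB. Compared with the Celery-based versions before `v0.3.0`, the architecture is leaner: the unnecessary node-monitoring module Flower has been removed, and node monitoring is now handled mainly by Redis.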

@@ -162,37 +197,43 @@ Redis是非常受欢迎的Key-Value数据库,在Crawlab中主要实现节点

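## Integration with Other Frameworks

+[Crawlab SDK](https://github.com/crawlab-team/crawlab-sdk) provides some `helper` methods that make it easier to integrate your spiders into Crawlab, for example saving result data to Crawlab.

+

+### Integrating Scrapy

+

+In `settings.py`, find `ITEM_PIPELINES` (a variable of type `dict`) and add the following entry to it.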

+

+```python

+ITEM_PIPELINES = {

+ 'crawlab.pipelines.CrawlabMongoPipeline': 888,

+}

+```

+

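+Then start the Scrapy spider. Once it finishes, you should see the scraped results under **Task Detail -> Result**.

+

+### General Python Spider

+

+Add the following code to the part of your spider that saves results.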

+

+```python

+# import the result-saving method

+from crawlab import save_item

+

+# this is a result record; it must be of type dict

+result = {'name': 'crawlab'}

+

+# call the result-saving method

+save_item(result)

+```

+

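+Then start the spider. Once it finishes, you should see the scraped results under **Task Detail -> Result**.

+

+### Other Frameworks and Languages

+

A crawling task is essentially executed as a shell command. The task ID is available in the task's process as the environment variable `CRAWLAB_TASK_ID`, which is used to associate scraped data with the task. In addition, `CRAWLAB_COLLECTION` is the name of the collection, passed in by Crawlab, where results are stored.

In your spider, store the value of `CRAWLAB_TASK_ID` under the key `task_id` in the collection named by `CRAWLAB_COLLECTION`. This lets Crawlab associate the crawling task with the scraped data. Currently, Crawlab only supports MongoDB.

-### Integrating Scrapy

-

-Below is an example of integrating Crawlab with Scrapy, using the task_id and collection_name passed in by Crawlab.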

-

-```python

-import os

-from pymongo import MongoClient

-

-MONGO_HOST = '192.168.99.100'

-MONGO_PORT = 27017

-MONGO_DB = 'crawlab_test'

-

-# scrapy example in the pipeline

-class JuejinPipeline(object):

- mongo = MongoClient(host=MONGO_HOST, port=MONGO_PORT)

- db = mongo[MONGO_DB]

- col_name = os.environ.get('CRAWLAB_COLLECTION')

- if not col_name:

- col_name = 'test'

- col = db[col_name]

-

- def process_item(self, item, spider):

- item['task_id'] = os.environ.get('CRAWLAB_TASK_ID')

- self.col.save(item)

- return item

-```

-

## Comparison with Other Frameworks

There are already several spider management frameworks, so why use Crawlab?

@@ -201,13 +242,12 @@ class JuejinPipeline(object):

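Crawlab is easy to use and general enough to work with almost any mainstream language and framework. It also has a polished frontend that lets users manage and run spiders with ease.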

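-|Framework | Type | Distributed | Frontend | Scrapyd-Dependent |

-|:---:|:---:|:---:|:---:|:---:|

-| [Crawlab](https://github.com/crawlab-team/crawlab) | Admin Platform | Y | Y | N

-| [ScrapydWeb](https://github.com/my8100/scrapydweb) | Admin Platform | Y | Y | Y

-| [SpiderKeeper](https://github.com/DormyMo/SpiderKeeper) | Admin Platform | Y | Y | Y

-| [Gerapy](https://github.com/Gerapy/Gerapy) | Admin Platform | Y | Y | Y

-| [Scrapyd](https://github.com/scrapy/scrapyd) | Web Service | Y | N | N/A

+|Framework | Technology | Pros | Cons | GitHub Stats |

+|:---|:---|:---|-----| :---- |

+| [Crawlab](https://github.com/crawlab-team/crawlab) | Golang + Vue|Not limited to Scrapy; runs spiders written in any language or framework. Polished UI. Native support for distributed spiders. Supports node management, spider management, task management, cron jobs, result export, analytics, notifications, configurable spiders, online code editing, and more.|Does not yet support spider versioning|   |

+| [ScrapydWeb](https://github.com/my8100/scrapydweb) | Python Flask + Vue|Polished UI, built-in Scrapy log parser, many stats and charts for task runs. Supports node management, cron jobs, email alerts, and a mobile UI. A full-featured Scrapy-based spider management platform.|Does not support spiders other than Scrapy. The Python Flask backend limits performance to some extent.|   |

+| [Gerapy](https://github.com/Gerapy/Gerapy) | Python Django + Vue|Gerapy is a spider management platform built by web crawler guru Cui Qingcai (崔庆才). Very simple to install and deploy. Also based on Scrapyd. Polished UI. Supports node management, code editing, configurable rules, and more.|Likewise does not support spiders other than Scrapy. According to user feedback, v1.0 has many bugs; v2.0 is expected to improve on this.|   |

+| [SpiderKeeper](https://github.com/DormyMo/SpiderKeeper) | Python Flask|Based on Scrapyd. An open-source Scrapyhub. Very clean UI. Supports cron jobs.|Perhaps too minimal: no pagination, no node management, and no support for spiders other than Scrapy.|   |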

## Q&A

@@ -254,6 +294,9 @@ Crawlab使用起来很方便,也很通用,可以适用于几乎任何主流

+

+

## Architecture

Crawlab's architecture consists of a Master Node and multiple Worker Nodes, plus Redis and MongoDB databases, which mainly handle node communication and data storage.

-

+

The frontend app makes requests to the Master Node, which assigns tasks and deploys spiders through MongoDB and Redis. When a Worker Node receives a task, it executes the crawling task and stores the results in MongoDB. The architecture is leaner than in the Celery-based versions before `v0.3.0`: the unnecessary Flower module, which provided node monitoring, has been removed, and node monitoring is now handled mainly by Redis.
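
The dispatch flow described above can be pictured with a small in-memory sketch. This is only an illustration, not Crawlab's implementation: a `queue.Queue` stands in for Redis, a plain list stands in for a MongoDB collection, and the names `dispatch` and `work` are hypothetical.

```python
import queue
import uuid


def dispatch(task_queue, spider_cmd):
    """Master side: enqueue a task message and return its ID."""
    task_id = str(uuid.uuid4())
    task_queue.put({"task_id": task_id, "cmd": spider_cmd})
    return task_id


def work(task_queue, results):
    """Worker side: take one task, 'run' it, and store a tagged result."""
    task = task_queue.get()
    # A real worker would execute task["cmd"] as a shell command here;
    # this sketch only records that the task was handled.
    results.append({"task_id": task["task_id"], "status": "finished"})
    task_queue.task_done()


task_queue = queue.Queue()  # stand-in for Redis (assumption)
results = []                # stand-in for a MongoDB collection (assumption)

task_id = dispatch(task_queue, "scrapy crawl example")
work(task_queue, results)
```

The point of the sketch is the separation of roles: the master only produces task messages, while the worker consumes them and writes results keyed by the task ID.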

@@ -161,35 +196,43 @@ Frontend is a SPA based on

## Integration with Other Frameworks

-A crawling task is actually executed through a shell command. The task ID is passed to the crawling task process as an environment variable named `CRAWLAB_TASK_ID`, so that scraped data can be associated with the task. Crawlab also passes another environment variable, `CRAWLAB_COLLECTION`, which names the collection where result data is stored.

+[Crawlab SDK](https://github.com/crawlab-team/crawlab-sdk) provides some `helper` methods to make it easier for you to integrate your spiders into Crawlab, e.g. saving results.

+

+⚠️ Note: make sure you have installed `crawlab-sdk` with pip.

### Scrapy

-Below is an example of integrating Crawlab with Scrapy in pipelines.

+In `settings.py` in your Scrapy project, find the variable named `ITEM_PIPELINES` (a `dict` variable) and add the content below.

```python

-import os

-from pymongo import MongoClient

-

-MONGO_HOST = '192.168.99.100'

-MONGO_PORT = 27017

-MONGO_DB = 'crawlab_test'

-

-# scrapy example in the pipeline

-class JuejinPipeline(object):

- mongo = MongoClient(host=MONGO_HOST, port=MONGO_PORT)

- db = mongo[MONGO_DB]

- col_name = os.environ.get('CRAWLAB_COLLECTION')

- if not col_name:

- col_name = 'test'

- col = db[col_name]

-

- def process_item(self, item, spider):

- item['task_id'] = os.environ.get('CRAWLAB_TASK_ID')

- self.col.save(item)

- return item

+ITEM_PIPELINES = {

+ 'crawlab.pipelines.CrawlabMongoPipeline': 888,

+}

```

+Then, start the Scrapy spider. After it's done, you should be able to see the scraped results in **Task Detail -> Result**.

+

+### General Python Spider

+

+Add the content below to your spider files to save results.

+

+```python

+# import result saving method

+from crawlab import save_item

+

+# this is a result record, must be dict type

+result = {'name': 'crawlab'}

+

+# call result saving method

+save_item(result)

+```

+

+Then, start the spider. After it's done, you should be able to see the scraped results in **Task Detail -> Result**.

+

+### Other Frameworks / Languages

+

+A crawling task is actually executed through a shell command. The task ID is passed to the crawling task process as an environment variable named `CRAWLAB_TASK_ID`, so that scraped data can be associated with the task. Crawlab also passes another environment variable, `CRAWLAB_COLLECTION`, which names the collection where result data is stored.
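
For a spider that does not use the SDK, the two environment variables can be read directly. The following is a minimal sketch, assuming results are stored under a `task_id` key and that `'test'` is an acceptable fallback collection name when running outside Crawlab (both mirror the older pipeline example removed in this diff). The helper name `tag_item` is hypothetical.

```python
import os


def tag_item(item, environ=os.environ):
    """Return (collection_name, copy of item with task_id attached)."""
    tagged = dict(item)  # copy so the caller's dict is not mutated
    tagged["task_id"] = environ.get("CRAWLAB_TASK_ID")
    # Fall back to 'test' when the spider runs outside Crawlab (assumption).
    collection = environ.get("CRAWLAB_COLLECTION", "test")
    return collection, tagged


# With pymongo, the tagged document could then be written with something like
#   db[collection].insert_one(tagged)
# where `db` is a database handle you create yourself.
collection, tagged = tag_item({"name": "crawlab"})
```

Passing the environment as a parameter keeps the helper easy to exercise locally with a plain `dict` instead of real environment variables.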

+

## Comparison with Other Frameworks

There are existing spider management frameworks. So why use Crawlab?

@@ -198,13 +241,12 @@ The reason is that most of the existing platforms are depending on Scrapyd, whic

Crawlab is easy to use and general enough to run spiders in any language and any framework. It also has a beautiful frontend interface that makes managing spiders much easier.

-|Framework | Type | Distributed | Frontend | Scrapyd-Dependent |

-|:---:|:---:|:---:|:---:|:---:|

-| [Crawlab](https://github.com/crawlab-team/crawlab) | Admin Platform | Y | Y | N

-| [ScrapydWeb](https://github.com/my8100/scrapydweb) | Admin Platform | Y | Y | Y

-| [SpiderKeeper](https://github.com/DormyMo/SpiderKeeper) | Admin Platform | Y | Y | Y

-| [Gerapy](https://github.com/Gerapy/Gerapy) | Admin Platform | Y | Y | Y

-| [Scrapyd](https://github.com/scrapy/scrapyd) | Web Service | Y | N | N/A

+|Framework | Technology | Pros | Cons | GitHub Stats |

+|:---|:---|:---|-----| :---- |

+| [Crawlab](https://github.com/crawlab-team/crawlab) | Golang + Vue|Not limited to Scrapy; works with all programming languages and frameworks. Beautiful UI. Native support for distributed spiders. Supports spider management, task management, cron jobs, result export, analytics, notifications, configurable spiders, an online code editor, etc.|Does not yet support spider versioning|   |

+| [ScrapydWeb](https://github.com/my8100/scrapydweb) | Python Flask + Vue|Beautiful UI, built-in Scrapy log parser, stats and graphs for task execution. Supports node management, cron jobs, mail notifications, and mobile. A full-featured spider management platform.|Does not support spiders other than Scrapy. Limited performance because of the Python Flask backend.|   |

+| [Gerapy](https://github.com/Gerapy/Gerapy) | Python Django + Vue|Gerapy is built by web crawler guru [Germey Cui](https://github.com/Germey). Simple installation and deployment. Beautiful UI. Supports node management, code editing, configurable crawl rules, etc.|Likewise does not support spiders other than Scrapy. Many bugs in v1.0, according to user feedback; improvements are expected in v2.0.|   |

+| [SpiderKeeper](https://github.com/DormyMo/SpiderKeeper) | Python Flask|Open-source Scrapyhub. Concise and simple UI. Supports cron jobs.|Perhaps too simplified: no pagination, no node management, and no support for spiders other than Scrapy.|   |

## Contributors

@@ -219,6 +261,9 @@ Crawlab is easy to use, general enough to adapt spiders in any language and any

+[](https://semaphoreci.com/joeybloggs/locales)

+[](https://goreportcard.com/report/github.com/go-playground/locales)

+[](https://godoc.org/github.com/go-playground/locales)

+

+[](https://gitter.im/go-playground/locales?utm_source=badge&utm_medium=badge&utm_campaign=pr-badge)

+

+Locales is a set of locales generated from the [Unicode CLDR Project](http://cldr.unicode.org/) which can be used independently or within

+an i18n package; these were built for use with, but not exclusive to, [Universal Translator](https://github.com/go-playground/universal-translator).

+

+Features

+--------

+- [x] Rules generated from the latest [CLDR](http://cldr.unicode.org/index/downloads) data, v31.0.1

+- [x] Contains Cardinal, Ordinal and Range Plural Rules

+- [x] Contains Month, Weekday and Timezone translations built in

+- [x] Contains Date & Time formatting functions

+- [x] Contains Number, Currency, Accounting and Percent formatting functions

+- [x] Supports the "Gregorian" calendar only ( my time isn't unlimited, had to draw the line somewhere )

+

+Full Tests

+--------------------

+I could sure use your help adding tests for every locale, it is a huge undertaking and I just don't have the free time to do it all at the moment;

+any help would be **greatly appreciated!!!!** please see [issue](https://github.com/go-playground/locales/issues/1) for details.

+

+Installation

+-----------

+

+Use go get

+

+```shell

+go get github.com/go-playground/locales

+```

+

+NOTES

+--------

+You'll notice most return types are []byte, this is because most of the time the results will be concatenated with a larger body

+of text and can avoid some allocations if already appending to a byte array, otherwise just cast as string.

+

+Usage

+-------

+```go

+package main

+

+import (

+ "fmt"

+ "time"

+

+ "github.com/go-playground/locales/currency"

+ "github.com/go-playground/locales/en_CA"

+)

+

+func main() {

+

+ loc, _ := time.LoadLocation("America/Toronto")

+ datetime := time.Date(2016, 02, 03, 9, 0, 1, 0, loc)

+

+ l := en_CA.New()

+

+ // Dates

+ fmt.Println(l.FmtDateFull(datetime))

+ fmt.Println(l.FmtDateLong(datetime))

+ fmt.Println(l.FmtDateMedium(datetime))

+ fmt.Println(l.FmtDateShort(datetime))

+

+ // Times

+ fmt.Println(l.FmtTimeFull(datetime))

+ fmt.Println(l.FmtTimeLong(datetime))

+ fmt.Println(l.FmtTimeMedium(datetime))

+ fmt.Println(l.FmtTimeShort(datetime))

+

+ // Months Wide

+ fmt.Println(l.MonthWide(time.January))

+ fmt.Println(l.MonthWide(time.February))

+ fmt.Println(l.MonthWide(time.March))

+ // ...

+

+ // Months Abbreviated

+ fmt.Println(l.MonthAbbreviated(time.January))

+ fmt.Println(l.MonthAbbreviated(time.February))

+ fmt.Println(l.MonthAbbreviated(time.March))

+ // ...

+

+ // Months Narrow

+ fmt.Println(l.MonthNarrow(time.January))

+ fmt.Println(l.MonthNarrow(time.February))

+ fmt.Println(l.MonthNarrow(time.March))

+ // ...

+

+ // Weekdays Wide

+ fmt.Println(l.WeekdayWide(time.Sunday))

+ fmt.Println(l.WeekdayWide(time.Monday))

+ fmt.Println(l.WeekdayWide(time.Tuesday))

+ // ...

+

+ // Weekdays Abbreviated

+ fmt.Println(l.WeekdayAbbreviated(time.Sunday))

+ fmt.Println(l.WeekdayAbbreviated(time.Monday))

+ fmt.Println(l.WeekdayAbbreviated(time.Tuesday))

+ // ...

+

+ // Weekdays Short

+ fmt.Println(l.WeekdayShort(time.Sunday))

+ fmt.Println(l.WeekdayShort(time.Monday))

+ fmt.Println(l.WeekdayShort(time.Tuesday))

+ // ...

+

+ // Weekdays Narrow

+ fmt.Println(l.WeekdayNarrow(time.Sunday))

+ fmt.Println(l.WeekdayNarrow(time.Monday))

+ fmt.Println(l.WeekdayNarrow(time.Tuesday))

+ // ...

+

+ var f64 float64

+

+ f64 = -10356.4523

+

+ // Number

+ fmt.Println(l.FmtNumber(f64, 2))

+

+ // Currency

+ fmt.Println(l.FmtCurrency(f64, 2, currency.CAD))

+ fmt.Println(l.FmtCurrency(f64, 2, currency.USD))

+

+ // Accounting

+ fmt.Println(l.FmtAccounting(f64, 2, currency.CAD))

+ fmt.Println(l.FmtAccounting(f64, 2, currency.USD))

+

+ f64 = 78.12

+

+ // Percent

+ fmt.Println(l.FmtPercent(f64, 0))

+

+ // Plural Rules for locale, so you know what rules you must cover

+ fmt.Println(l.PluralsCardinal())

+ fmt.Println(l.PluralsOrdinal())

+

+ // Cardinal Plural Rules

+ fmt.Println(l.CardinalPluralRule(1, 0))

+ fmt.Println(l.CardinalPluralRule(1.0, 0))

+ fmt.Println(l.CardinalPluralRule(1.0, 1))

+ fmt.Println(l.CardinalPluralRule(3, 0))

+

+ // Ordinal Plural Rules

+ fmt.Println(l.OrdinalPluralRule(21, 0)) // 21st

+ fmt.Println(l.OrdinalPluralRule(22, 0)) // 22nd

+ fmt.Println(l.OrdinalPluralRule(33, 0)) // 33rd

+ fmt.Println(l.OrdinalPluralRule(34, 0)) // 34th

+

+ // Range Plural Rules

+ fmt.Println(l.RangePluralRule(1, 0, 1, 0)) // 1-1

+ fmt.Println(l.RangePluralRule(1, 0, 2, 0)) // 1-2

+ fmt.Println(l.RangePluralRule(5, 0, 8, 0)) // 5-8

+}

+```

+

+NOTES:

+-------

+These rules were generated from the [Unicode CLDR Project](http://cldr.unicode.org/), if you encounter any issues

+I strongly encourage contributing to the CLDR project to get the locale information corrected and the next time

+these locales are regenerated the fix will come with.

+

+I do however realize that time constraints are often important and so there are two options:

+

+1. Create your own locale, copy, paste and modify, and ensure it complies with the `Translator` interface.

+2. Add an exception in the locale generation code directly and once regenerated, fix will be in place.

+

+Please do not make fixes inside the locale files; they WILL get overwritten when the locales are regenerated.

+

+License

+------

+Distributed under MIT License, please see license file in code for more details.

diff --git a/backend/vendor/github.com/go-playground/locales/currency/currency.go b/backend/vendor/github.com/go-playground/locales/currency/currency.go

new file mode 100644

index 00000000..cdaba596

--- /dev/null

+++ b/backend/vendor/github.com/go-playground/locales/currency/currency.go

@@ -0,0 +1,308 @@

+package currency

+

+// Type is the currency type associated with the locales currency enum

+type Type int

+

+// locale currencies

+const (

+ ADP Type = iota

+ AED

+ AFA

+ AFN

+ ALK

+ ALL

+ AMD

+ ANG

+ AOA

+ AOK

+ AON

+ AOR

+ ARA

+ ARL

+ ARM

+ ARP

+ ARS

+ ATS

+ AUD

+ AWG

+ AZM

+ AZN

+ BAD

+ BAM

+ BAN

+ BBD

+ BDT

+ BEC

+ BEF

+ BEL

+ BGL

+ BGM

+ BGN

+ BGO

+ BHD

+ BIF

+ BMD

+ BND

+ BOB

+ BOL

+ BOP

+ BOV

+ BRB

+ BRC

+ BRE

+ BRL

+ BRN

+ BRR

+ BRZ

+ BSD

+ BTN

+ BUK

+ BWP

+ BYB

+ BYN

+ BYR

+ BZD

+ CAD

+ CDF

+ CHE

+ CHF

+ CHW

+ CLE

+ CLF

+ CLP

+ CNH

+ CNX

+ CNY

+ COP

+ COU

+ CRC

+ CSD

+ CSK

+ CUC

+ CUP

+ CVE

+ CYP

+ CZK

+ DDM

+ DEM

+ DJF

+ DKK

+ DOP

+ DZD

+ ECS

+ ECV

+ EEK

+ EGP

+ ERN

+ ESA

+ ESB

+ ESP

+ ETB

+ EUR

+ FIM

+ FJD

+ FKP

+ FRF

+ GBP

+ GEK

+ GEL

+ GHC

+ GHS

+ GIP

+ GMD

+ GNF

+ GNS

+ GQE

+ GRD

+ GTQ

+ GWE

+ GWP

+ GYD

+ HKD

+ HNL

+ HRD

+ HRK

+ HTG

+ HUF

+ IDR

+ IEP

+ ILP

+ ILR

+ ILS

+ INR

+ IQD

+ IRR

+ ISJ

+ ISK

+ ITL

+ JMD

+ JOD

+ JPY

+ KES

+ KGS

+ KHR

+ KMF

+ KPW

+ KRH

+ KRO

+ KRW

+ KWD

+ KYD

+ KZT

+ LAK

+ LBP

+ LKR

+ LRD

+ LSL

+ LTL

+ LTT

+ LUC

+ LUF

+ LUL

+ LVL

+ LVR

+ LYD

+ MAD

+ MAF

+ MCF

+ MDC

+ MDL

+ MGA

+ MGF

+ MKD

+ MKN

+ MLF

+ MMK

+ MNT

+ MOP

+ MRO

+ MTL

+ MTP

+ MUR

+ MVP

+ MVR

+ MWK

+ MXN

+ MXP

+ MXV

+ MYR

+ MZE

+ MZM

+ MZN

+ NAD

+ NGN

+ NIC

+ NIO

+ NLG

+ NOK

+ NPR

+ NZD

+ OMR

+ PAB

+ PEI

+ PEN

+ PES

+ PGK

+ PHP

+ PKR

+ PLN

+ PLZ

+ PTE

+ PYG

+ QAR

+ RHD

+ ROL

+ RON

+ RSD

+ RUB

+ RUR

+ RWF

+ SAR

+ SBD

+ SCR

+ SDD

+ SDG

+ SDP

+ SEK

+ SGD

+ SHP

+ SIT

+ SKK

+ SLL

+ SOS

+ SRD

+ SRG

+ SSP

+ STD

+ STN

+ SUR

+ SVC

+ SYP

+ SZL

+ THB

+ TJR

+ TJS

+ TMM

+ TMT

+ TND

+ TOP

+ TPE

+ TRL

+ TRY

+ TTD

+ TWD

+ TZS

+ UAH

+ UAK

+ UGS

+ UGX

+ USD

+ USN

+ USS

+ UYI

+ UYP

+ UYU

+ UZS

+ VEB

+ VEF

+ VND

+ VNN

+ VUV

+ WST

+ XAF

+ XAG

+ XAU

+ XBA

+ XBB

+ XBC

+ XBD

+ XCD

+ XDR

+ XEU

+ XFO

+ XFU

+ XOF

+ XPD

+ XPF

+ XPT

+ XRE

+ XSU

+ XTS

+ XUA

+ XXX

+ YDD

+ YER

+ YUD

+ YUM

+ YUN

+ YUR

+ ZAL

+ ZAR

+ ZMK

+ ZMW

+ ZRN

+ ZRZ

+ ZWD

+ ZWL

+ ZWR

+)

diff --git a/backend/vendor/github.com/go-playground/locales/logo.png b/backend/vendor/github.com/go-playground/locales/logo.png

new file mode 100644

index 00000000..3038276e

Binary files /dev/null and b/backend/vendor/github.com/go-playground/locales/logo.png differ

diff --git a/backend/vendor/github.com/go-playground/locales/rules.go b/backend/vendor/github.com/go-playground/locales/rules.go

new file mode 100644

index 00000000..92029001

--- /dev/null

+++ b/backend/vendor/github.com/go-playground/locales/rules.go

@@ -0,0 +1,293 @@

+package locales

+

+import (

+ "strconv"

+ "time"

+

+ "github.com/go-playground/locales/currency"

+)

+

+// // ErrBadNumberValue is returned when the number passed for

+// // plural rule determination cannot be parsed

+// type ErrBadNumberValue struct {

+// NumberValue string

+// InnerError error

+// }

+

+// // Error returns ErrBadNumberValue error string

+// func (e *ErrBadNumberValue) Error() string {

+// return fmt.Sprintf("Invalid Number Value '%s' %s", e.NumberValue, e.InnerError)

+// }

+

+// var _ error = new(ErrBadNumberValue)

+

+// PluralRule denotes the type of plural rules

+type PluralRule int

+

+// PluralRule's

+const (

+ PluralRuleUnknown PluralRule = iota

+ PluralRuleZero // zero

+ PluralRuleOne // one - singular

+ PluralRuleTwo // two - dual

+ PluralRuleFew // few - paucal

+ PluralRuleMany // many - also used for fractions if they have a separate class

+ PluralRuleOther // other - required—general plural form—also used if the language only has a single form

+)

+

+const (

+ pluralsString = "UnknownZeroOneTwoFewManyOther"

+)

+

+// Translator encapsulates an instance of a locale

+// NOTE: some values are returned as a []byte just in case the caller

+// wishes to add more and can help avoid allocations; otherwise just cast as string

+type Translator interface {

+

+ // The following Functions are for overriding, debugging or developing

+ // with a Translator Locale

+

+ // Locale returns the string value of the translator

+ Locale() string

+

+ // returns an array of cardinal plural rules associated

+ // with this translator

+ PluralsCardinal() []PluralRule

+

+ // returns an array of ordinal plural rules associated

+ // with this translator

+ PluralsOrdinal() []PluralRule

+

+ // returns an array of range plural rules associated

+ // with this translator

+ PluralsRange() []PluralRule

+

+ // returns the cardinal PluralRule given 'num' and digits/precision of 'v' for locale

+ CardinalPluralRule(num float64, v uint64) PluralRule

+

+ // returns the ordinal PluralRule given 'num' and digits/precision of 'v' for locale

+ OrdinalPluralRule(num float64, v uint64) PluralRule

+

+ // returns the range PluralRule given 'num1', 'num2' and digits/precision of 'v1' and 'v2' for locale

+ RangePluralRule(num1 float64, v1 uint64, num2 float64, v2 uint64) PluralRule

+

+ // returns the locales abbreviated month given the 'month' provided

+ MonthAbbreviated(month time.Month) string

+

+ // returns the locales abbreviated months

+ MonthsAbbreviated() []string

+

+ // returns the locales narrow month given the 'month' provided

+ MonthNarrow(month time.Month) string

+

+ // returns the locales narrow months

+ MonthsNarrow() []string

+

+ // returns the locales wide month given the 'month' provided

+ MonthWide(month time.Month) string

+

+ // returns the locales wide months

+ MonthsWide() []string

+

+ // returns the locales abbreviated weekday given the 'weekday' provided

+ WeekdayAbbreviated(weekday time.Weekday) string

+

+ // returns the locales abbreviated weekdays

+ WeekdaysAbbreviated() []string

+

+ // returns the locales narrow weekday given the 'weekday' provided

+ WeekdayNarrow(weekday time.Weekday) string

+

+ // returns the locales narrow weekdays

+ WeekdaysNarrow() []string

+

+ // returns the locales short weekday given the 'weekday' provided

+ WeekdayShort(weekday time.Weekday) string

+

+ // returns the locales short weekdays

+ WeekdaysShort() []string

+

+ // returns the locales wide weekday given the 'weekday' provided

+ WeekdayWide(weekday time.Weekday) string

+

+ // returns the locales wide weekdays

+ WeekdaysWide() []string

+

+ // The following Functions are common Formatting functions for the Translator's Locale

+

+ // returns 'num' with digits/precision of 'v' for locale and handles both Whole and Real numbers based on 'v'

+ FmtNumber(num float64, v uint64) string

+

+ // returns 'num' with digits/precision of 'v' for locale and handles both Whole and Real numbers based on 'v'

+ // NOTE: 'num' passed into FmtPercent is assumed to be in percent already

+ FmtPercent(num float64, v uint64) string

+

+ // returns the currency representation of 'num' with digits/precision of 'v' for locale

+ FmtCurrency(num float64, v uint64, currency currency.Type) string

+

+ // returns the currency representation of 'num' with digits/precision of 'v' for locale

+ // in accounting notation.

+ FmtAccounting(num float64, v uint64, currency currency.Type) string

+

+ // returns the short date representation of 't' for locale

+ FmtDateShort(t time.Time) string

+

+ // returns the medium date representation of 't' for locale

+ FmtDateMedium(t time.Time) string

+

+ // returns the long date representation of 't' for locale

+ FmtDateLong(t time.Time) string

+

+ // returns the full date representation of 't' for locale

+ FmtDateFull(t time.Time) string

+

+ // returns the short time representation of 't' for locale

+ FmtTimeShort(t time.Time) string

+

+ // returns the medium time representation of 't' for locale

+ FmtTimeMedium(t time.Time) string

+

+ // returns the long time representation of 't' for locale

+ FmtTimeLong(t time.Time) string

+

+ // returns the full time representation of 't' for locale

+ FmtTimeFull(t time.Time) string

+}

+

+// String returns the string value of PluralRule

+func (p PluralRule) String() string {

+

+ switch p {

+ case PluralRuleZero:

+ return pluralsString[7:11]

+ case PluralRuleOne:

+ return pluralsString[11:14]

+ case PluralRuleTwo:

+ return pluralsString[14:17]

+ case PluralRuleFew:

+ return pluralsString[17:20]

+ case PluralRuleMany:

+ return pluralsString[20:24]

+ case PluralRuleOther:

+ return pluralsString[24:]

+ default:

+ return pluralsString[:7]

+ }

+}

+

+//

+// Precision Notes:

+//

+// must specify a precision >= 0, and here is why https://play.golang.org/p/LyL90U0Vyh

+//

+// v := float64(3.141)

+// i := float64(int64(v))

+//

+// fmt.Println(v - i)

+//

+// or

+//

+// s := strconv.FormatFloat(v-i, 'f', -1, 64)

+// fmt.Println(s)

+//

+// these will not print what you'd expect: 0.14100000000000001

+// and so this library requires a precision to be specified, or

+// inaccurate plural rules could be applied.

+//

+//

+//

+// n - absolute value of the source number (integer and decimals).

+// i - integer digits of n.

+// v - number of visible fraction digits in n, with trailing zeros.

+// w - number of visible fraction digits in n, without trailing zeros.

+// f - visible fractional digits in n, with trailing zeros.

+// t - visible fractional digits in n, without trailing zeros.

+//

+//

+// Func(num float64, v uint64) // v = digits/precision; being unsigned, it prevents -1 as a special case, which can lead to very unexpected behaviour (see the precision notes above).

+//

+// n := math.Abs(num)

+// i := int64(n)

+// v := v

+//

+//

+// w := strconv.FormatFloat(num-float64(i), 'f', int(v), 64) // then parse backwards on string until no more zero's....

+// f := strconv.FormatFloat(n, 'f', int(v), 64) // then turn everything after decimal into an int64

+// t := strconv.FormatFloat(n, 'f', int(v), 64) // then parse backwards on string until no more zero's....

+//

+//

+//

+// General Inclusion Rules

+// - v will always be available inherently

+// - all require n

+// - w requires i

+//

+

+// W returns the number of visible fraction digits in N, without trailing zeros.

+func W(n float64, v uint64) (w int64) {

+

+ s := strconv.FormatFloat(n-float64(int64(n)), 'f', int(v), 64)

+

+ // will either be '0' or '0.xxxx', so if 1 then w will be zero

+ // otherwise need to parse

+ if len(s) != 1 {

+

+ s = s[2:]

+ end := 0

+

+ for i := len(s) - 1; i >= 0; i-- {

+ if s[i] != '0' {

+ end = i + 1

+ break

+ }

+ }

+

+ w = int64(len(s[:end]))

+ }

+

+ return

+}

+

+// F returns the visible fractional digits in N, with trailing zeros.

+func F(n float64, v uint64) (f int64) {

+

+ s := strconv.FormatFloat(n-float64(int64(n)), 'f', int(v), 64)

+

+ // will either be '0' or '0.xxxx', so if 1 then f will be zero

+ // otherwise need to parse

+ if len(s) != 1 {

+

+ // ignoring error, because it can't fail as we generated

+ // the string internally from a real number

+ f, _ = strconv.ParseInt(s[2:], 10, 64)

+ }

+

+ return

+}

+

+// T returns the visible fractional digits in N, without trailing zeros.

+func T(n float64, v uint64) (t int64) {

+

+ s := strconv.FormatFloat(n-float64(int64(n)), 'f', int(v), 64)

+

+ // will either be '0' or '0.xxxx', so if 1 then t will be zero

+ // otherwise need to parse

+ if len(s) != 1 {

+

+ s = s[2:]

+ end := 0

+

+ for i := len(s) - 1; i >= 0; i-- {

+ if s[i] != '0' {

+ end = i + 1

+ break

+ }

+ }

+

+ // ignoring error, because it can't fail as we generated

+ // the string internally from a real number

+ t, _ = strconv.ParseInt(s[:end], 10, 64)

+ }

+

+ return

+}

diff --git a/backend/vendor/github.com/go-playground/universal-translator/.gitignore b/backend/vendor/github.com/go-playground/universal-translator/.gitignore

new file mode 100644

index 00000000..26617857

--- /dev/null

+++ b/backend/vendor/github.com/go-playground/universal-translator/.gitignore

@@ -0,0 +1,24 @@

+# Compiled Object files, Static and Dynamic libs (Shared Objects)

+*.o

+*.a

+*.so

+

+# Folders

+_obj

+_test

+

+# Architecture specific extensions/prefixes

+*.[568vq]

+[568vq].out

+

+*.cgo1.go

+*.cgo2.c

+_cgo_defun.c

+_cgo_gotypes.go

+_cgo_export.*

+

+_testmain.go

+

+*.exe

+*.test

+*.prof

\ No newline at end of file

diff --git a/backend/vendor/github.com/go-playground/universal-translator/LICENSE b/backend/vendor/github.com/go-playground/universal-translator/LICENSE

new file mode 100644

index 00000000..8d8aba15

--- /dev/null

+++ b/backend/vendor/github.com/go-playground/universal-translator/LICENSE

@@ -0,0 +1,21 @@

+The MIT License (MIT)

+

+Copyright (c) 2016 Go Playground

+

+Permission is hereby granted, free of charge, to any person obtaining a copy

+of this software and associated documentation files (the "Software"), to deal

+in the Software without restriction, including without limitation the rights

+to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

+copies of the Software, and to permit persons to whom the Software is

+furnished to do so, subject to the following conditions:

+

+The above copyright notice and this permission notice shall be included in all

+copies or substantial portions of the Software.

+

+THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

+IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

+FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

+AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

+LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

+OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

+SOFTWARE.

diff --git a/backend/vendor/github.com/go-playground/universal-translator/README.md b/backend/vendor/github.com/go-playground/universal-translator/README.md

new file mode 100644

index 00000000..24aef158

--- /dev/null

+++ b/backend/vendor/github.com/go-playground/universal-translator/README.md

@@ -0,0 +1,90 @@

+## universal-translator

+

+[](https://semaphoreci.com/joeybloggs/locales)

+[](https://goreportcard.com/report/github.com/go-playground/locales)

+[](https://godoc.org/github.com/go-playground/locales)

+

+[](https://gitter.im/go-playground/locales?utm_source=badge&utm_medium=badge&utm_campaign=pr-badge)

+

+Locales is a set of locales generated from the [Unicode CLDR Project](http://cldr.unicode.org/) which can be used independently or within

+an i18n package; these were built for use with, but not exclusive to, [Universal Translator](https://github.com/go-playground/universal-translator).

+

+Features

+--------

+- [x] Rules generated from the latest [CLDR](http://cldr.unicode.org/index/downloads) data, v31.0.1

+- [x] Contains Cardinal, Ordinal and Range Plural Rules

+- [x] Contains Month, Weekday and Timezone translations built in

+- [x] Contains Date & Time formatting functions

+- [x] Contains Number, Currency, Accounting and Percent formatting functions

+- [x] Supports the "Gregorian" calendar only ( my time isn't unlimited, had to draw the line somewhere )

+

+Full Tests

+--------------------

+I could sure use your help adding tests for every locale, it is a huge undertaking and I just don't have the free time to do it all at the moment;

+any help would be **greatly appreciated!!!!** please see [issue](https://github.com/go-playground/locales/issues/1) for details.

+

+Installation

+-----------

+

+Use go get

+

+```shell

+go get github.com/go-playground/locales

+```

+

+NOTES

+--------

+You'll notice most return types are []byte, this is because most of the time the results will be concatenated with a larger body

+of text and can avoid some allocations if already appending to a byte array, otherwise just cast as string.

+

+Usage

+-------

+```go

+package main

+

+import (

+ "fmt"

+ "time"

+

+ "github.com/go-playground/locales/currency"

+ "github.com/go-playground/locales/en_CA"

+)

+

+func main() {

+

+ loc, _ := time.LoadLocation("America/Toronto")

+ datetime := time.Date(2016, 02, 03, 9, 0, 1, 0, loc)

+

+ l := en_CA.New()

+

+ // Dates

+ fmt.Println(l.FmtDateFull(datetime))

+ fmt.Println(l.FmtDateLong(datetime))

+ fmt.Println(l.FmtDateMedium(datetime))

+ fmt.Println(l.FmtDateShort(datetime))

+

+ // Times

+ fmt.Println(l.FmtTimeFull(datetime))

+ fmt.Println(l.FmtTimeLong(datetime))

+ fmt.Println(l.FmtTimeMedium(datetime))

+ fmt.Println(l.FmtTimeShort(datetime))

+

+ // Months Wide

+ fmt.Println(l.MonthWide(time.January))

+ fmt.Println(l.MonthWide(time.February))

+ fmt.Println(l.MonthWide(time.March))

+ // ...

+

+ // Months Abbreviated

+ fmt.Println(l.MonthAbbreviated(time.January))

+ fmt.Println(l.MonthAbbreviated(time.February))

+ fmt.Println(l.MonthAbbreviated(time.March))

+ // ...

+

+ // Months Narrow

+ fmt.Println(l.MonthNarrow(time.January))

+ fmt.Println(l.MonthNarrow(time.February))

+ fmt.Println(l.MonthNarrow(time.March))

+ // ...

+

+ // Weekdays Wide

+ fmt.Println(l.WeekdayWide(time.Sunday))

+ fmt.Println(l.WeekdayWide(time.Monday))

+ fmt.Println(l.WeekdayWide(time.Tuesday))

+ // ...

+

+ // Weekdays Abbreviated

+ fmt.Println(l.WeekdayAbbreviated(time.Sunday))

+ fmt.Println(l.WeekdayAbbreviated(time.Monday))

+ fmt.Println(l.WeekdayAbbreviated(time.Tuesday))

+ // ...

+

+ // Weekdays Short

+ fmt.Println(l.WeekdayShort(time.Sunday))

+ fmt.Println(l.WeekdayShort(time.Monday))

+ fmt.Println(l.WeekdayShort(time.Tuesday))

+ // ...

+

+ // Weekdays Narrow

+ fmt.Println(l.WeekdayNarrow(time.Sunday))

+ fmt.Println(l.WeekdayNarrow(time.Monday))

+ fmt.Println(l.WeekdayNarrow(time.Tuesday))

+ // ...

+

+ var f64 float64

+

+ f64 = -10356.4523

+

+ // Number

+ fmt.Println(l.FmtNumber(f64, 2))

+

+ // Currency

+ fmt.Println(l.FmtCurrency(f64, 2, currency.CAD))

+ fmt.Println(l.FmtCurrency(f64, 2, currency.USD))

+

+ // Accounting

+ fmt.Println(l.FmtAccounting(f64, 2, currency.CAD))

+ fmt.Println(l.FmtAccounting(f64, 2, currency.USD))

+

+ f64 = 78.12

+

+ // Percent

+ fmt.Println(l.FmtPercent(f64, 0))

+

+ // Plural Rules for locale, so you know what rules you must cover

+ fmt.Println(l.PluralsCardinal())

+ fmt.Println(l.PluralsOrdinal())

+

+ // Cardinal Plural Rules

+ fmt.Println(l.CardinalPluralRule(1, 0))

+ fmt.Println(l.CardinalPluralRule(1.0, 0))

+ fmt.Println(l.CardinalPluralRule(1.0, 1))

+ fmt.Println(l.CardinalPluralRule(3, 0))

+

+ // Ordinal Plural Rules

+ fmt.Println(l.OrdinalPluralRule(21, 0)) // 21st

+ fmt.Println(l.OrdinalPluralRule(22, 0)) // 22nd

+ fmt.Println(l.OrdinalPluralRule(33, 0)) // 33rd

+ fmt.Println(l.OrdinalPluralRule(34, 0)) // 34th

+

+ // Range Plural Rules

+ fmt.Println(l.RangePluralRule(1, 0, 1, 0)) // 1-1

+ fmt.Println(l.RangePluralRule(1, 0, 2, 0)) // 1-2

+ fmt.Println(l.RangePluralRule(5, 0, 8, 0)) // 5-8

+}

+```

+

+NOTES:

+-------

+These rules were generated from the [Unicode CLDR Project](http://cldr.unicode.org/). If you encounter any issues,

+I strongly encourage contributing to the CLDR project to get the locale information corrected; the next time

+these locales are regenerated, the fix will come with them.

+

+I do however realize that time constraints are often important and so there are two options:

+

+1. Create your own locale, copy, paste and modify, and ensure it complies with the `Translator` interface.

+2. Add an exception in the locale generation code directly and once regenerated, fix will be in place.

+

+Please do not make fixes inside the locale files; they WILL get overwritten when the locales are regenerated.

+

+License

+------

+Distributed under MIT License, please see license file in code for more details.

diff --git a/backend/vendor/github.com/go-playground/locales/currency/currency.go b/backend/vendor/github.com/go-playground/locales/currency/currency.go

new file mode 100644

index 00000000..cdaba596

--- /dev/null

+++ b/backend/vendor/github.com/go-playground/locales/currency/currency.go

@@ -0,0 +1,308 @@

+package currency

+

+// Type is the currency type associated with the locales currency enum

+type Type int

+

+// locale currencies

+const (

+ ADP Type = iota

+ AED

+ AFA

+ AFN

+ ALK

+ ALL

+ AMD

+ ANG

+ AOA

+ AOK

+ AON

+ AOR

+ ARA

+ ARL

+ ARM

+ ARP

+ ARS

+ ATS

+ AUD

+ AWG

+ AZM

+ AZN

+ BAD

+ BAM

+ BAN

+ BBD

+ BDT

+ BEC

+ BEF

+ BEL

+ BGL

+ BGM

+ BGN

+ BGO

+ BHD

+ BIF

+ BMD

+ BND

+ BOB

+ BOL

+ BOP

+ BOV

+ BRB

+ BRC

+ BRE

+ BRL

+ BRN

+ BRR

+ BRZ

+ BSD

+ BTN

+ BUK

+ BWP

+ BYB

+ BYN

+ BYR

+ BZD

+ CAD

+ CDF

+ CHE

+ CHF

+ CHW

+ CLE

+ CLF

+ CLP

+ CNH

+ CNX

+ CNY

+ COP

+ COU

+ CRC

+ CSD

+ CSK

+ CUC

+ CUP

+ CVE

+ CYP

+ CZK

+ DDM

+ DEM

+ DJF

+ DKK

+ DOP

+ DZD

+ ECS

+ ECV

+ EEK

+ EGP

+ ERN

+ ESA

+ ESB

+ ESP

+ ETB

+ EUR

+ FIM

+ FJD

+ FKP

+ FRF

+ GBP

+ GEK

+ GEL

+ GHC

+ GHS

+ GIP

+ GMD

+ GNF

+ GNS

+ GQE

+ GRD

+ GTQ

+ GWE

+ GWP

+ GYD

+ HKD

+ HNL

+ HRD

+ HRK

+ HTG

+ HUF

+ IDR

+ IEP

+ ILP

+ ILR

+ ILS

+ INR

+ IQD

+ IRR

+ ISJ

+ ISK

+ ITL

+ JMD

+ JOD

+ JPY

+ KES

+ KGS

+ KHR

+ KMF

+ KPW

+ KRH

+ KRO

+ KRW

+ KWD

+ KYD

+ KZT

+ LAK

+ LBP

+ LKR

+ LRD

+ LSL

+ LTL

+ LTT

+ LUC

+ LUF

+ LUL

+ LVL

+ LVR

+ LYD

+ MAD

+ MAF

+ MCF

+ MDC

+ MDL

+ MGA

+ MGF

+ MKD

+ MKN

+ MLF

+ MMK

+ MNT

+ MOP

+ MRO

+ MTL

+ MTP

+ MUR

+ MVP

+ MVR

+ MWK

+ MXN

+ MXP

+ MXV

+ MYR

+ MZE

+ MZM

+ MZN

+ NAD

+ NGN

+ NIC

+ NIO

+ NLG

+ NOK

+ NPR

+ NZD

+ OMR

+ PAB

+ PEI

+ PEN

+ PES

+ PGK

+ PHP

+ PKR

+ PLN

+ PLZ

+ PTE

+ PYG

+ QAR

+ RHD

+ ROL

+ RON

+ RSD

+ RUB

+ RUR

+ RWF

+ SAR

+ SBD

+ SCR

+ SDD

+ SDG

+ SDP

+ SEK

+ SGD

+ SHP

+ SIT

+ SKK

+ SLL

+ SOS

+ SRD

+ SRG

+ SSP

+ STD

+ STN

+ SUR

+ SVC

+ SYP

+ SZL

+ THB

+ TJR

+ TJS

+ TMM

+ TMT

+ TND

+ TOP

+ TPE

+ TRL

+ TRY

+ TTD

+ TWD

+ TZS

+ UAH

+ UAK

+ UGS

+ UGX

+ USD

+ USN

+ USS

+ UYI

+ UYP

+ UYU

+ UZS

+ VEB

+ VEF

+ VND

+ VNN

+ VUV

+ WST

+ XAF

+ XAG

+ XAU

+ XBA

+ XBB

+ XBC

+ XBD

+ XCD

+ XDR

+ XEU

+ XFO

+ XFU

+ XOF

+ XPD

+ XPF

+ XPT

+ XRE

+ XSU

+ XTS

+ XUA

+ XXX

+ YDD

+ YER

+ YUD

+ YUM

+ YUN

+ YUR

+ ZAL

+ ZAR

+ ZMK

+ ZMW

+ ZRN

+ ZRZ

+ ZWD

+ ZWL

+ ZWR

+)

diff --git a/backend/vendor/github.com/go-playground/locales/logo.png b/backend/vendor/github.com/go-playground/locales/logo.png

new file mode 100644

index 00000000..3038276e

Binary files /dev/null and b/backend/vendor/github.com/go-playground/locales/logo.png differ

diff --git a/backend/vendor/github.com/go-playground/locales/rules.go b/backend/vendor/github.com/go-playground/locales/rules.go

new file mode 100644

index 00000000..92029001

--- /dev/null

+++ b/backend/vendor/github.com/go-playground/locales/rules.go

@@ -0,0 +1,293 @@

+package locales

+

+import (

+ "strconv"

+ "time"

+

+ "github.com/go-playground/locales/currency"

+)

+

+// // ErrBadNumberValue is returned when the number passed for

+// // plural rule determination cannot be parsed

+// type ErrBadNumberValue struct {

+// NumberValue string

+// InnerError error

+// }

+

+// // Error returns ErrBadNumberValue error string

+// func (e *ErrBadNumberValue) Error() string {

+// return fmt.Sprintf("Invalid Number Value '%s' %s", e.NumberValue, e.InnerError)

+// }

+

+// var _ error = new(ErrBadNumberValue)

+

+// PluralRule denotes the type of plural rules

+type PluralRule int

+

+// PluralRule's

+const (

+ PluralRuleUnknown PluralRule = iota

+ PluralRuleZero // zero

+ PluralRuleOne // one - singular

+ PluralRuleTwo // two - dual

+ PluralRuleFew // few - paucal

+ PluralRuleMany // many - also used for fractions if they have a separate class

+ PluralRuleOther // other - required—general plural form—also used if the language only has a single form

+)

+

+const (

+ pluralsString = "UnknownZeroOneTwoFewManyOther"

+)

+

+// Translator encapsulates an instance of a locale

+// NOTE: some values are returned as a []byte just in case the caller

+// wishes to add more and can help avoid allocations; otherwise just cast as string

+type Translator interface {

+

+ // The following Functions are for overriding, debugging or developing

+ // with a Translator Locale

+

+ // Locale returns the string value of the translator

+ Locale() string

+

+ // returns an array of cardinal plural rules associated

+ // with this translator

+ PluralsCardinal() []PluralRule

+

+ // returns an array of ordinal plural rules associated

+ // with this translator

+ PluralsOrdinal() []PluralRule

+

+ // returns an array of range plural rules associated

+ // with this translator

+ PluralsRange() []PluralRule

+

+ // returns the cardinal PluralRule given 'num' and digits/precision of 'v' for locale

+ CardinalPluralRule(num float64, v uint64) PluralRule

+

+ // returns the ordinal PluralRule given 'num' and digits/precision of 'v' for locale

+ OrdinalPluralRule(num float64, v uint64) PluralRule

+

+ // returns the range PluralRule given 'num1', 'num2' and digits/precision of 'v1' and 'v2' for locale

+ RangePluralRule(num1 float64, v1 uint64, num2 float64, v2 uint64) PluralRule

+

+ // returns the locales abbreviated month given the 'month' provided

+ MonthAbbreviated(month time.Month) string

+

+ // returns the locales abbreviated months

+ MonthsAbbreviated() []string

+

+ // returns the locales narrow month given the 'month' provided

+ MonthNarrow(month time.Month) string

+

+ // returns the locales narrow months

+ MonthsNarrow() []string

+

+ // returns the locales wide month given the 'month' provided

+ MonthWide(month time.Month) string

+

+ // returns the locales wide months

+ MonthsWide() []string

+

+ // returns the locales abbreviated weekday given the 'weekday' provided

+ WeekdayAbbreviated(weekday time.Weekday) string

+

+ // returns the locales abbreviated weekdays

+ WeekdaysAbbreviated() []string

+

+ // returns the locales narrow weekday given the 'weekday' provided

+ WeekdayNarrow(weekday time.Weekday) string

+

+ // WeekdaysNarrow returns the locale's narrow weekdays

+ WeekdaysNarrow() []string

+

+ // returns the locales short weekday given the 'weekday' provided

+ WeekdayShort(weekday time.Weekday) string

+

+ // returns the locales short weekdays

+ WeekdaysShort() []string

+

+ // returns the locales wide weekday given the 'weekday' provided

+ WeekdayWide(weekday time.Weekday) string

+

+ // returns the locales wide weekdays

+ WeekdaysWide() []string

+

+ // The following Functions are common Formatting functions for the Translator's Locale

+

+ // returns 'num' with digits/precision of 'v' for locale and handles both Whole and Real numbers based on 'v'

+ FmtNumber(num float64, v uint64) string

+

+ // returns 'num' with digits/precision of 'v' for locale and handles both Whole and Real numbers based on 'v'

+ // NOTE: 'num' passed into FmtPercent is assumed to be in percent already

+ FmtPercent(num float64, v uint64) string

+

+ // returns the currency representation of 'num' with digits/precision of 'v' for locale

+ FmtCurrency(num float64, v uint64, currency currency.Type) string

+

+ // returns the currency representation of 'num' with digits/precision of 'v' for locale

+ // in accounting notation.

+ FmtAccounting(num float64, v uint64, currency currency.Type) string

+

+ // returns the short date representation of 't' for locale

+ FmtDateShort(t time.Time) string

+

+ // returns the medium date representation of 't' for locale

+ FmtDateMedium(t time.Time) string

+

+ // returns the long date representation of 't' for locale

+ FmtDateLong(t time.Time) string

+

+ // returns the full date representation of 't' for locale

+ FmtDateFull(t time.Time) string

+

+ // returns the short time representation of 't' for locale

+ FmtTimeShort(t time.Time) string

+

+ // returns the medium time representation of 't' for locale

+ FmtTimeMedium(t time.Time) string

+

+ // returns the long time representation of 't' for locale

+ FmtTimeLong(t time.Time) string

+

+ // returns the full time representation of 't' for locale

+ FmtTimeFull(t time.Time) string

+}

+

+// String returns the string value of PluralRule

+func (p PluralRule) String() string {

+

+ switch p {

+ case PluralRuleZero:

+ return pluralsString[7:11]

+ case PluralRuleOne:

+ return pluralsString[11:14]

+ case PluralRuleTwo:

+ return pluralsString[14:17]

+ case PluralRuleFew:

+ return pluralsString[17:20]

+ case PluralRuleMany:

+ return pluralsString[20:24]

+ case PluralRuleOther:

+ return pluralsString[24:]

+ default:

+ return pluralsString[:7]

+ }

+}

+

+//

+// Precision Notes:

+//

+// must specify a precision >= 0, and here is why https://play.golang.org/p/LyL90U0Vyh

+//

+// v := float64(3.141)

+// i := float64(int64(v))

+//

+// fmt.Println(v - i)

+//

+// or

+//

+// s := strconv.FormatFloat(v-i, 'f', -1, 64)

+// fmt.Println(s)

+//

+// these will not print what you'd expect: 0.14100000000000001

+// and so this library requires a precision to be specified, or

+// inaccurate plural rules could be applied.

+//

+//

+//

+// n - absolute value of the source number (integer and decimals).

+// i - integer digits of n.

+// v - number of visible fraction digits in n, with trailing zeros.

+// w - number of visible fraction digits in n, without trailing zeros.

+// f - visible fractional digits in n, with trailing zeros.

+// t - visible fractional digits in n, without trailing zeros.

+//

+//

+// Func(num float64, v uint64) // v = digits/precision; being unsigned, it prevents -1 as a special case, which can lead to very unexpected behaviour (see the precision notes above).

+//

+// n := math.Abs(num)

+// i := int64(n)

+// v := v

+//

+//

+// w := strconv.FormatFloat(num-float64(i), 'f', int(v), 64) // then parse backwards on string until no more zero's....

+// f := strconv.FormatFloat(n, 'f', int(v), 64) // then turn everything after decimal into an int64

+// t := strconv.FormatFloat(n, 'f', int(v), 64) // then parse backwards on string until no more zero's....

+//

+//

+//

+// General Inclusion Rules

+// - v will always be available inherently

+// - all require n

+// - w requires i

+//

+

+// W returns the number of visible fraction digits in N, without trailing zeros.

+func W(n float64, v uint64) (w int64) {

+

+ s := strconv.FormatFloat(n-float64(int64(n)), 'f', int(v), 64)

+

+ // will either be '0' or '0.xxxx', so if 1 then w will be zero

+ // otherwise need to parse

+ if len(s) != 1 {

+

+ s = s[2:]

+ end := 0

+

+ for i := len(s) - 1; i >= 0; i-- {

+ if s[i] != '0' {

+ end = i + 1

+ break

+ }

+ }

+

+ w = int64(len(s[:end]))

+ }

+

+ return

+}

+

+// F returns the visible fractional digits in N, with trailing zeros.

+func F(n float64, v uint64) (f int64) {

+

+ s := strconv.FormatFloat(n-float64(int64(n)), 'f', int(v), 64)

+

+ // will either be '0' or '0.xxxx', so if 1 then f will be zero

+ // otherwise need to parse

+ if len(s) != 1 {

+

+ // ignoring error, because it can't fail as we generated

+ // the string internally from a real number

+ f, _ = strconv.ParseInt(s[2:], 10, 64)

+ }

+

+ return

+}

+

+// T returns the visible fractional digits in N, without trailing zeros.

+func T(n float64, v uint64) (t int64) {

+

+ s := strconv.FormatFloat(n-float64(int64(n)), 'f', int(v), 64)

+

+ // will either be '0' or '0.xxxx', so if 1 then t will be zero

+ // otherwise need to parse

+ if len(s) != 1 {

+

+ s = s[2:]

+ end := 0

+

+ for i := len(s) - 1; i >= 0; i-- {

+ if s[i] != '0' {

+ end = i + 1

+ break

+ }

+ }

+

+ // ignoring error, because it can't fail as we generated

+ // the string internally from a real number

+ t, _ = strconv.ParseInt(s[:end], 10, 64)

+ }

+

+ return

+}

diff --git a/backend/vendor/github.com/go-playground/universal-translator/.gitignore b/backend/vendor/github.com/go-playground/universal-translator/.gitignore

new file mode 100644

index 00000000..26617857

--- /dev/null

+++ b/backend/vendor/github.com/go-playground/universal-translator/.gitignore

@@ -0,0 +1,24 @@

+# Compiled Object files, Static and Dynamic libs (Shared Objects)

+*.o

+*.a

+*.so

+

+# Folders

+_obj

+_test

+

+# Architecture specific extensions/prefixes

+*.[568vq]

+[568vq].out

+

+*.cgo1.go

+*.cgo2.c

+_cgo_defun.c

+_cgo_gotypes.go

+_cgo_export.*

+

+_testmain.go

+

+*.exe

+*.test

+*.prof

\ No newline at end of file

diff --git a/backend/vendor/github.com/go-playground/universal-translator/LICENSE b/backend/vendor/github.com/go-playground/universal-translator/LICENSE

new file mode 100644

index 00000000..8d8aba15

--- /dev/null

+++ b/backend/vendor/github.com/go-playground/universal-translator/LICENSE

@@ -0,0 +1,21 @@

+The MIT License (MIT)

+

+Copyright (c) 2016 Go Playground

+

+Permission is hereby granted, free of charge, to any person obtaining a copy

+of this software and associated documentation files (the "Software"), to deal

+in the Software without restriction, including without limitation the rights

+to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

+copies of the Software, and to permit persons to whom the Software is

+furnished to do so, subject to the following conditions:

+

+The above copyright notice and this permission notice shall be included in all

+copies or substantial portions of the Software.

+

+THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

+IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

+FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

+AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

+LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

+OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

+SOFTWARE.

diff --git a/backend/vendor/github.com/go-playground/universal-translator/README.md b/backend/vendor/github.com/go-playground/universal-translator/README.md

new file mode 100644

index 00000000..24aef158

--- /dev/null

+++ b/backend/vendor/github.com/go-playground/universal-translator/README.md

@@ -0,0 +1,90 @@

+## universal-translator

+ +

+[](https://semaphoreci.com/joeybloggs/universal-translator)

+[](https://coveralls.io/github/go-playground/universal-translator)

+[](https://goreportcard.com/report/github.com/go-playground/universal-translator)

+[](https://godoc.org/github.com/go-playground/universal-translator)

+

+[](https://gitter.im/go-playground/universal-translator?utm_source=badge&utm_medium=badge&utm_campaign=pr-badge)

+

+Universal Translator is an i18n Translator for Go/Golang using CLDR data + pluralization rules

+

+Why another i18n library?

+--------------------------

+Because none of the plural rules seem to be correct out there, including the previous implementation of this package,

+so I took it upon myself to create [locales](https://github.com/go-playground/locales) for everyone to use; this package

+is a thin wrapper around [locales](https://github.com/go-playground/locales) in order to store and translate text for

+use in your applications.

+

+Features

+--------

+- [x] Rules generated from the [CLDR](http://cldr.unicode.org/index/downloads) data, v30.0.3

+- [x] Contains Cardinal, Ordinal and Range Plural Rules

+- [x] Contains Month, Weekday and Timezone translations built in

+- [x] Contains Date & Time formatting functions

+- [x] Contains Number, Currency, Accounting and Percent formatting functions

+- [x] Supports the "Gregorian" calendar only ( my time isn't unlimited, had to draw the line somewhere )

+- [x] Support loading translations from files

+- [x] Exporting translations to file(s), mainly for getting them professionally translated

+- [ ] Code Generation for translation files -> Go code.. i.e. after it has been professionally translated

+- [ ] Tests for all languages, I need help with this, please see [here](https://github.com/go-playground/locales/issues/1)

+

+Installation

+-----------

+

+Use go get

+

+```shell

+go get github.com/go-playground/universal-translator

+```

+

+Usage & Documentation

+-------

+

+Please see https://godoc.org/github.com/go-playground/universal-translator for usage docs

+

+##### Examples:

+

+- [Basic](https://github.com/go-playground/universal-translator/tree/master/examples/basic)

+- [Full - no files](https://github.com/go-playground/universal-translator/tree/master/examples/full-no-files)

+- [Full - with files](https://github.com/go-playground/universal-translator/tree/master/examples/full-with-files)

+

+File formatting

+--------------

+All translation types (plain substitution, Cardinal, Ordinal and Range) can be contained within the same file(s);

+they are only separated for easy viewing.

+

+##### Examples:

+

+- [Formats](https://github.com/go-playground/universal-translator/tree/master/examples/file-formats)

+

+##### Basic Makeup

+NOTE: not all fields are needed for all translation types, see [examples](https://github.com/go-playground/universal-translator/tree/master/examples/file-formats)

+```json

+{

+ "locale": "en",

+ "key": "days-left",

+ "trans": "You have {0} day left.",

+ "type": "Cardinal",

+ "rule": "One",

+ "override": false

+}

+```

+|Field|Description|

+|---|---|

+|locale|The locale the translation is for.|

+|key|The translation key that will be used to store and lookup each translation; normally it is a string or integer.|

+|trans|The actual translation text.|

+|type|The type of translation: Cardinal, Ordinal, Range, or "" for a plain substitution (need not be defined for plain substitutions).|

+|rule|The plural rule the translation is for, e.g. One, Two, Few, Many or Other (need not be defined for plain substitutions).|

+|override|If you wish to override an existing translation that has already been registered, set this to 'true'. 99% of the time there is no need to define it.|

+

+Help With Tests

+---------------

+To anyone interested in helping or contributing, I sure could use some help creating tests for each language.

+Please see issue [here](https://github.com/go-playground/locales/issues/1) for details.

+

+License

+------

+Distributed under MIT License, please see license file in code for more details.

diff --git a/backend/vendor/github.com/go-playground/universal-translator/errors.go b/backend/vendor/github.com/go-playground/universal-translator/errors.go

new file mode 100644

index 00000000..38b163b6

--- /dev/null

+++ b/backend/vendor/github.com/go-playground/universal-translator/errors.go

@@ -0,0 +1,148 @@

+package ut

+

+import (

+ "errors"

+ "fmt"

+

+ "github.com/go-playground/locales"

+)

+

+var (

+ // ErrUnknowTranslation indicates the translation could not be found

+ ErrUnknowTranslation = errors.New("Unknown Translation")

+)

+

+var _ error = new(ErrConflictingTranslation)

+var _ error = new(ErrRangeTranslation)

+var _ error = new(ErrOrdinalTranslation)

+var _ error = new(ErrCardinalTranslation)

+var _ error = new(ErrMissingPluralTranslation)

+var _ error = new(ErrExistingTranslator)

+

+// ErrExistingTranslator is the error representing a conflicting translator

+type ErrExistingTranslator struct {

+ locale string

+}

+

+// Error returns ErrExistingTranslator's internal error text

+func (e *ErrExistingTranslator) Error() string {

+ return fmt.Sprintf("error: conflicting translator for locale '%s'", e.locale)

+}

+

+// ErrConflictingTranslation is the error representing a conflicting translation

+type ErrConflictingTranslation struct {

+ locale string

+ key interface{}

+ rule locales.PluralRule

+ text string

+}

+

+// Error returns ErrConflictingTranslation's internal error text

+func (e *ErrConflictingTranslation) Error() string {

+

+ if _, ok := e.key.(string); !ok {

+ return fmt.Sprintf("error: conflicting key '%#v' rule '%s' with text '%s' for locale '%s', value being ignored", e.key, e.rule, e.text, e.locale)

+ }

+

+ return fmt.Sprintf("error: conflicting key '%s' rule '%s' with text '%s' for locale '%s', value being ignored", e.key, e.rule, e.text, e.locale)

+}

+

+// ErrRangeTranslation is the error representing a range translation error

+type ErrRangeTranslation struct {

+ text string

+}

+

+// Error returns ErrRangeTranslation's internal error text

+func (e *ErrRangeTranslation) Error() string {

+ return e.text

+}

+

+// ErrOrdinalTranslation is the error representing an ordinal translation error

+type ErrOrdinalTranslation struct {

+ text string

+}

+

+// Error returns ErrOrdinalTranslation's internal error text

+func (e *ErrOrdinalTranslation) Error() string {

+ return e.text

+}

+

+// ErrCardinalTranslation is the error representing a cardinal translation error

+type ErrCardinalTranslation struct {

+ text string

+}

+

+// Error returns ErrCardinalTranslation's internal error text

+func (e *ErrCardinalTranslation) Error() string {

+ return e.text

+}

+

+// ErrMissingPluralTranslation is the error signifying a missing translation given

+// the locale's plural rules.

+type ErrMissingPluralTranslation struct {

+ locale string

+ key interface{}

+ rule locales.PluralRule

+ translationType string

+}

+

+// Error returns ErrMissingPluralTranslation's internal error text

+func (e *ErrMissingPluralTranslation) Error() string {

+

+ if _, ok := e.key.(string); !ok {

+ return fmt.Sprintf("error: missing '%s' plural rule '%s' for translation with key '%#v' and locale '%s'", e.translationType, e.rule, e.key, e.locale)

+ }

+

+ return fmt.Sprintf("error: missing '%s' plural rule '%s' for translation with key '%s' and locale '%s'", e.translationType, e.rule, e.key, e.locale)

+}

+

+// ErrMissingBracket is the error representing a missing bracket in a translation

+// eg. This is a {0 <-- missing ending '}'

+type ErrMissingBracket struct {

+ locale string

+ key interface{}

+ text string

+}

+

+// Error returns ErrMissingBracket's error message

+func (e *ErrMissingBracket) Error() string {

+ return fmt.Sprintf("error: missing bracket '{}', in translation. locale: '%s' key: '%v' text: '%s'", e.locale, e.key, e.text)

+}

+

+// ErrBadParamSyntax is the error representing a bad parameter definition in a translation

+// eg. This is a {must-be-int}

+type ErrBadParamSyntax struct {

+ locale string

+ param string

+ key interface{}

+ text string

+}

+

+// Error returns ErrBadParamSyntax's error message

+func (e *ErrBadParamSyntax) Error() string {

+ return fmt.Sprintf("error: bad parameter syntax, missing parameter '%s' in translation. locale: '%s' key: '%v' text: '%s'", e.param, e.locale, e.key, e.text)

+}

+

+// import/export errors

+