mirror of

https://github.com/crawlab-team/crawlab.git

synced 2026-01-22 17:31:03 +01:00

22

README-zh.md

@@ -97,49 +97,49 @@ For Docker deployment details, see the [relevant documentation](https://tikazyq.github.io/crawlab/I

#### Login

<img src="https://crawlab.oss-cn-hangzhou.aliyuncs.com/v0.3.0/login.png?v0.3.0">

|

||||

|

||||

|

||||

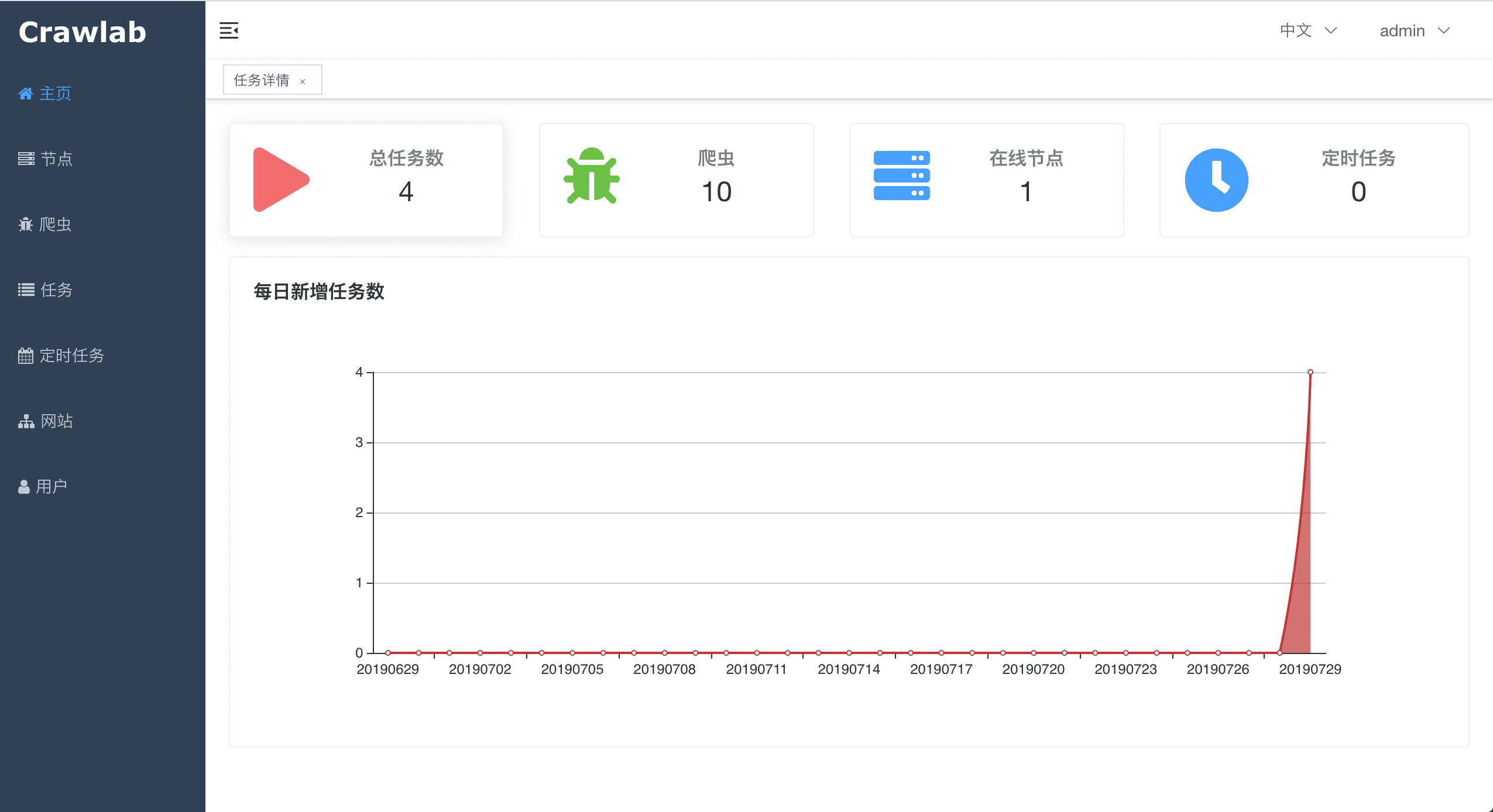

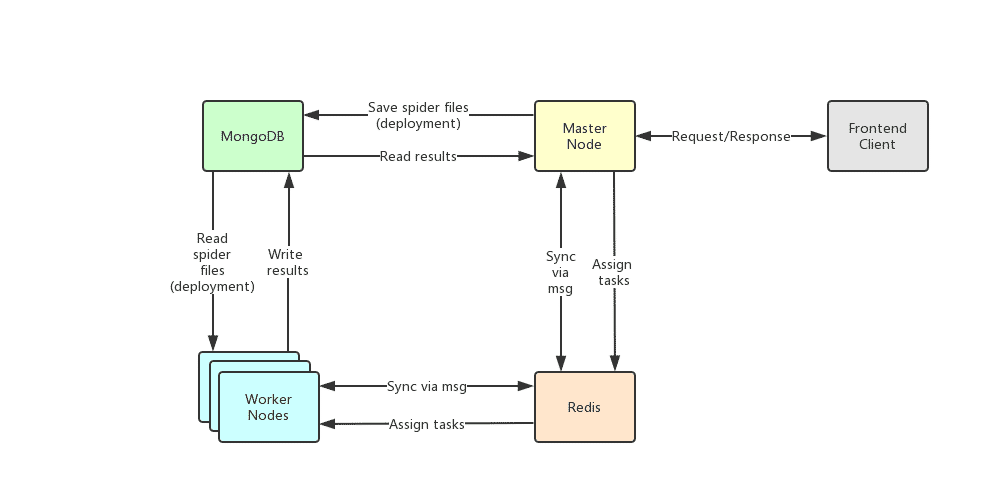

#### 首页

|

||||

|

||||

<img src="https://crawlab.oss-cn-hangzhou.aliyuncs.com/v0.3.0/home.png?v0.3.0">

|

||||

|

||||

|

||||

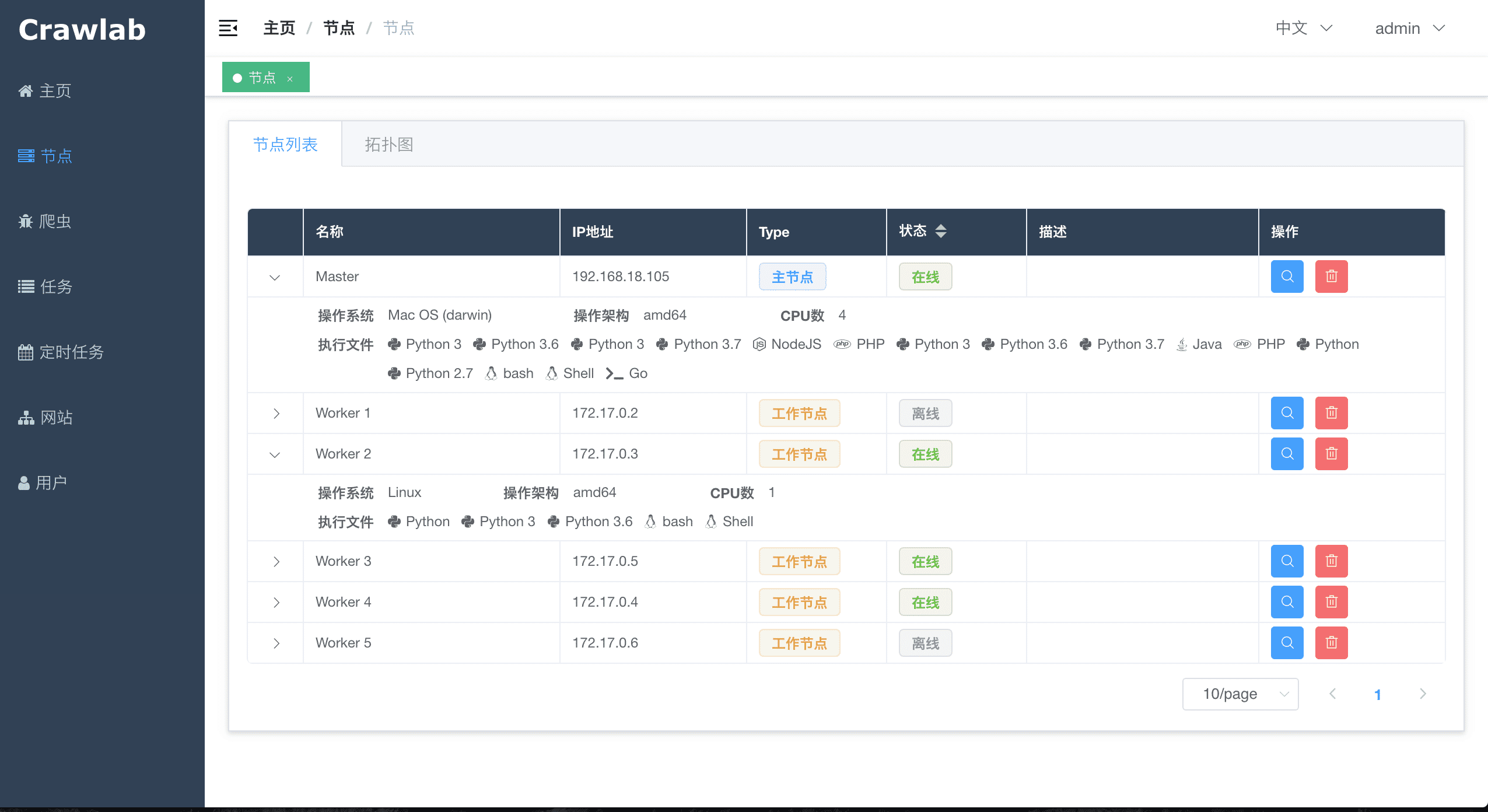

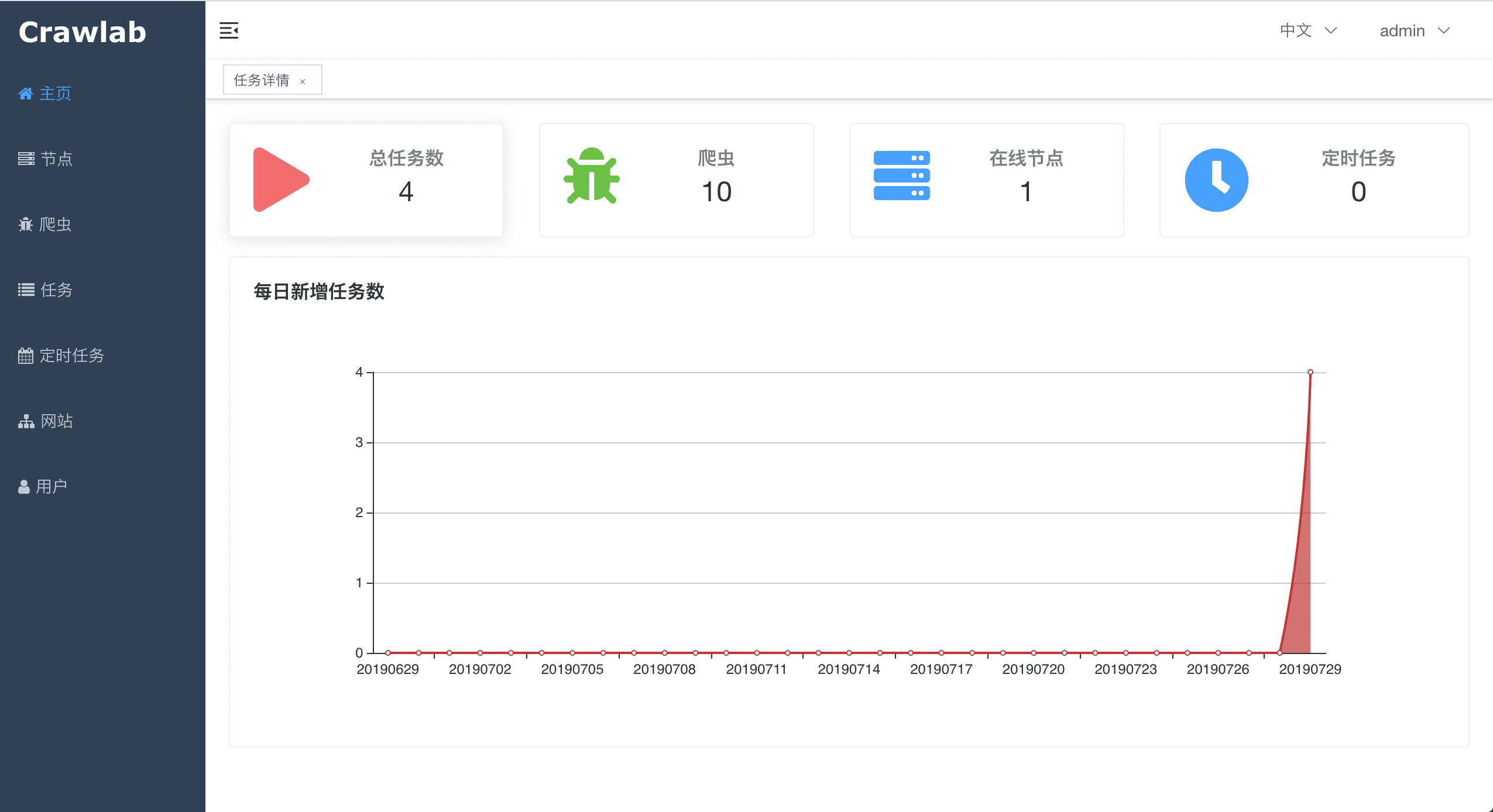

#### 节点列表

|

||||

|

||||

<img src="https://crawlab.oss-cn-hangzhou.aliyuncs.com/v0.3.0/node-list.png?v0.3.0">

|

||||

|

||||

|

||||

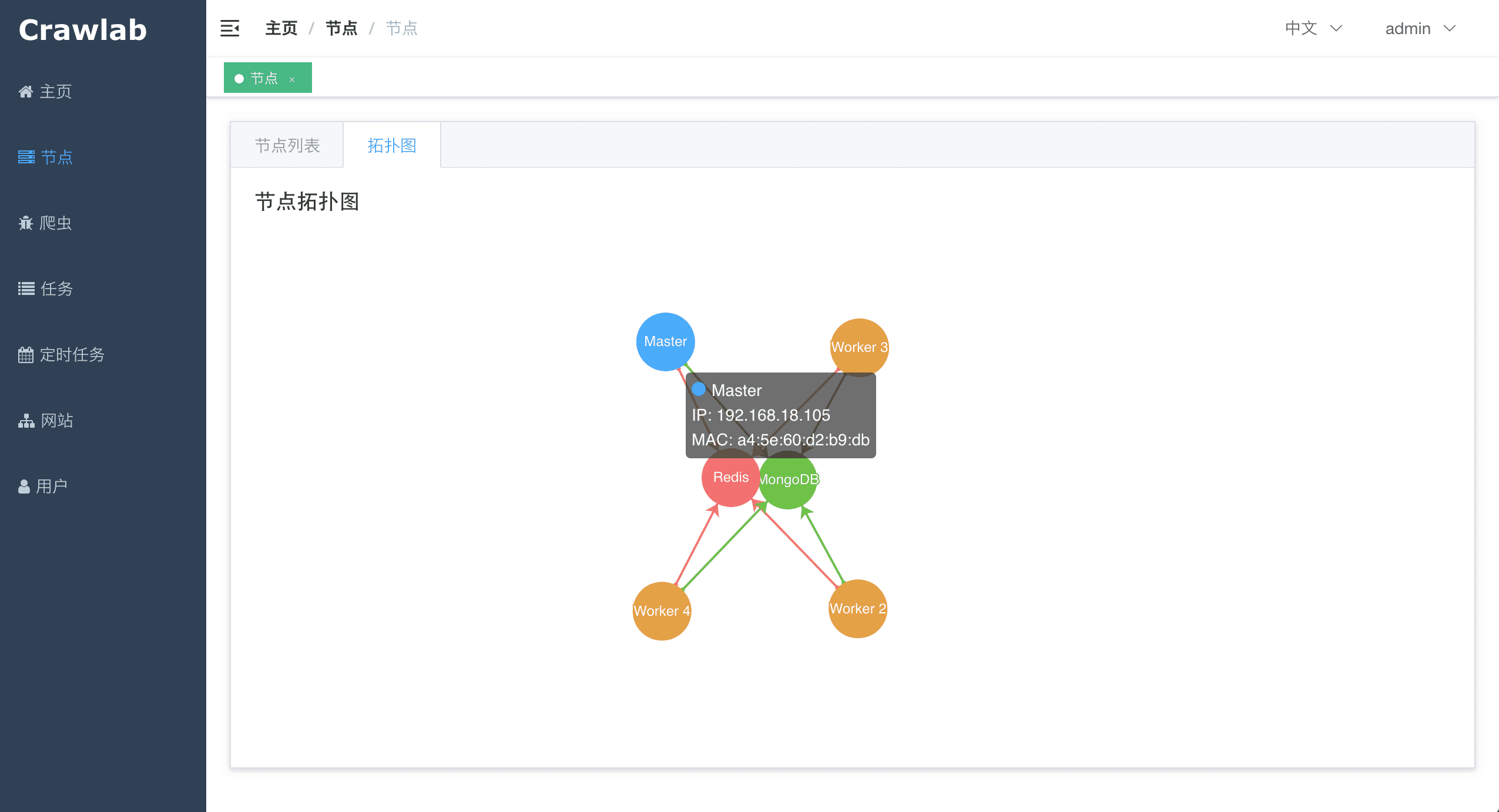

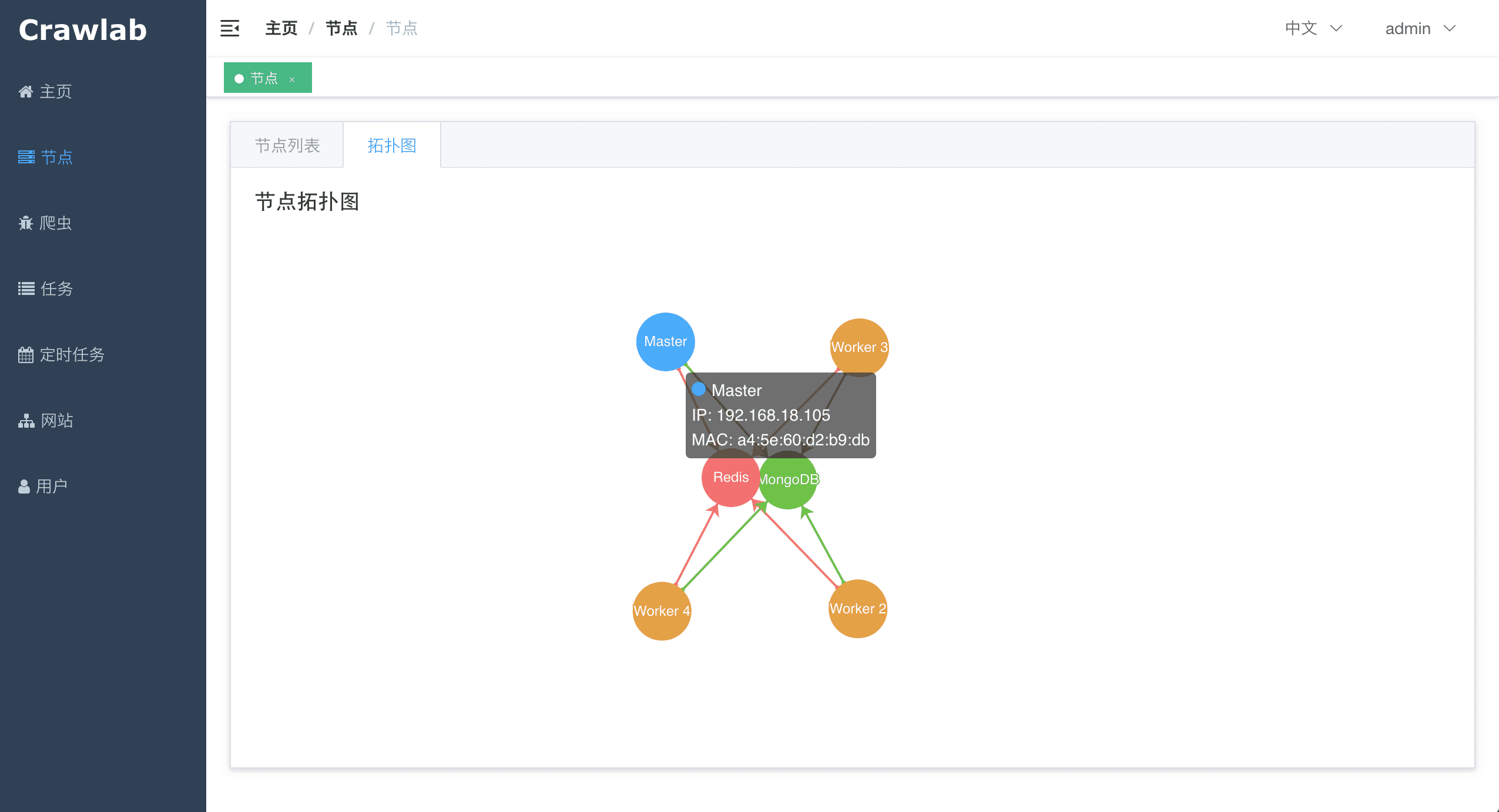

#### 节点拓扑图

|

||||

|

||||

<img src="https://crawlab.oss-cn-hangzhou.aliyuncs.com/v0.3.0/node-network.png?v0.3.0">

|

||||

|

||||

|

||||

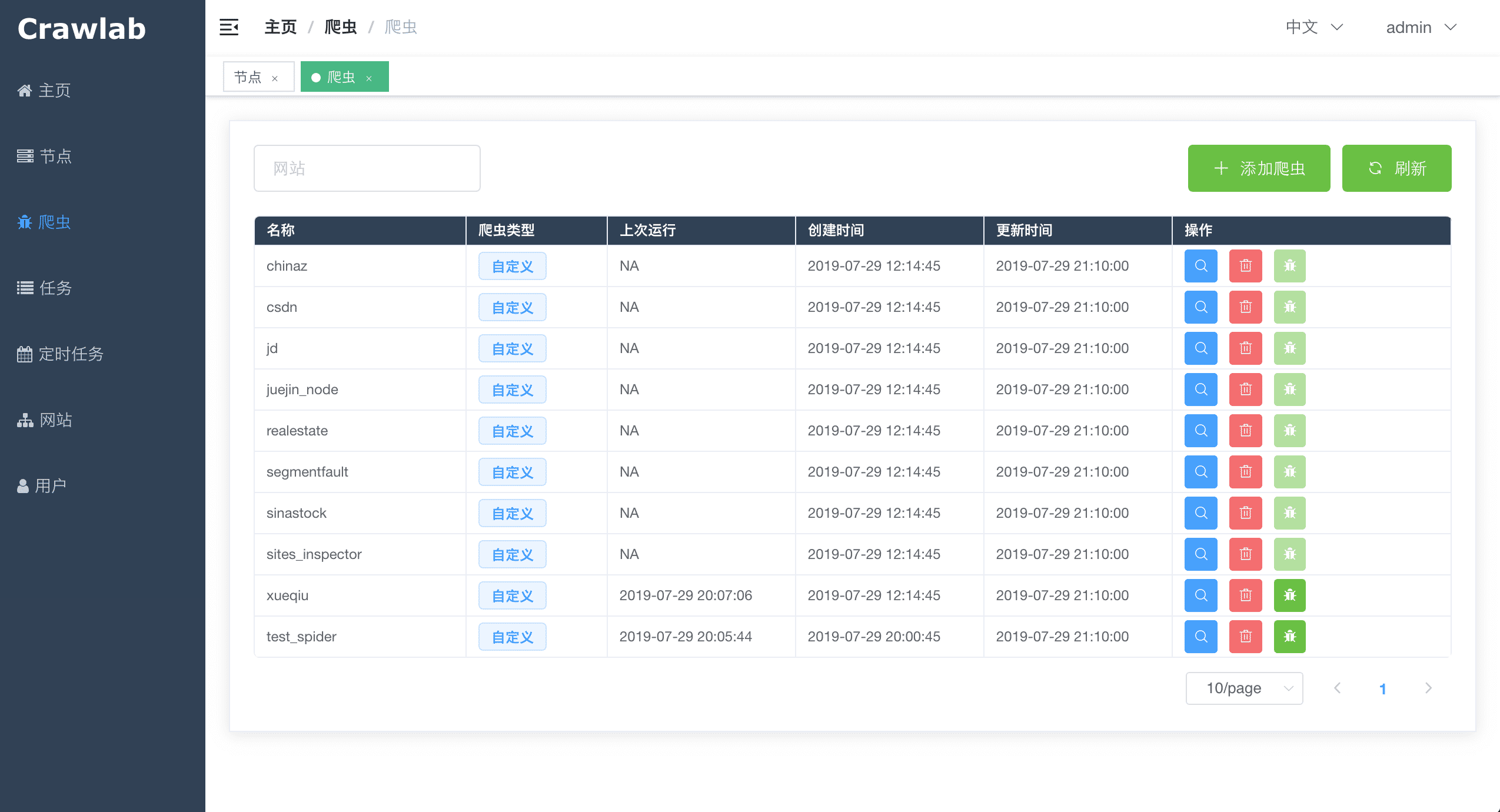

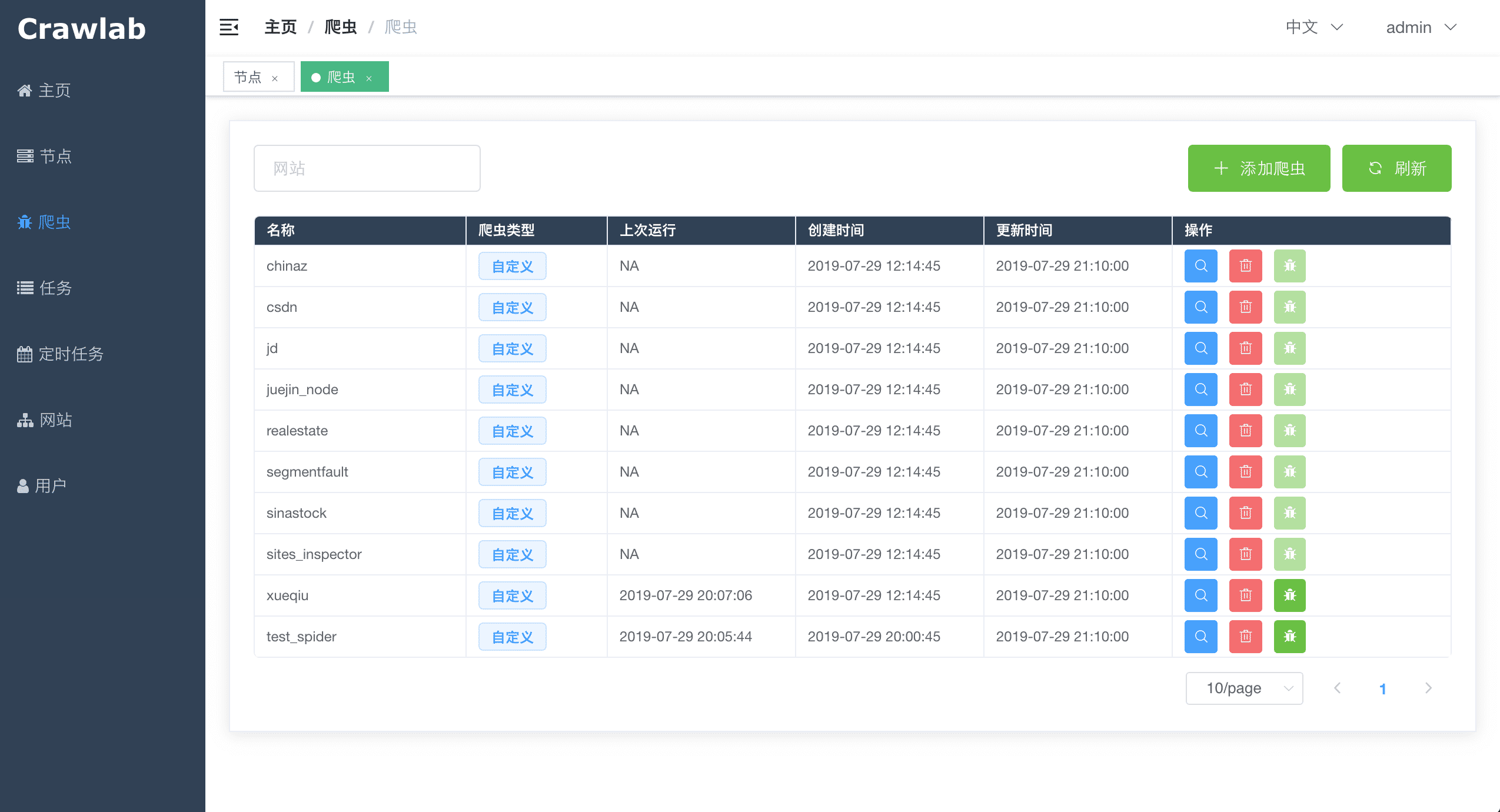

#### 爬虫列表

|

||||

|

||||

<img src="https://crawlab.oss-cn-hangzhou.aliyuncs.com/v0.3.0/spider-list.png?v0.3.0">

|

||||

|

||||

|

||||

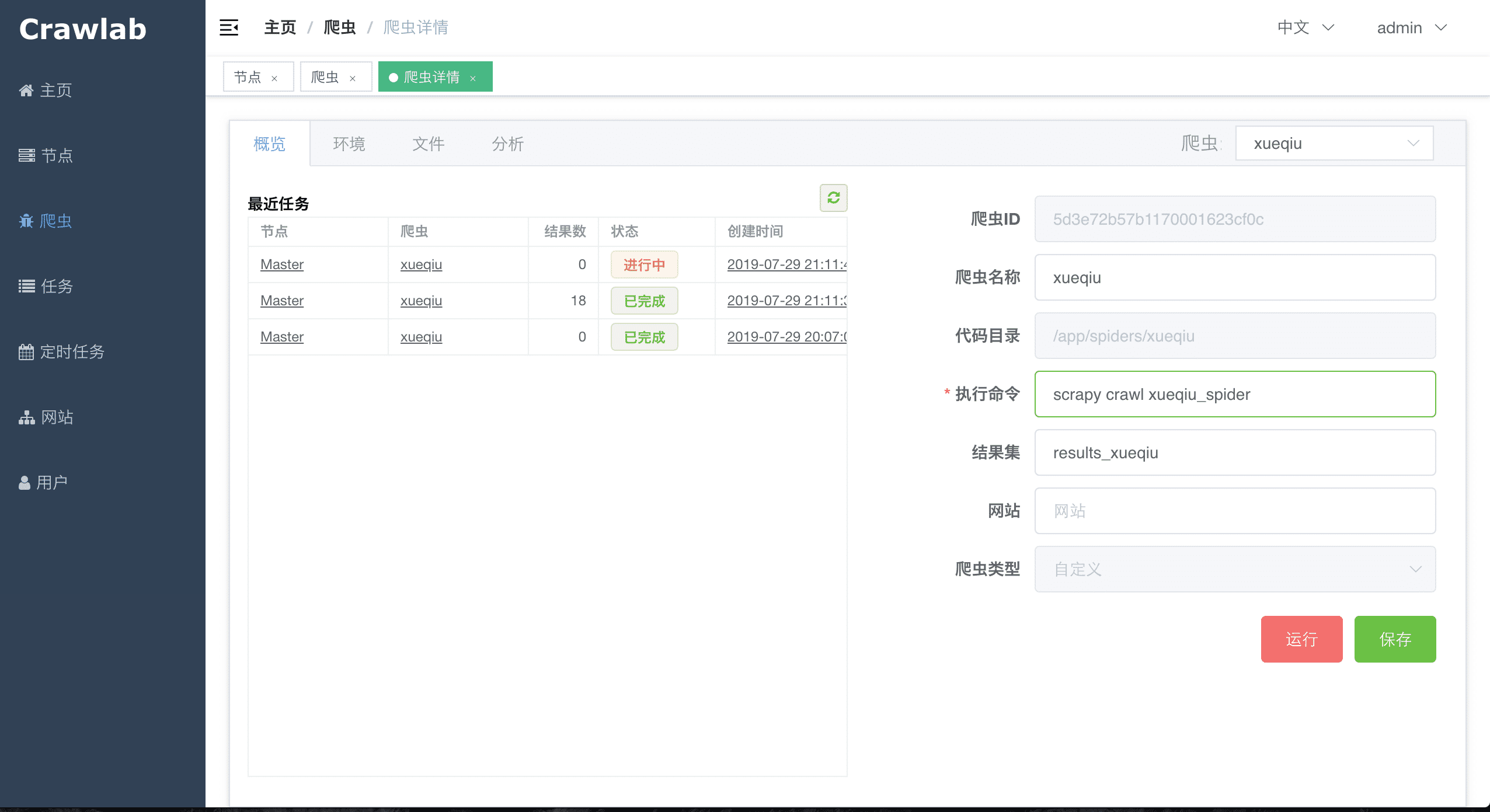

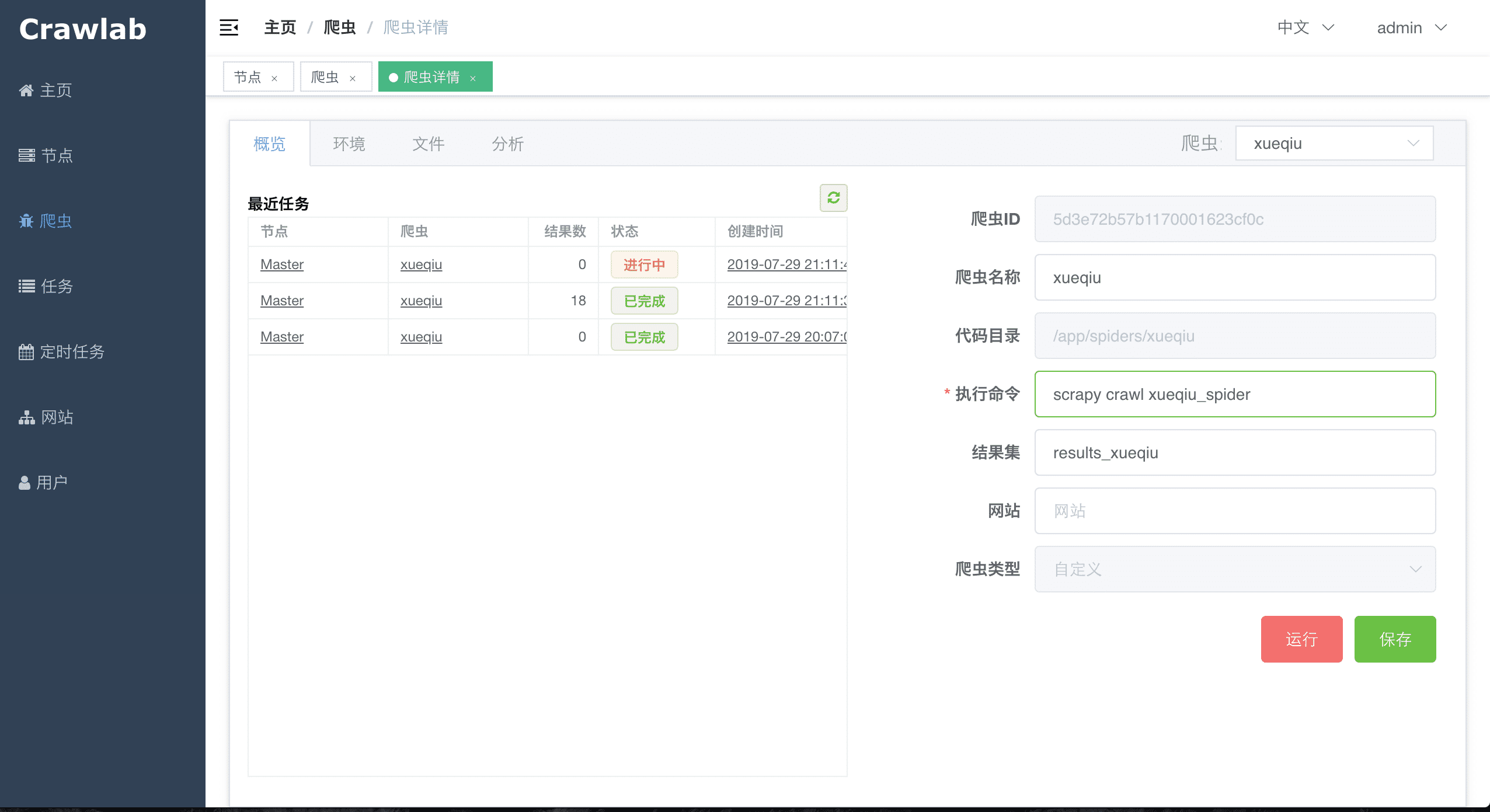

#### 爬虫概览

|

||||

|

||||

<img src="https://crawlab.oss-cn-hangzhou.aliyuncs.com/v0.3.0/spider-overview.png?v0.3.0">

|

||||

|

||||

|

||||

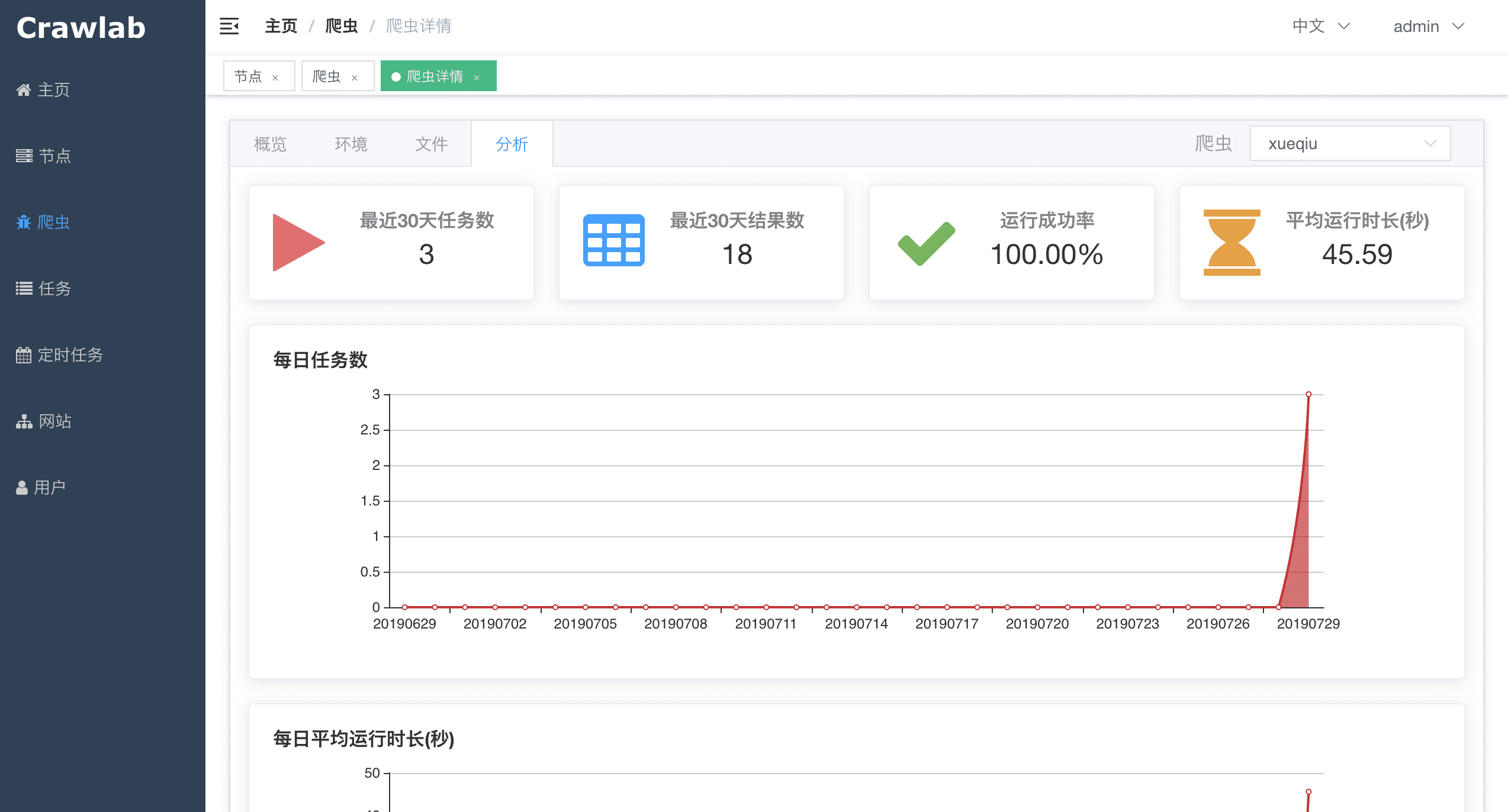

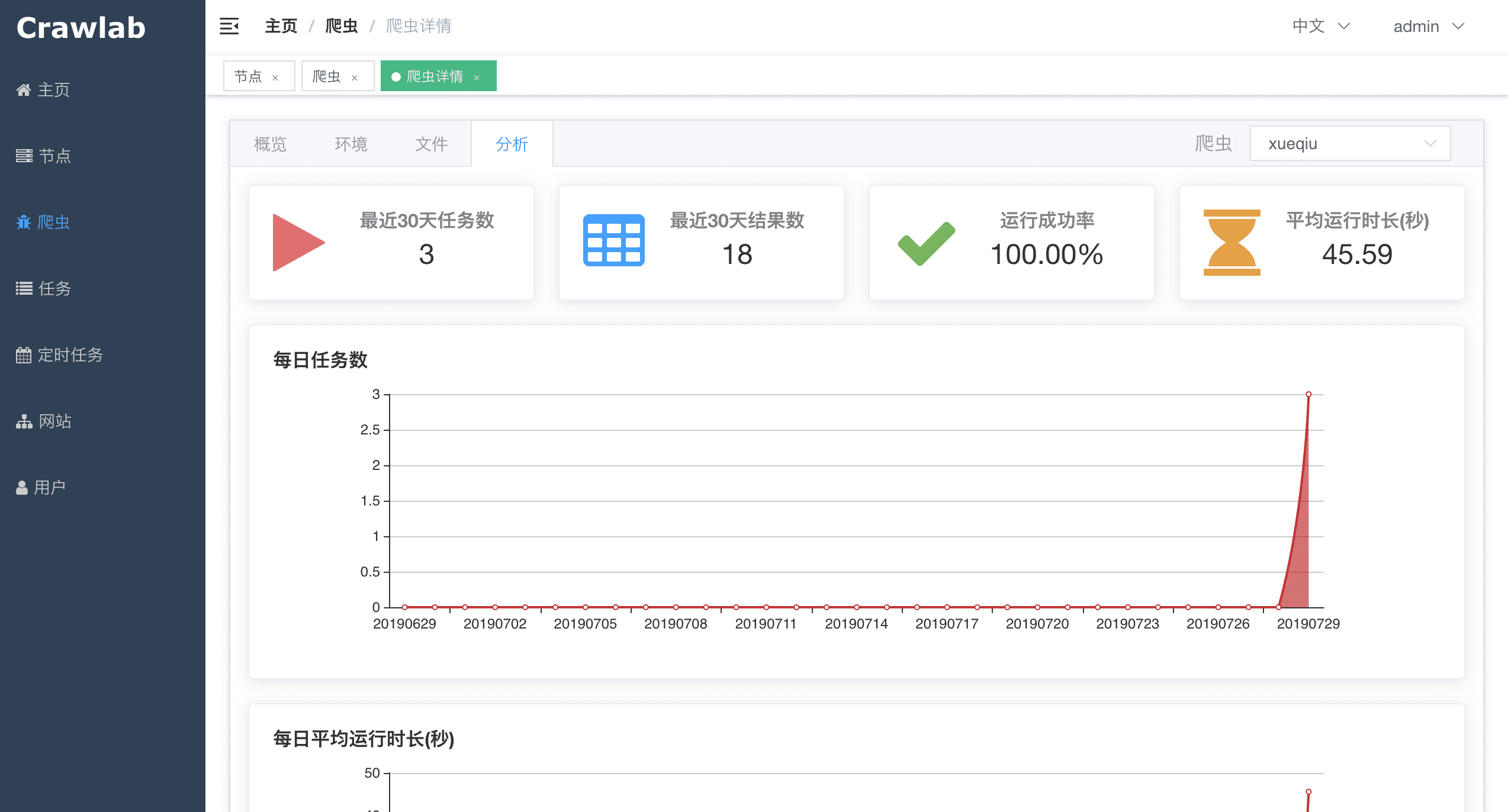

#### 爬虫分析

|

||||

|

||||

<img src="https://crawlab.oss-cn-hangzhou.aliyuncs.com/v0.3.0/spider-analytics.png?v0.3.0">

|

||||

|

||||

|

||||

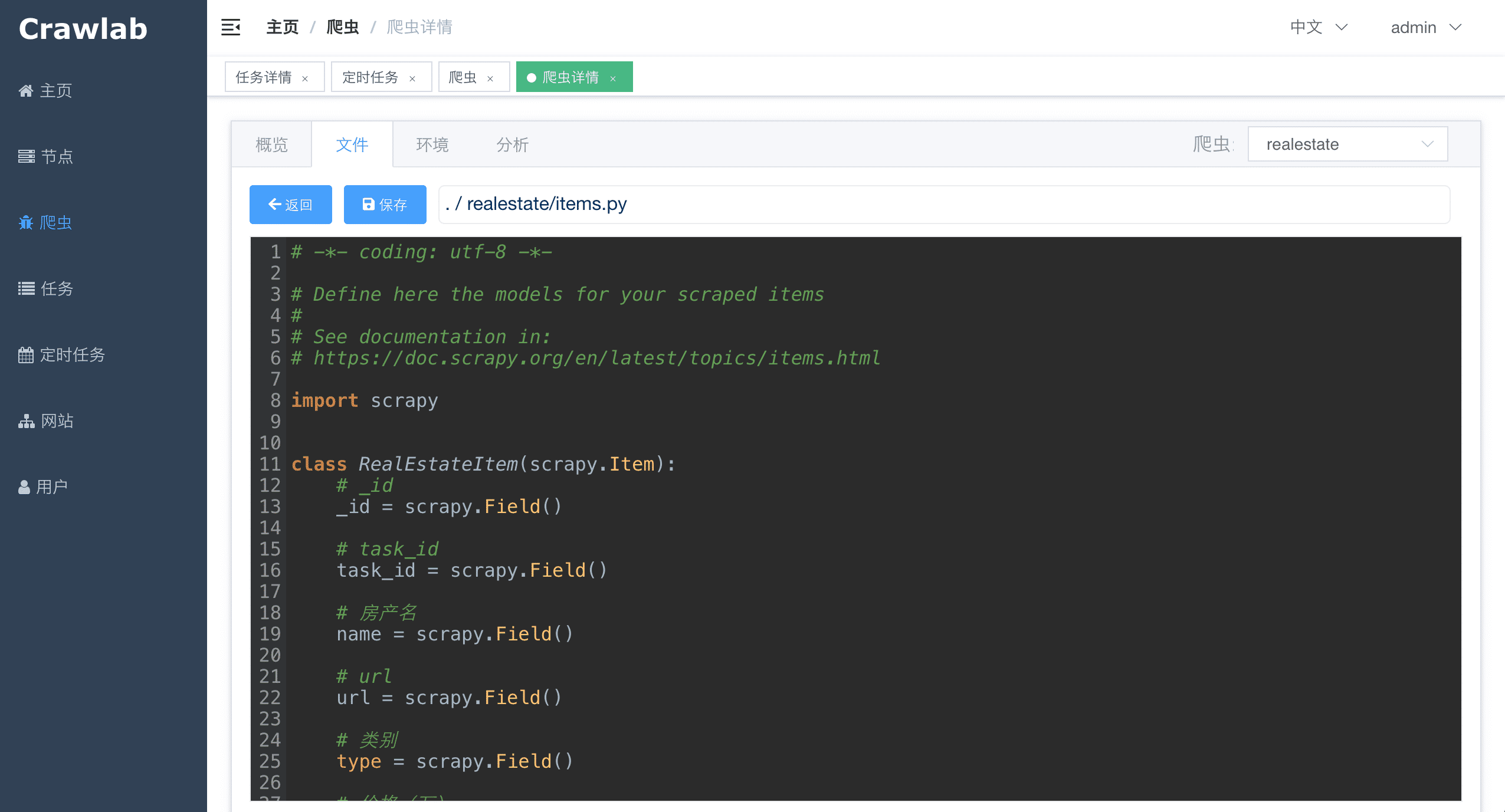

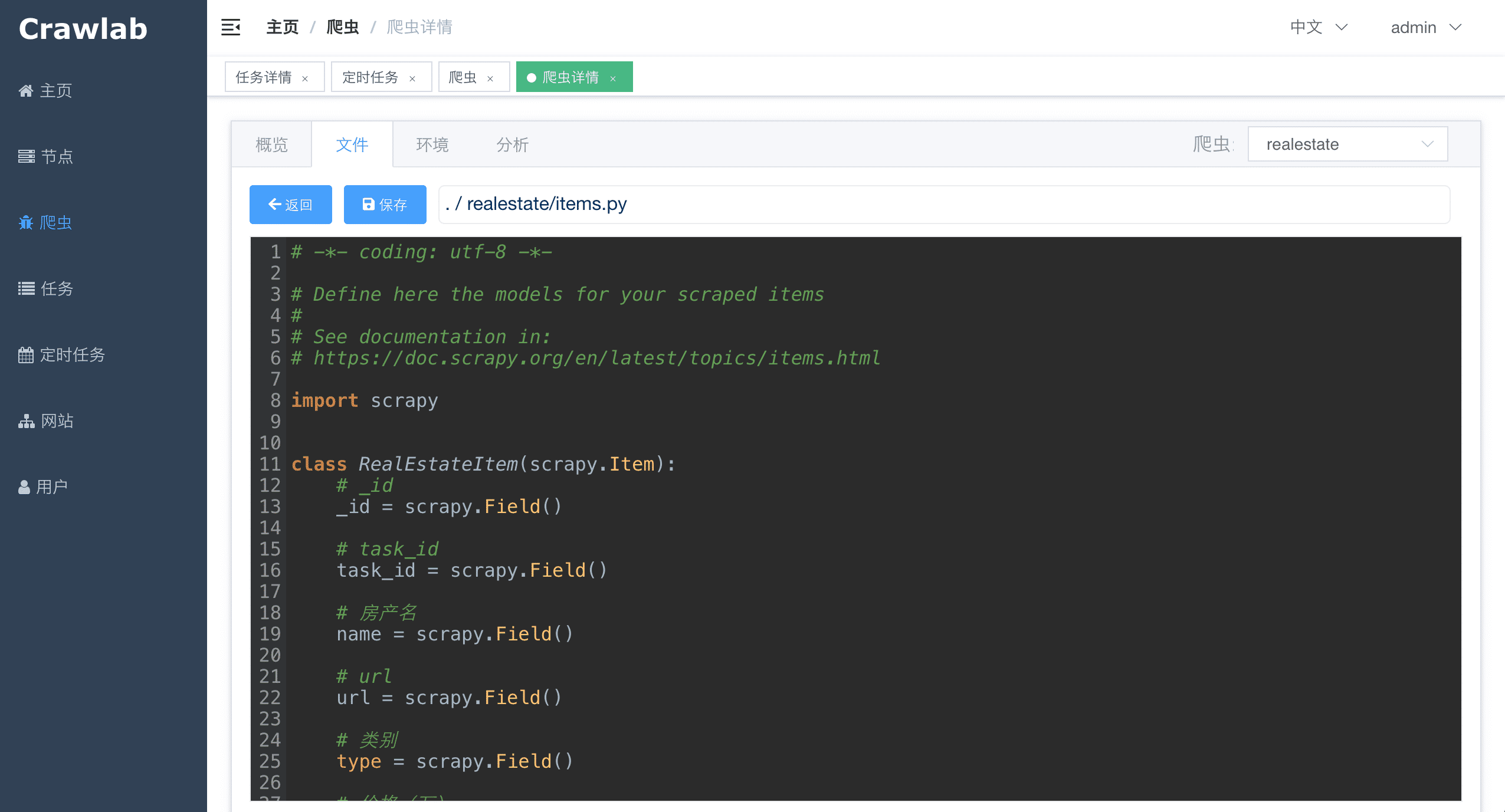

#### 爬虫文件

|

||||

|

||||

<img src="https://crawlab.oss-cn-hangzhou.aliyuncs.com/v0.3.0/spider-file.png?v0.3.0">

#### Task Details - Crawl Results

<img src="https://crawlab.oss-cn-hangzhou.aliyuncs.com/v0.3.0/task-results.png?v0.3.0_1">

|

||||

|

||||

|

||||

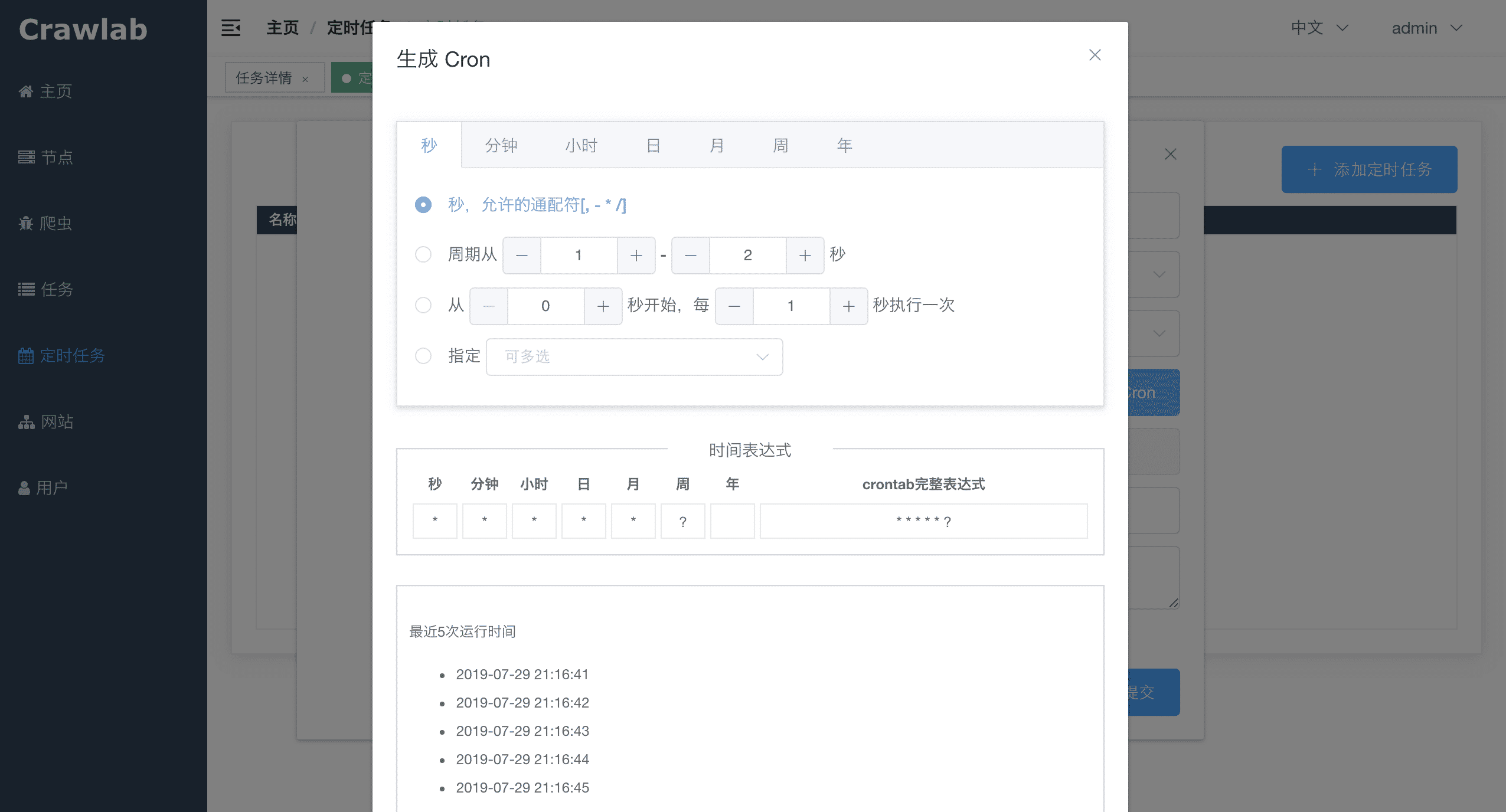

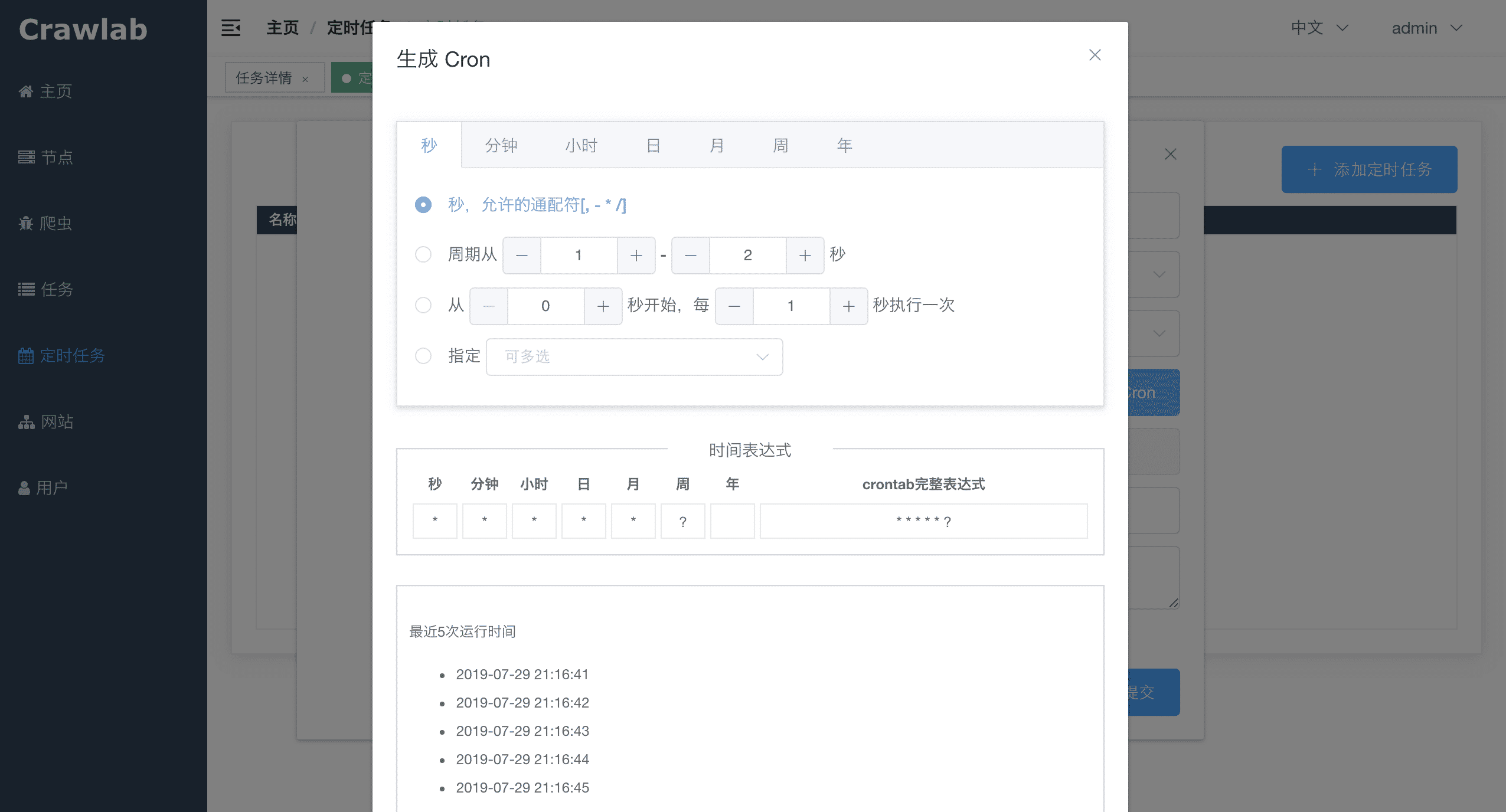

#### 定时任务

|

||||

|

||||

<img src="https://crawlab.oss-cn-hangzhou.aliyuncs.com/v0.3.0/schedule.png?v0.3.0">

|

||||

|

||||

|

||||

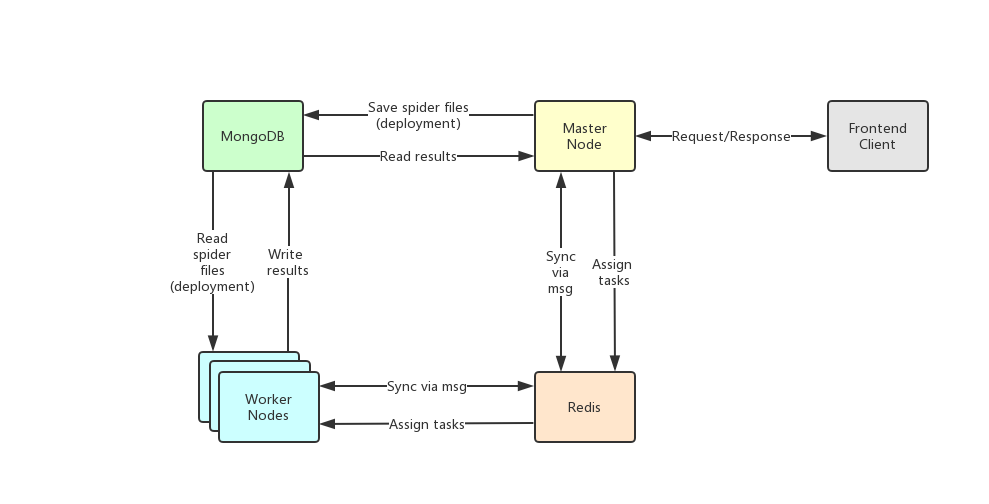

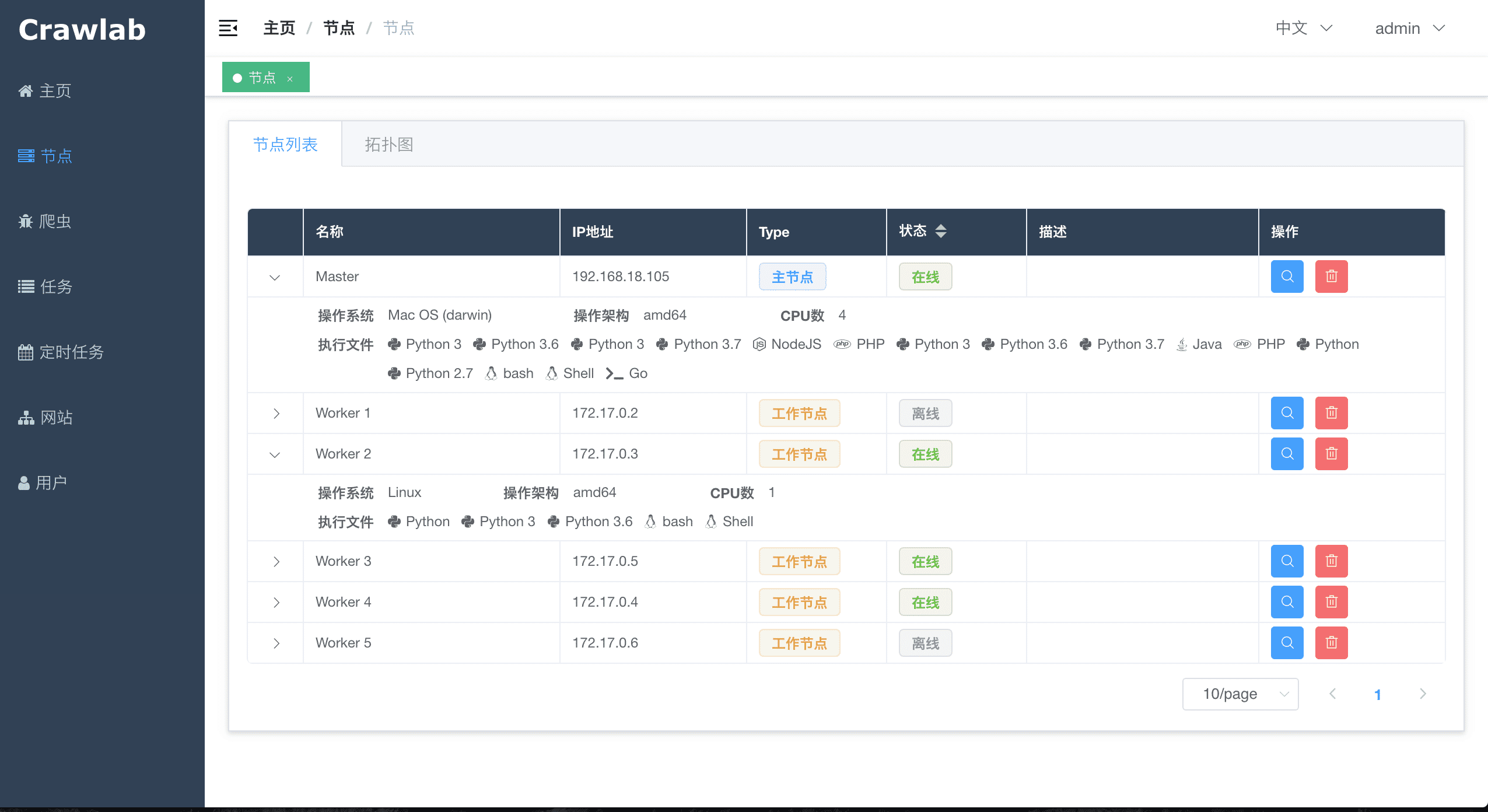

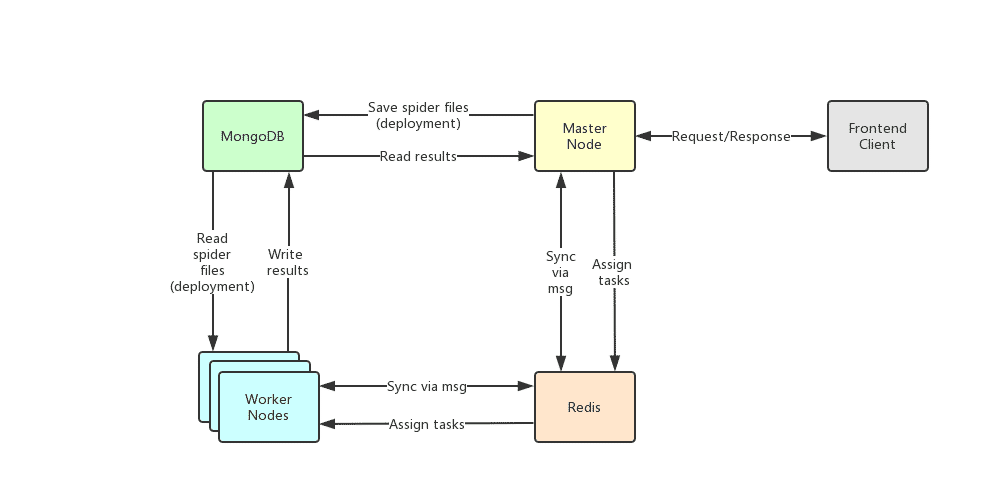

## 架构

|

||||

|

||||

Crawlab's architecture consists of one Master Node and multiple Worker Nodes, plus Redis and MongoDB, which handle communication and data storage.

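The frontend app requests data from the Master Node, which uses MongoDB and Redis to dispatch, schedule, and deploy tasks. Once a Worker Node receives a task, it runs the spider and stores the results in MongoDB. Compared with the Celery-based versions before `v0.3.0`, the architecture is leaner: the unnecessary node-monitoring module Flower has been removed, and node monitoring is now handled mainly by Redis.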
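The task flow described above can be illustrated with a small, self-contained Python sketch. It is not Crawlab's implementation: `FakeRedis`, `FakeMongo`, `MasterNode`, `WorkerNode`, and `demo` are hypothetical names, both stores are in-memory stand-ins, and the round-robin assignment is an assumption made only for illustration.

```python
from collections import deque


class FakeRedis:
    """In-memory stand-in for Redis: one FIFO task queue per node (assumption)."""

    def __init__(self):
        self.queues = {}

    def push(self, node_id, task):
        self.queues.setdefault(node_id, deque()).append(task)

    def pop(self, node_id):
        queue = self.queues.get(node_id)
        return queue.popleft() if queue else None


class FakeMongo:
    """In-memory stand-in for MongoDB: a flat list of result documents."""

    def __init__(self):
        self.results = []


class MasterNode:
    def __init__(self, redis, worker_ids):
        self.redis = redis
        self.worker_ids = worker_ids
        self._next = 0

    def dispatch(self, spider_name):
        # Round-robin assignment is an assumption of this sketch.
        node_id = self.worker_ids[self._next % len(self.worker_ids)]
        self._next += 1
        self.redis.push(node_id, {"spider": spider_name})
        return node_id


class WorkerNode:
    def __init__(self, node_id, redis, mongo):
        self.node_id = node_id
        self.redis = redis
        self.mongo = mongo

    def run_once(self):
        task = self.redis.pop(self.node_id)
        if task is None:
            return False
        # A real worker would run the spider here; the sketch stores a placeholder.
        self.mongo.results.append(
            {"node": self.node_id, "spider": task["spider"], "status": "finished"}
        )
        return True


def demo():
    redis, mongo = FakeRedis(), FakeMongo()
    master = MasterNode(redis, ["worker-1", "worker-2"])
    workers = [WorkerNode(node_id, redis, mongo) for node_id in master.worker_ids]
    for name in ("spider-a", "spider-b", "spider-c"):
        master.dispatch(name)
    for worker in workers:
        while worker.run_once():
            pass
    return mongo.results
```

Calling `demo()` dispatches three tasks across two workers and returns the stored result documents. In a real deployment the queue and the result store would be actual Redis and MongoDB instances.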

22

README.md

@@ -95,49 +95,49 @@ For Docker Deployment details, please refer to [relevant documentation](https://

#### Login

<img src="https://crawlab.oss-cn-hangzhou.aliyuncs.com/v0.3.0/login.png?v0.3.0">

#### Home Page

<img src="https://crawlab.oss-cn-hangzhou.aliyuncs.com/v0.3.0/home.png?v0.3.0">

#### Node List

<img src="https://crawlab.oss-cn-hangzhou.aliyuncs.com/v0.3.0/node-list.png?v0.3.0">

#### Node Network

<img src="https://crawlab.oss-cn-hangzhou.aliyuncs.com/v0.3.0/node-network.png?v0.3.0">

#### Spider List

<img src="https://crawlab.oss-cn-hangzhou.aliyuncs.com/v0.3.0/spider-list.png?v0.3.0">

#### Spider Overview

<img src="https://crawlab.oss-cn-hangzhou.aliyuncs.com/v0.3.0/spider-overview.png?v0.3.0">

#### Spider Analytics

<img src="https://crawlab.oss-cn-hangzhou.aliyuncs.com/v0.3.0/spider-analytics.png?v0.3.0">

#### Spider Files

<img src="https://crawlab.oss-cn-hangzhou.aliyuncs.com/v0.3.0/spider-file.png?v0.3.0">

#### Task Results

<img src="https://crawlab.oss-cn-hangzhou.aliyuncs.com/v0.3.0/task-results.png?v0.3.0_1">

#### Cron Job

<img src="https://crawlab.oss-cn-hangzhou.aliyuncs.com/v0.3.0/schedule.png?v0.3.0">

## Architecture

Crawlab's architecture consists of a Master Node and multiple Worker Nodes, along with Redis and MongoDB, which are used mainly for node communication and data storage.

<img src="https://crawlab.oss-cn-hangzhou.aliyuncs.com/v0.3.0/architecture.png">

The frontend app makes requests to the Master Node, which assigns tasks and deploys spiders through MongoDB and Redis. When a Worker Node receives a task, it executes the crawling task and stores the results in MongoDB. The architecture is much leaner than in versions before `v0.3.0`: the unnecessary Flower module, which provided node monitoring, has been removed, and node monitoring is now handled by Redis.
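The README does not say how node monitoring is done in Redis. One common pattern is a heartbeat with a time-to-live: each node periodically refreshes a key, and a node whose key has gone stale is treated as offline. The Python sketch below illustrates that assumed scheme with an in-memory stand-in; `HeartbeatStore`, `beat`, and `online_nodes` are hypothetical names, not Crawlab APIs.

```python
class HeartbeatStore:
    """In-memory stand-in for Redis keys with a time-to-live (assumed scheme)."""

    def __init__(self, ttl_seconds):
        self.ttl_seconds = ttl_seconds
        self._last_seen = {}

    def beat(self, node_id, now):
        # A node calls this periodically; with real Redis this would refresh a key's expiry.
        self._last_seen[node_id] = now

    def online_nodes(self, now):
        # A node counts as online if its last heartbeat is within the TTL window.
        return sorted(
            node_id
            for node_id, seen in self._last_seen.items()
            if now - seen <= self.ttl_seconds
        )
```

Timestamps are passed in explicitly so the behaviour is deterministic. For example, with a 60-second TTL, a node that last reported at `t=0` no longer appears in `online_nodes(70)`, while one that reported at `t=50` still does.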
