mirror of https://github.com/crawlab-team/crawlab.git, synced 2026-01-21 17:21:09 +01:00

Merge branch 'master' of https://github.com/tikazyq/crawlab


15

Jenkinsfile

vendored


@@ -12,11 +12,13 @@ pipeline {

script {
if (env.GIT_BRANCH == 'develop') {
env.MODE = 'develop'
env.TAG = 'develop'
env.BASE_URL = '/dev'
} else if (env.GIT_BRANCH == 'master') {
env.MODE = 'production'
} else {
env.MODE = 'test'
}
env.TAG = 'master'
env.BASE_URL = '/demo'
}
}
}
}
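Read together with its line counts, the hunk above appears to drop the `test` fallback and attach a `TAG` and `BASE_URL` to each of the two named branches. The mapping can be restated as a minimal Python sketch under that assumption; `BRANCH_SETTINGS` and `settings_for_branch` are hypothetical names used only for illustration and are not part of the Jenkinsfile.

```python
from typing import Optional

# Hypothetical restatement of the branch-to-environment mapping in the hunk.
# The values are copied from the diff; the names and dict shape are illustrative.
BRANCH_SETTINGS = {
    "develop": {"MODE": "develop", "TAG": "develop", "BASE_URL": "/dev"},
    "master": {"MODE": "production", "TAG": "master", "BASE_URL": "/demo"},
}


def settings_for_branch(branch: str) -> Optional[dict]:
    """Return a copy of the env values for a branch, or None if it is not configured."""
    settings = BRANCH_SETTINGS.get(branch)
    return dict(settings) if settings is not None else None


if __name__ == "__main__":
    print(settings_for_branch("develop"))
```

Returning `None` for other branches mirrors the assumed removal of the fallback: the later `docker build` and deploy steps would then have no `TAG` to work with on such branches.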

@@ -24,7 +26,7 @@ pipeline {

steps {
echo "Building..."
sh """
docker build -t tikazyq/crawlab:latest -f Dockerfile.local .
docker build -t tikazyq/crawlab:${ENV:TAG} -f Dockerfile.local .
"""
}
}

@@ -37,7 +39,10 @@ pipeline {

steps {
echo 'Deploying....'
sh """
cd ./jenkins
echo ${ENV:GIT_BRANCH}
"""
sh """
cd ./jenkins/${ENV:GIT_BRANCH}
docker-compose stop | true
docker-compose up -d
"""

61

README-zh.md


@@ -1,6 +1,6 @@

# Crawlab
<a href="https://github.com/tikazyq/crawlab/blob/master/LICENSE" target="_blank">
<img src="https://img.shields.io/badge/License-BSD-blue.svg">

@@ -12,13 +12,13 @@

基于Golang的分布式爬虫管理平台,支持Python、NodeJS、Go、Java、PHP等多种编程语言以及多种爬虫框架。
[查看演示 Demo](http://114.67.75.98:8080) | [文档](https://tikazyq.github.io/crawlab-docs)
[查看演示 Demo](http://crawlab.cn/demo) | [文档](https://tikazyq.github.io/crawlab-docs)
## 安装
三种方式:
1. [Docker](https://tikazyq.github.io/crawlab/Installation/Docker.md)(推荐)
2. [直接部署](https://tikazyq.github.io/crawlab/Installation/Direct.md)(了解内核)
1. [Docker](https://tikazyq.github.io/crawlab/Installation/Docker.html)(推荐)
2. [直接部署](https://tikazyq.github.io/crawlab/Installation/Direct.html)(了解内核)
### 要求(Docker)
- Docker 18.03+

@@ -52,7 +52,7 @@ docker run -d --rm --name crawlab \

当然也可以用`docker-compose`来一键启动,甚至不用配置MongoDB和Redis数据库,**当然我们推荐这样做**。在当前目录中创建`docker-compose.yml`文件,输入以下内容。
```bash
```yaml
version: '3.3'
services:
master:

@@ -87,59 +87,59 @@ services:

|

||||

docker-compose up

|

||||

```

|

||||

|

||||

Docker部署的详情,请见[相关文档](https://tikazyq.github.io/crawlab/Installation/Docker.md)。

|

||||

Docker部署的详情,请见[相关文档](https://tikazyq.github.io/crawlab/Installation/Docker.html)。

|

||||

|

||||

### 直接部署

|

||||

|

||||

请参考[相关文档](https://tikazyq.github.io/crawlab/Installation/Direct.md)。

|

||||

请参考[相关文档](https://tikazyq.github.io/crawlab/Installation/Direct.html)。

|

||||

|

||||

## 截图

|

||||

|

||||

#### 登录

|

||||

|

||||

<img src="https://crawlab.oss-cn-hangzhou.aliyuncs.com/v0.3.0/login.png?v0.3.0">

|

||||

|

||||

|

||||

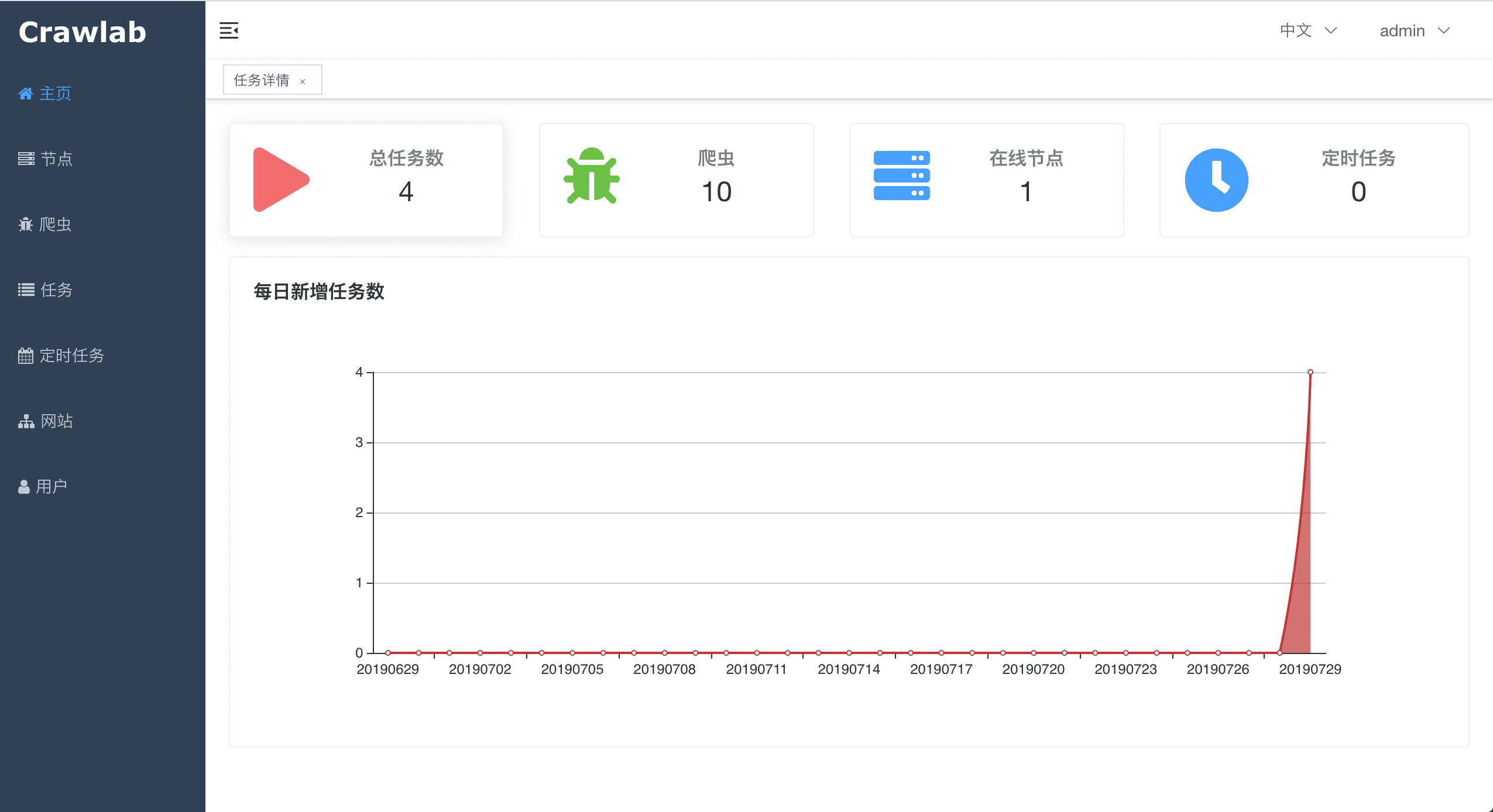

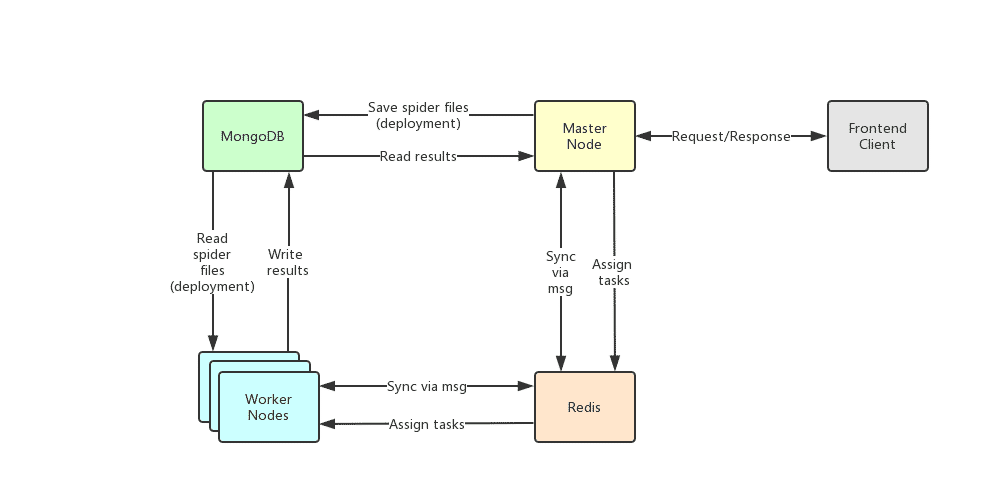

#### 首页

|

||||

|

||||

<img src="https://crawlab.oss-cn-hangzhou.aliyuncs.com/v0.3.0/home.png?v0.3.0">

|

||||

|

||||

|

||||

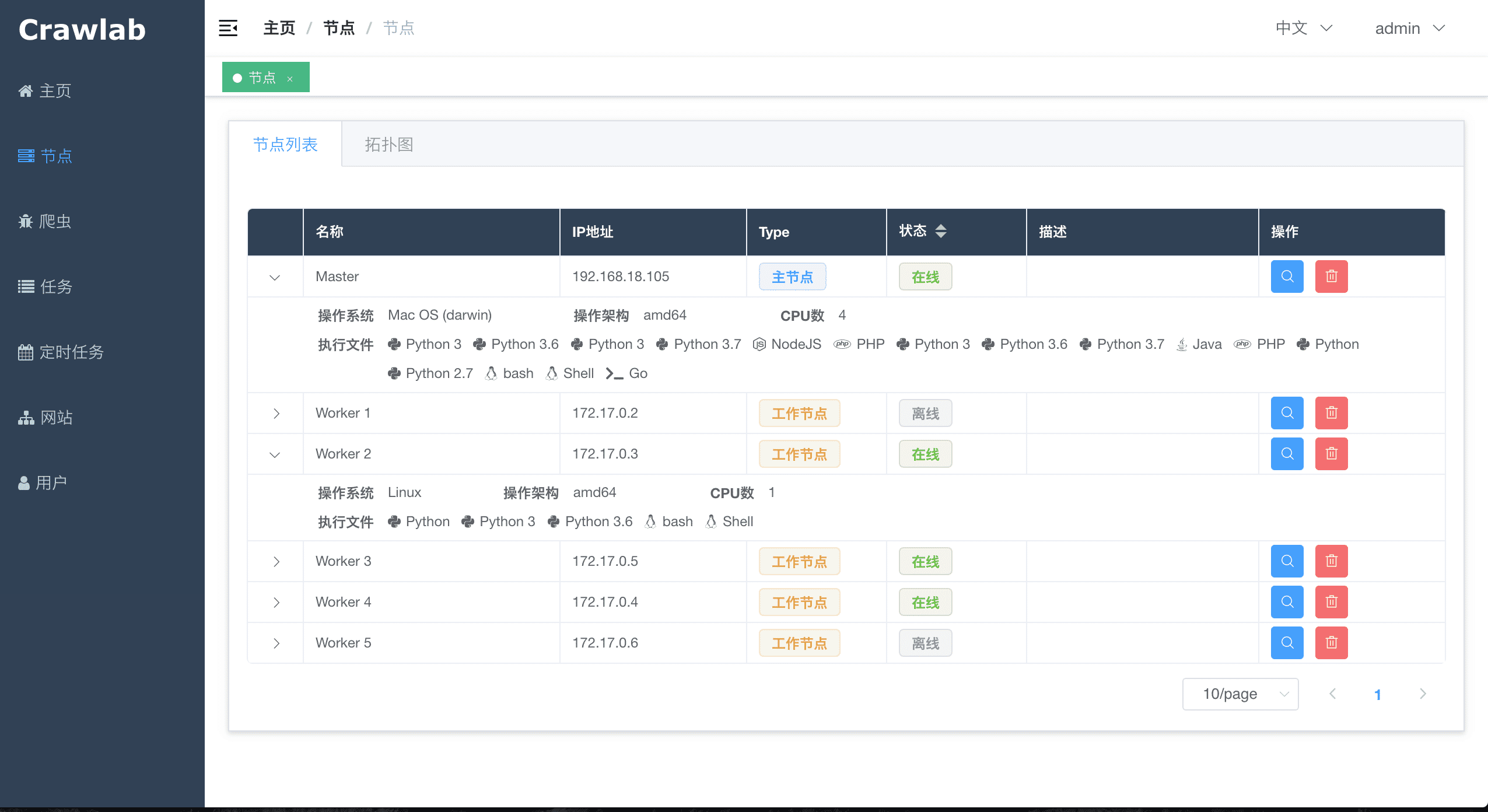

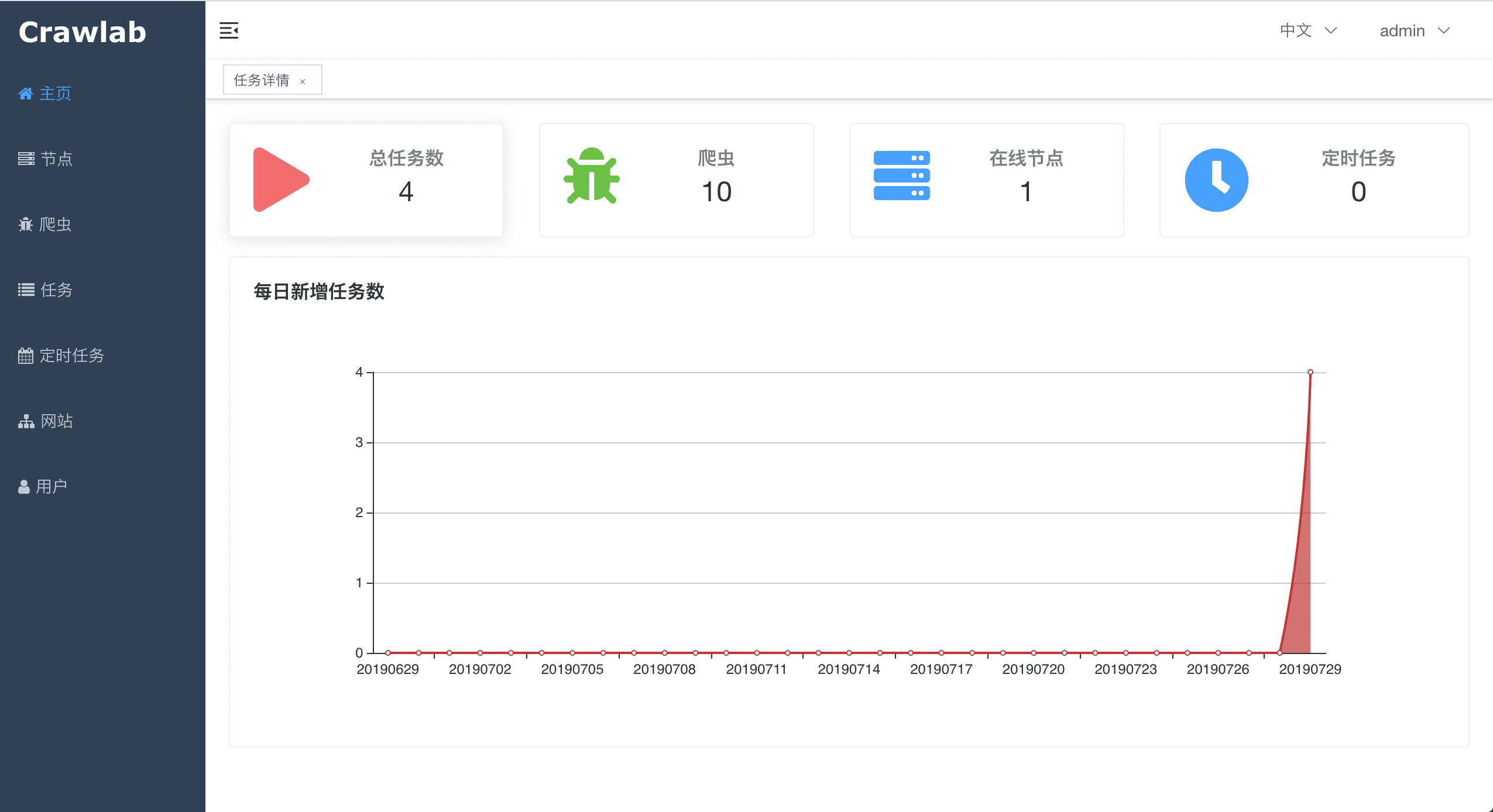

#### 节点列表

|

||||

|

||||

<img src="https://crawlab.oss-cn-hangzhou.aliyuncs.com/v0.3.0/node-list.png?v0.3.0">

|

||||

|

||||

|

||||

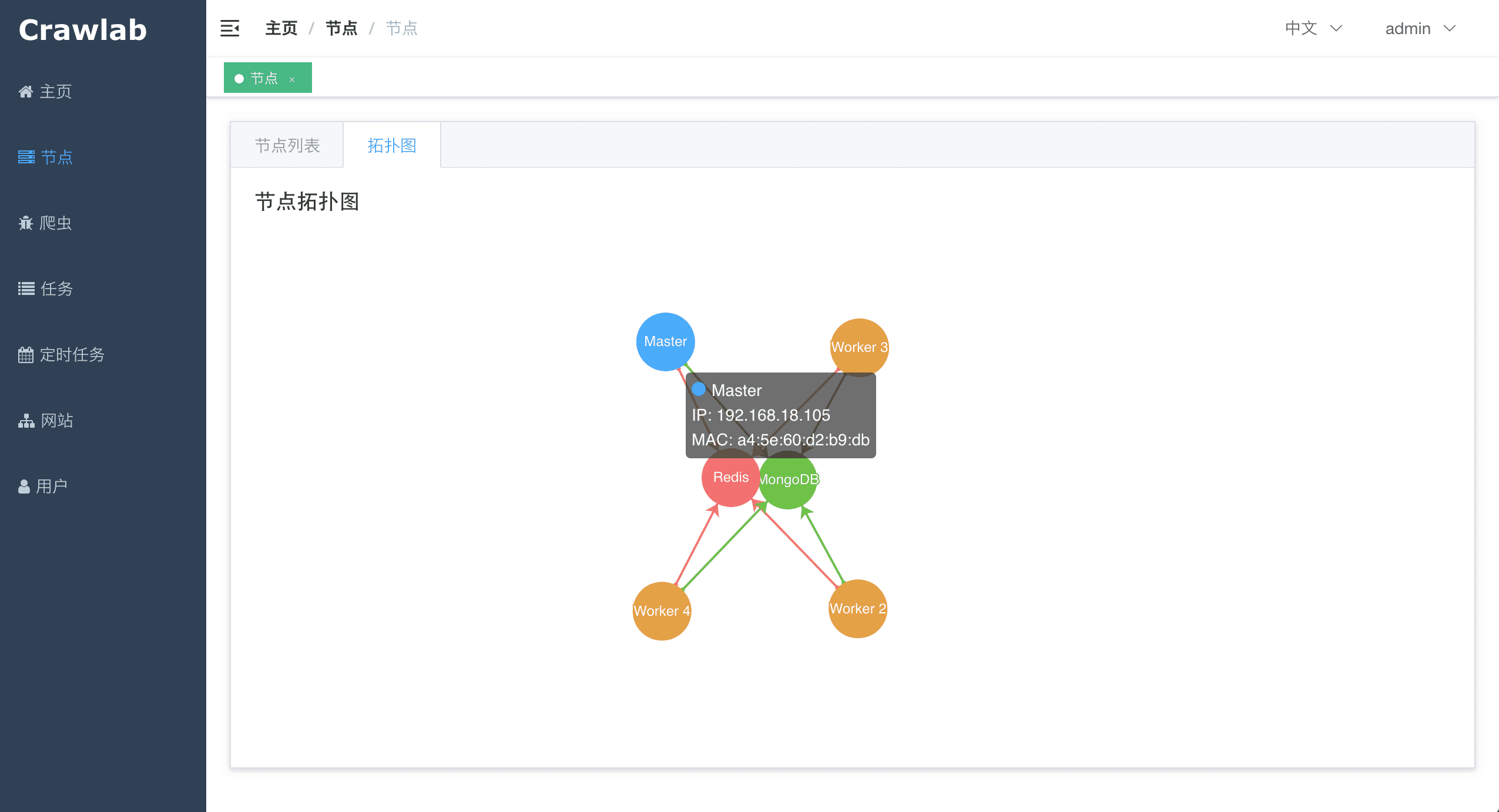

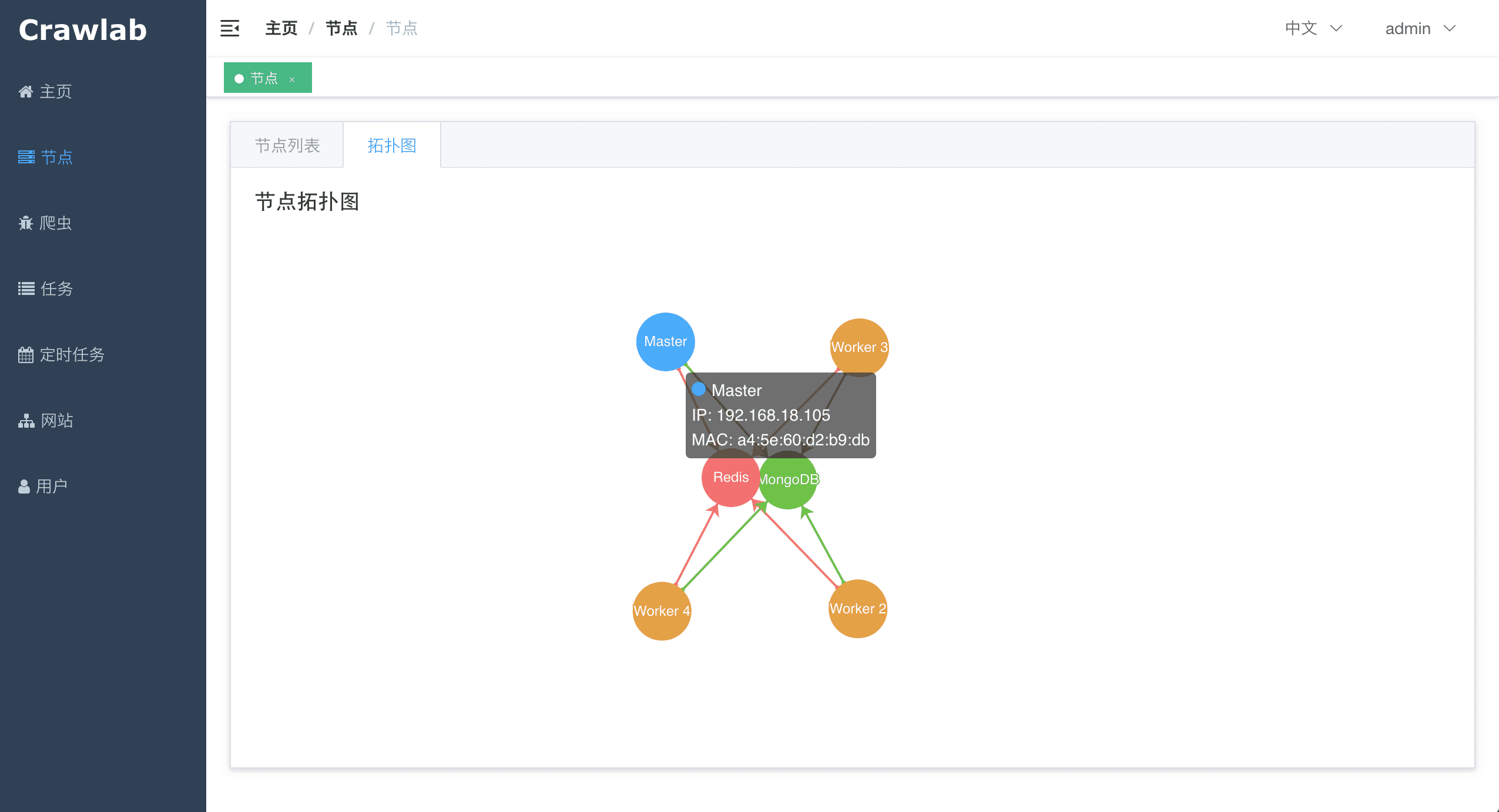

#### 节点拓扑图

|

||||

|

||||

<img src="https://crawlab.oss-cn-hangzhou.aliyuncs.com/v0.3.0/node-network.png?v0.3.0">

|

||||

|

||||

|

||||

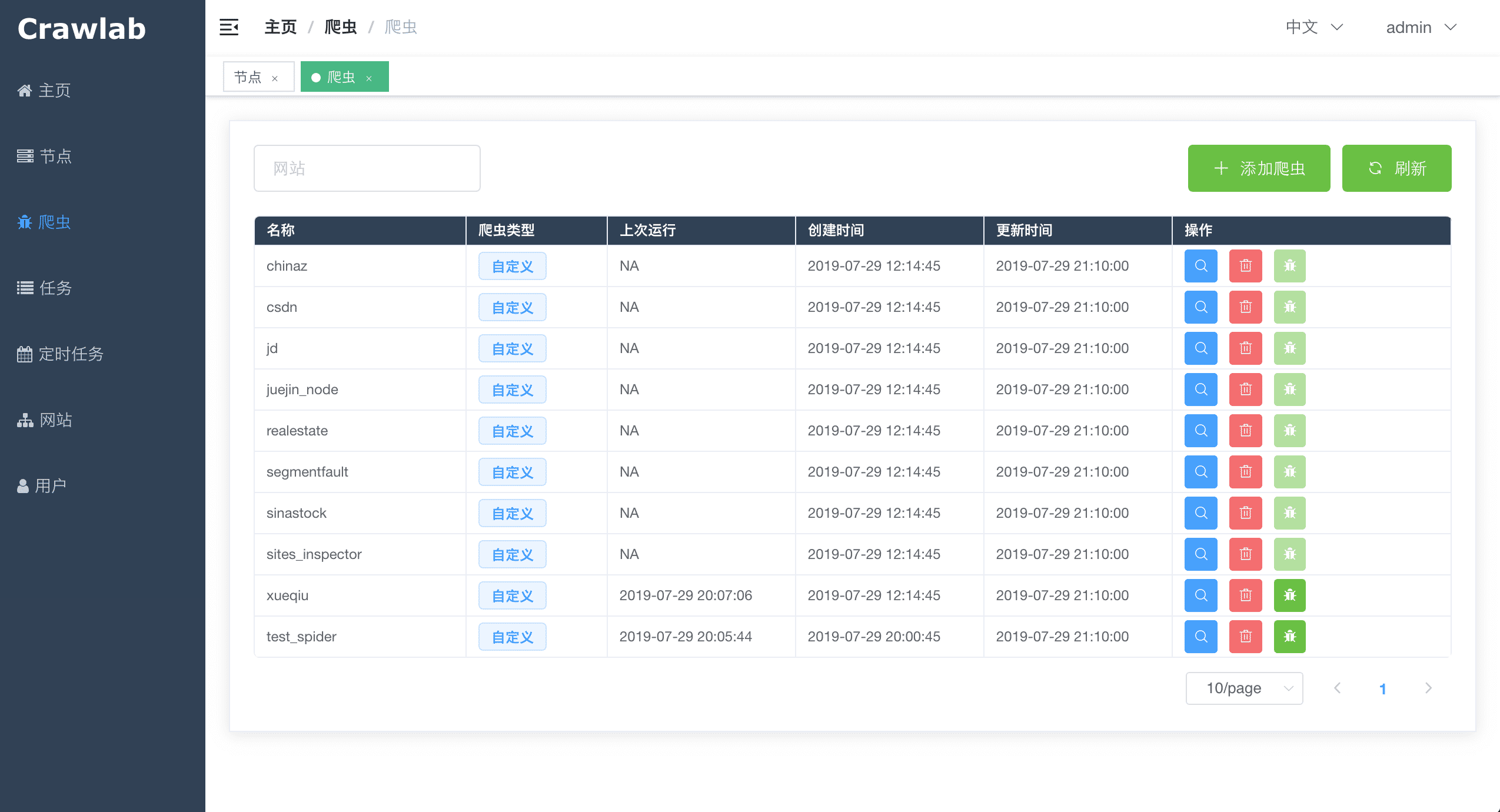

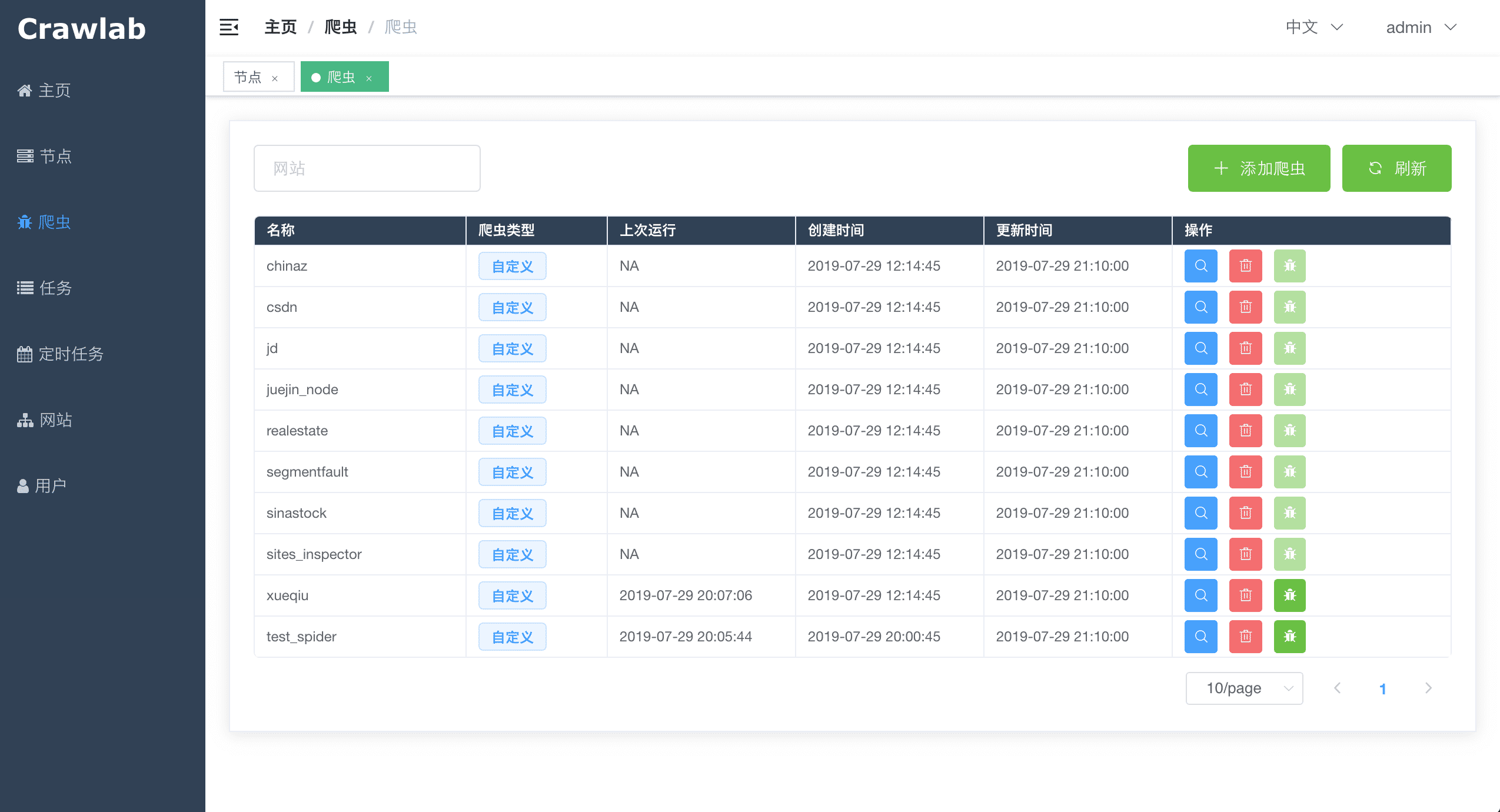

#### 爬虫列表

|

||||

|

||||

<img src="https://crawlab.oss-cn-hangzhou.aliyuncs.com/v0.3.0/spider-list.png?v0.3.0">

|

||||

|

||||

|

||||

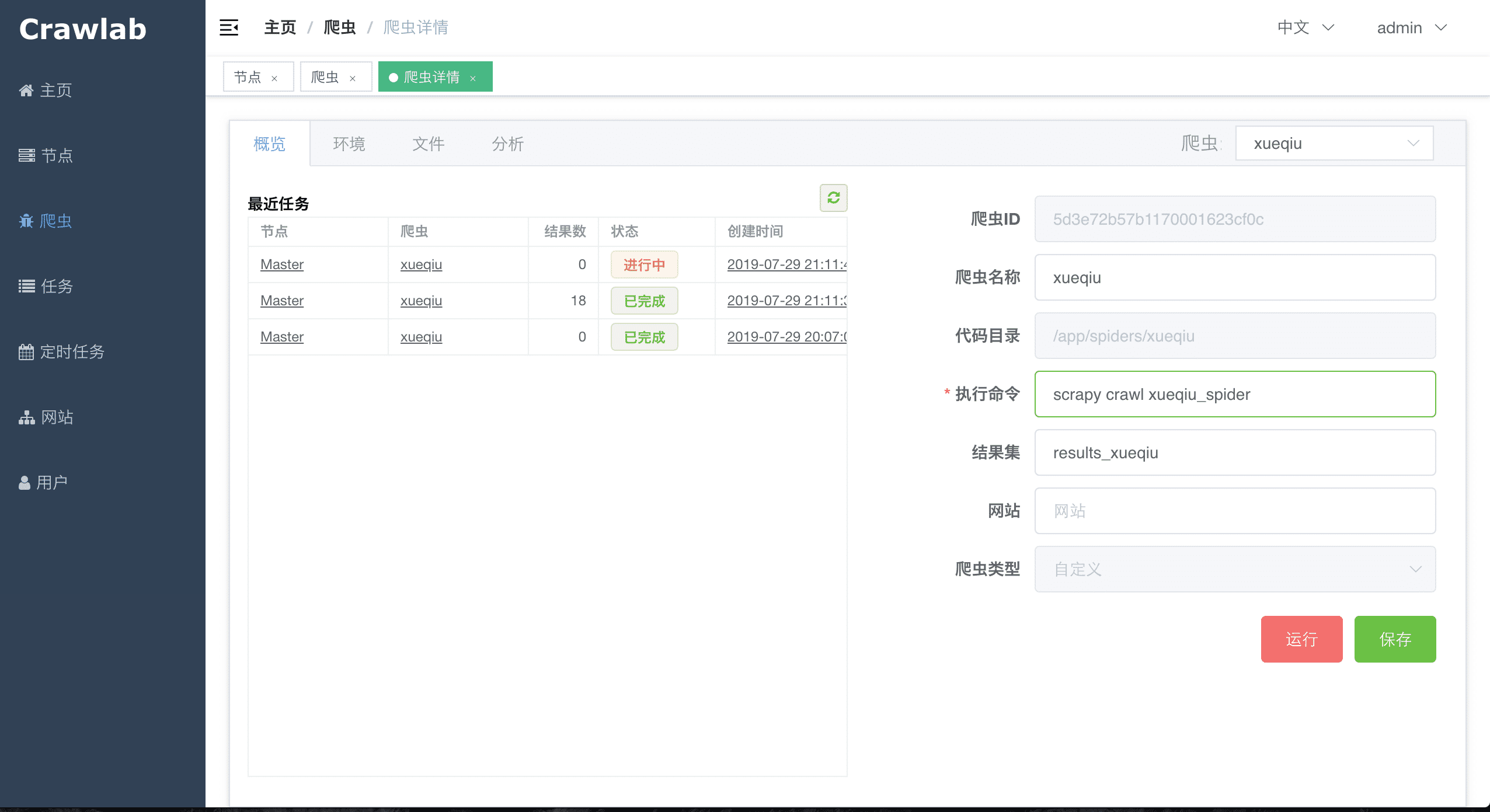

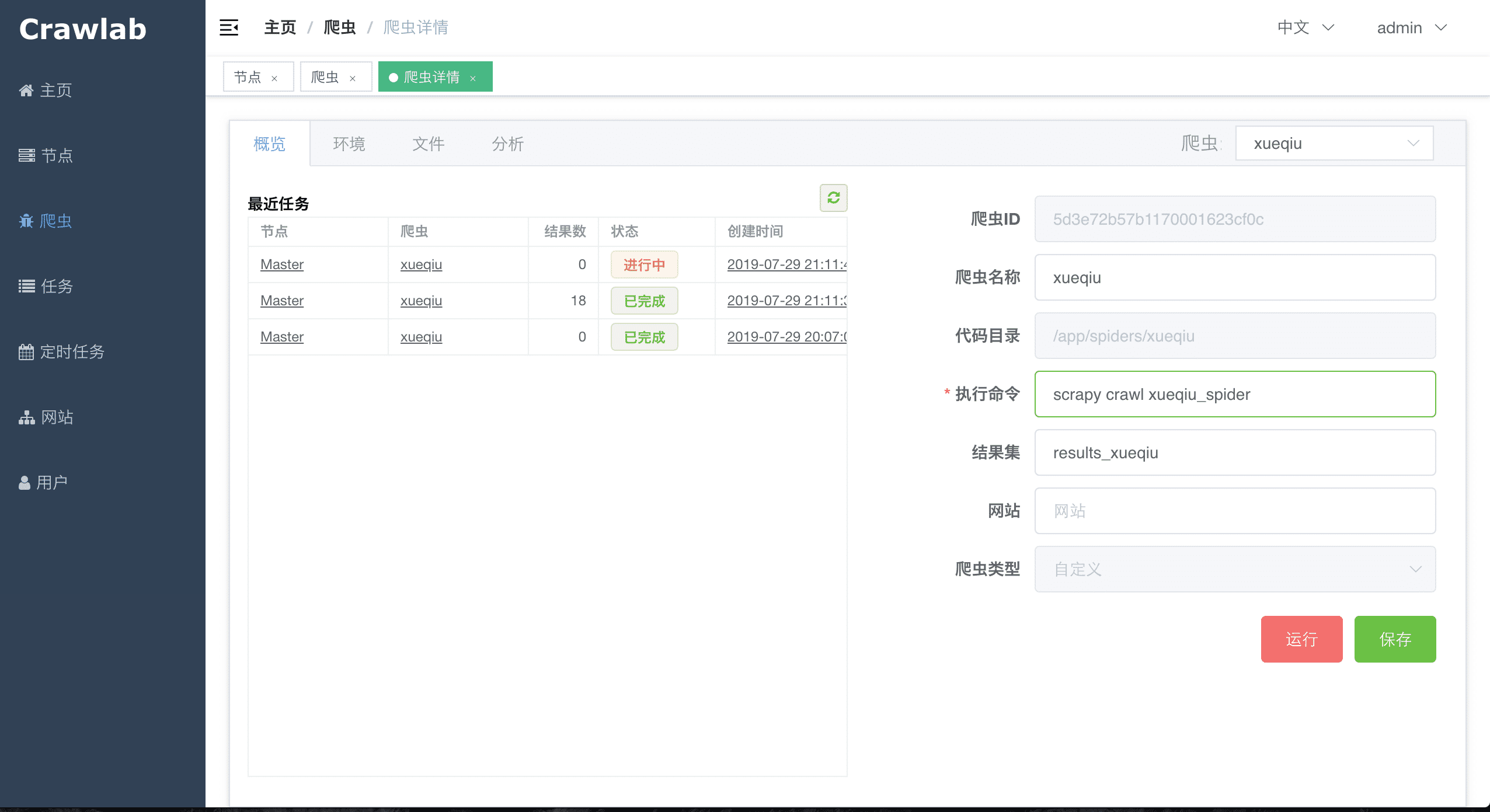

#### 爬虫概览

|

||||

|

||||

<img src="https://crawlab.oss-cn-hangzhou.aliyuncs.com/v0.3.0/spider-overview.png?v0.3.0">

|

||||

|

||||

|

||||

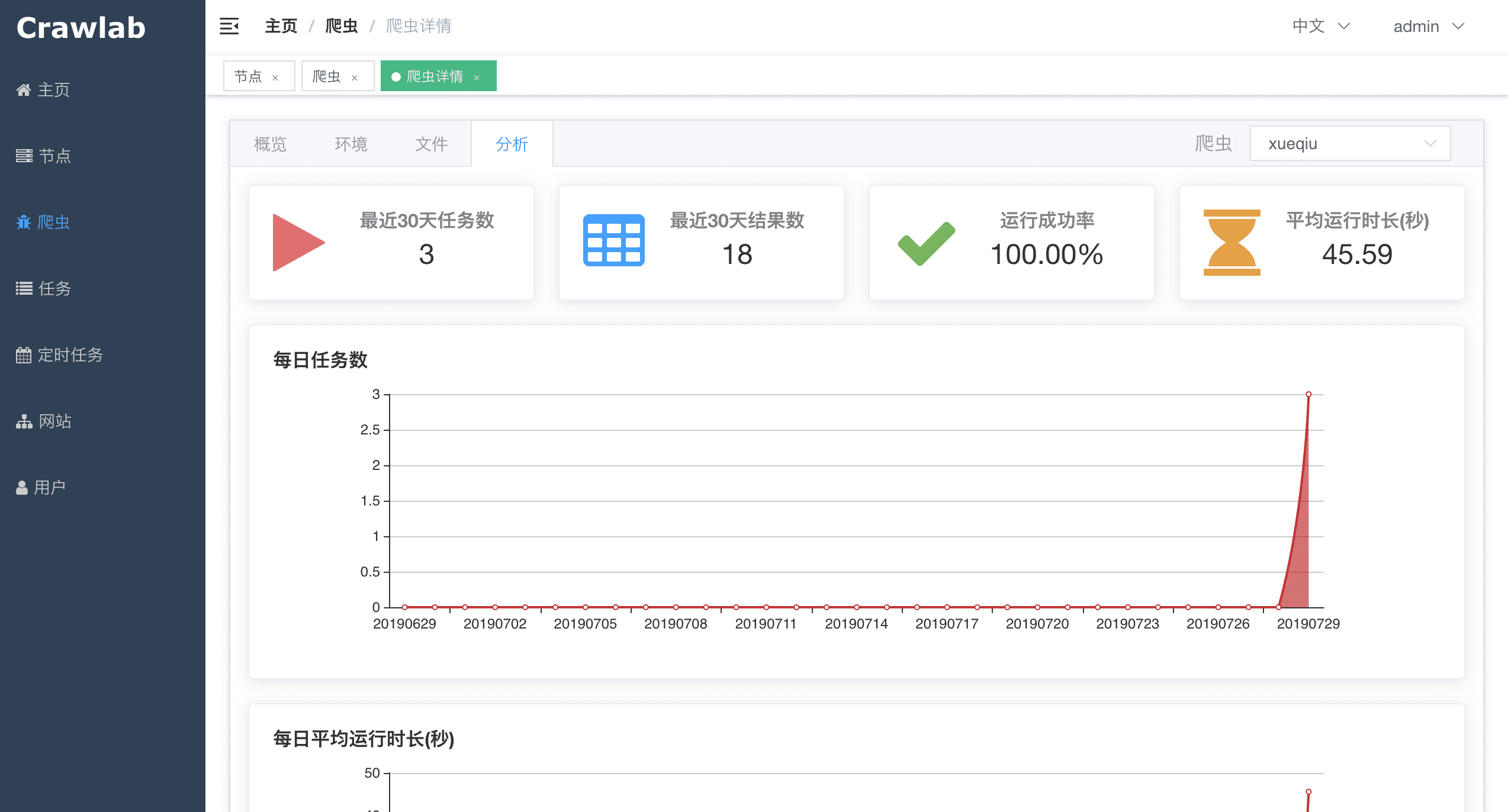

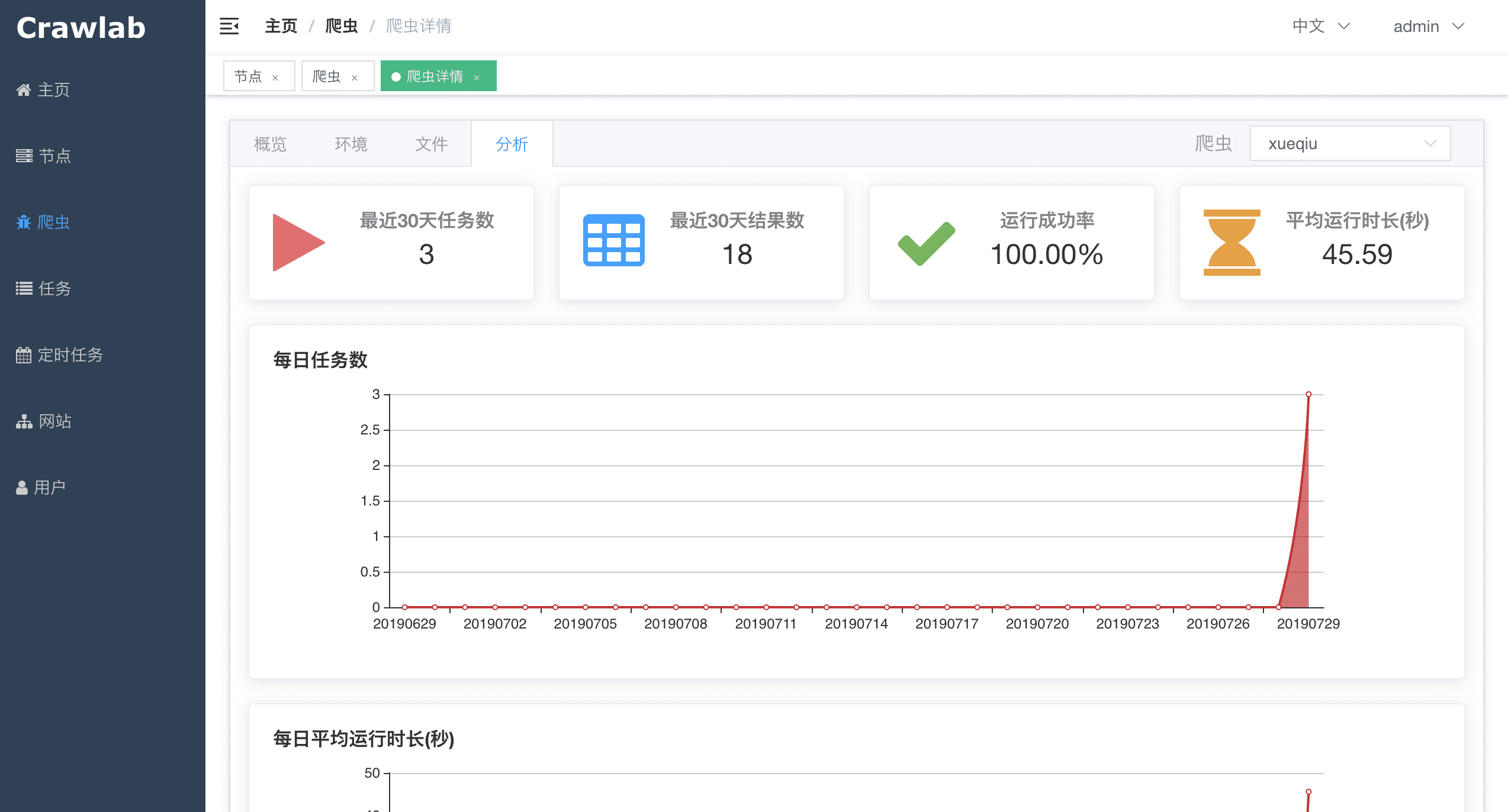

#### 爬虫分析

|

||||

|

||||

<img src="https://crawlab.oss-cn-hangzhou.aliyuncs.com/v0.3.0/spider-analytics.png?v0.3.0">

|

||||

|

||||

|

||||

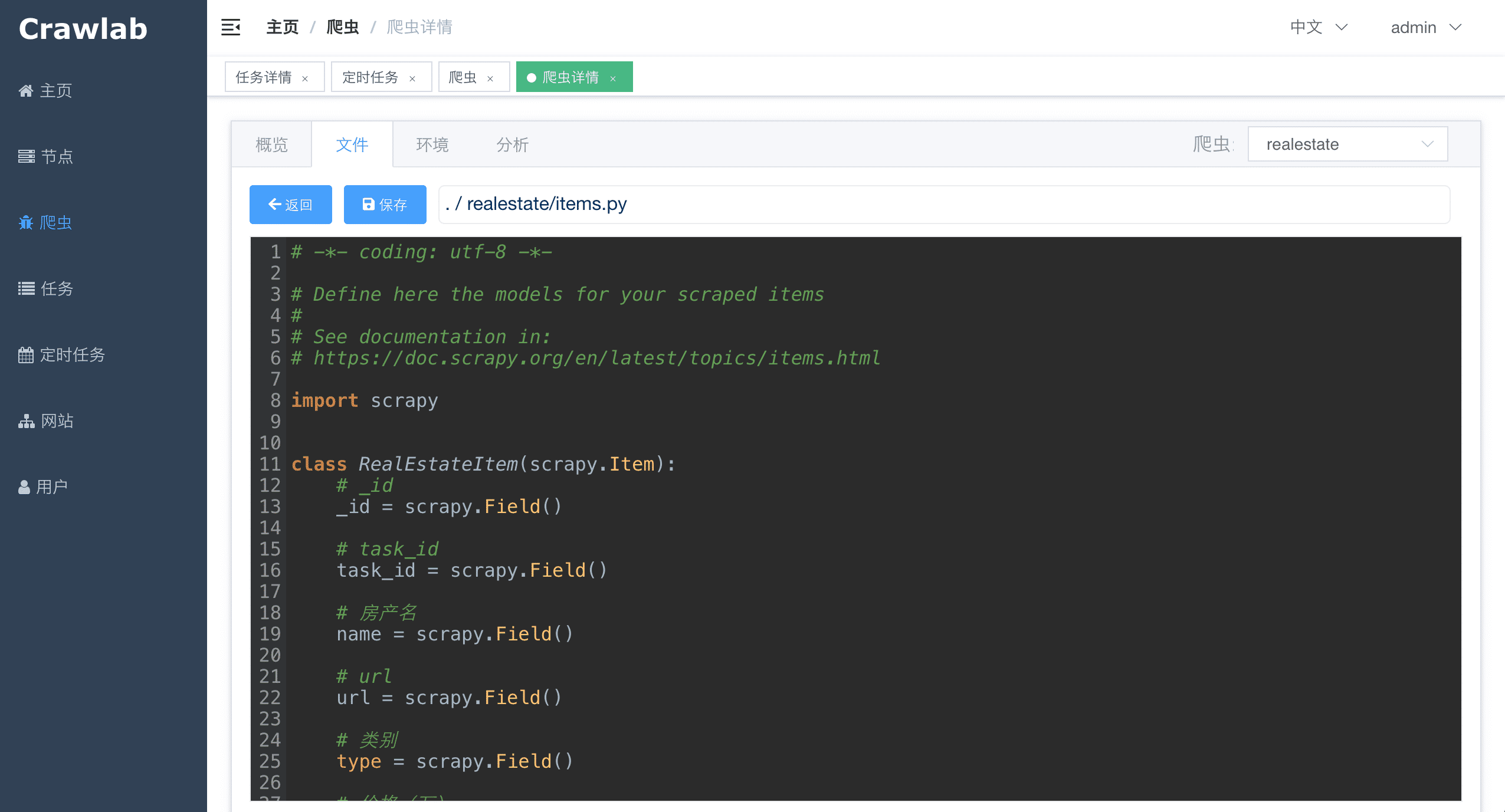

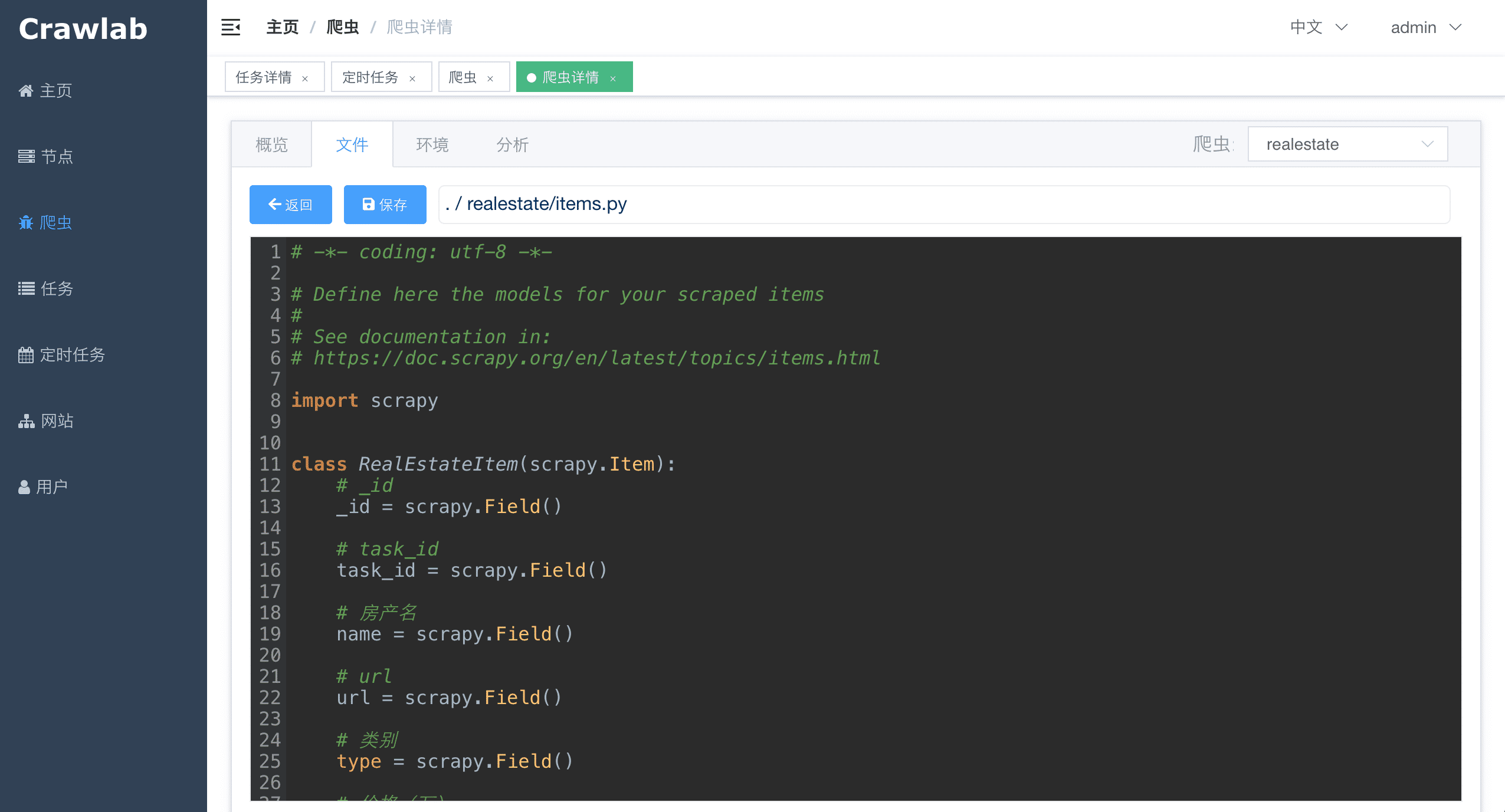

#### 爬虫文件

|

||||

|

||||

<img src="https://crawlab.oss-cn-hangzhou.aliyuncs.com/v0.3.0/spider-file.png?v0.3.0">

|

||||

|

||||

|

||||

#### 任务详情 - 抓取结果

|

||||

|

||||

<img src="https://crawlab.oss-cn-hangzhou.aliyuncs.com/v0.3.0/task-results.png?v0.3.0_1">

|

||||

|

||||

|

||||

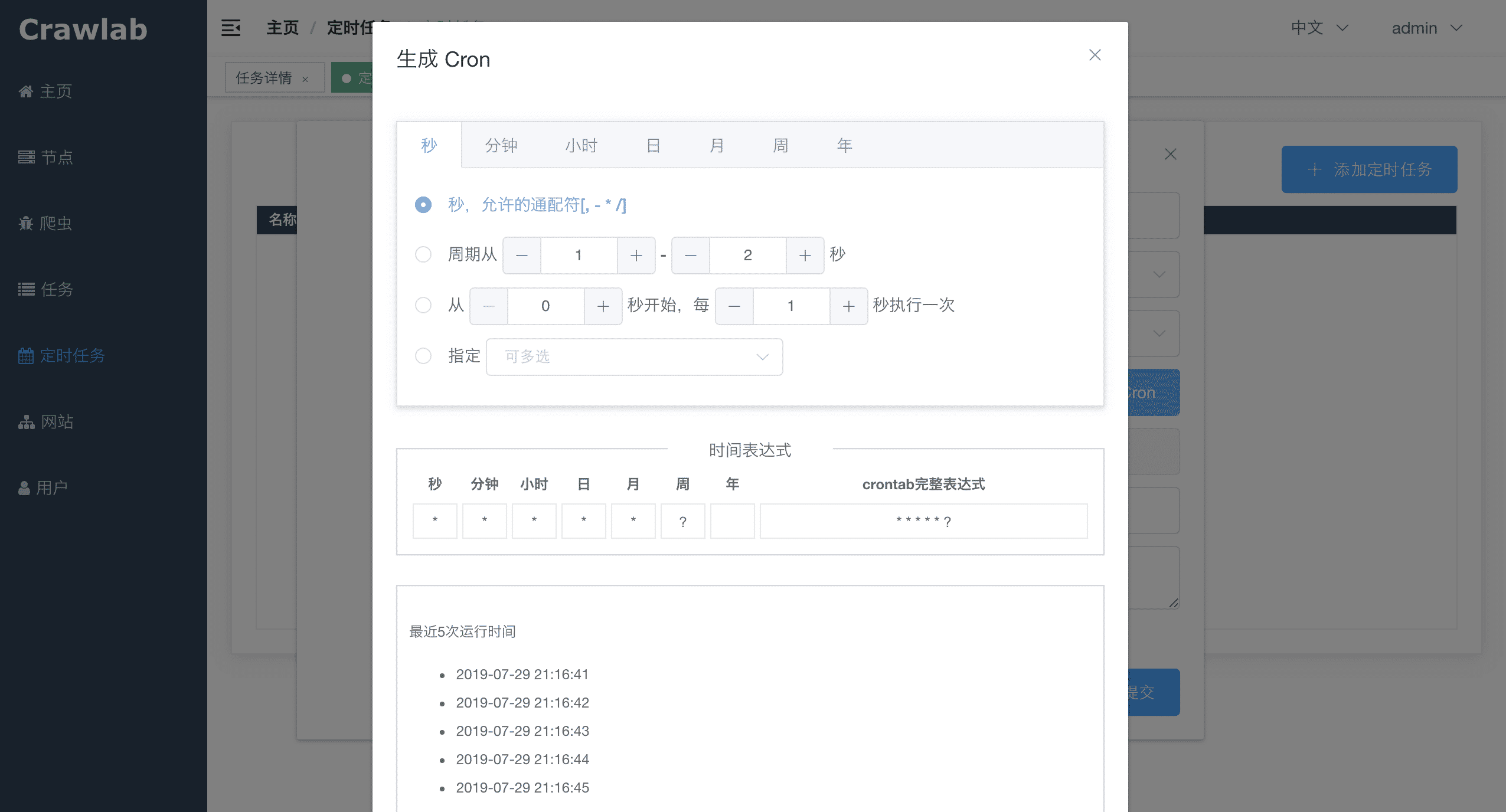

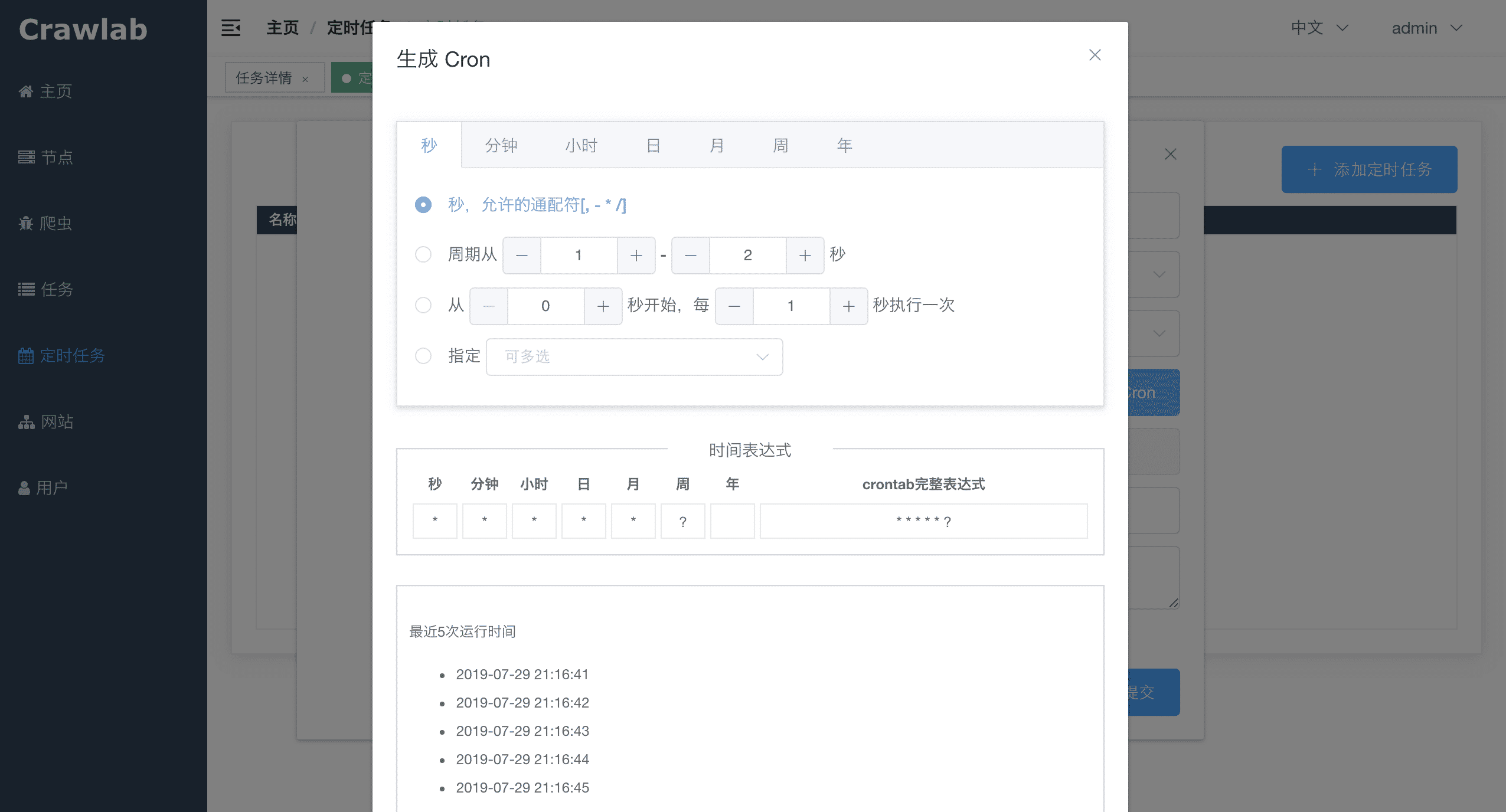

#### 定时任务

|

||||

|

||||

<img src="https://crawlab.oss-cn-hangzhou.aliyuncs.com/v0.3.0/schedule.png?v0.3.0">

|

||||

|

||||

|

||||

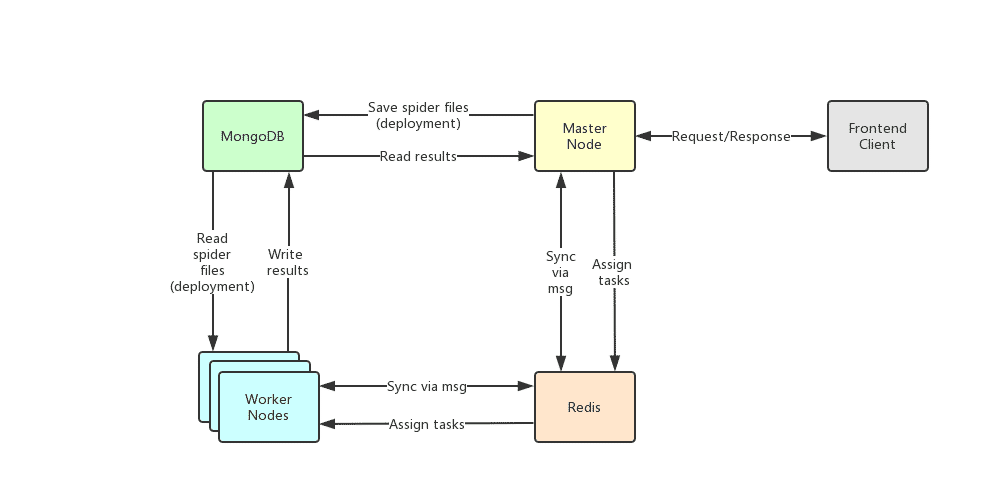

## 架构

|

||||

|

||||

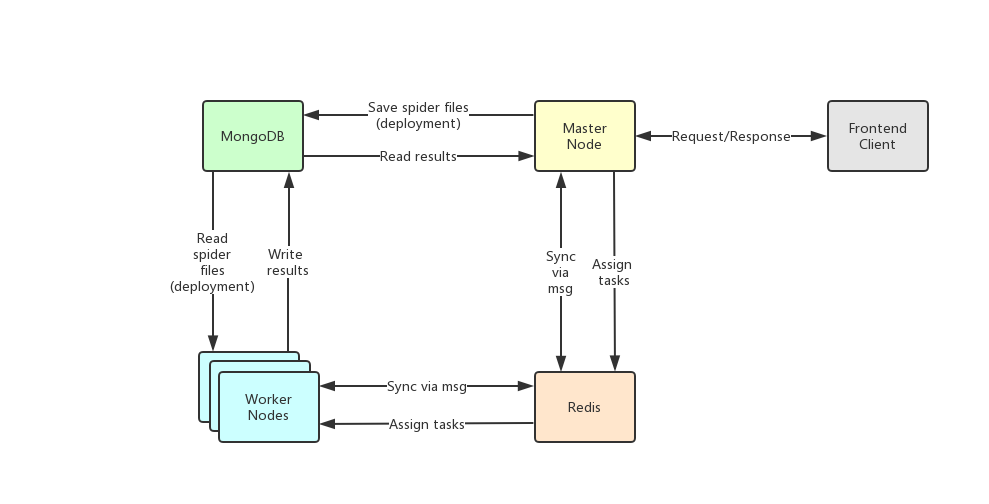

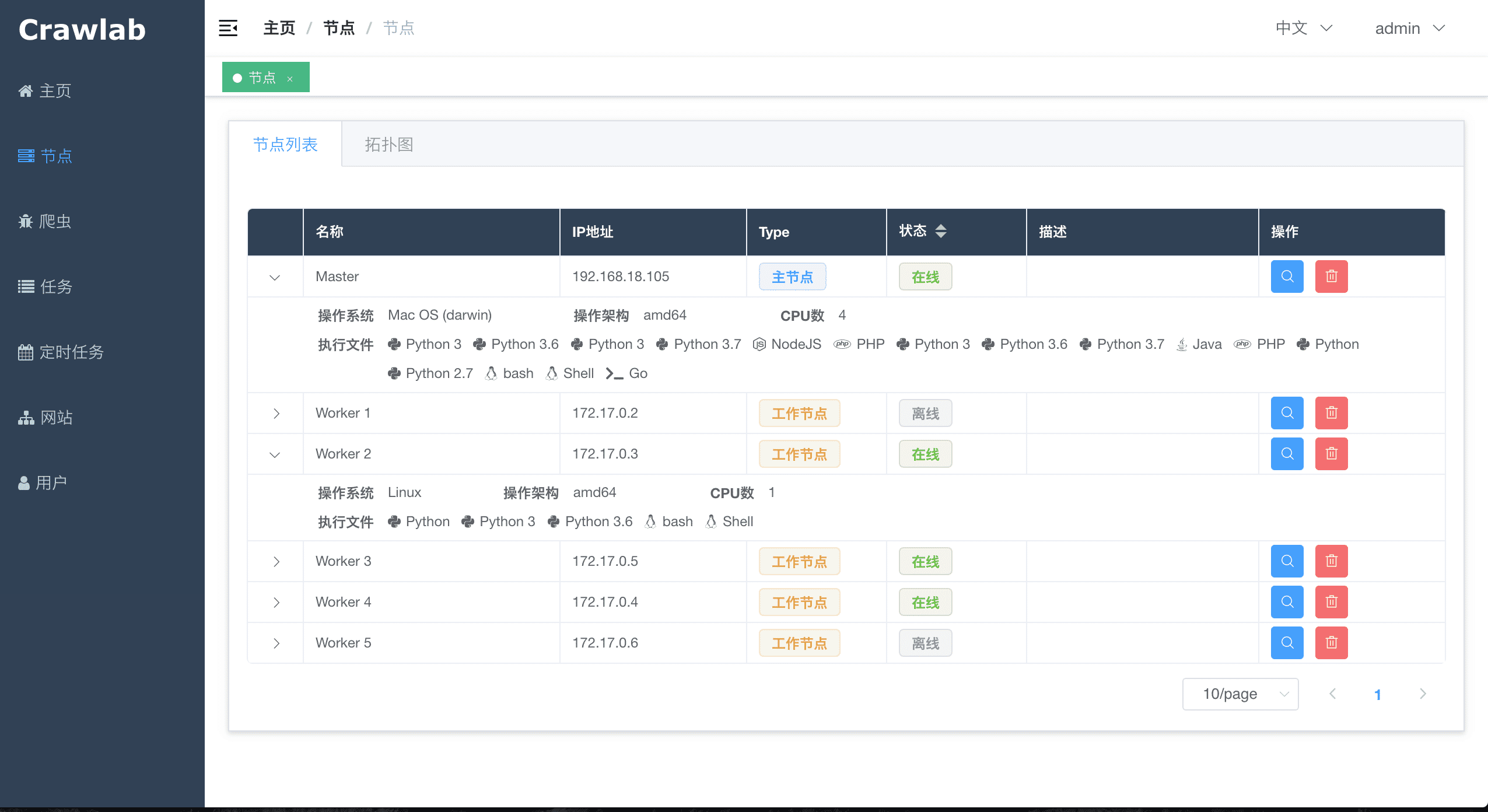

Crawlab的架构包括了一个主节点(Master Node)和多个工作节点(Worker Node),以及负责通信和数据储存的Redis和MongoDB数据库。

|

||||

|

||||

|

||||

|

||||

|

||||

前端应用向主节点请求数据,主节点通过MongoDB和Redis来执行任务派发调度以及部署,工作节点收到任务之后,开始执行爬虫任务,并将任务结果储存到MongoDB。架构相对于`v0.3.0`之前的Celery版本有所精简,去除了不必要的节点监控模块Flower,节点监控主要由Redis完成。

|

||||

|

||||

@@ -221,8 +221,33 @@ Crawlab使用起来很方便,也很通用,可以适用于几乎任何主流

| [Gerapy](https://github.com/Gerapy/Gerapy) | 管理平台 | Y | Y | Y
| [Scrapyd](https://github.com/scrapy/scrapyd) | 网络服务 | Y | N | N/A
## Q&A
#### 1. 为何我访问 http://localhost:8080 提示访问不了?
假如您是Docker部署的,请检查一下您是否用了Docker Machine,这样的话您需要输入地址 http://192.168.99.100:8080 才行。
另外,请确保您用了`-p 8080:8080`来映射端口,并检查宿主机是否开放了8080端口。
#### 2. 我可以看到登录页面了,但为何我点击登陆的时候按钮一直转圈圈?
绝大多数情况下,您可能是没有正确配置`CRAWLAB_API_ADDRESS`这个环境变量。这个变量是告诉前端应该通过哪个地址来请求API数据的,因此需要将它设置为宿主机的IP地址+端口,例如 `192.168.0.1:8000`。接着,重启容器,在浏览器中输入宿主机IP+端口,就可以顺利登陆了。
请注意,8080是前端端口,8000是后端端口,您在浏览器中只需要输入前端的地址就可以了,要注意区分。
#### 3. 在爬虫页面有一些不认识的爬虫列表,这些是什么呢?
这些是demo爬虫,如果需要添加您自己的爬虫,请将您的爬虫文件打包成zip文件,再在爬虫页面中点击**添加爬虫**上传就可以了。
注意,Crawlab将取文件名作为爬虫名称,这个您可以后期更改。另外,请不要将zip文件名设置为中文,可能会导致上传不成功。
####
## 相关文章
- [爬虫管理平台Crawlab v0.3.0发布(Golang版本)](https://juejin.im/post/5d418deff265da03c926d75c)
- [爬虫平台Crawlab核心原理--分布式架构](https://juejin.im/post/5d4ba9d1e51d4561cf15df79)
- [爬虫平台Crawlab核心原理--自动提取字段算法](https://juejin.im/post/5cf4a7fa5188254c5879facd)
- [爬虫管理平台Crawlab部署指南(Docker and more)](https://juejin.im/post/5d01027a518825142939320f)
- [[爬虫手记] 我是如何在3分钟内开发完一个爬虫的](https://juejin.im/post/5ceb4342f265da1bc8540660)
- [手把手教你如何用Crawlab构建技术文章聚合平台(二)](https://juejin.im/post/5c92365d6fb9a070c5510e71)

38

README.md


@@ -1,6 +1,6 @@

# Crawlab
<a href="https://github.com/tikazyq/crawlab/blob/master/LICENSE" target="_blank">
<img src="https://img.shields.io/badge/license-BSD-blue.svg">

@@ -12,13 +12,13 @@

Golang-based distributed web crawler management platform, supporting various languages including Python, NodeJS, Go, Java, PHP and various web crawler frameworks including Scrapy, Puppeteer, Selenium.
[Demo](http://114.67.75.98:8080) | [Documentation](https://tikazyq.github.io/crawlab-docs)
[Demo](http://crawlab.cn/demo) | [Documentation](https://tikazyq.github.io/crawlab-docs)
## Installation
Two methods:
1. [Docker](https://tikazyq.github.io/crawlab/Installation/Docker.md) (Recommended)
2. [Direct Deploy](https://tikazyq.github.io/crawlab/Installation/Direct.md) (Check Internal Kernel)
1. [Docker](https://tikazyq.github.io/crawlab/Installation/Docker.html) (Recommended)
2. [Direct Deploy](https://tikazyq.github.io/crawlab/Installation/Direct.html) (Check Internal Kernel)
### Pre-requisite (Docker)
- Docker 18.03+

@@ -53,7 +53,7 @@ docker run -d --rm --name crawlab \

Surely you can use `docker-compose` to one-click to start up. By doing so, you don't even have to configure MongoDB and Redis databases. Create a file named `docker-compose.yml` and input the code below.
```bash
```yaml
version: '3.3'
services:
master:

@@ -88,56 +88,56 @@ Then execute the command below, and Crawlab Master Node + MongoDB + Redis will s

docker-compose up
```
For Docker Deployment details, please refer to [relevant documentation](https://tikazyq.github.io/crawlab/Installation/Docker.md).
For Docker Deployment details, please refer to [relevant documentation](https://tikazyq.github.io/crawlab/Installation/Docker.html).
## Screenshot
#### Login
<img src="https://crawlab.oss-cn-hangzhou.aliyuncs.com/v0.3.0/login.png?v0.3.0">
#### Home Page
<img src="https://crawlab.oss-cn-hangzhou.aliyuncs.com/v0.3.0/home.png?v0.3.0">
#### Node List
<img src="https://crawlab.oss-cn-hangzhou.aliyuncs.com/v0.3.0/node-list.png?v0.3.0">
#### Node Network
<img src="https://crawlab.oss-cn-hangzhou.aliyuncs.com/v0.3.0/node-network.png?v0.3.0">
#### Spider List
<img src="https://crawlab.oss-cn-hangzhou.aliyuncs.com/v0.3.0/spider-list.png?v0.3.0">
#### Spider Overview
<img src="https://crawlab.oss-cn-hangzhou.aliyuncs.com/v0.3.0/spider-overview.png?v0.3.0">
#### Spider Analytics
<img src="https://crawlab.oss-cn-hangzhou.aliyuncs.com/v0.3.0/spider-analytics.png?v0.3.0">
#### Spider Files
<img src="https://crawlab.oss-cn-hangzhou.aliyuncs.com/v0.3.0/spider-file.png?v0.3.0">
#### Task Results
<img src="https://crawlab.oss-cn-hangzhou.aliyuncs.com/v0.3.0/task-results.png?v0.3.0_1">
#### Cron Job
<img src="https://crawlab.oss-cn-hangzhou.aliyuncs.com/v0.3.0/schedule.png?v0.3.0">
## Architecture
The architecture of Crawlab is consisted of the Master Node and multiple Worker Nodes, and Redis and MongoDB databases which are mainly for nodes communication and data storage.
<img src="https://crawlab.oss-cn-hangzhou.aliyuncs.com/v0.3.0/architecture.png">
The frontend app makes requests to the Master Node, which assigns tasks and deploys spiders through MongoDB and Redis. When a Worker Node receives a task, it begins to execute the crawling task, and stores the results to MongoDB. The architecture is much more concise compared with versions before `v0.3.0`. It has removed unnecessary Flower module which offers node monitoring services. They are now done by Redis.
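The task flow described in the README's Architecture paragraph (the Master Node assigns a task, a Worker Node executes it and stores the result) can be illustrated with a small in-memory sketch. Everything here is an assumption made for illustration: a `deque` stands in for the Redis-backed queue, a plain `dict` stands in for MongoDB, and `assign_task` and `run_next_task` are hypothetical names, not Crawlab functions.

```python
from collections import deque
from typing import Callable, Deque, Dict, Optional


def assign_task(queue: Deque[str], store: Dict[str, dict],
                task_id: str, spider_name: str) -> None:
    """Master side: record the task (MongoDB stand-in) and enqueue it (Redis stand-in)."""
    store[task_id] = {"spider": spider_name, "status": "pending", "result": None}
    queue.append(task_id)


def run_next_task(queue: Deque[str], store: Dict[str, dict],
                  spiders: Dict[str, Callable[[], list]]) -> Optional[str]:
    """Worker side: take one task, run its spider, store the result."""
    if not queue:
        return None  # nothing to do
    task_id = queue.popleft()
    task = store[task_id]
    task["status"] = "running"
    task["result"] = spiders[task["spider"]]()
    task["status"] = "finished"
    return task_id


if __name__ == "__main__":
    queue, store = deque(), {}
    assign_task(queue, store, "task-1", "demo")
    run_next_task(queue, store, {"demo": lambda: [{"title": "example"}]})
    print(store["task-1"])
```

The sketch only shows the direction of the data flow; the real system adds node registration, deployment, and monitoring on top of it.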

@@ -169,7 +169,7 @@ Redis is a very popular Key-Value database. It offers node communication service

### Frontend
Frontend is a SPA based on [Vue-Element-Admin](https://github.com/PanJiaChen/vue-element-admin). It has re-used many Element-UI components to support correspoinding display.
Frontend is a SPA based on [Vue-Element-Admin](https://github.com/PanJiaChen/vue-element-admin). It has re-used many Element-UI components to support corresponding display.
## Integration with Other Frameworks

@@ -206,7 +206,7 @@ class JuejinPipeline(object):

There are existing spider management frameworks. So why use Crawlab?
The reason is that most of the existing platforms are depending on Scrapyd, which limits the choice only within python and scrapy. Surely scrapy is a great web crawl frameowrk, but it cannot do everything.
The reason is that most of the existing platforms are depending on Scrapyd, which limits the choice only within python and scrapy. Surely scrapy is a great web crawl framework, but it cannot do everything.
Crawlab is easy to use, general enough to adapt spiders in any language and any framework. It has also a beautiful frontend interface for users to manage spiders much more easily.

@@ -77,7 +77,7 @@ func DeCompress(srcFile *os.File, dstPath string) error {

// 如果是目录,则创建一个
if info.IsDir() {
err = os.MkdirAll(filepath.Join(dstPath, innerFile.Name), os.ModePerm)
err = os.MkdirAll(filepath.Join(dstPath, innerFile.Name), os.ModeDir|os.ModePerm)
if err != nil {
log.Errorf("Unzip File Error : " + err.Error())
debug.PrintStack()

@@ -89,7 +89,7 @@ func DeCompress(srcFile *os.File, dstPath string) error {

// 如果文件目录不存在,则创建一个
dirPath := filepath.Dir(innerFile.Name)
if !Exists(dirPath) {
err = os.MkdirAll(filepath.Join(dstPath, dirPath), os.ModePerm)
err = os.MkdirAll(filepath.Join(dstPath, dirPath), os.ModeDir|os.ModePerm)
if err != nil {
log.Errorf("Unzip File Error : " + err.Error())
debug.PrintStack()
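The two hunks above touch the directory-creation steps of `DeCompress`: create the entry itself when it is a directory, otherwise make sure its parent directory exists before writing the file. A minimal Python sketch of the same extraction pattern follows, using only the standard `zipfile` module. It is an illustration, not a port of the Go function; `decompress` is a hypothetical name, and the sketch deliberately omits validation of entry names such as `../x`, which a production implementation should add.

```python
import io
import os
import zipfile
from typing import List


def decompress(zip_bytes: bytes, dst_path: str) -> List[str]:
    """Extract an archive into dst_path, creating directories as needed.

    Returns the paths of the files written. Entry names are NOT validated.
    """
    written = []
    with zipfile.ZipFile(io.BytesIO(zip_bytes)) as archive:
        for info in archive.infolist():
            target = os.path.join(dst_path, info.filename)
            if info.is_dir():
                # Directory entry: create it and move on.
                os.makedirs(target, exist_ok=True)
                continue
            # File entry: make sure the parent directory exists first.
            parent = os.path.dirname(target)
            if parent:
                os.makedirs(parent, exist_ok=True)
            with archive.open(info) as src, open(target, "wb") as dst:
                dst.write(src.read())
            written.append(target)
    return written
```

The sketch always creates the parent under the destination path with `exist_ok=True` instead of testing for existence first, which keeps the check and the creation pointed at the same location.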

@@ -6,8 +6,17 @@ then

:
else
jspath=`ls /app/dist/js/app.*.js`
cp ${jspath} ${jspath}.bak
sed -i "s/localhost:8000/${CRAWLAB_API_ADDRESS}/g" ${jspath}
sed -i "s?localhost:8000?${CRAWLAB_API_ADDRESS}?g" ${jspath}
fi
# replace base url
if [ "${CRAWLAB_BASE_URL}" = "" ];
then
:
else
indexpath=/app/dist/index.html
sed -i "s?/js/?${CRAWLAB_BASE_URL}/js/?g" ${indexpath}
sed -i "s?/css/?${CRAWLAB_BASE_URL}/css/?g" ${indexpath}
fi
# start nginx
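The hunk above switches the `sed` delimiter from `/` to `?`. A likely motivation is that `CRAWLAB_API_ADDRESS` can now contain slashes (the compose files in this commit use values such as `crawlab.cn/dev/api`), which would break an `s/.../.../g` expression. The substitutions themselves are plain string replacements, sketched below in Python. The function names are hypothetical, and the sketch assumes the first branch skips the rewrite when `CRAWLAB_API_ADDRESS` is empty, in the same way the visible `CRAWLAB_BASE_URL` branch does.

```python
def replace_api_address(js_source: str, api_address: str) -> str:
    """Point the bundled frontend at the configured API address."""
    if not api_address:
        return js_source  # assumed: nothing to do when the variable is empty
    return js_source.replace("localhost:8000", api_address)


def apply_base_url(index_html: str, base_url: str) -> str:
    """Prefix the /js/ and /css/ asset paths with the base URL."""
    if not base_url:
        return index_html
    for prefix in ("/js/", "/css/"):
        index_html = index_html.replace(prefix, base_url + prefix)
    return index_html


if __name__ == "__main__":
    print(replace_api_address('baseUrl="http://localhost:8000"', "crawlab.cn/dev/api"))
    print(apply_base_url('<script src="/js/app.js"></script>', "/dev"))
```

As with the shell version, applying `apply_base_url` twice to the same file would prefix the paths twice, so the rewrite is meant to run once per fresh copy of the file.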

@@ -29,7 +29,7 @@

<el-input v-model="spiderForm.col" :placeholder="$t('Results Collection')"
:disabled="isView"></el-input>
</el-form-item>
<el-form-item :label="$t('Site')">
<el-form-item v-if="false" :label="$t('Site')">
<el-autocomplete v-model="spiderForm.site"
:placeholder="$t('Site')"
:fetch-suggestions="fetchSiteSuggestions"

@@ -2,7 +2,7 @@

<div class="navbar">
<hamburger :toggle-click="toggleSideBar" :is-active="sidebar.opened" class="hamburger-container"/>
<breadcrumb class="breadcrumb"/>
<el-dropdown class="avatar-container" trigger="click">
<el-dropdown class="avatar-container right" trigger="click">
<span class="el-dropdown-link">
{{username}}
<i class="el-icon-arrow-down el-icon--right"></i>

@@ -13,7 +13,7 @@

</el-dropdown-item>
</el-dropdown-menu>
</el-dropdown>
<el-dropdown class="lang-list" trigger="click">
<el-dropdown class="lang-list right" trigger="click">
<span class="el-dropdown-link">
{{$t($store.getters['lang/lang'])}}
<i class="el-icon-arrow-down el-icon--right"></i>

@@ -27,6 +27,12 @@

</el-dropdown-item>
</el-dropdown-menu>
</el-dropdown>
<el-dropdown class="documentation right">
<a href="https://tikazyq.github.io/crawlab-docs" target="_blank">
<font-awesome-icon :icon="['far', 'question-circle']"/>
<span style="margin-left: 5px;">文档</span>
</a>
</el-dropdown>
</div>
</template>

@@ -86,7 +92,6 @@ export default {

.lang-list {
cursor: pointer;
display: inline-block;
float: right;
margin-right: 35px;
/*position: absolute;*/
/*right: 80px;*/

@@ -103,10 +108,21 @@ export default {

cursor: pointer;
height: 50px;
display: inline-block;
float: right;
margin-right: 35px;
/*position: absolute;*/
/*right: 35px;*/
}
.documentation {
margin-right: 35px;
.span {
margin-left: 5px;
}
}
.right {
float: right
}
}
</style>

@@ -78,7 +78,7 @@ export default {

this.$refs['spider-stats'].update()
}, 0)
}
this.$st.sendEv('爬虫详情', '切换标签', 'tabName', tab.name)
this.$st.sendEv('爬虫详情', '切换标签', tab.name)
},
onSpiderChange (id) {
this.$router.push(`/spiders/${id}`)

@@ -145,7 +145,9 @@

<el-table :data="filteredTableData"
class="table"
:header-cell-style="{background:'rgb(48, 65, 86)',color:'white'}"
border>
border
@row-click="onRowClick"
>
<template v-for="col in columns">
<el-table-column v-if="col.name === 'type'"
:key="col.name"

@@ -494,6 +496,11 @@ export default {

getTime (str) {
if (!str || str.match('^0001')) return 'NA'
return dayjs(str).format('YYYY-MM-DD HH:mm:ss')
},
onRowClick (row, event, column) {
if (column.label !== this.$t('Action')) {
this.onView(row)
}
}
},
created () {
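The two methods in the hunk above encode small rules: `getTime` shows `NA` for empty values and for timestamps starting with `0001` (presumably an unset, zero-value time coming from the backend), and `onRowClick` opens the detail view unless the click landed in the `Action` column. A Python sketch of both rules follows. The names are hypothetical, the sketch assumes ISO 8601 input, and unlike `dayjs` it does not convert to the viewer's local time zone.

```python
from datetime import datetime
from typing import Optional


def get_time(value: Optional[str]) -> str:
    """Format an ISO 8601 timestamp, or return 'NA' for empty or zero-value times."""
    if not value or value.startswith("0001"):
        return "NA"
    # fromisoformat() on older Python versions rejects a trailing "Z".
    parsed = datetime.fromisoformat(value.replace("Z", "+00:00"))
    return parsed.strftime("%Y-%m-%d %H:%M:%S")


def should_open_detail(column_label: str, action_label: str = "Action") -> bool:
    """A row click opens the detail view unless it hit the action column."""
    return column_label != action_label


if __name__ == "__main__":
    print(get_time("0001-01-01T00:00:00Z"))
    print(get_time("2019-08-20T10:30:00Z"))
```

Excluding the action column keeps the buttons in that column from also triggering navigation when they are clicked.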

@@ -584,3 +591,9 @@ export default {

}
</style>
<style scoped>
.el-table >>> tr {
cursor: pointer;
}
</style>

@@ -83,7 +83,7 @@ export default {

},
methods: {
onTabClick (tab) {
this.$st.sendEv('任务详情', '切换标签', 'tabName', tab.name)
this.$st.sendEv('任务详情', '切换标签', tab.name)
},
onSpiderChange (id) {
this.$router.push(`/spiders/${id}`)

@@ -36,7 +36,9 @@

<el-table :data="filteredTableData"
class="table"
:header-cell-style="{background:'rgb(48, 65, 86)',color:'white'}"
border>
border
@row-click="onRowClick"
>
<template v-for="col in columns">
<el-table-column v-if="col.name === 'spider_name'"
:key="col.name"

@@ -295,6 +297,11 @@ export default {

getTotalDuration (row) {
if (row.finish_ts.match('^0001')) return 'NA'
return dayjs(row.finish_ts).diff(row.create_ts, 'second')
},
onRowClick (row, event, column) {
if (column.label !== this.$t('Action')) {
this.onView(row)
}
}
},
created () {

@@ -362,3 +369,9 @@ export default {

text-align: right;
}
</style>
<style scoped>
.el-table >>> tr {
cursor: pointer;
}
</style>

3

frontend/vue.config.js

Normal file


@@ -0,0 +1,3 @@

module.exports = {
  publicPath: process.env.BASE_URL || '/'
}

36

jenkins/develop/docker-compose.yaml

Normal file


@@ -0,0 +1,36 @@

version: '3.3'
services:
  master:
    image: "tikazyq/crawlab:develop"
    environment:
      CRAWLAB_API_ADDRESS: "crawlab.cn/dev/api"
      CRAWLAB_BASE_URL: "/dev"
      CRAWLAB_SERVER_MASTER: "Y"
      CRAWLAB_MONGO_HOST: "mongo"
      CRAWLAB_REDIS_ADDRESS: "redis"
      CRAWLAB_LOG_PATH: "/var/logs/crawlab"
    ports:
      - "8090:8080" # frontend
      - "8010:8000" # backend
    depends_on:
      - mongo
      - redis
  worker:
    image: "tikazyq/crawlab:develop"
    environment:
      CRAWLAB_SERVER_MASTER: "N"
      CRAWLAB_MONGO_HOST: "mongo"
      CRAWLAB_REDIS_ADDRESS: "redis"
    depends_on:
      - mongo
      - redis
  mongo:
    image: mongo:latest
    restart: always
    ports:
      - "27027:27017"
  redis:
    image: redis:latest
    restart: always
    ports:
      - "6389:6379"

@@ -1,13 +1,14 @@

version: '3.3'
services:
master:
image: tikazyq/crawlab:latest
container_name: master
image: "tikazyq/crawlab:master"
environment:
CRAWLAB_API_ADDRESS: "114.67.75.98:8000"
CRAWLAB_API_ADDRESS: "crawlab.cn/api"
CRAWLAB_BASE_URL: "/demo"
CRAWLAB_SERVER_MASTER: "Y"
CRAWLAB_MONGO_HOST: "mongo"
CRAWLAB_REDIS_ADDRESS: "redis"
CRAWLAB_LOG_PATH: "/var/logs/crawlab"
ports:
- "8080:8080" # frontend
- "8000:8000" # backend

@@ -15,8 +16,7 @@ services:

- mongo
- redis
worker:
image: tikazyq/crawlab:latest
container_name: worker
image: "tikazyq/crawlab:master"
environment:
CRAWLAB_SERVER_MASTER: "N"
CRAWLAB_MONGO_HOST: "mongo"
