diff --git a/CHANGELOG.md b/CHANGELOG.md

index b24576ea..b4204f16 100644

--- a/CHANGELOG.md

+++ b/CHANGELOG.md

@@ -1,4 +1,22 @@

-# 0.2.4 (unreleased)

+# 0.3.0 (2019-07-31)

+### Features / Enhancement

+- **Golang Backend**: Refactored the backend from Python to Golang, improving stability and performance.

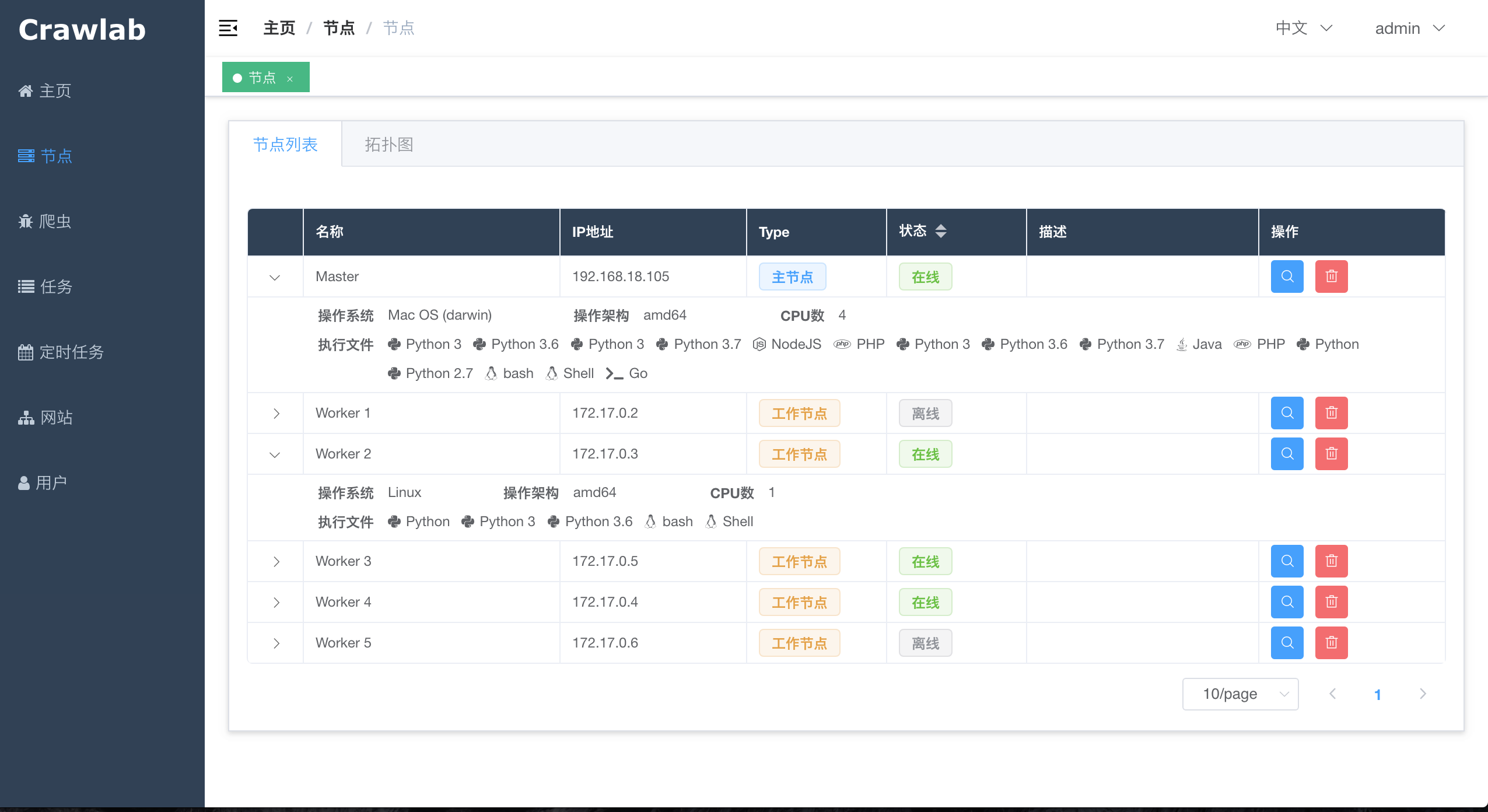

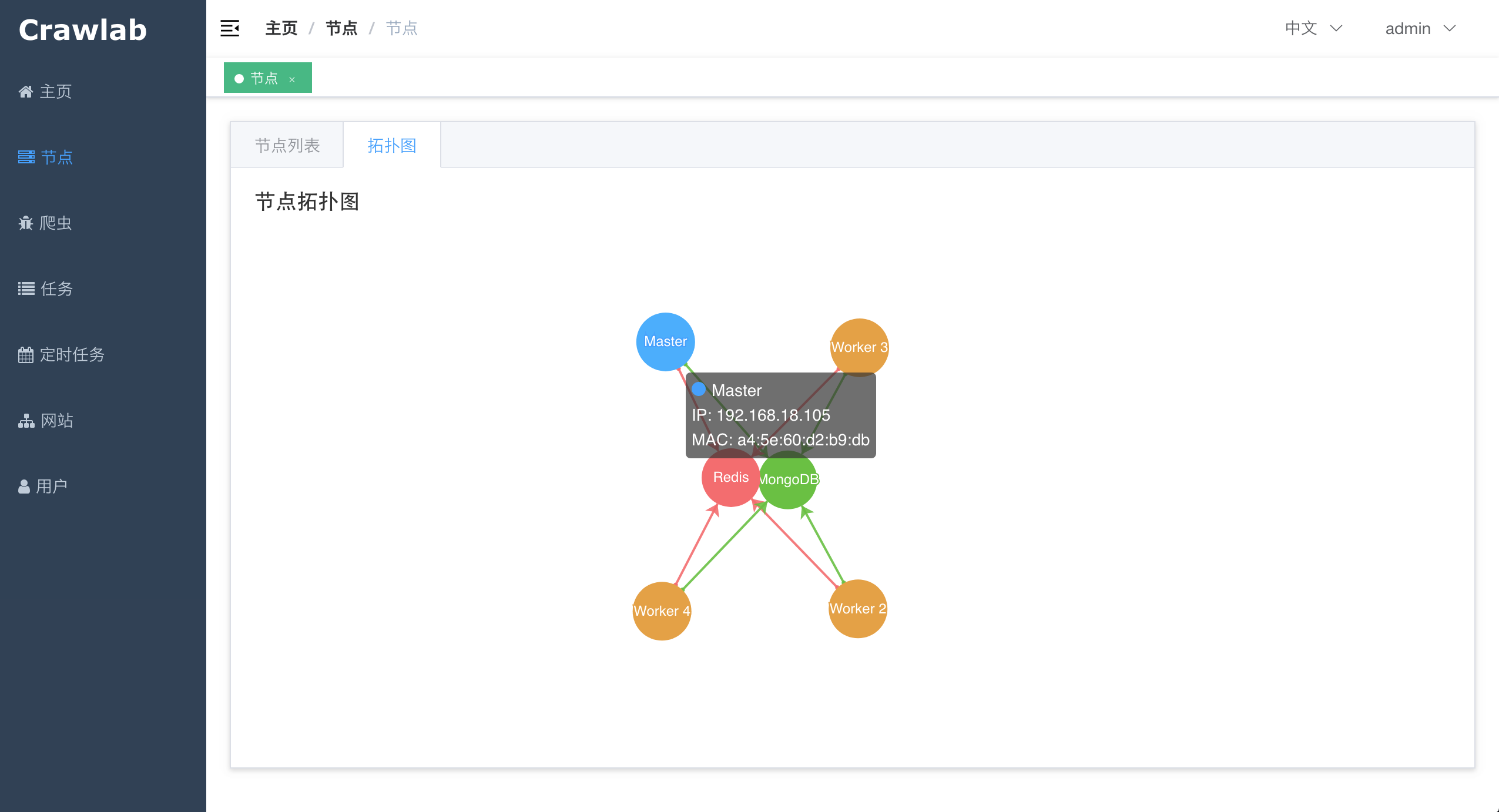

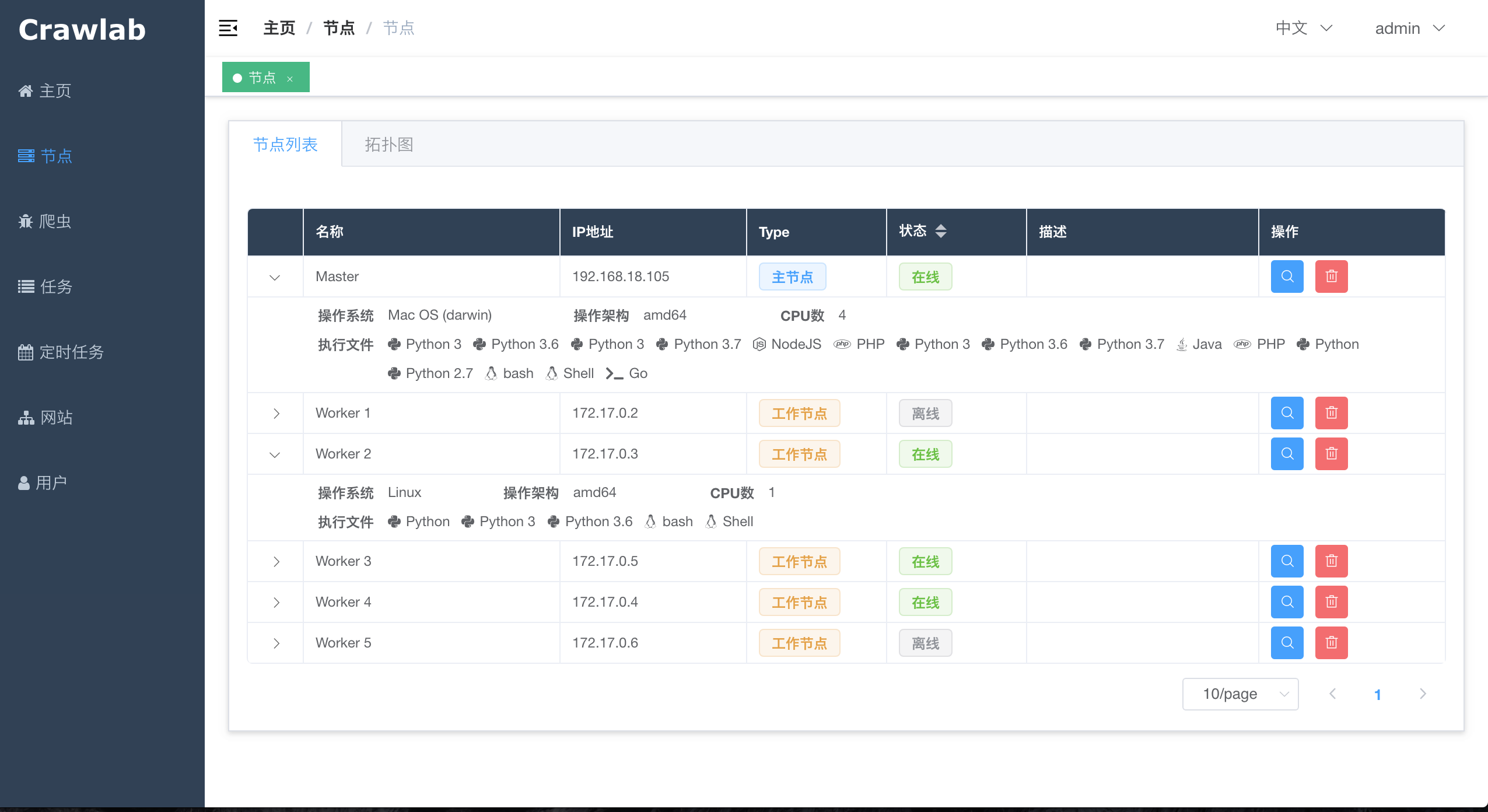

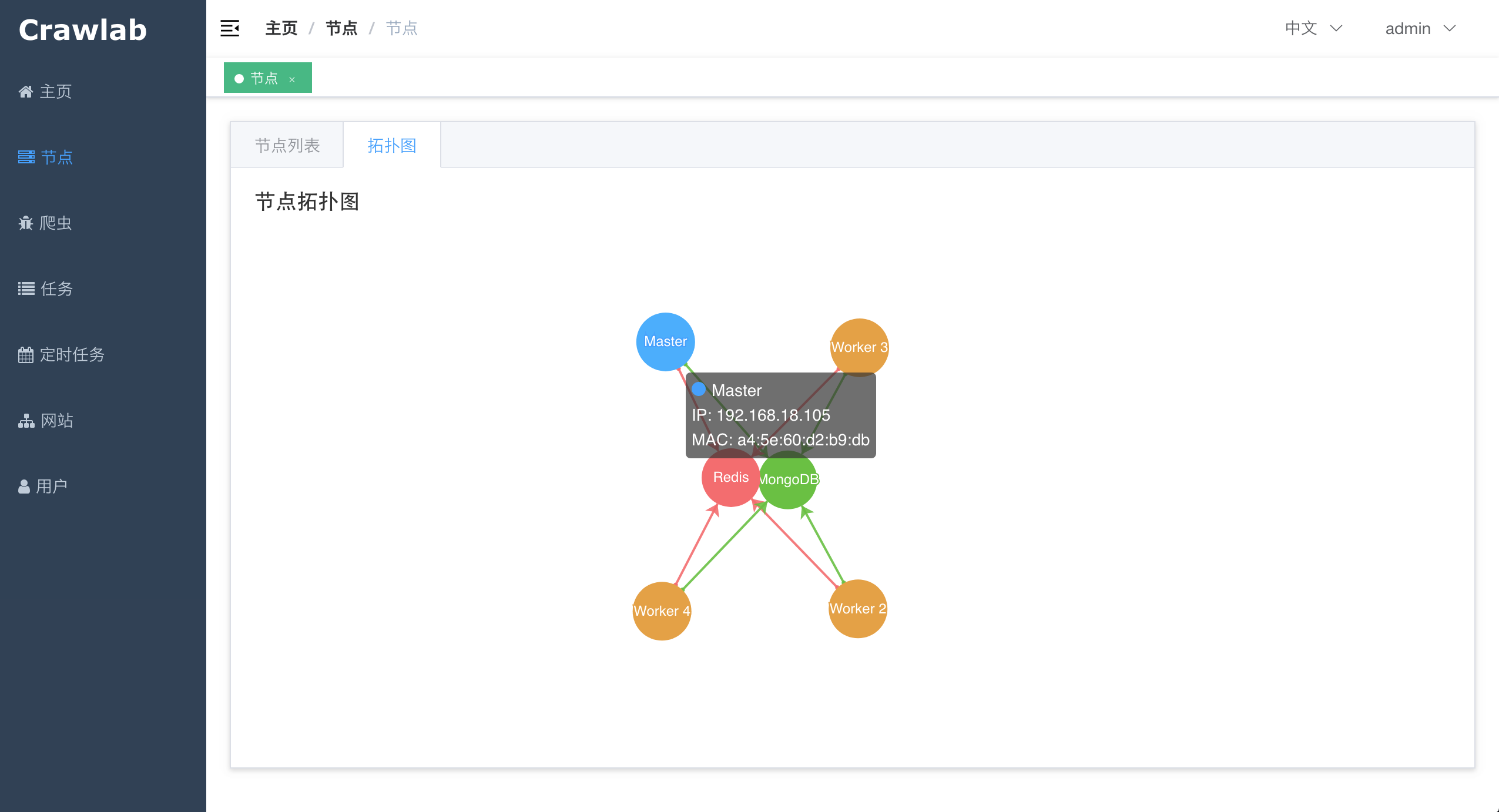

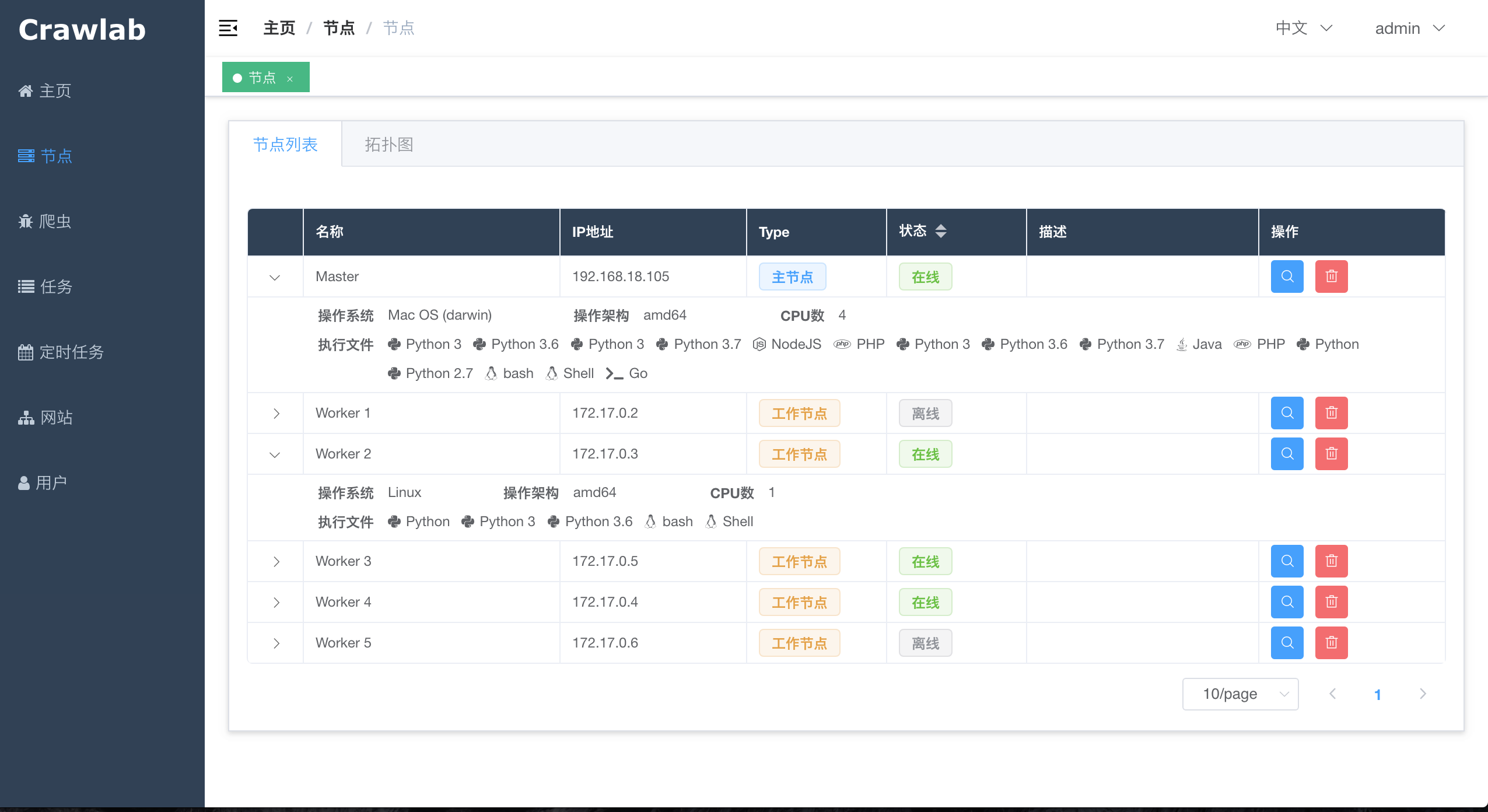

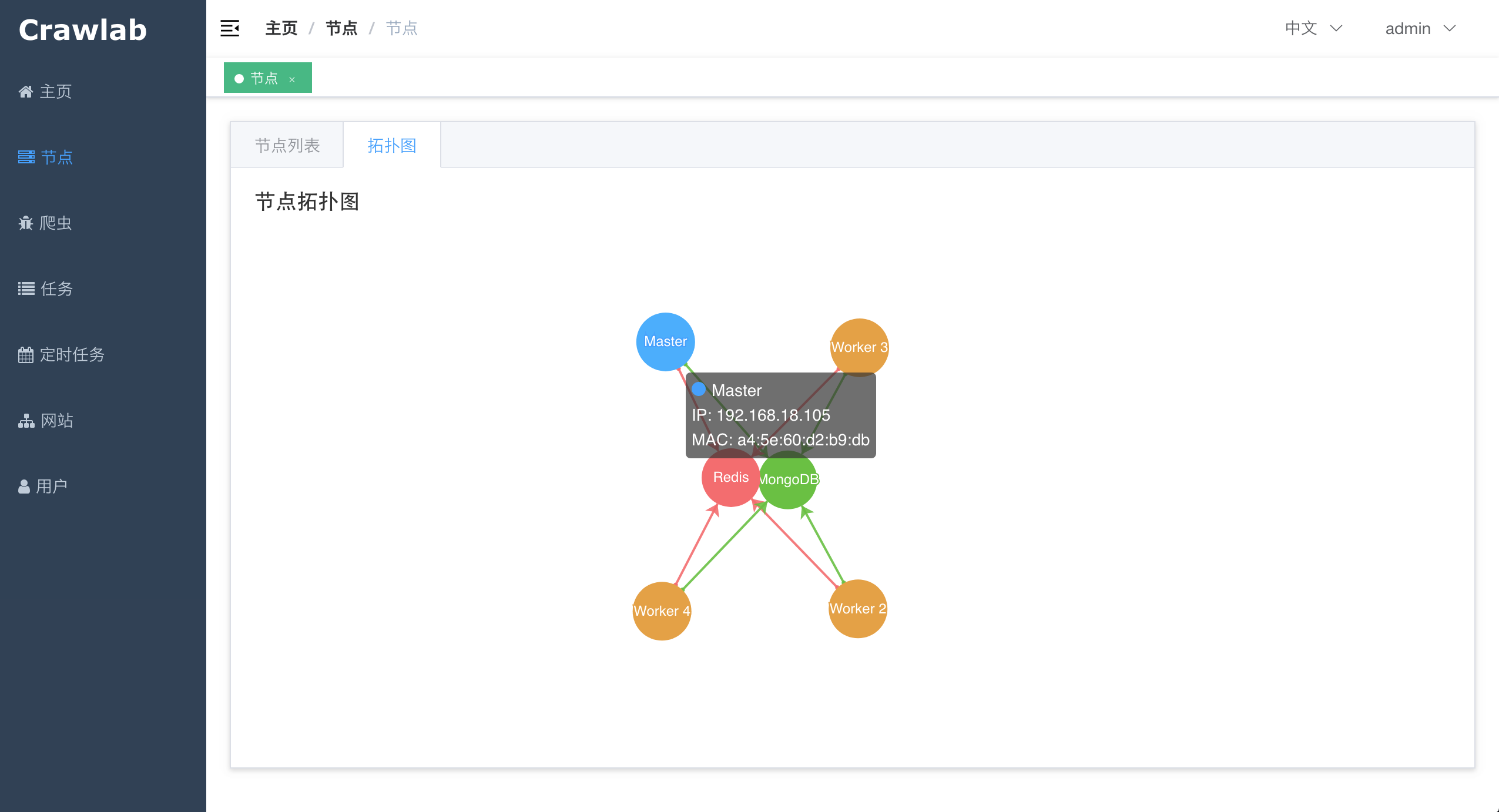

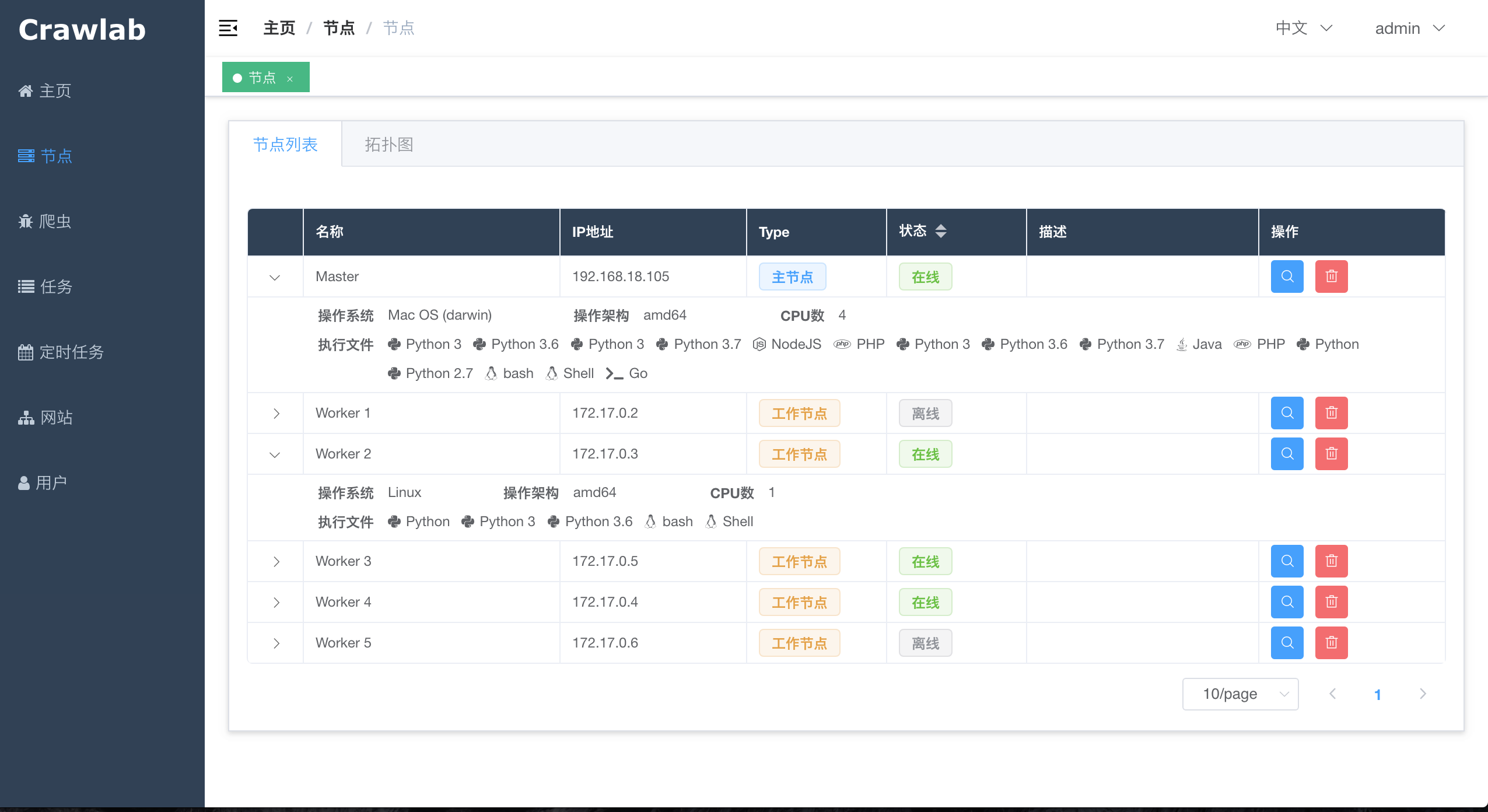

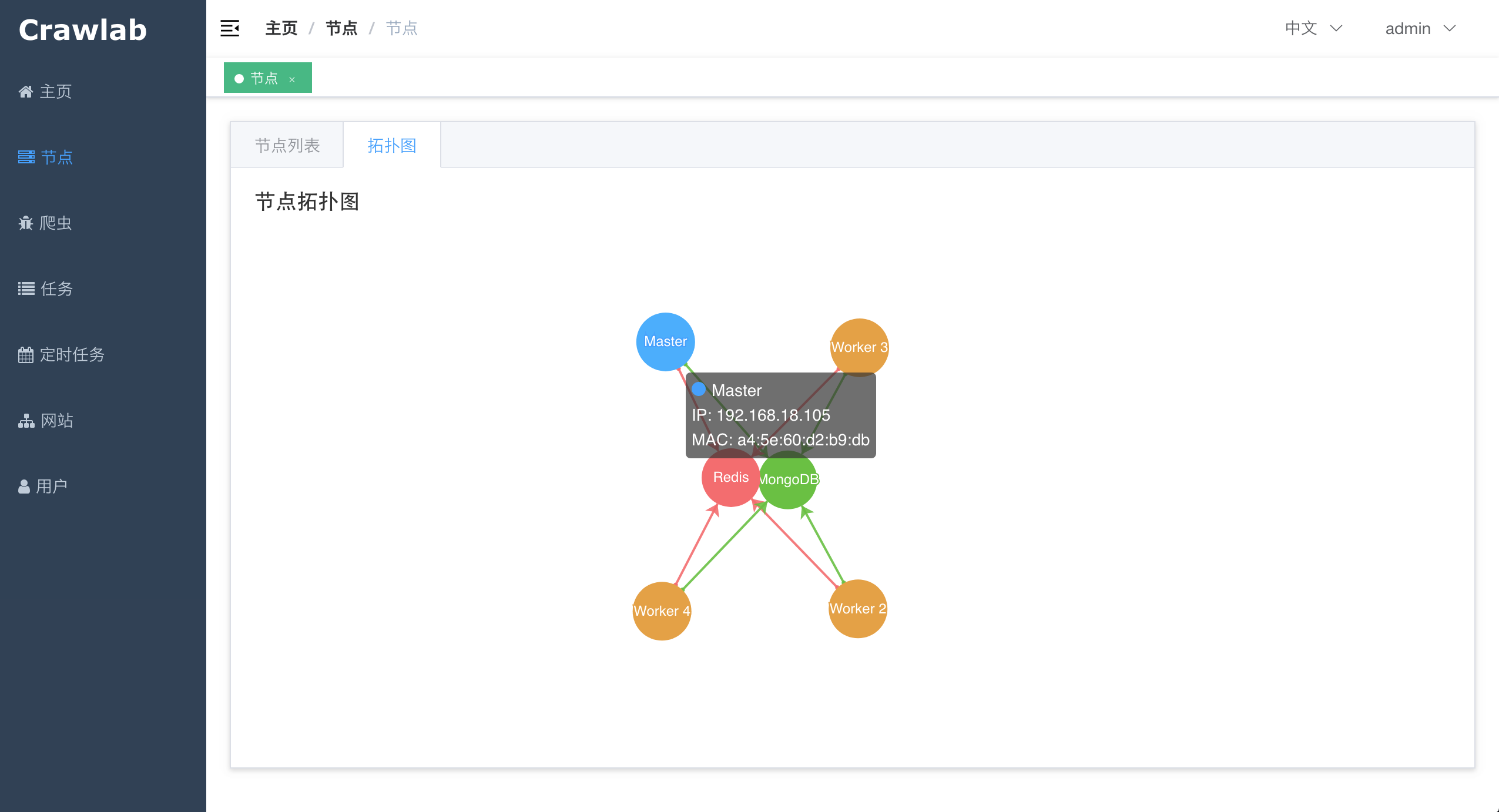

+- **Node Network Graph**: Visualization of node topology.

+- **Node System Info**: View system info including OS, CPUs and executables.

+- **Node Monitoring Enhancement**: Nodes are monitored and registered through Redis.

+- **File Management**: Edit spider files online, with code highlighting.

+- **Login/Register/User Management**: Require users to log in to use Crawlab; allow user registration and user management, with some role-based authorization.

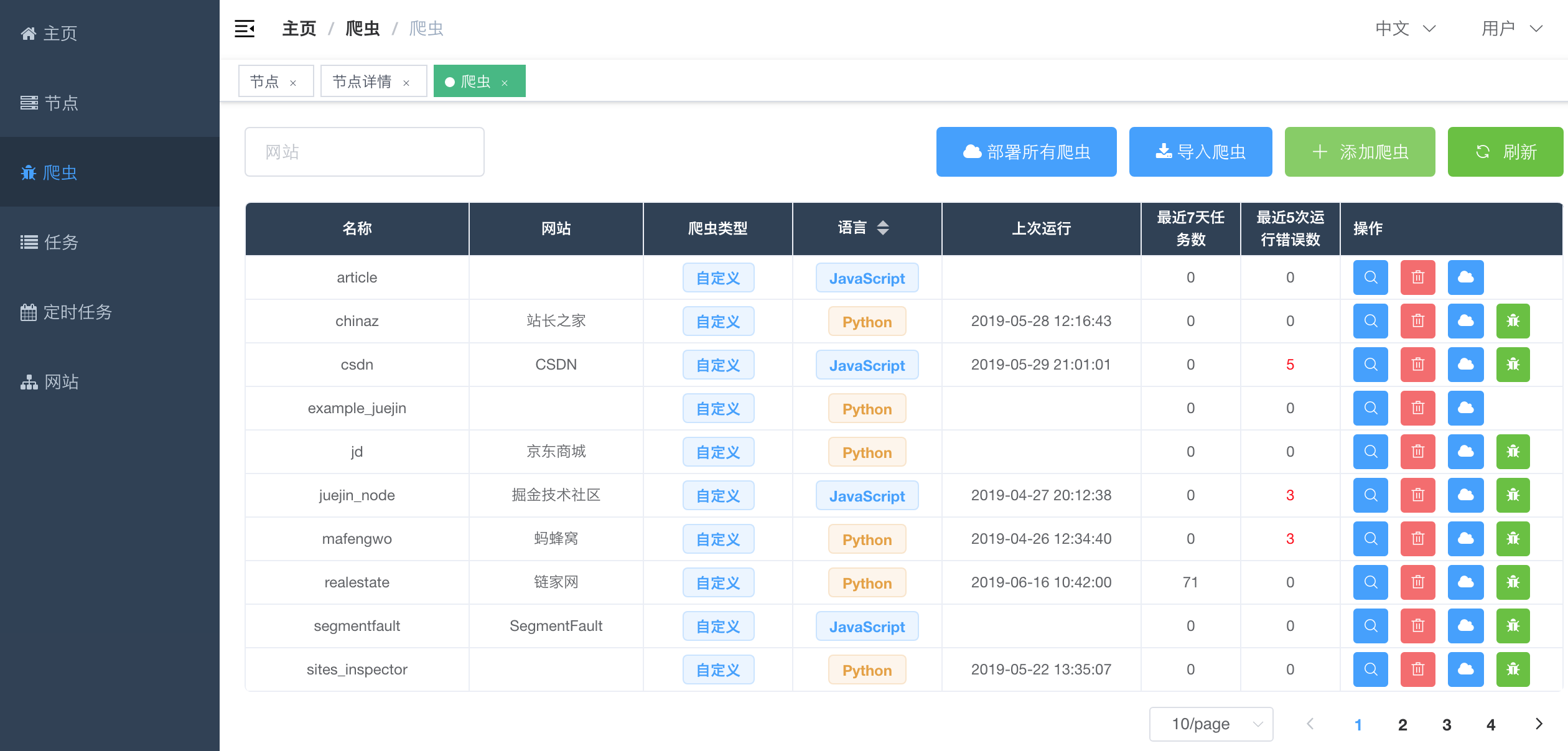

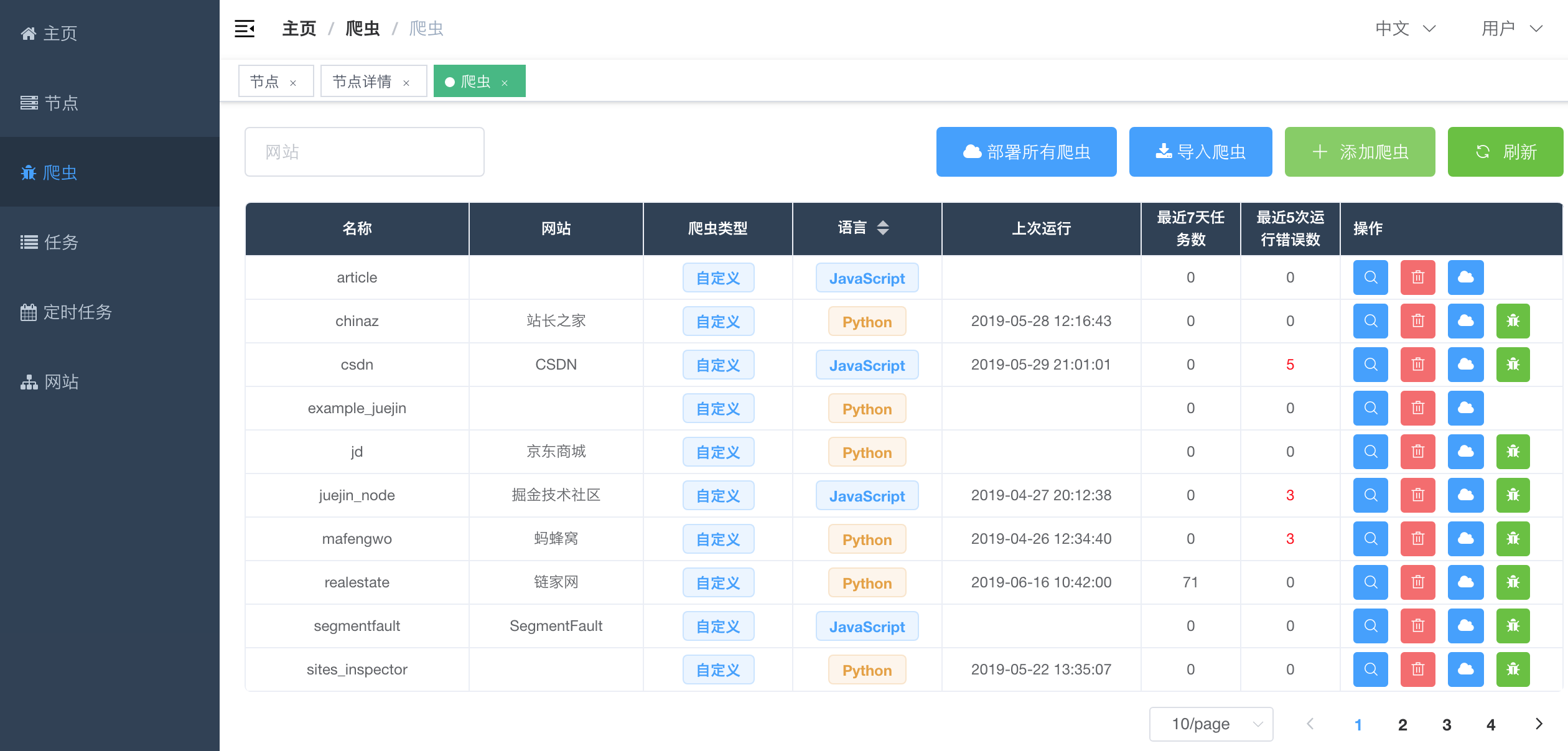

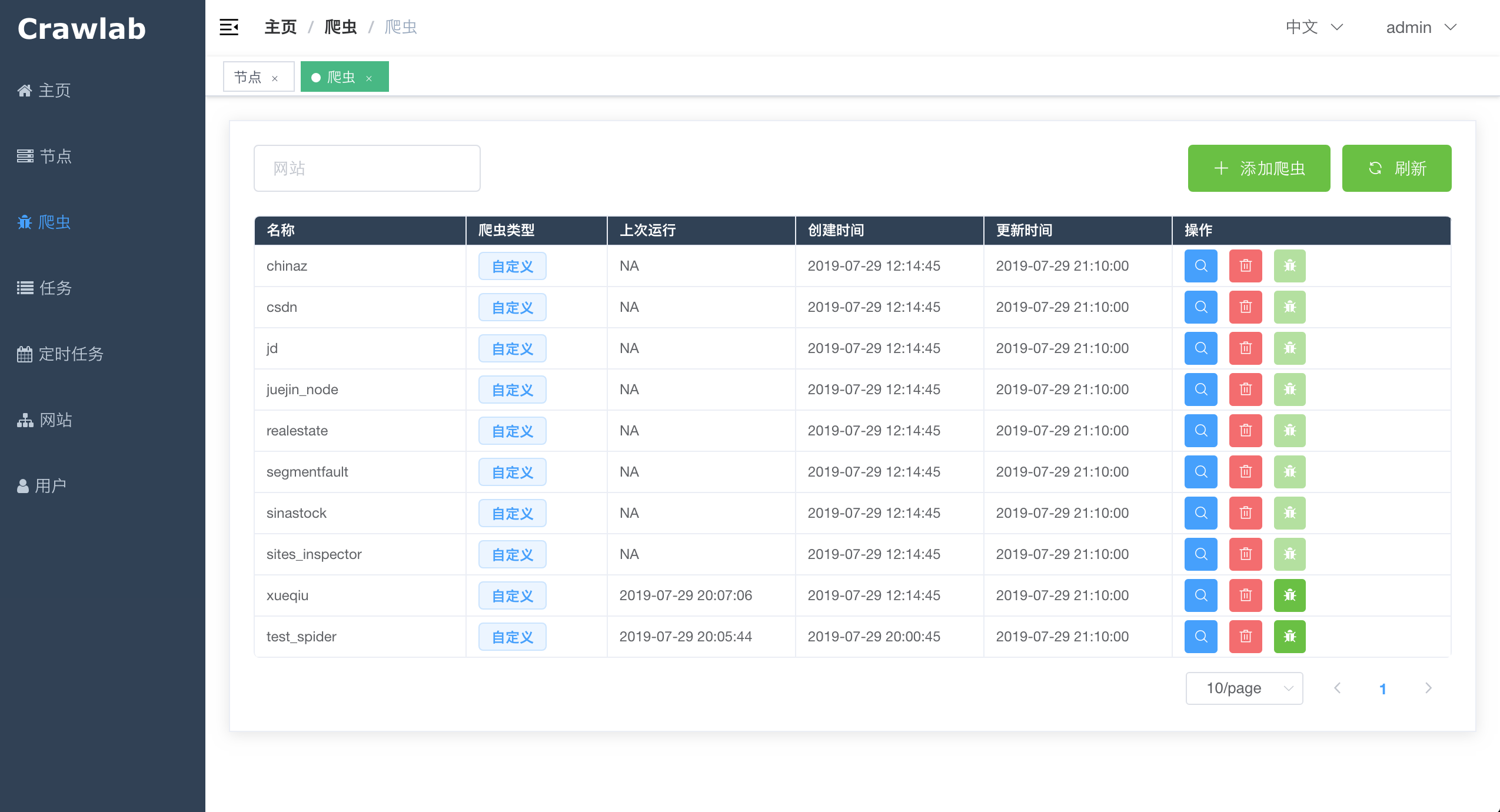

+- **Automatic Spider Deployment**: Spiders are deployed/synchronized to all online nodes automatically.

+- **Smaller Docker Image**: Reduced the Docker image size from 1.3G to \~700M by applying a multi-stage build.

+

+### Bug Fixes

+- **Node Status**. Node status did not change even after the node went offline. [#87](https://github.com/tikazyq/crawlab/issues/87)

+- **Spider Deployment Error**. Fixed through Automatic Spider Deployment [#83](https://github.com/tikazyq/crawlab/issues/83)

+- **Node not showing**. Nodes were not shown as online. [#81](https://github.com/tikazyq/crawlab/issues/81)

+- **Cron Job not working**. Fixed through new Golang backend [#64](https://github.com/tikazyq/crawlab/issues/64)

+- **Flower Error**. Fixed through new Golang backend [#57](https://github.com/tikazyq/crawlab/issues/57)

+

+# 0.2.4 (2019-07-07)

### Features / Enhancement

- **Documentation**: Better and much more detailed documentation.

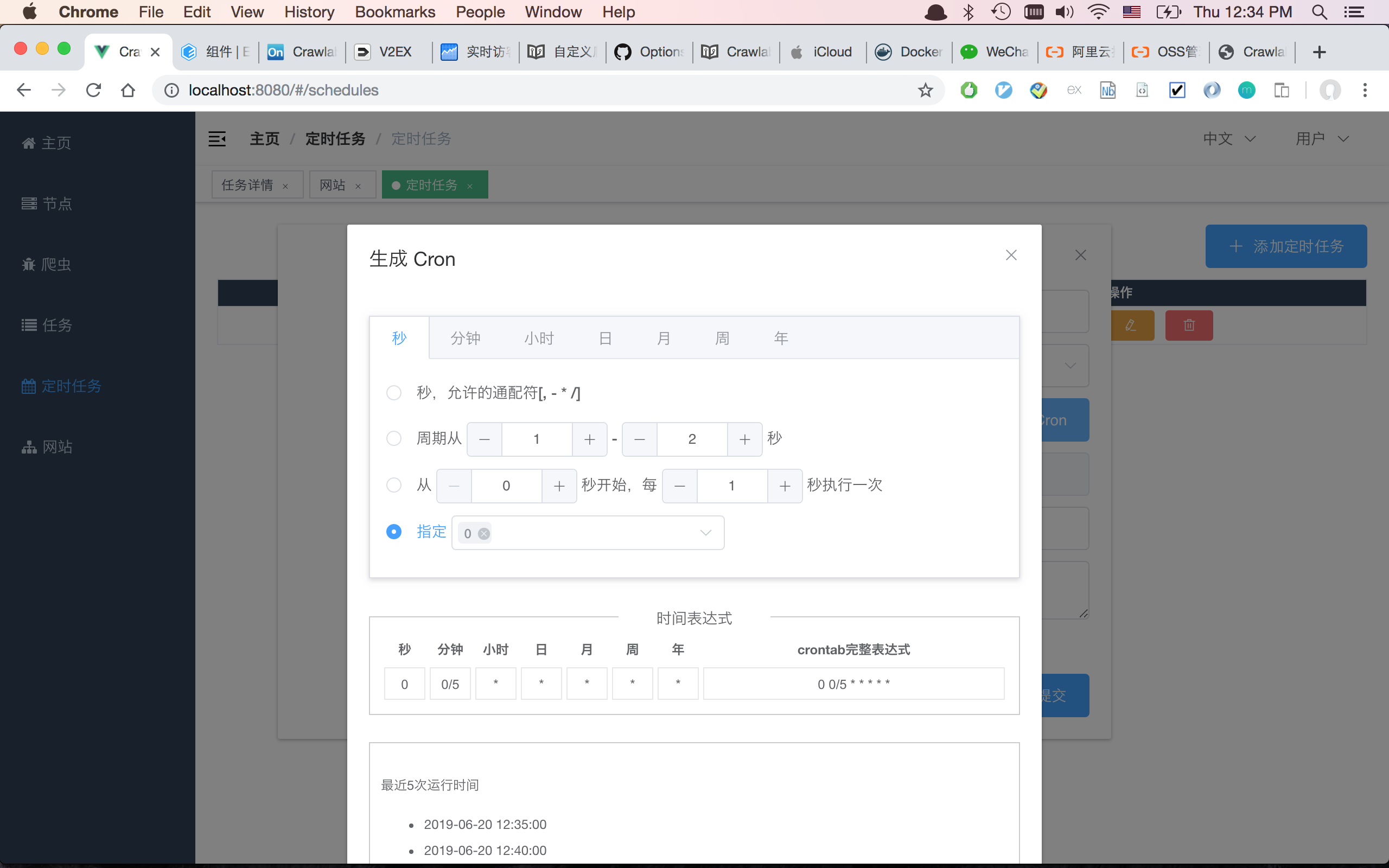

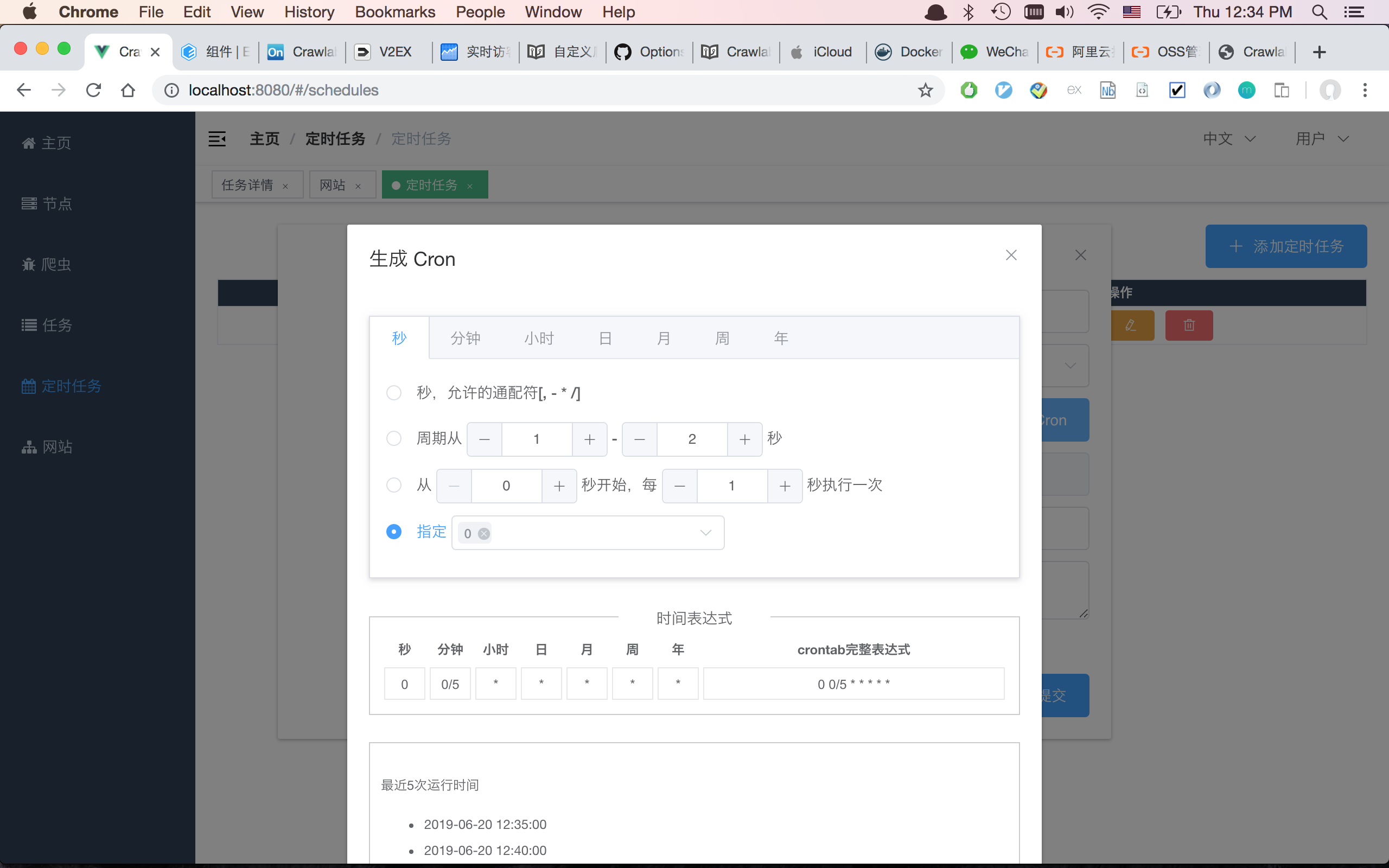

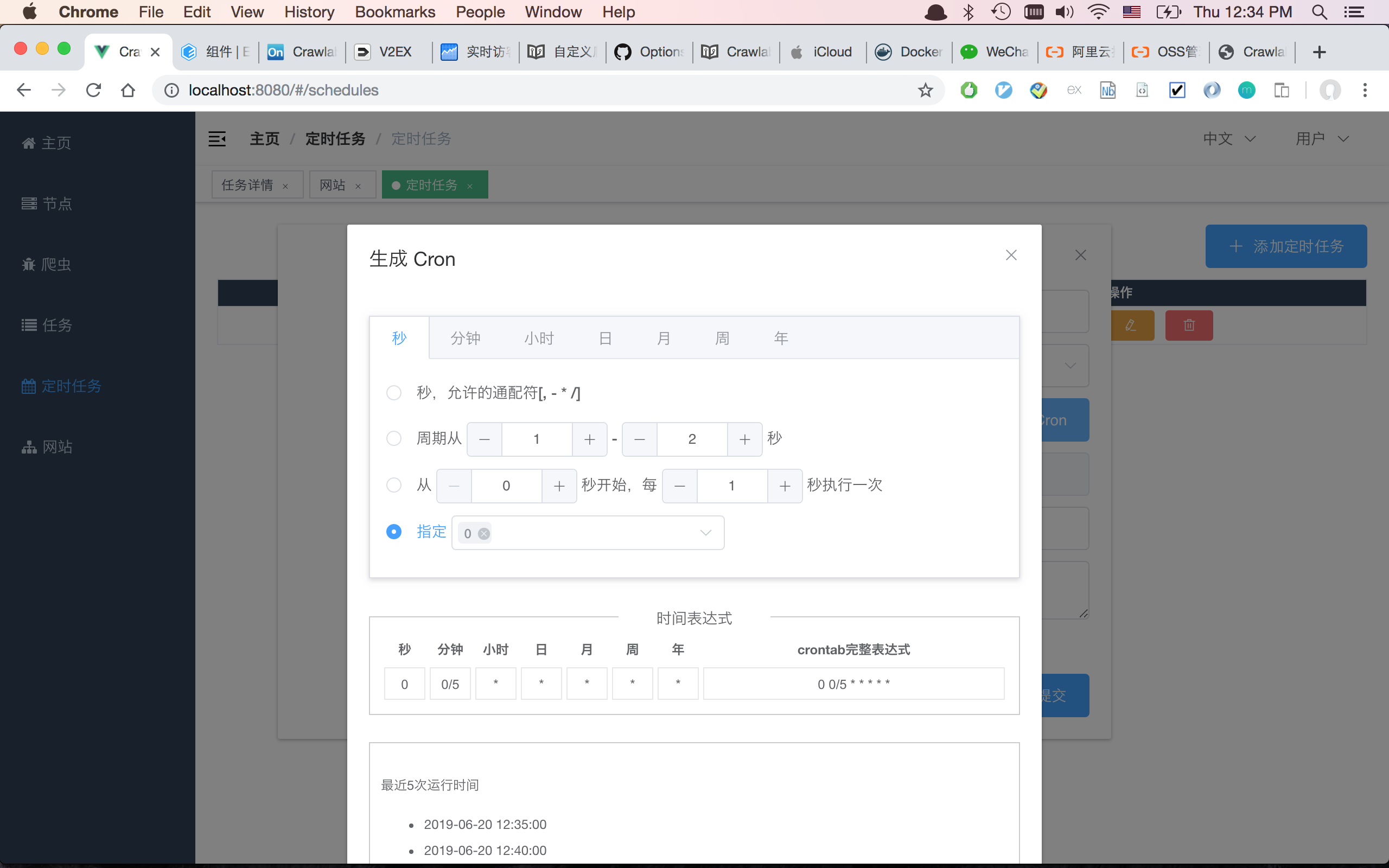

- **Better Crontab**: Build crontab expressions through the crontab UI.

diff --git a/README-zh.md b/README-zh.md

index 301b0de0..4dc87800 100644

--- a/README-zh.md

+++ b/README-zh.md

@@ -6,50 +6,101 @@

-中文 | [English](https://github.com/tikazyq/crawlab/blob/master/README.md)

+中文 | [English](https://github.com/tikazyq/crawlab)

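A Golang-based distributed web crawler management platform, supporting multiple programming languages including Python, NodeJS, Go, Java and PHP, as well as multiple crawler frameworks.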

[View Demo](http://114.67.75.98:8080) | [Documentation](https://tikazyq.github.io/crawlab-docs)

-## Requirements

-- Go 1.12+

-- Node.js 8.12+

-- MongoDB 3.6+

-- Redis

-

## Installation

Three methods:

1. [Docker](https://tikazyq.github.io/crawlab/Installation/Docker.md) (recommended)

-2. [Direct Deploy](https://tikazyq.github.io/crawlab/Installation/Direct.md)

-3. [Preview Mode](https://tikazyq.github.io/crawlab/Installation/Direct.md) (quick start)

+2. [Direct Deploy](https://tikazyq.github.io/crawlab/Installation/Direct.md) (to understand the internals)

+

+### Requirements (Docker)

+- Docker 18.03+

+- Redis

+- MongoDB 3.6+

+

+### Requirements (Direct Deploy)

+- Go 1.12+

+- Node 8.12+

+- Redis

+- MongoDB 3.6+

+

+## Run

+

+### Docker

+

+Example of running a master node. `192.168.99.1` is the host machine's IP address in the Docker Machine network. `192.168.99.100` is the IP address of the Docker master node.

+

+```bash

+docker run -d --rm --name crawlab \

+ -e CRAWLAB_REDIS_ADDRESS=192.168.99.1:6379 \

+ -e CRAWLAB_MONGO_HOST=192.168.99.1 \

+ -e CRAWLAB_SERVER_MASTER=Y \

+ -e CRAWLAB_API_ADDRESS=192.168.99.100:8000 \

+ -e CRAWLAB_SPIDER_PATH=/app/spiders \

+ -p 8080:8080 \

+ -p 8000:8000 \

+ -v /var/logs/crawlab:/var/logs/crawlab \

+ tikazyq/crawlab:0.3.0

+```

+

+You can also use `docker-compose` to start everything with one command, without even having to configure the MongoDB and Redis databases.

+

+```bash

+docker-compose up

+```

+

+For details on Docker deployment, see the [documentation](https://tikazyq.github.io/crawlab/Installation/Docker.md).

+

+### Direct Deploy

+

+Please refer to the [documentation](https://tikazyq.github.io/crawlab/Installation/Direct.md).

## Screenshots

+#### Login

+

+

+

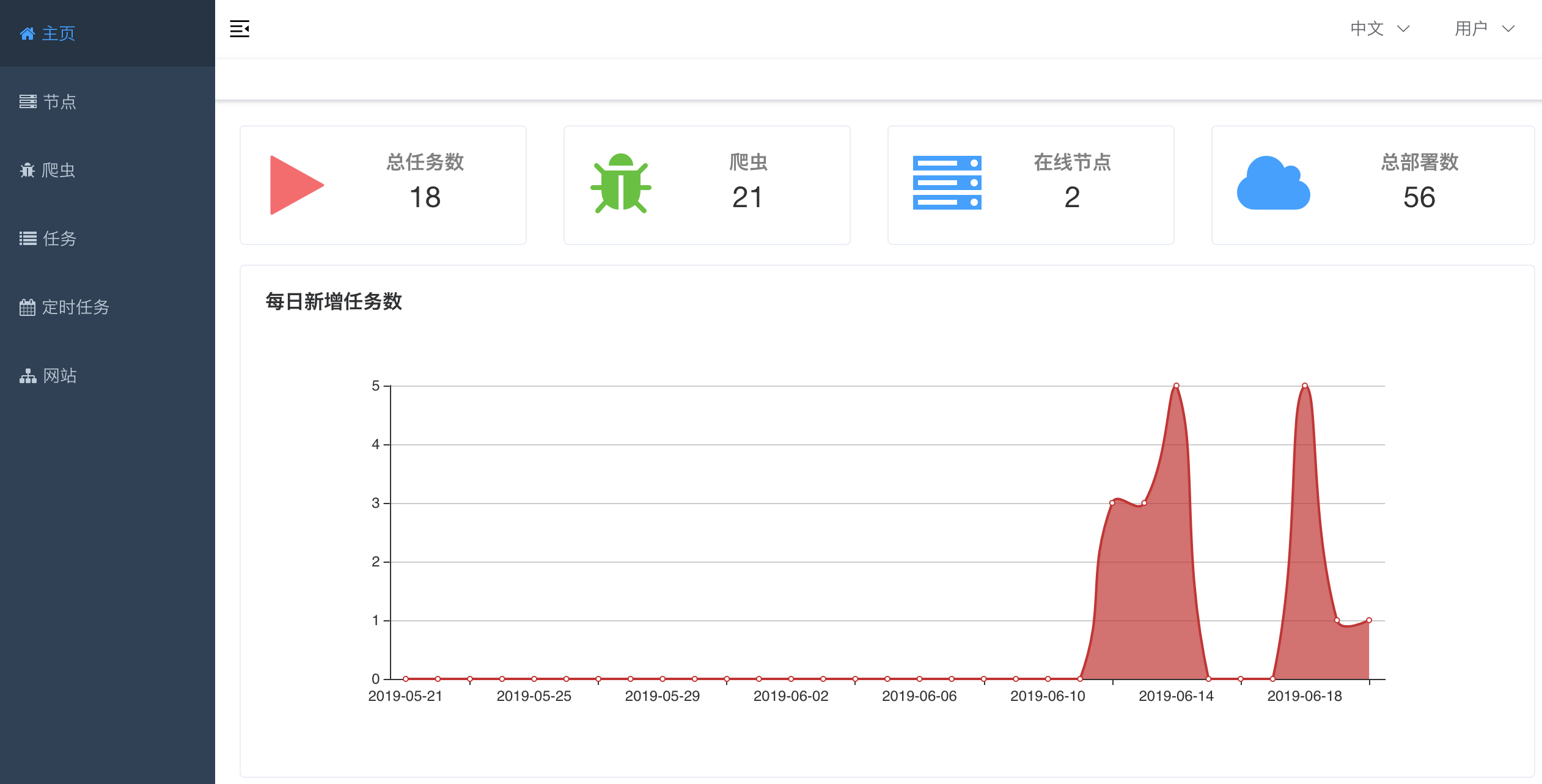

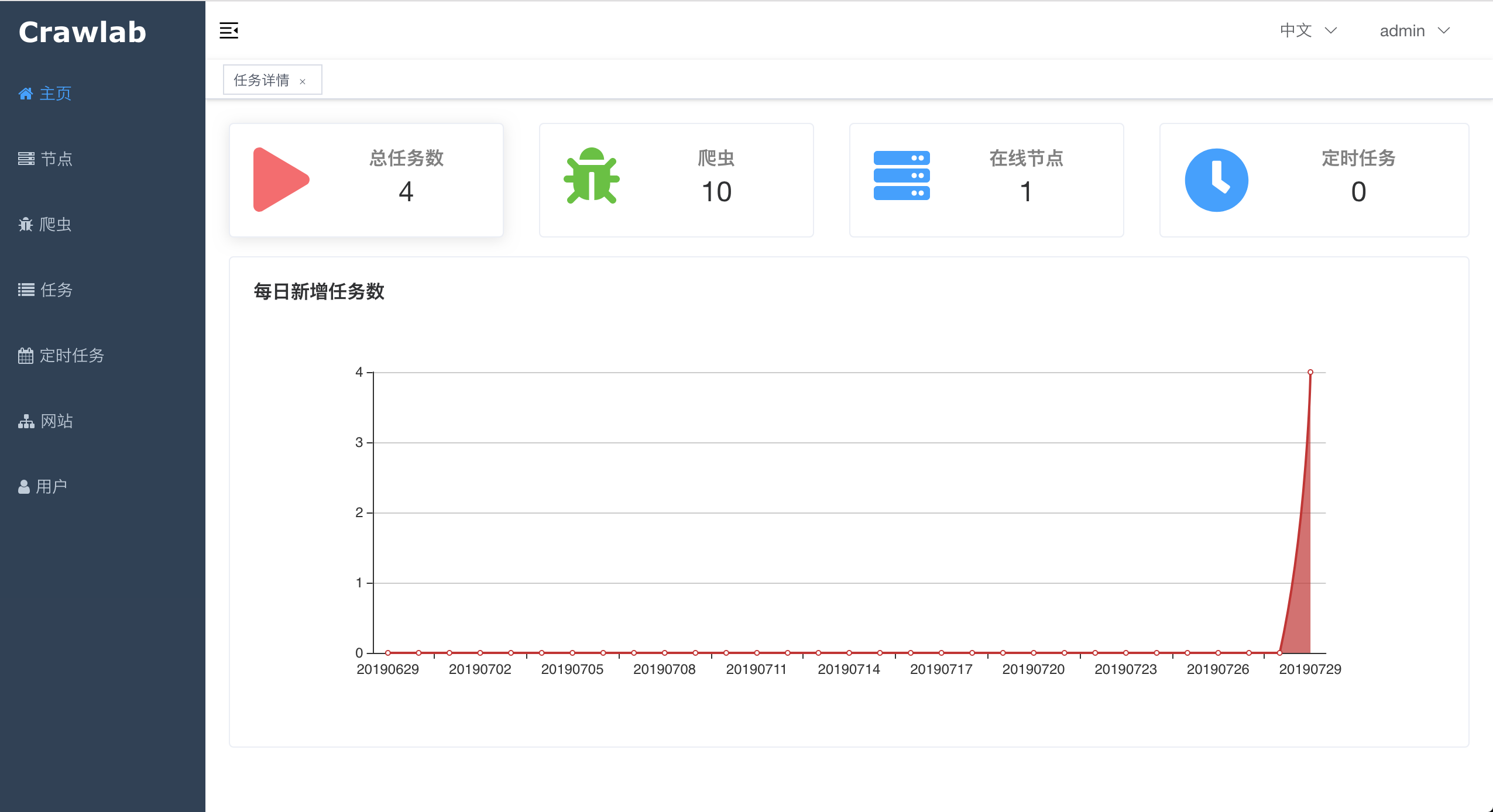

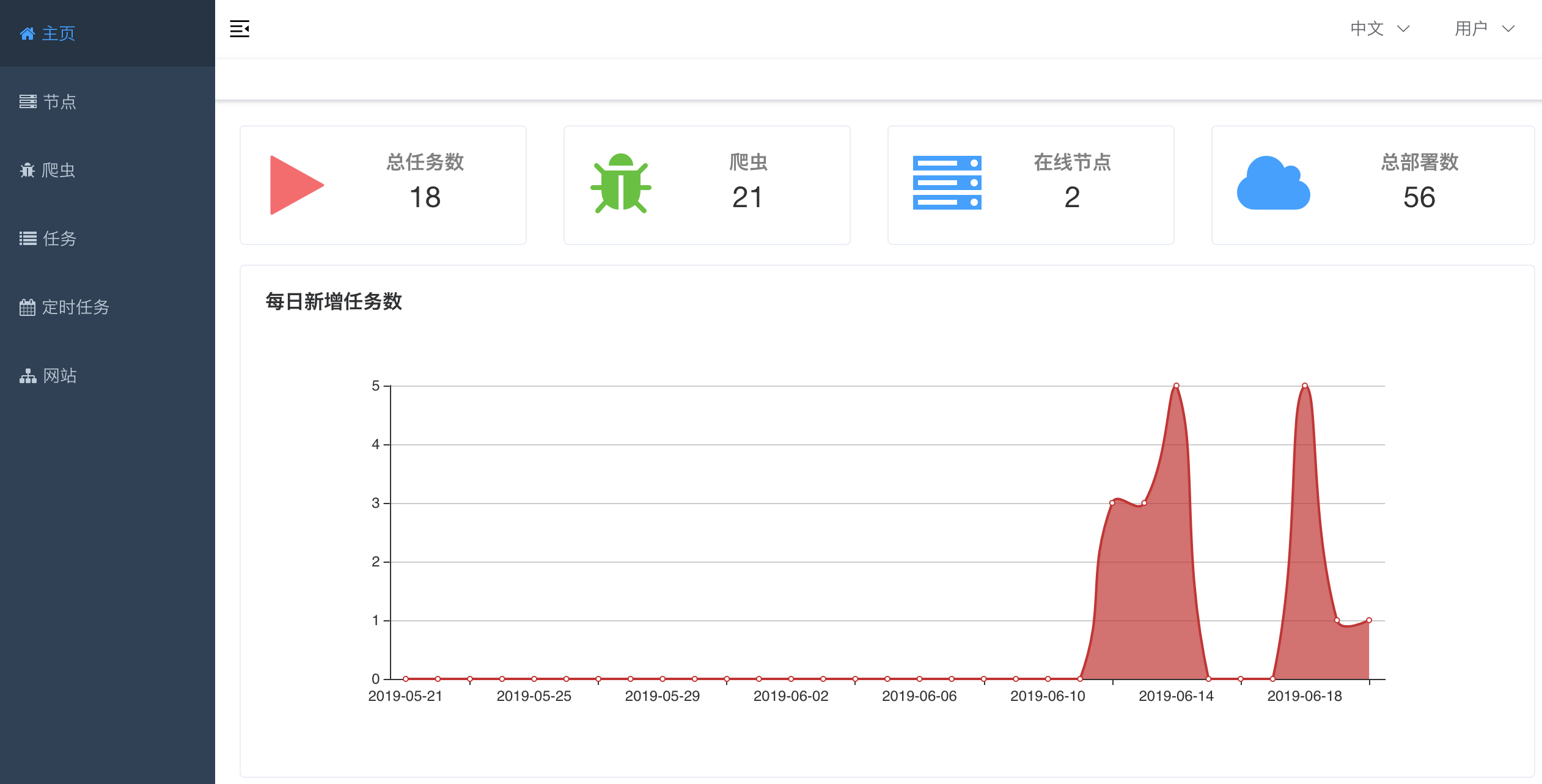

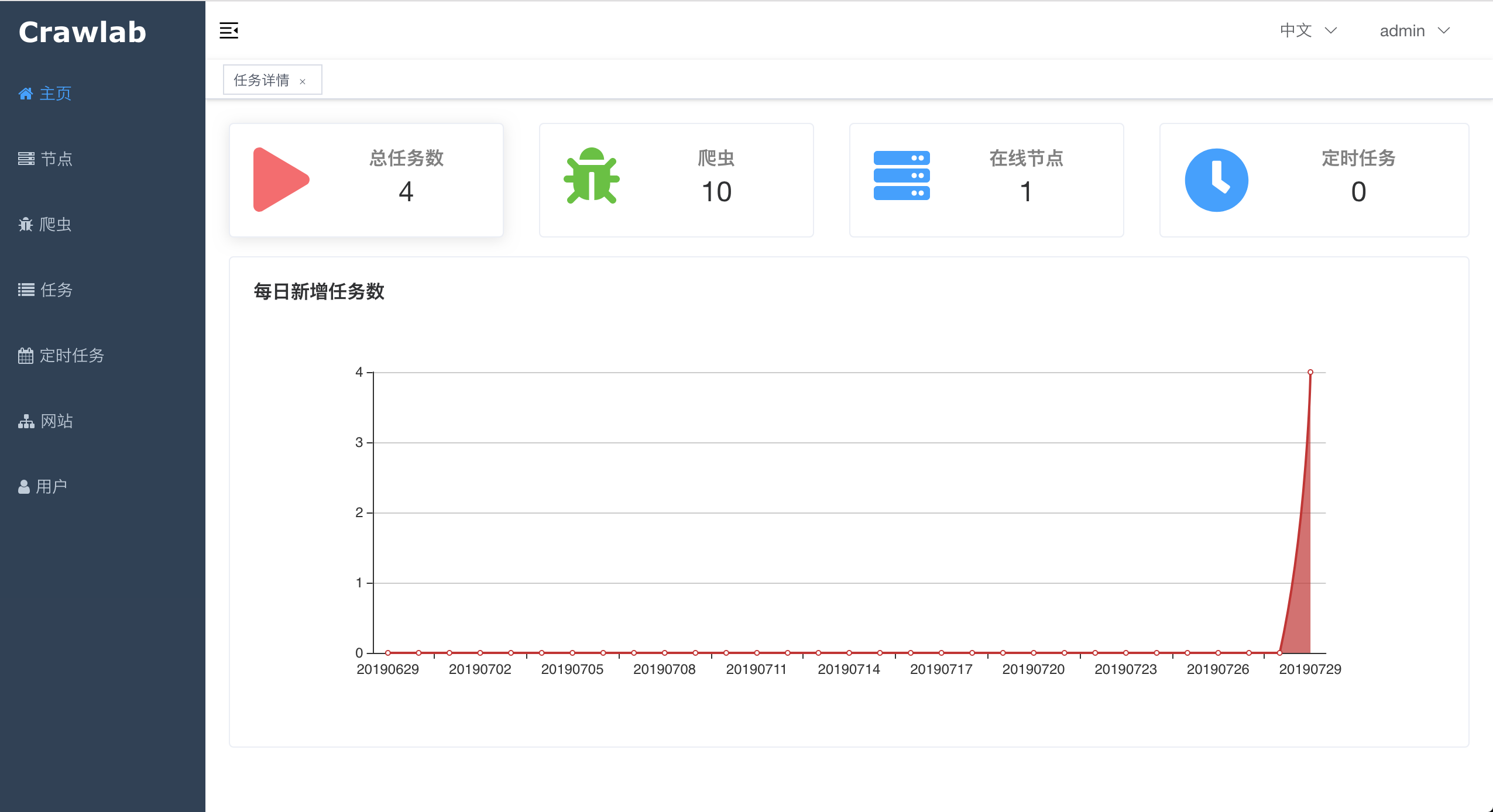

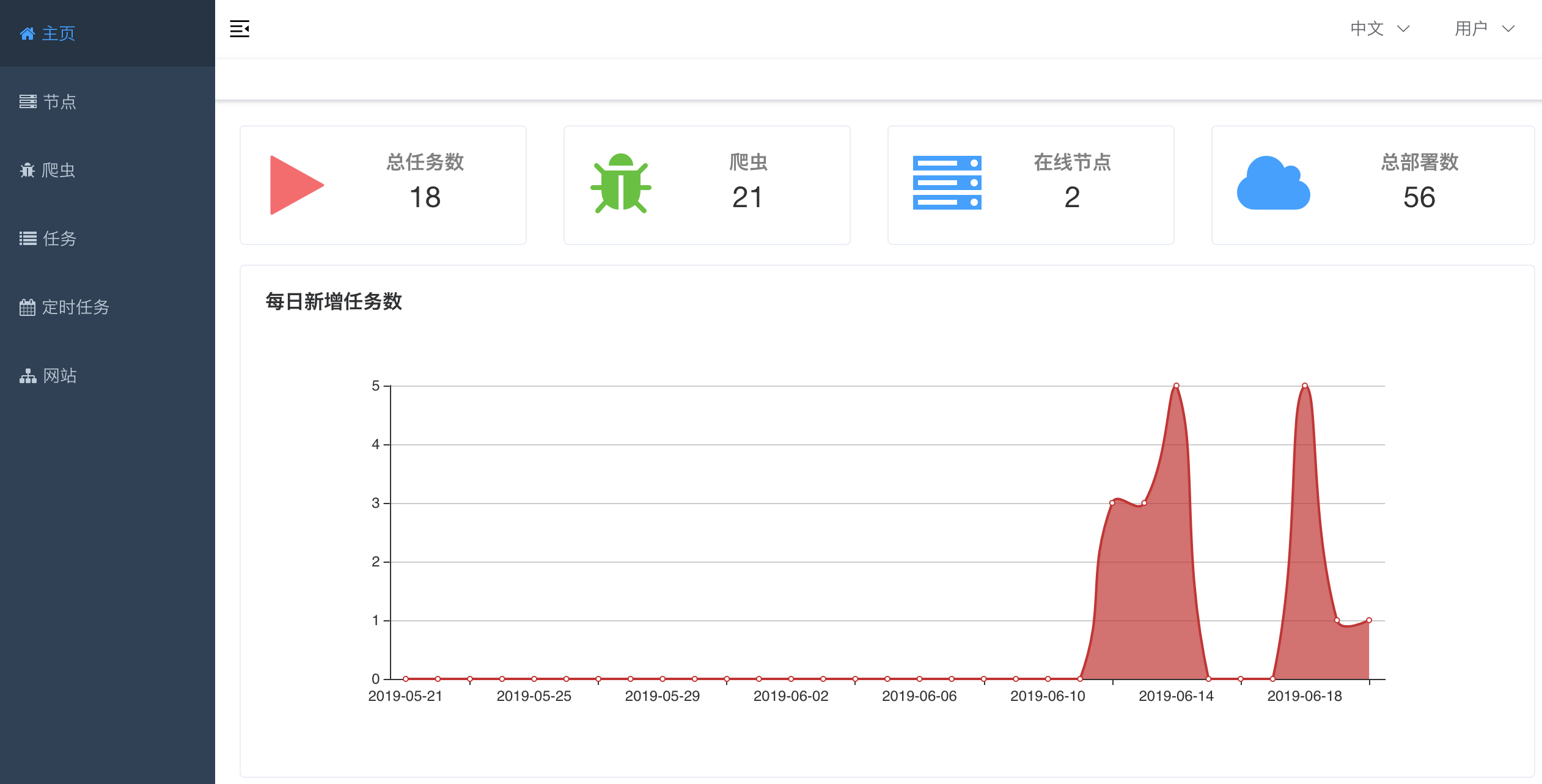

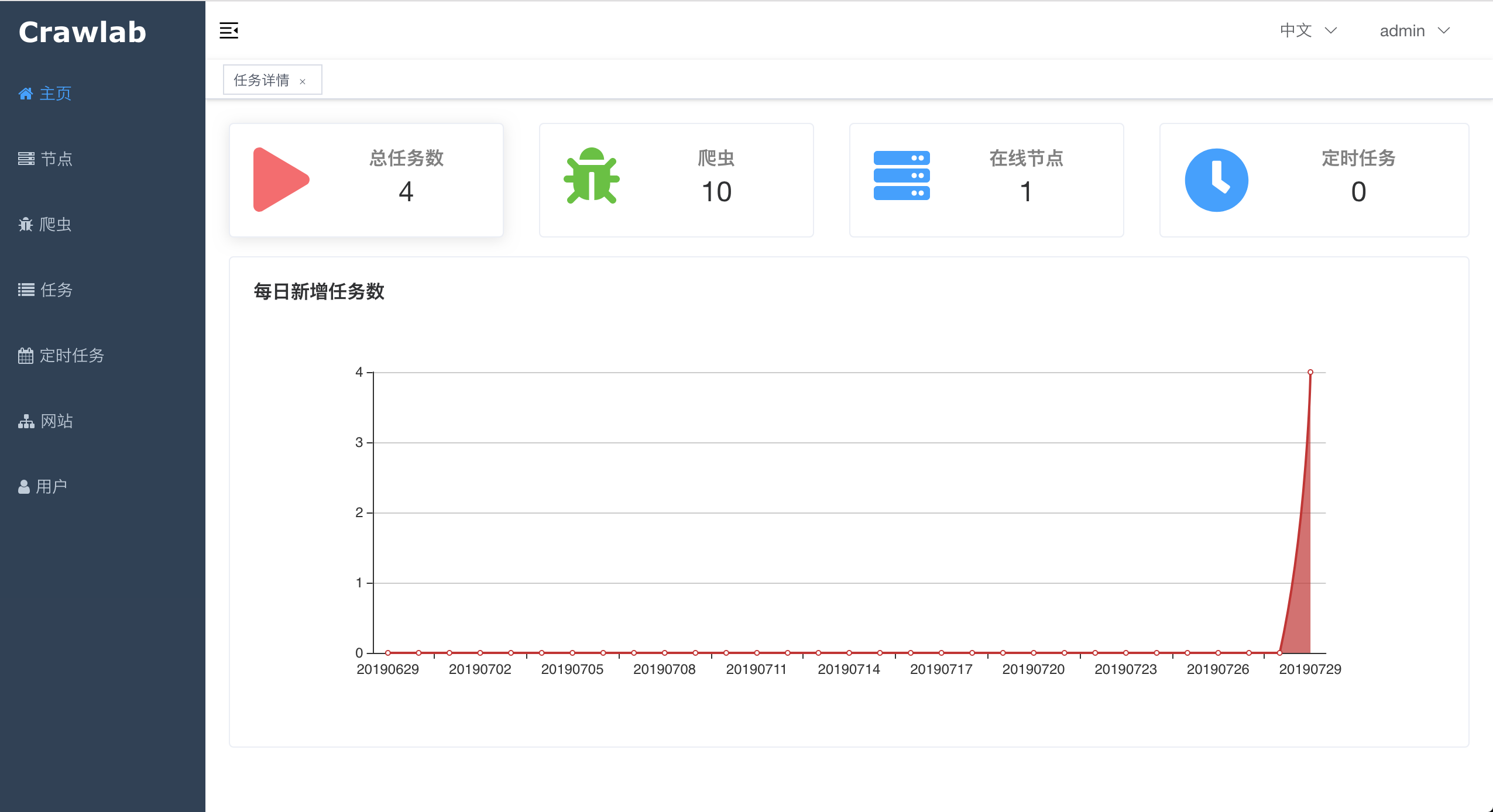

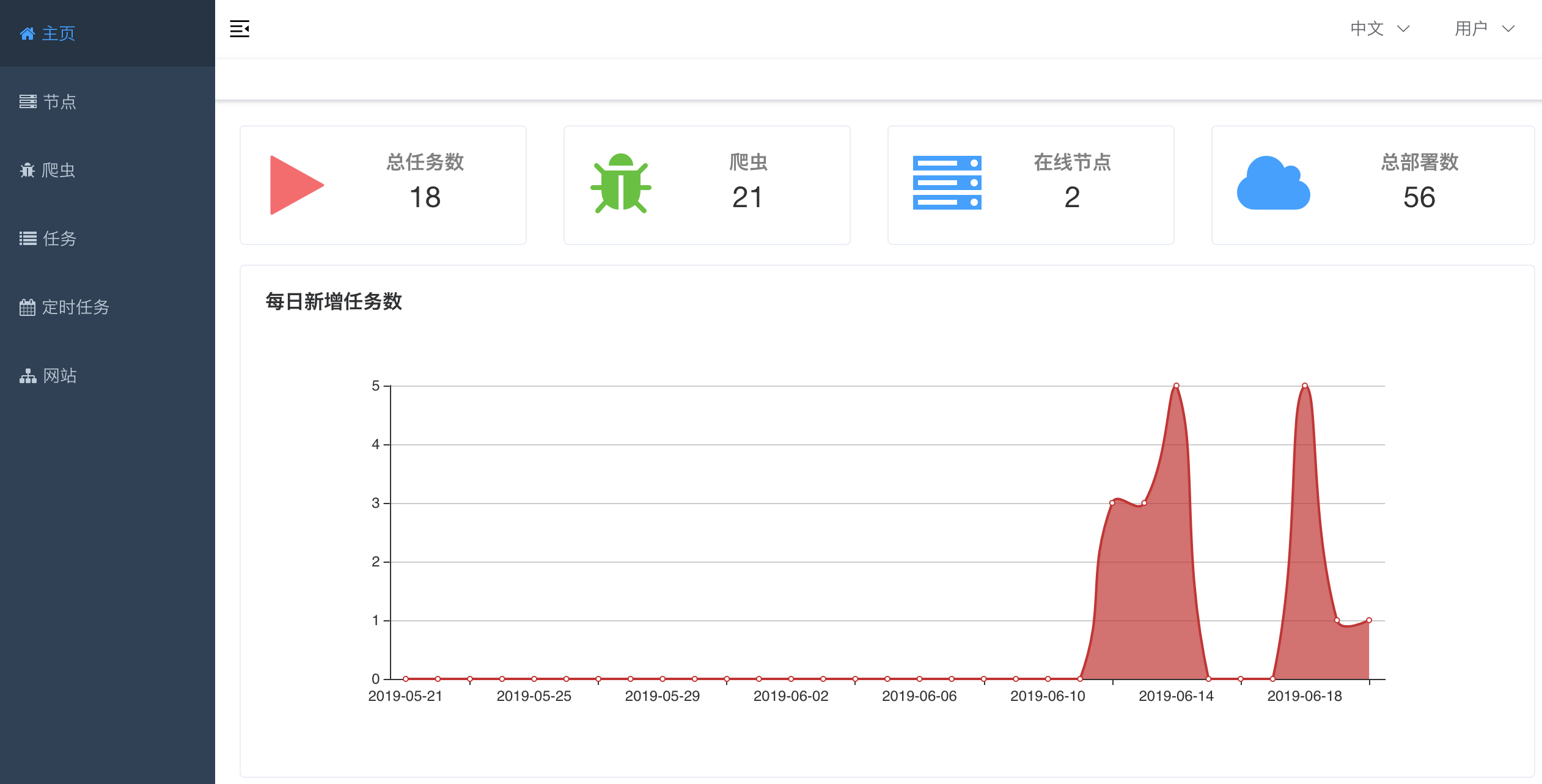

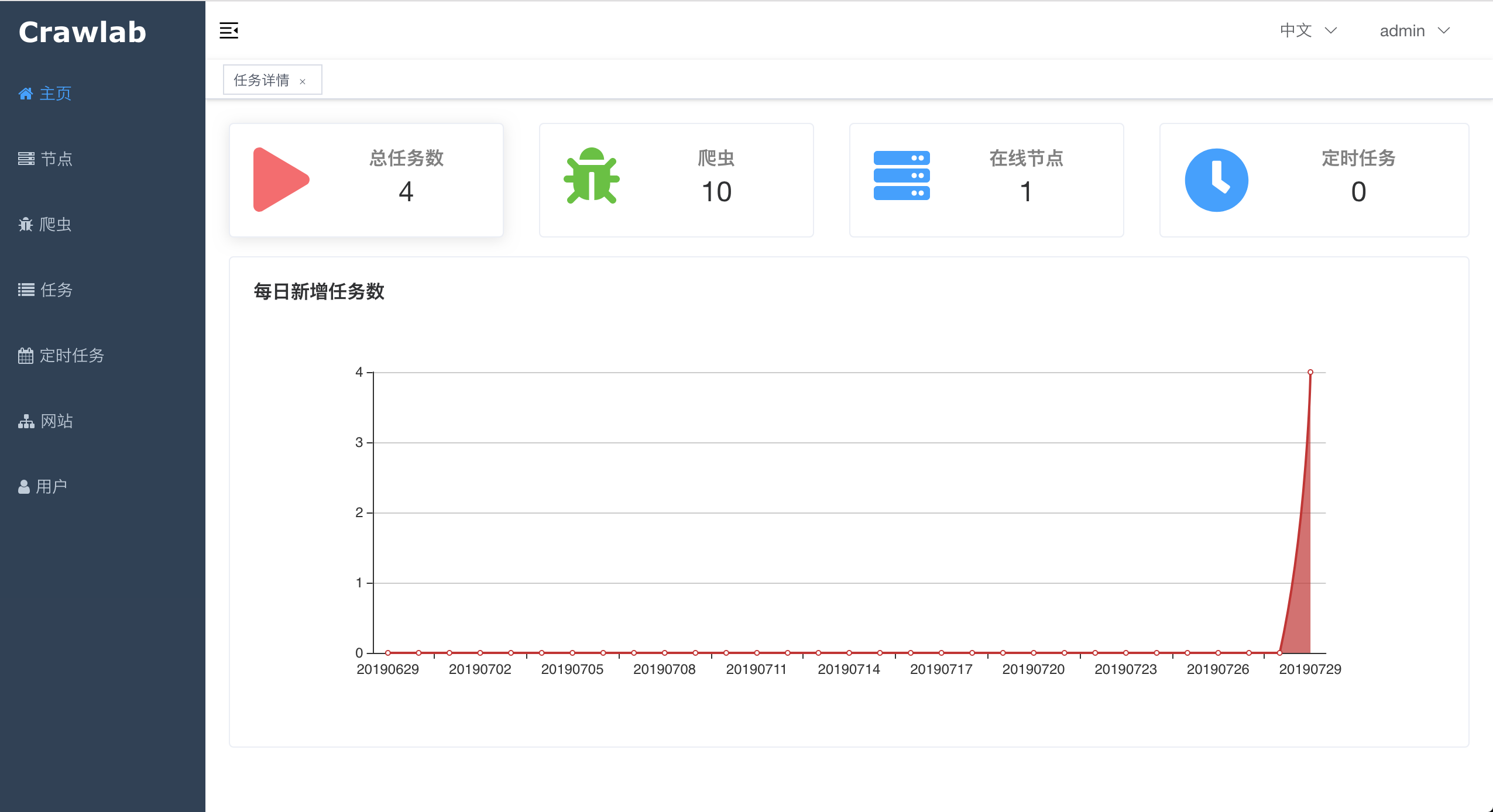

#### Home Page

-

+

+

+#### Node List

+

+

+

+#### Node Network Graph

+

+

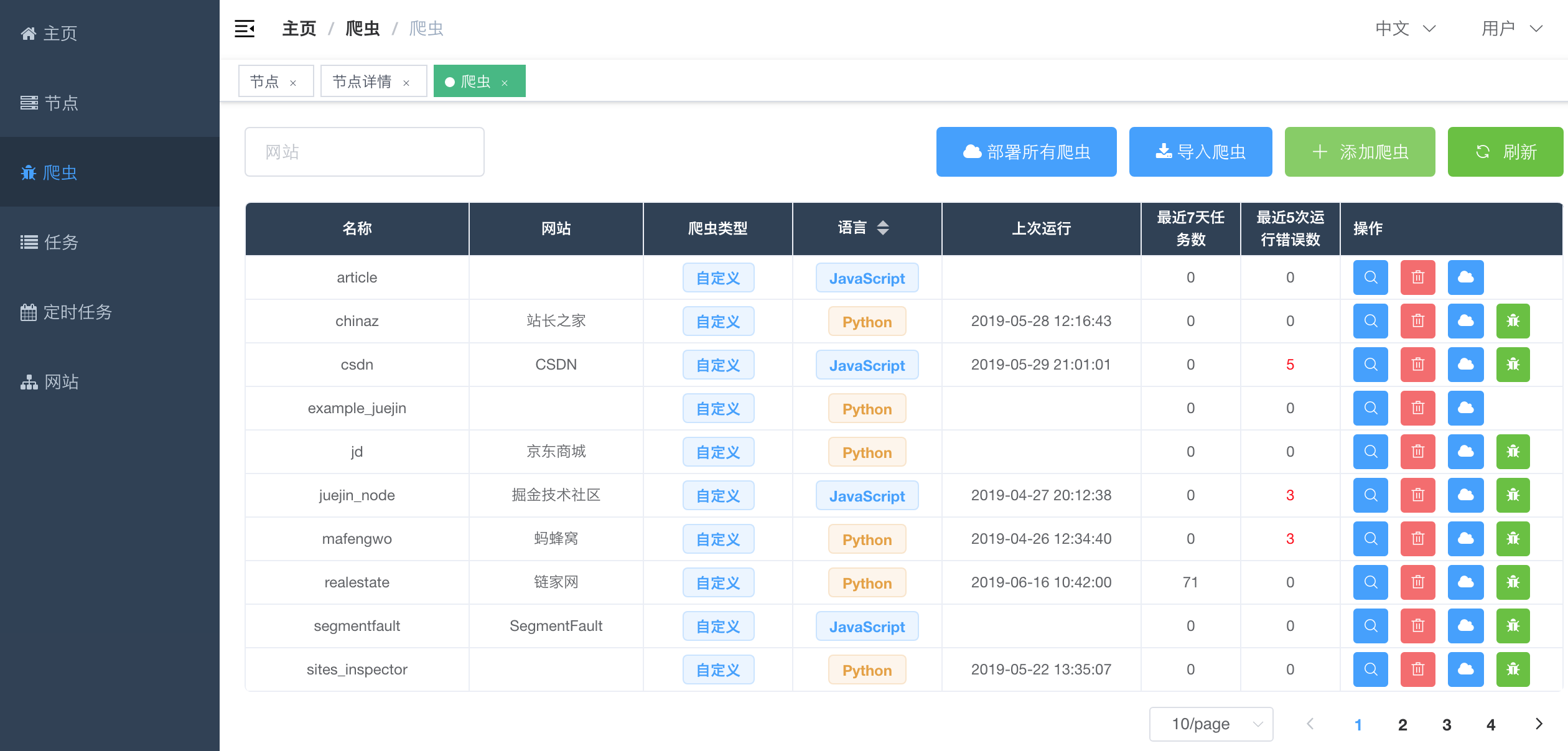

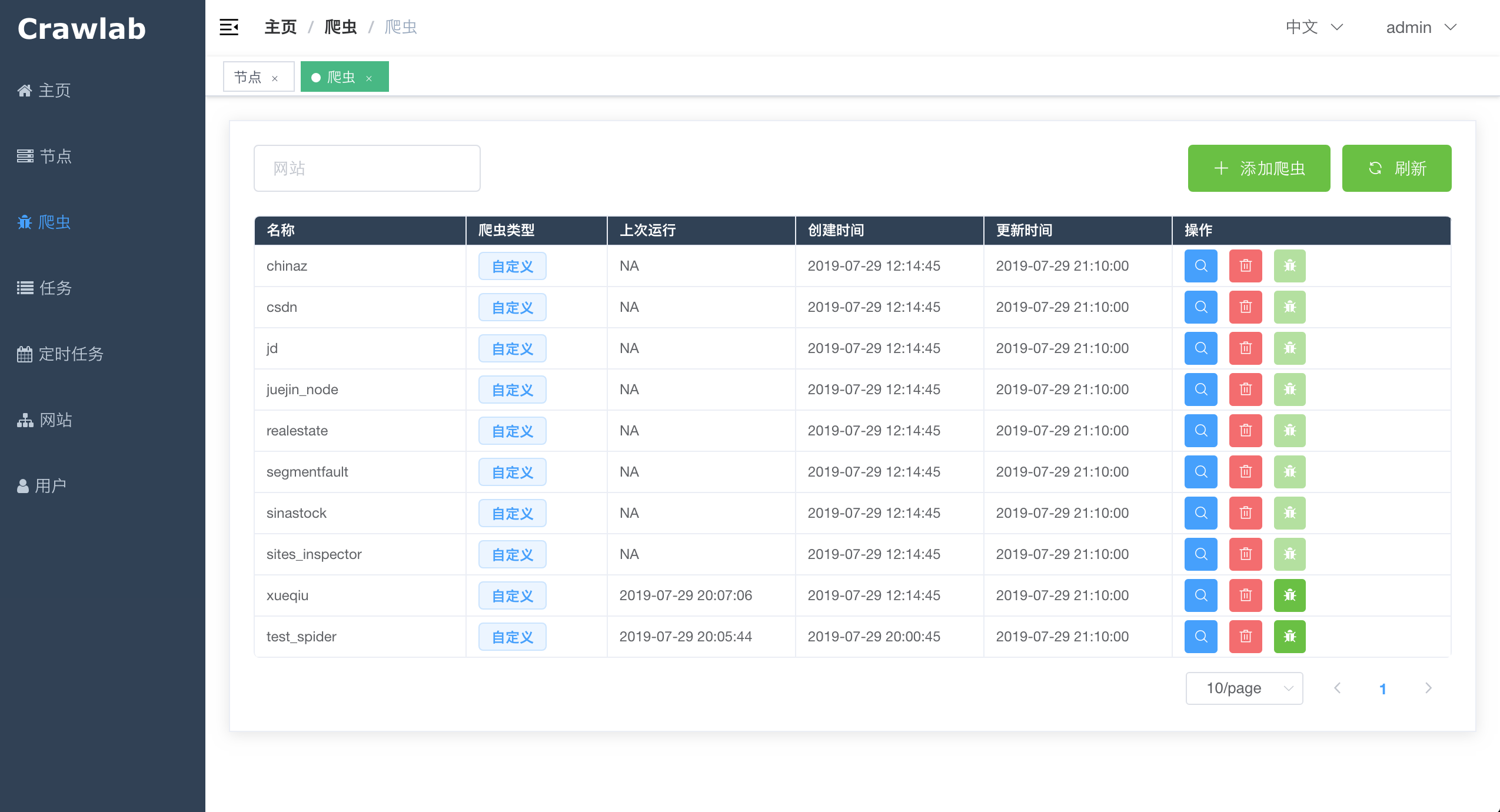

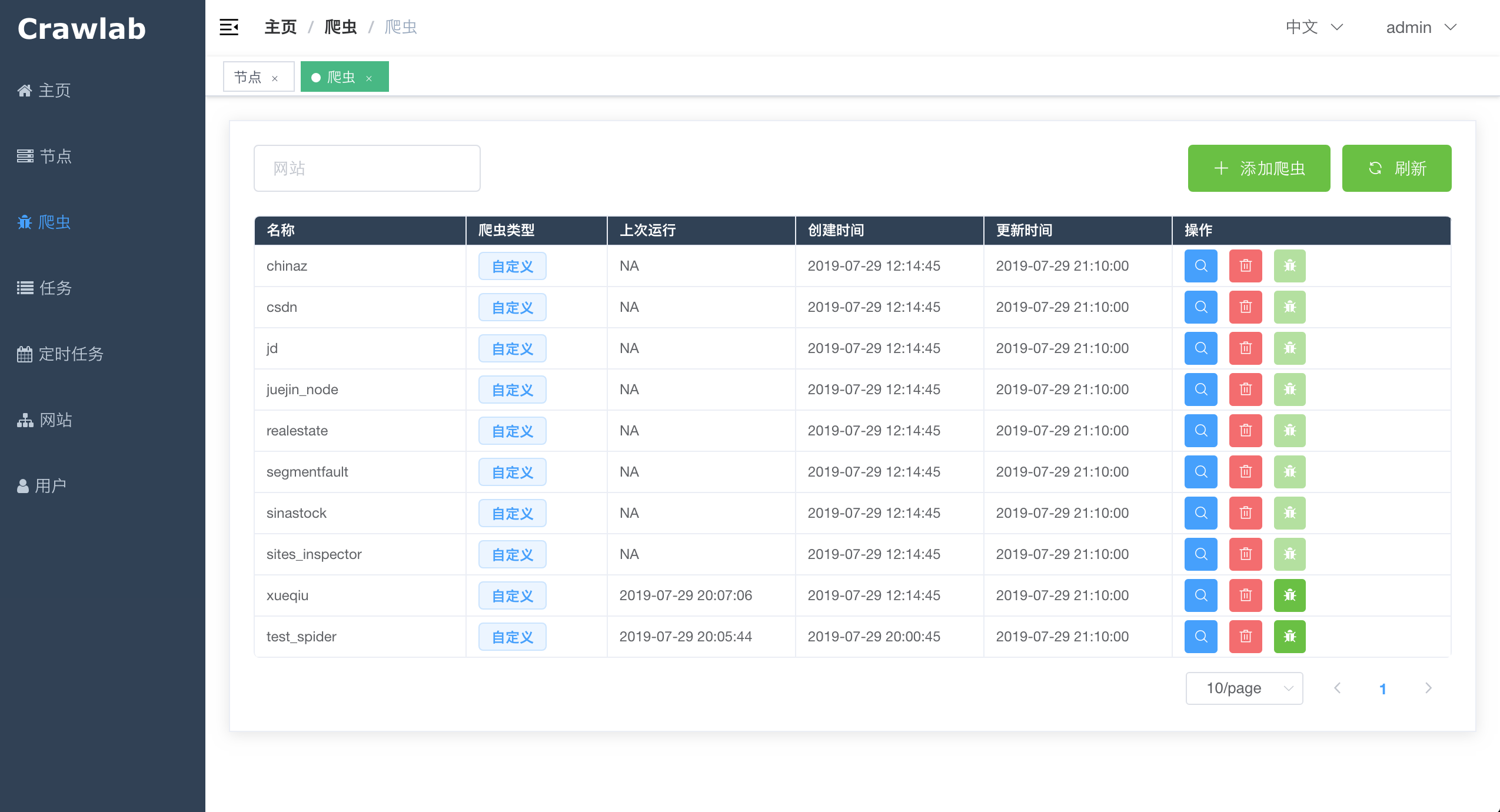

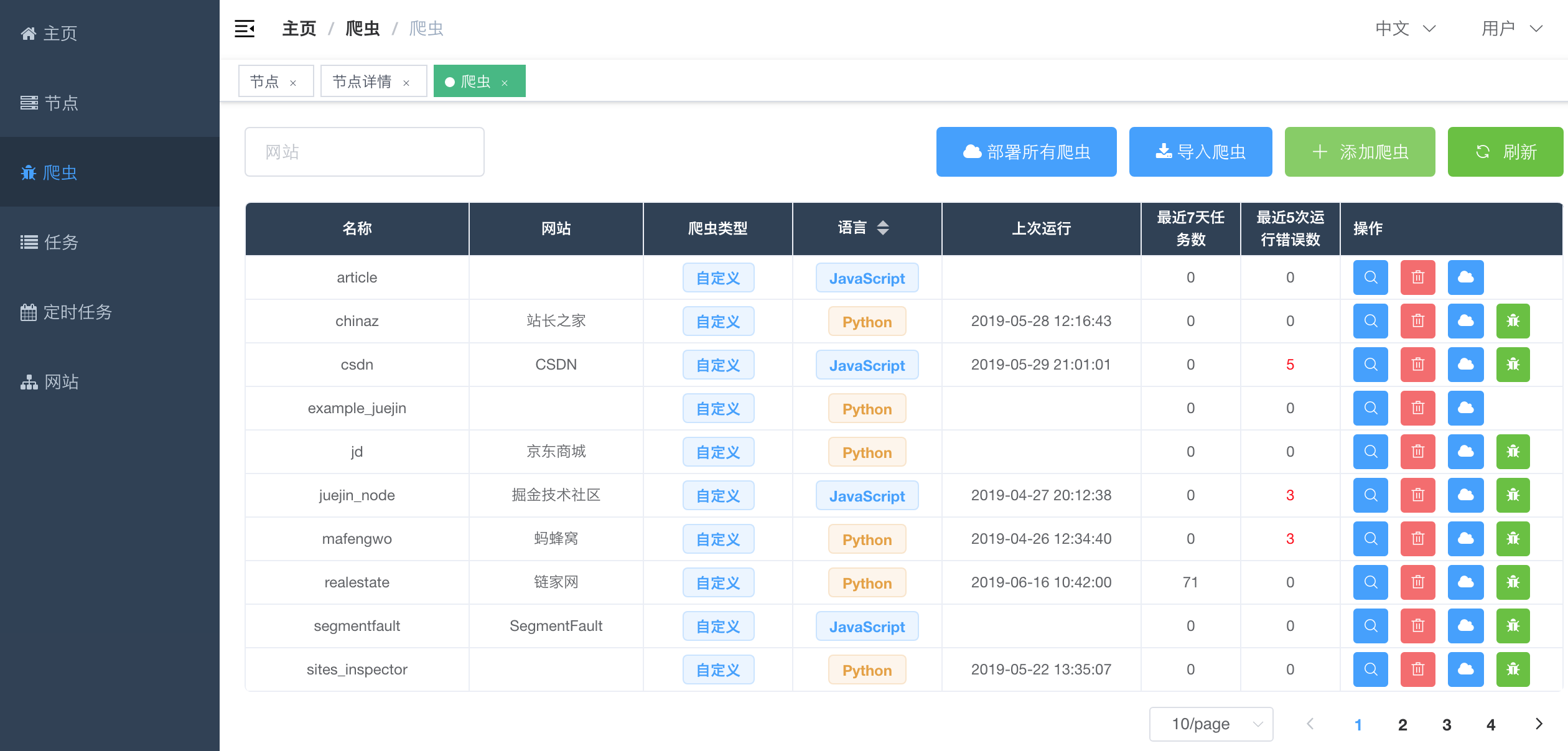

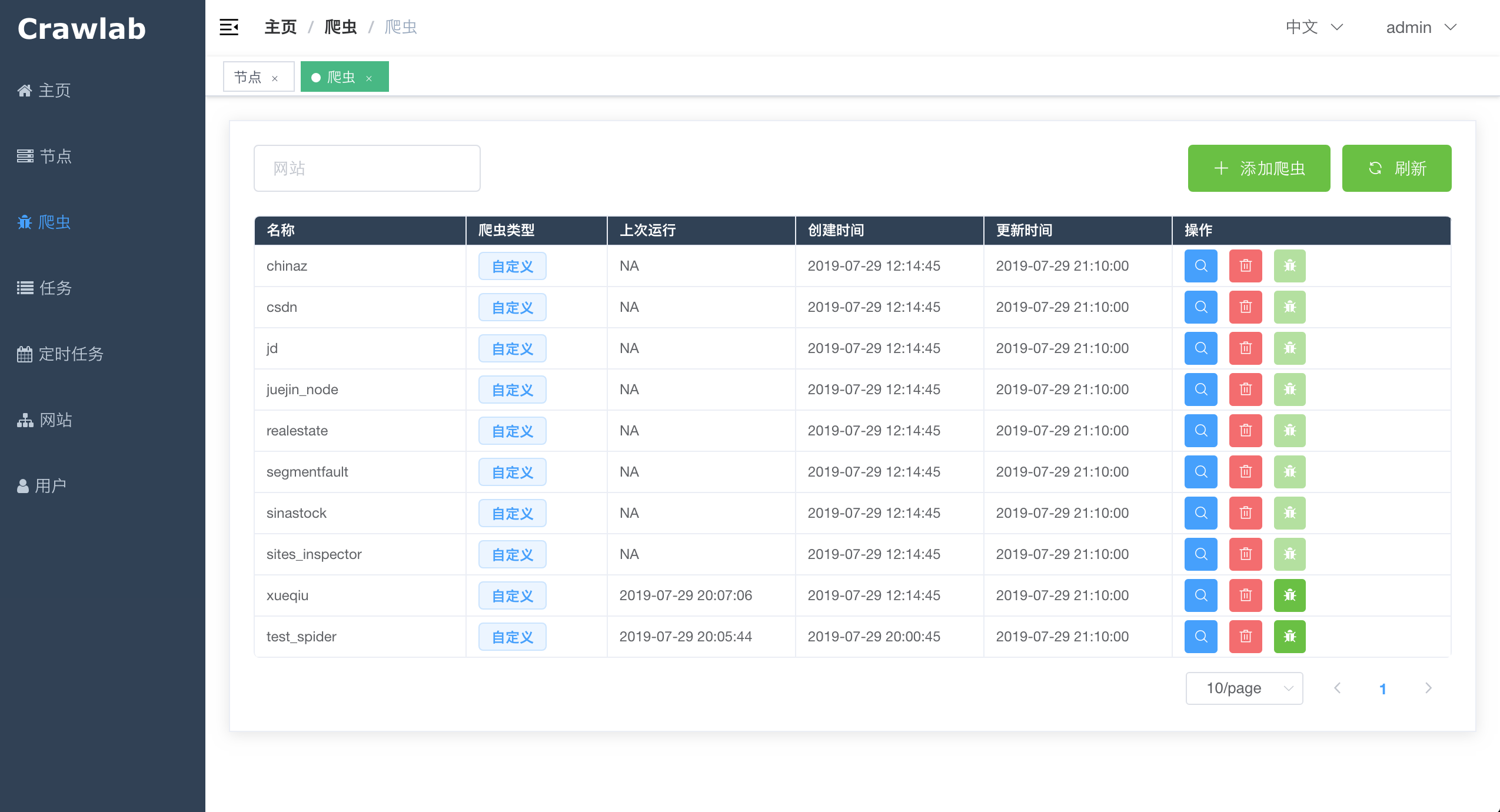

#### Spider List

-

+

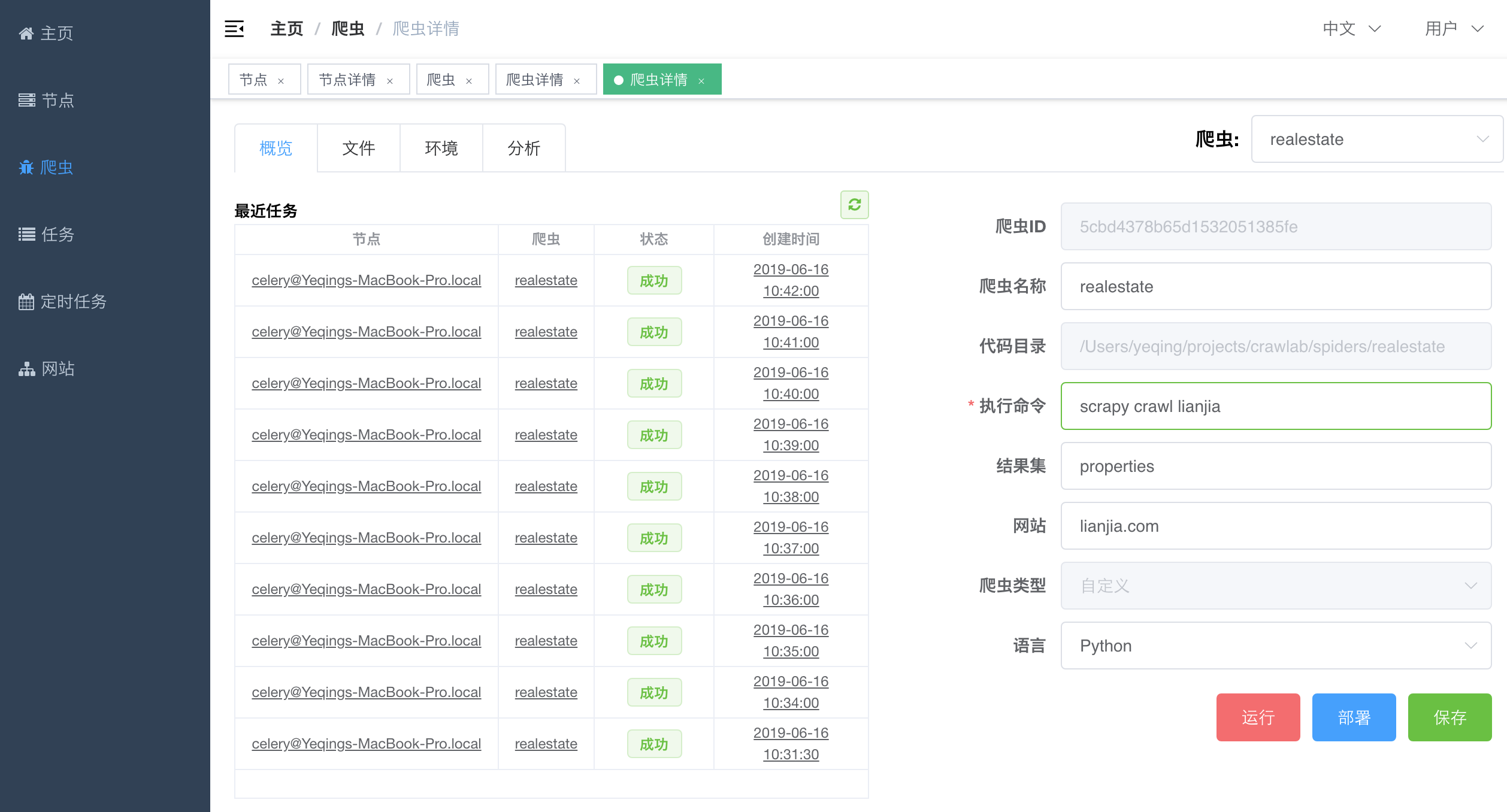

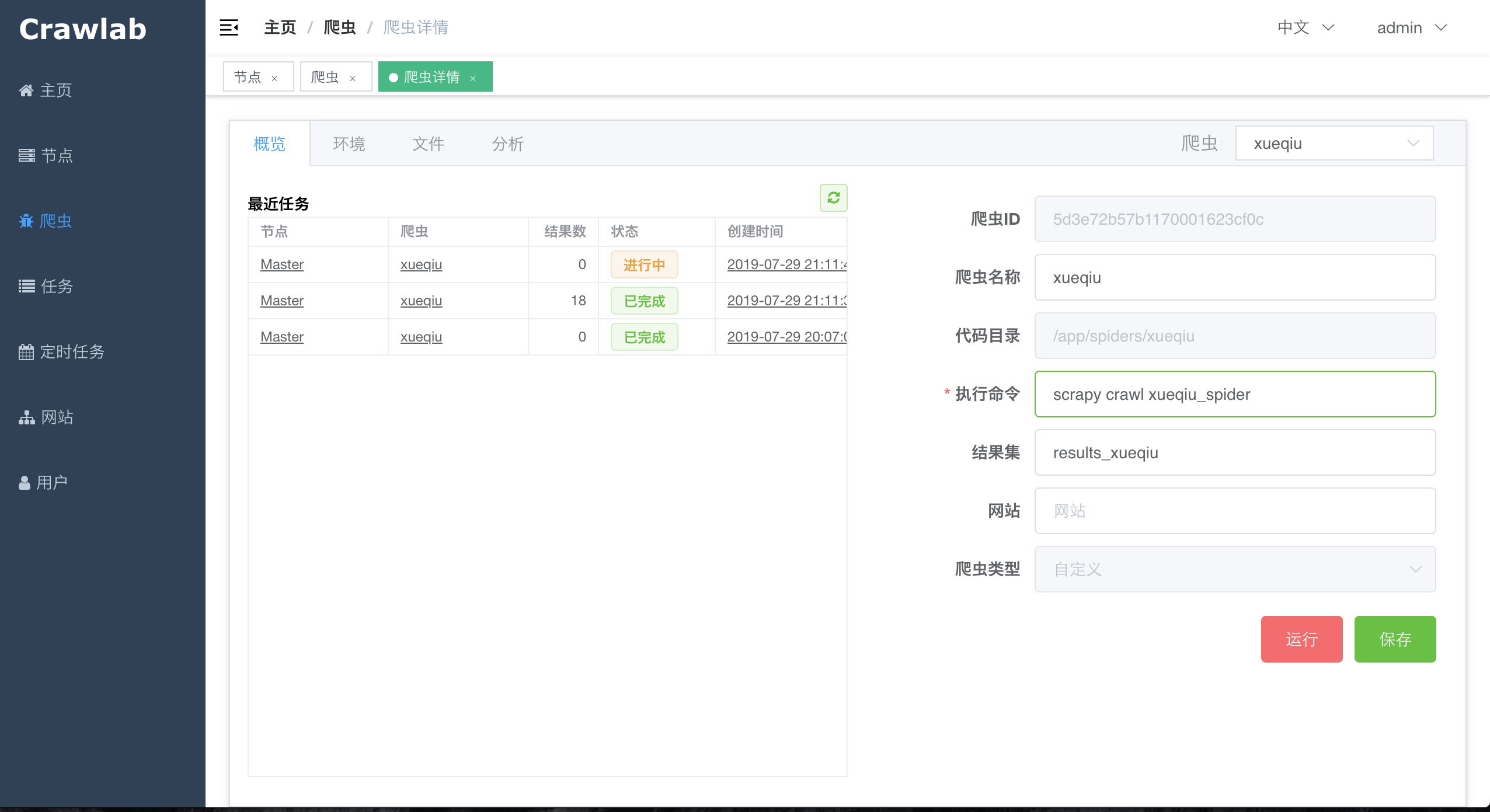

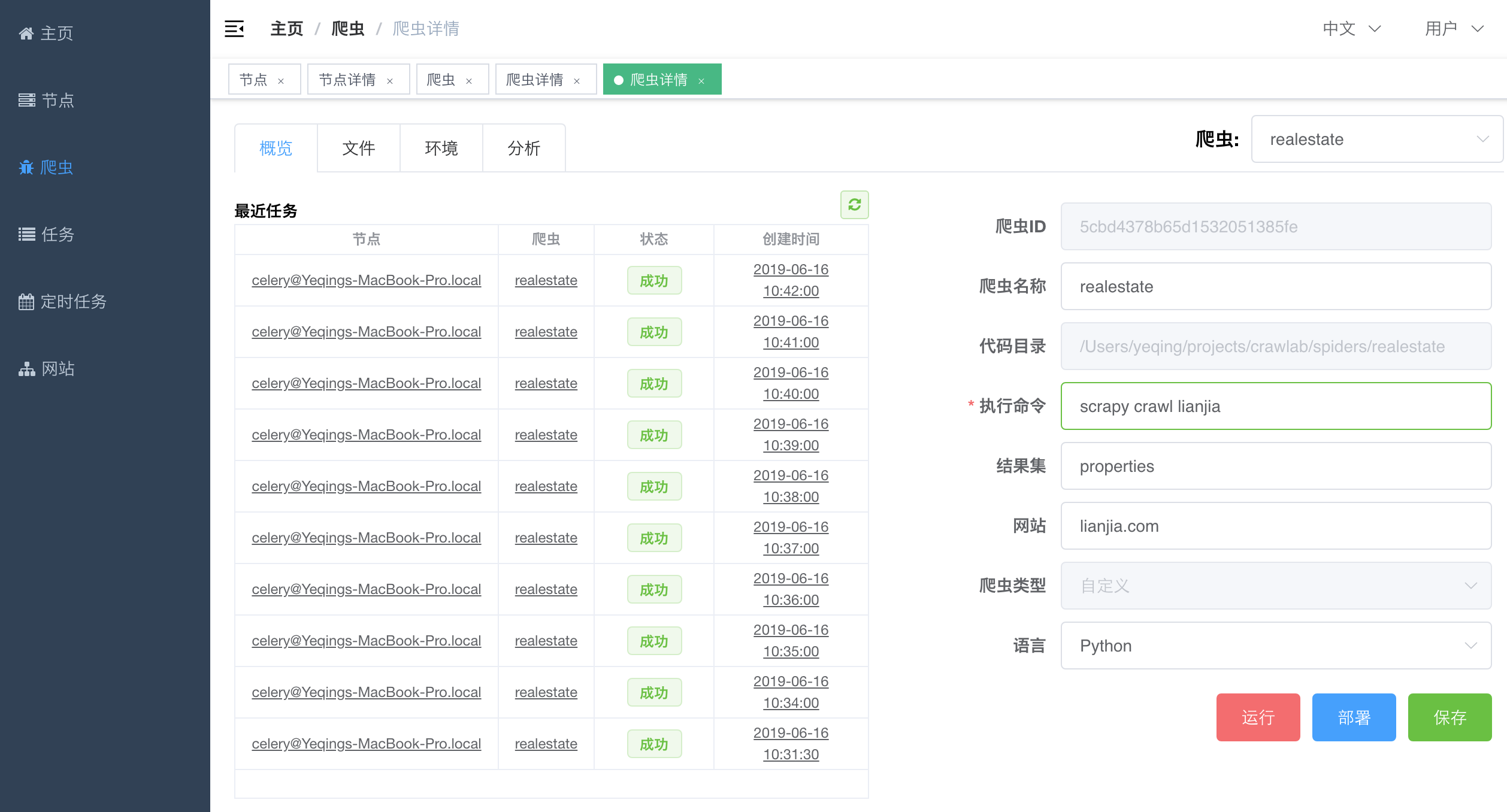

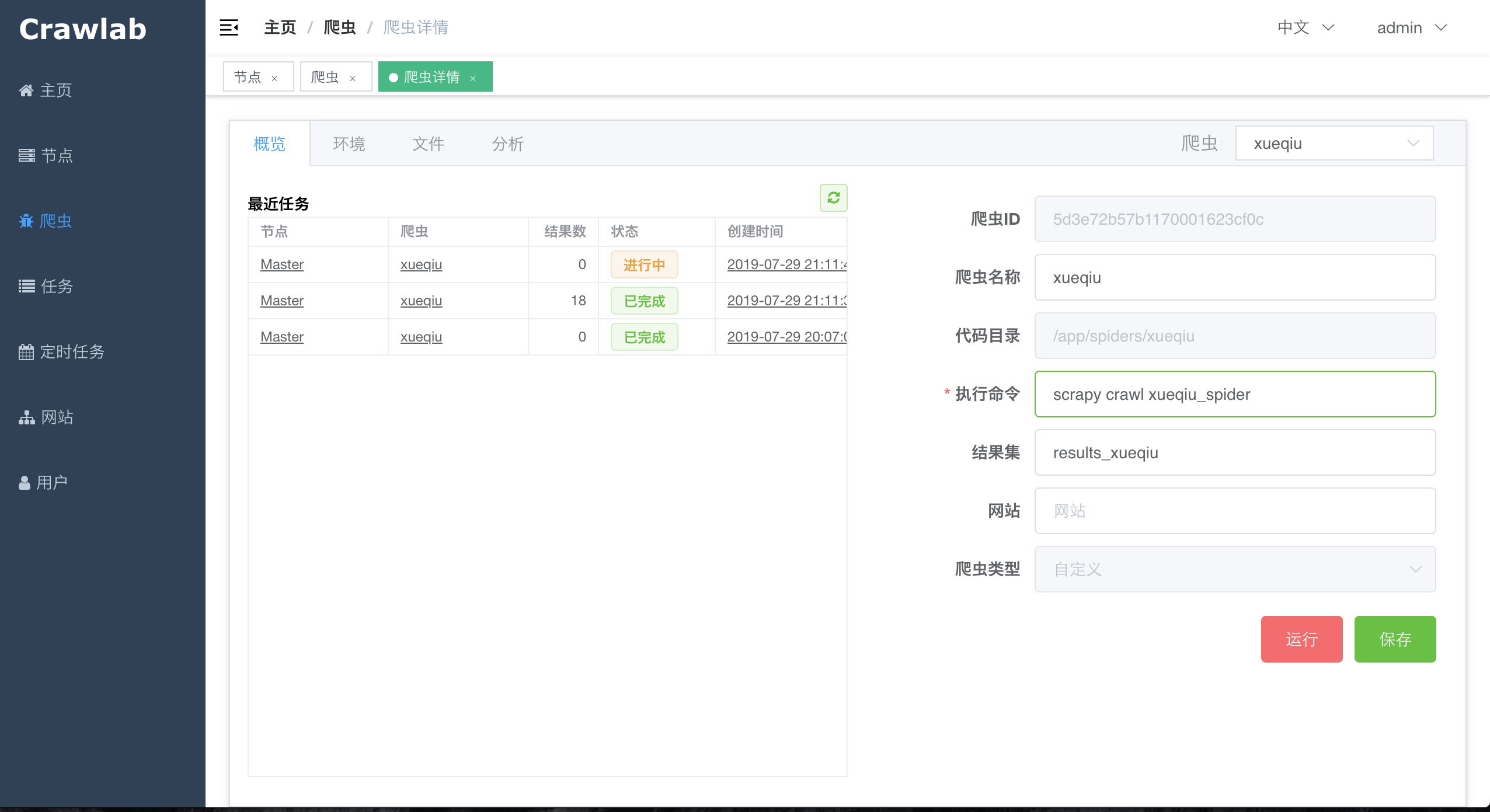

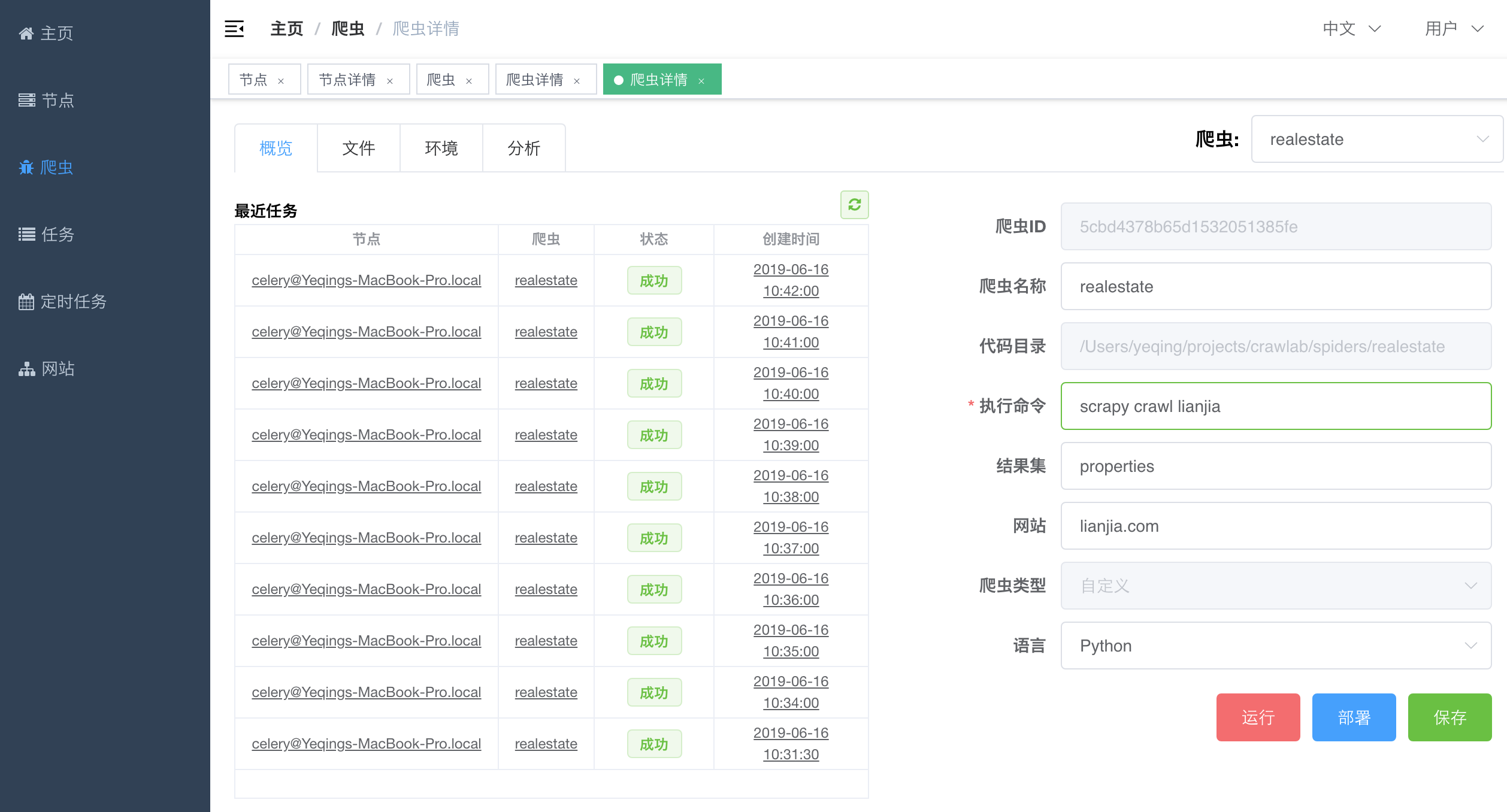

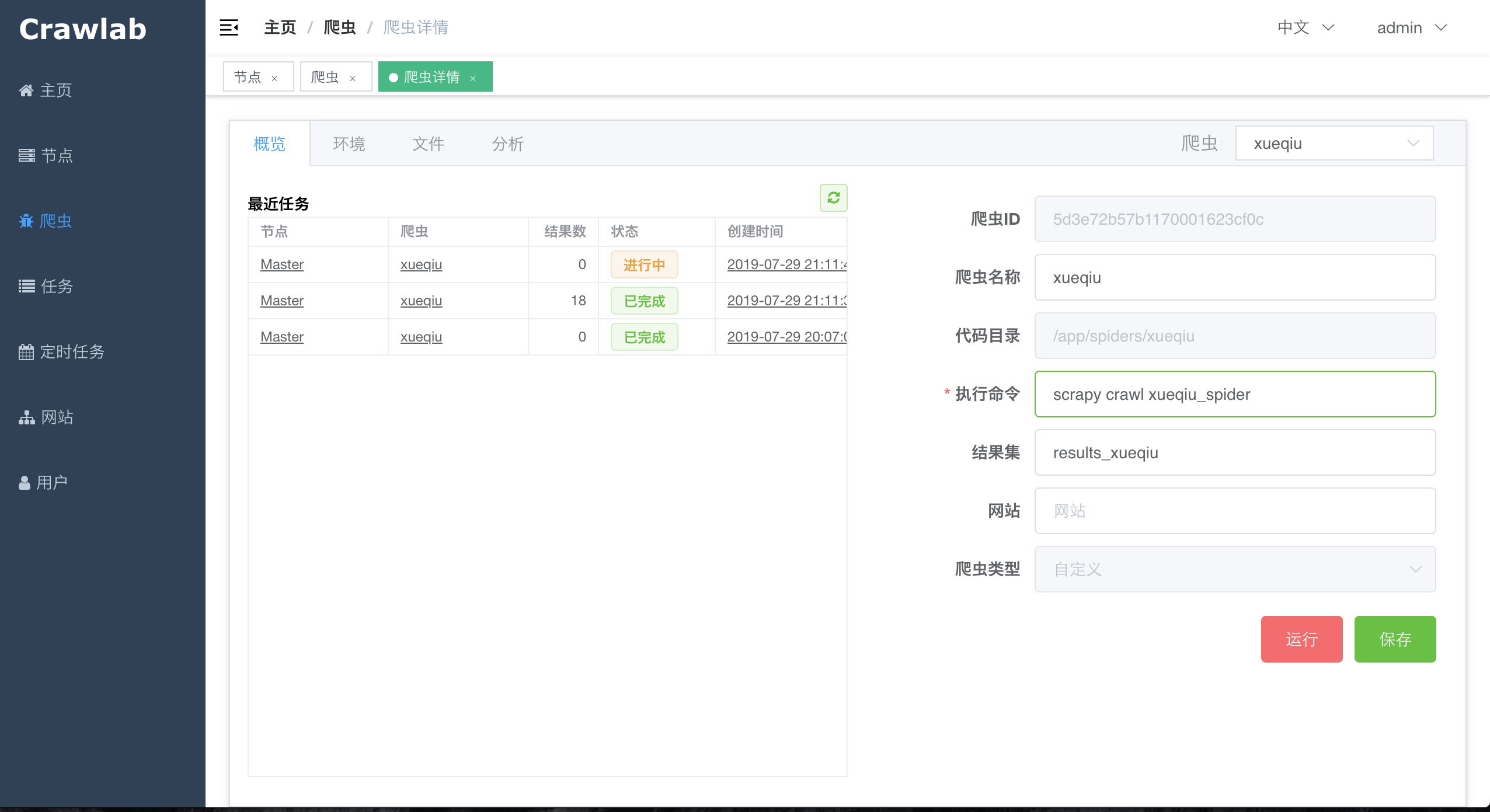

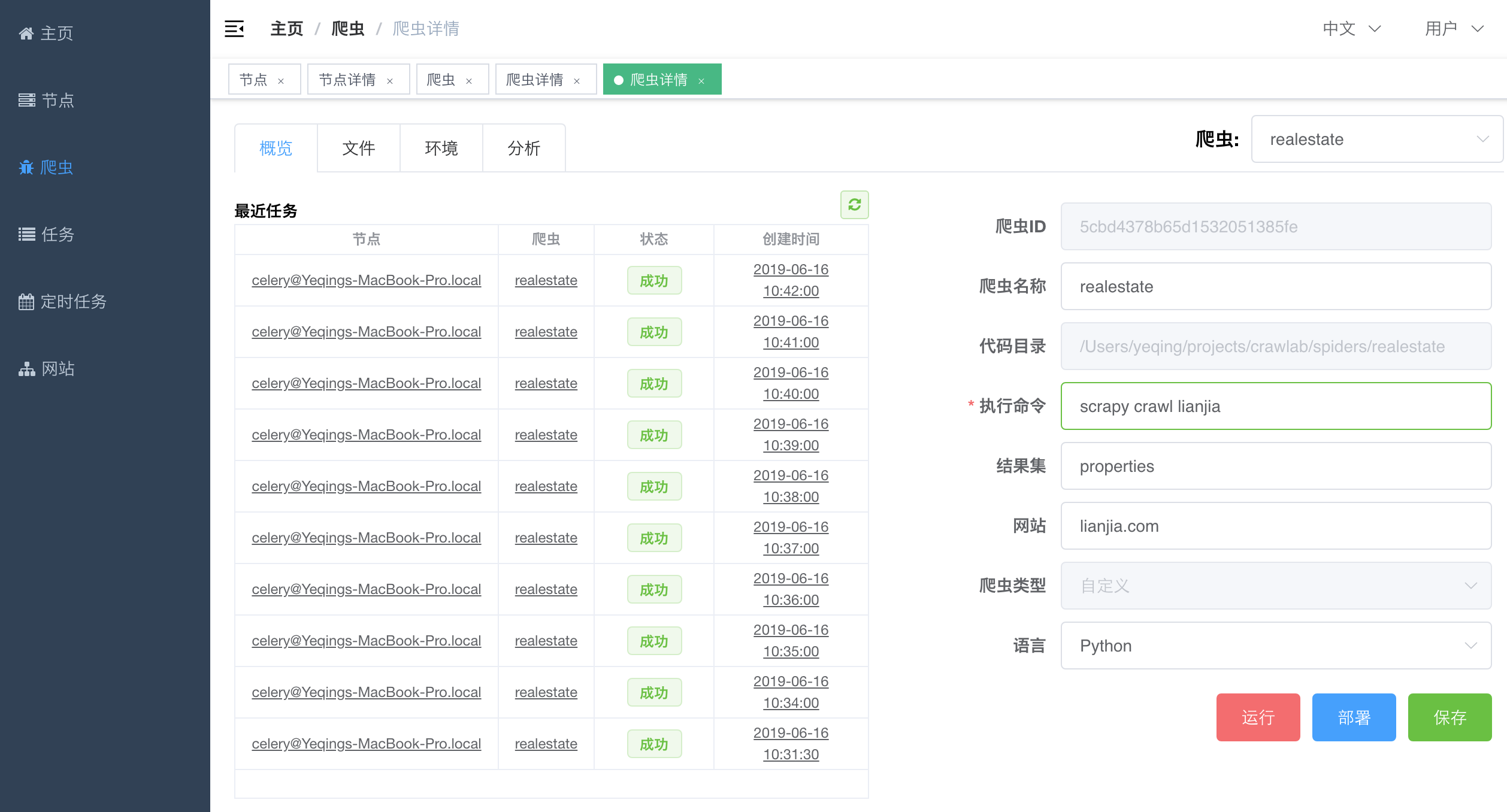

-#### Spider Detail - Overview

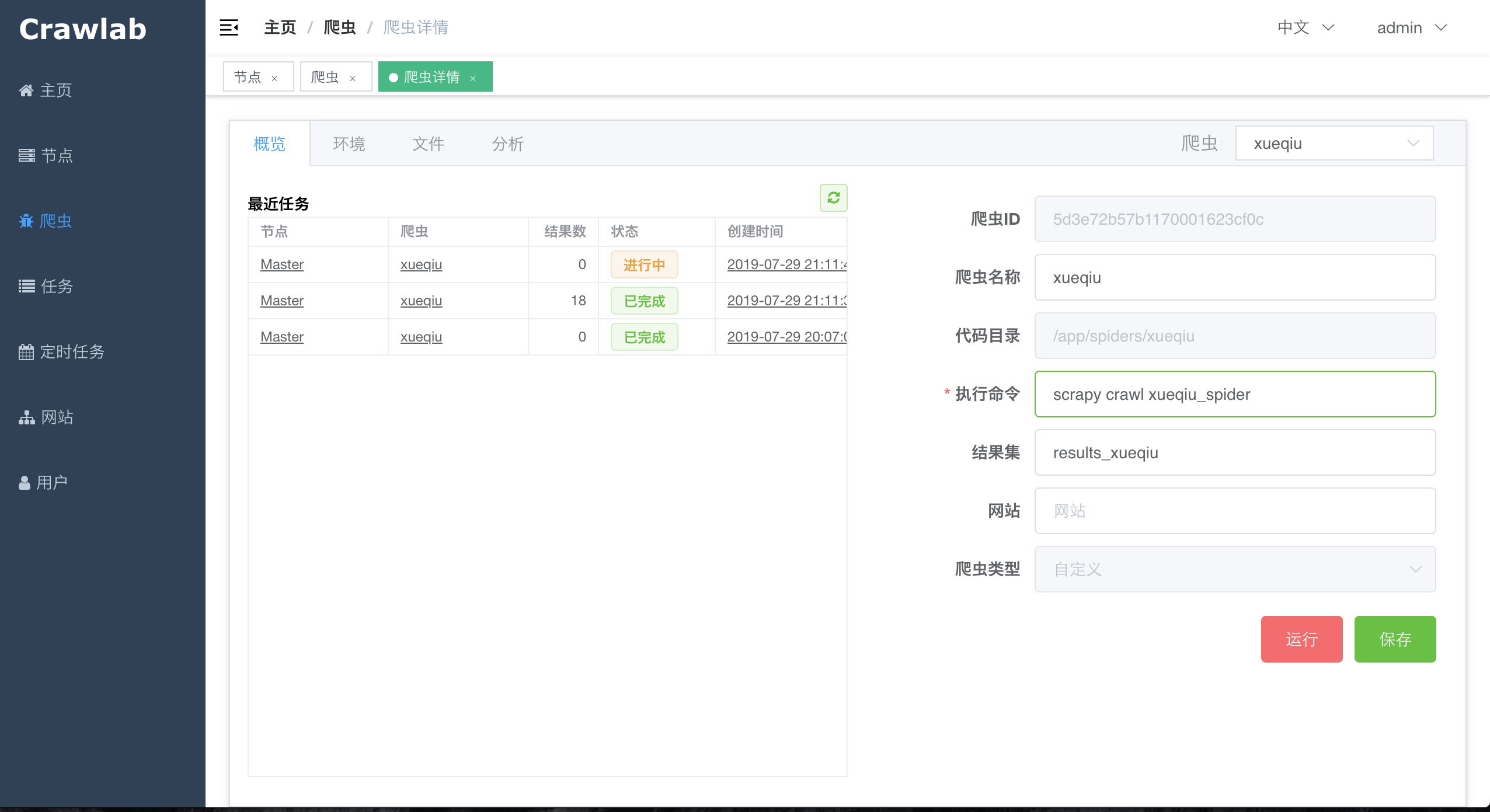

+#### Spider Overview

-

+

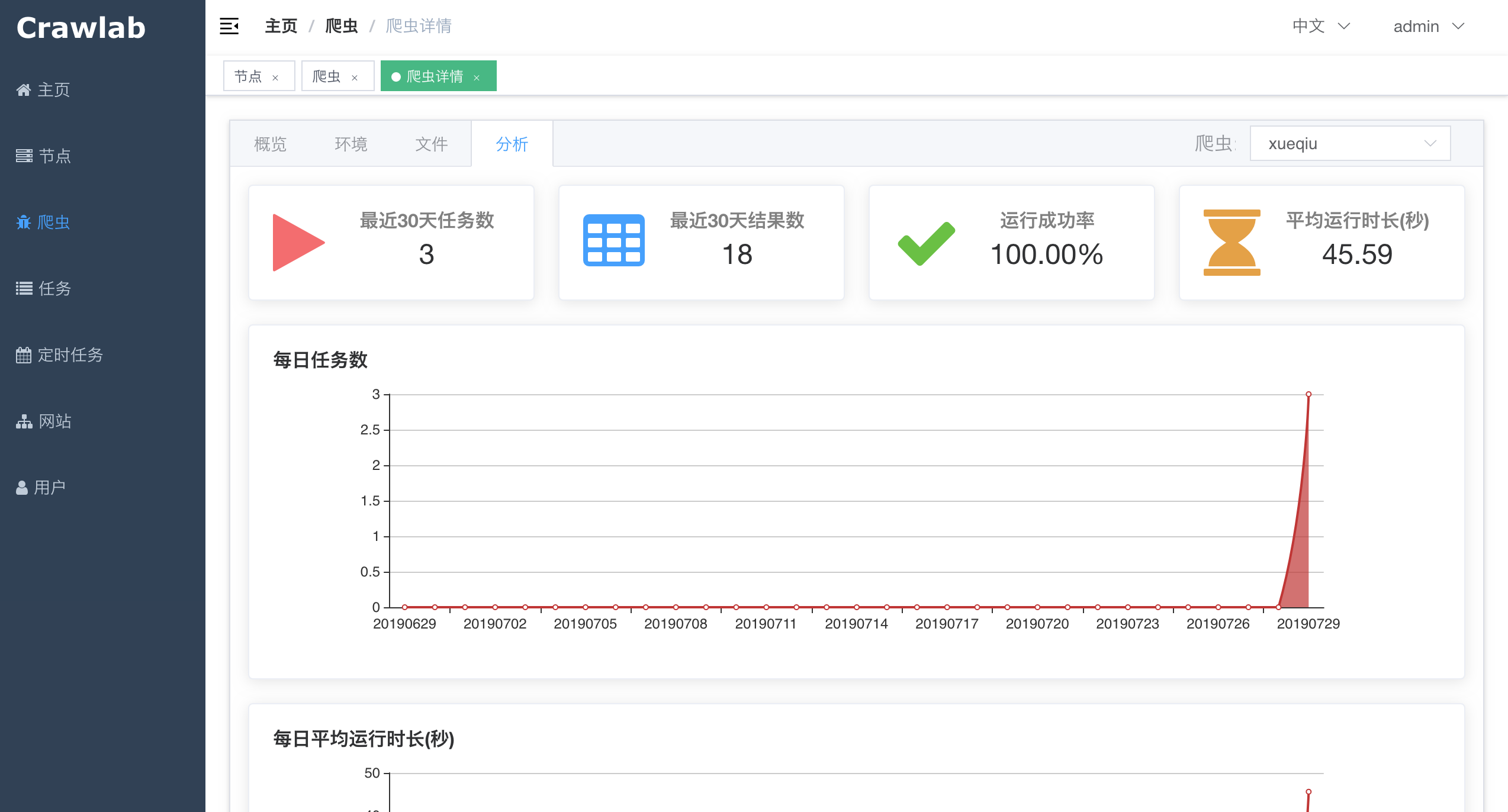

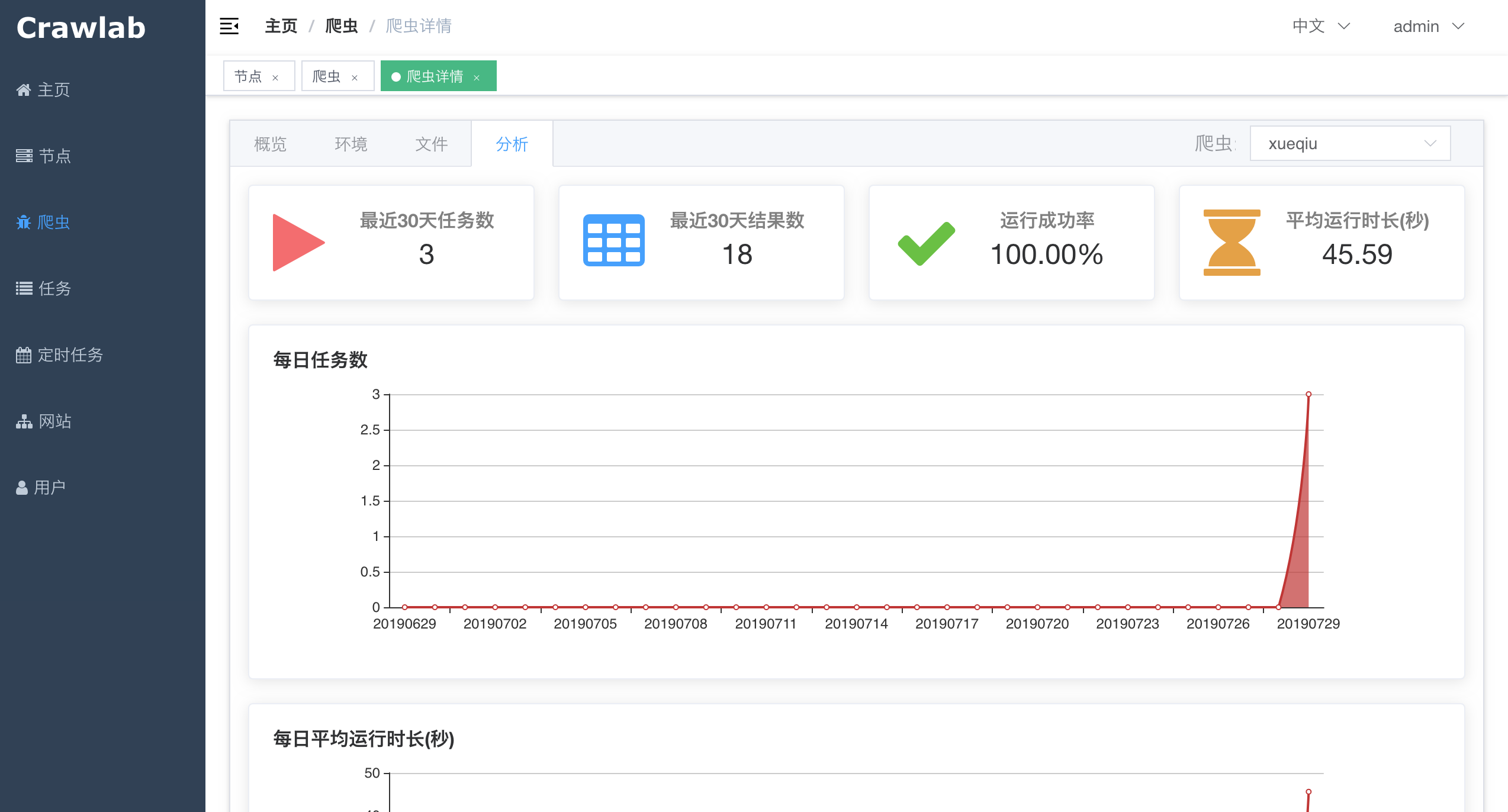

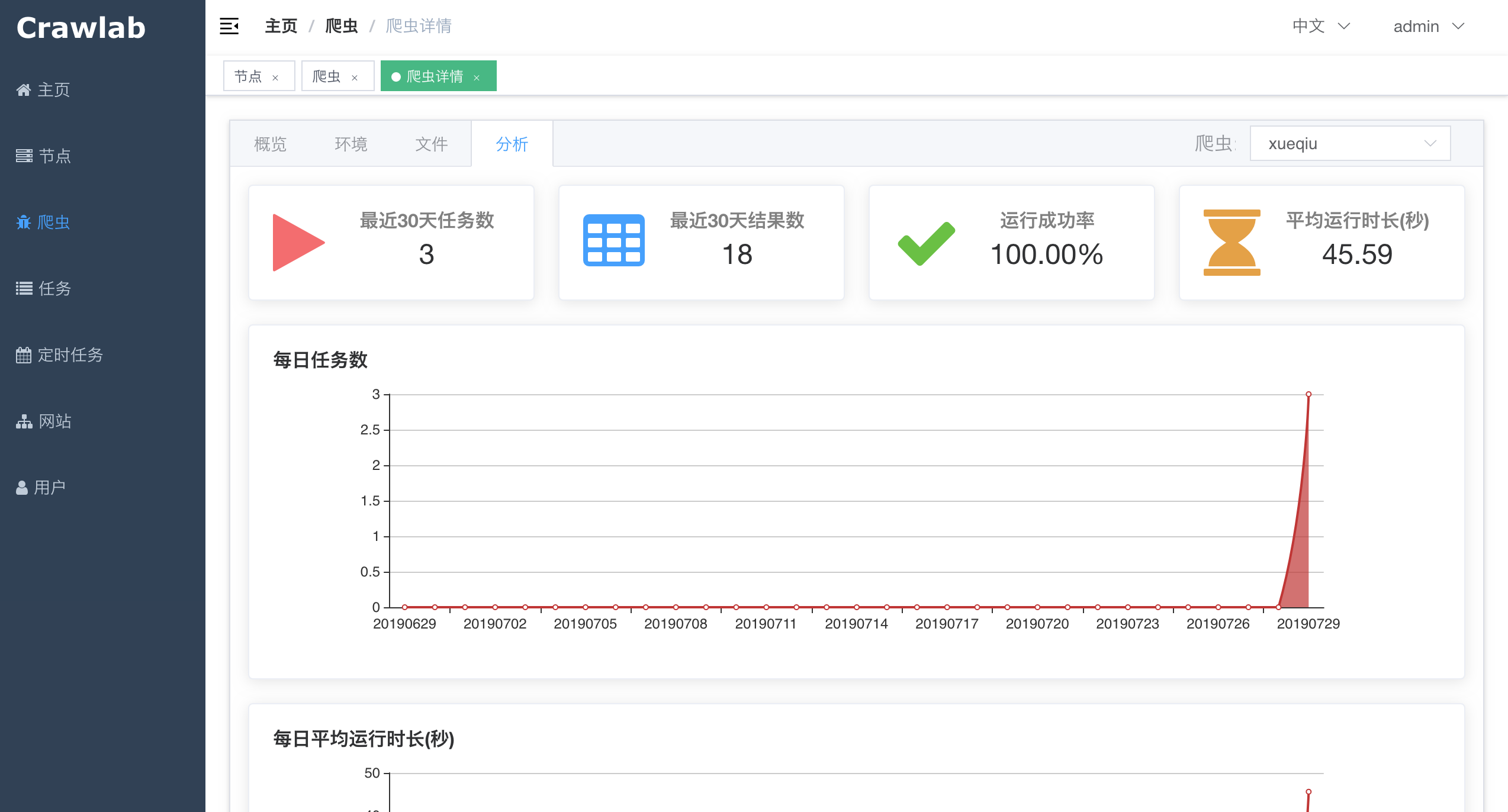

-#### Spider Detail - Analytics

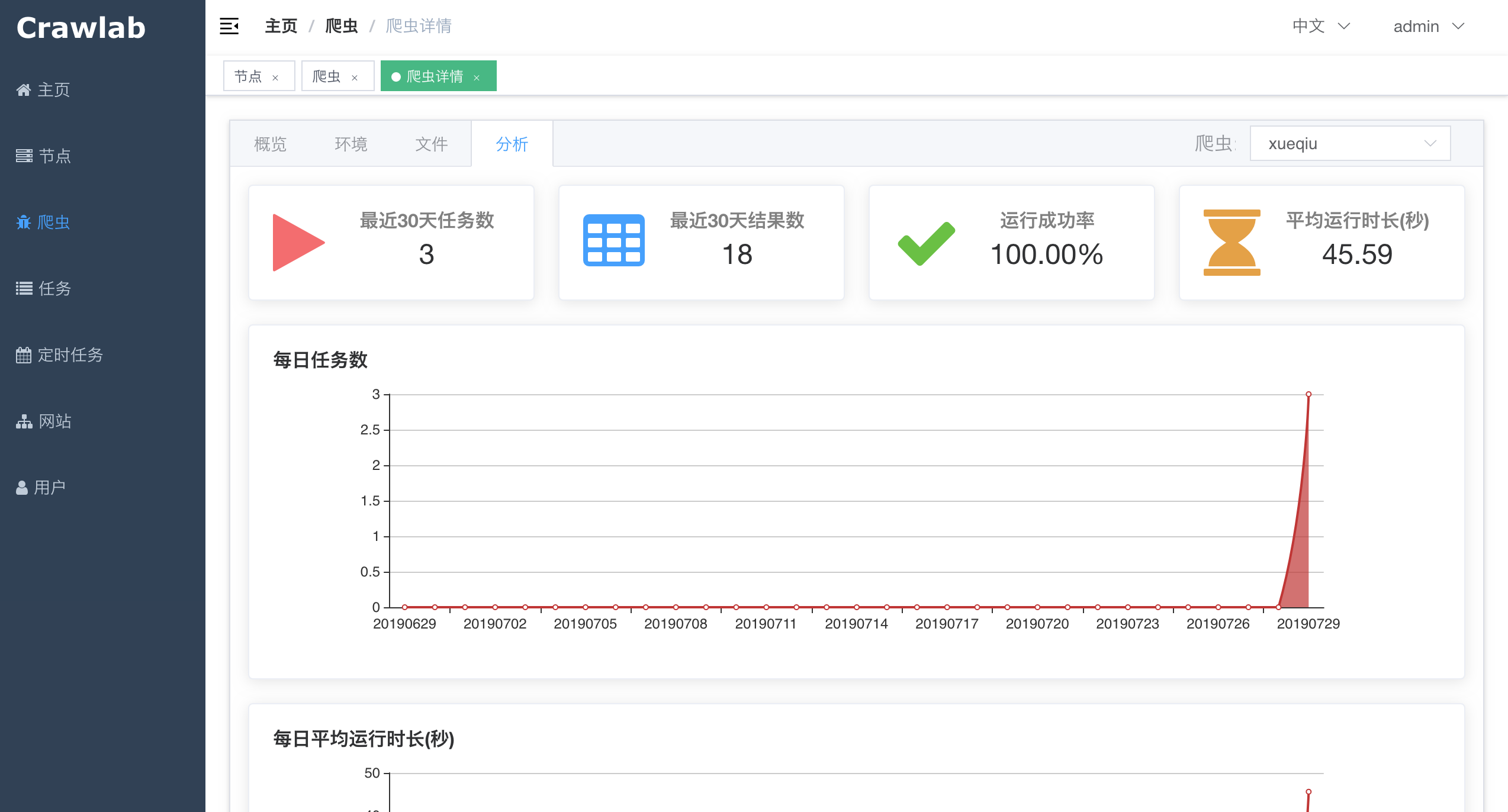

+#### Spider Analytics

-

+

+

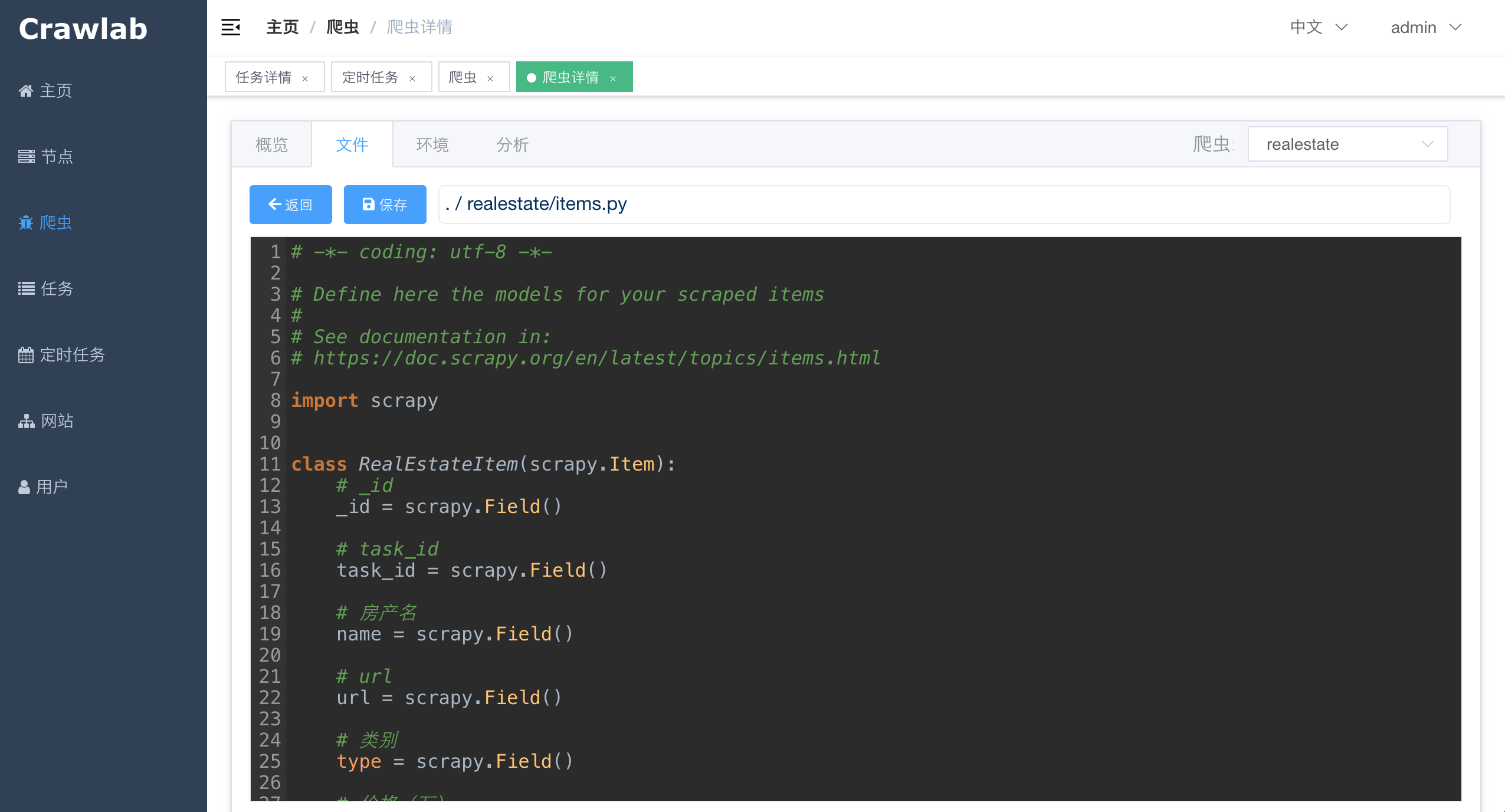

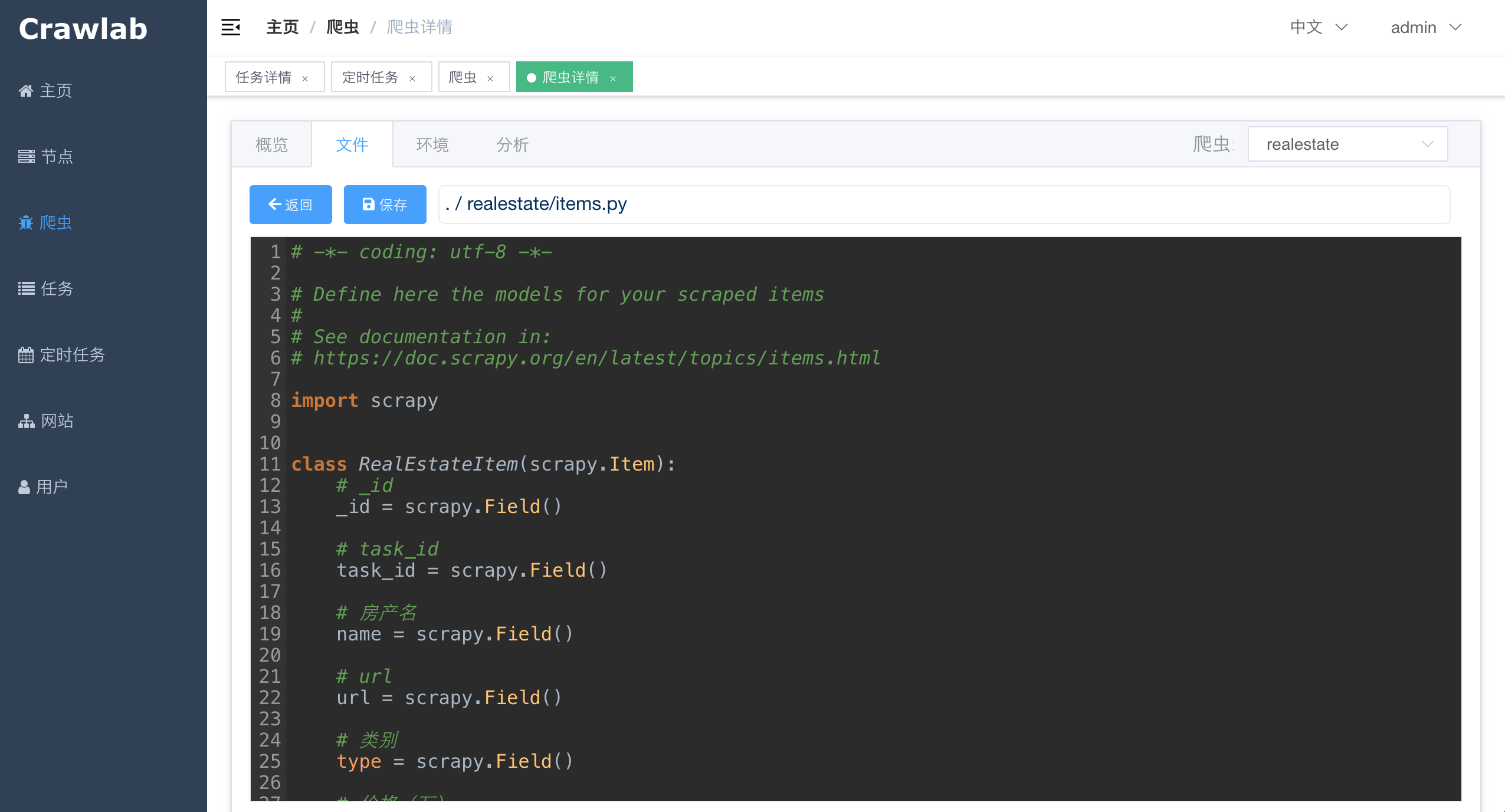

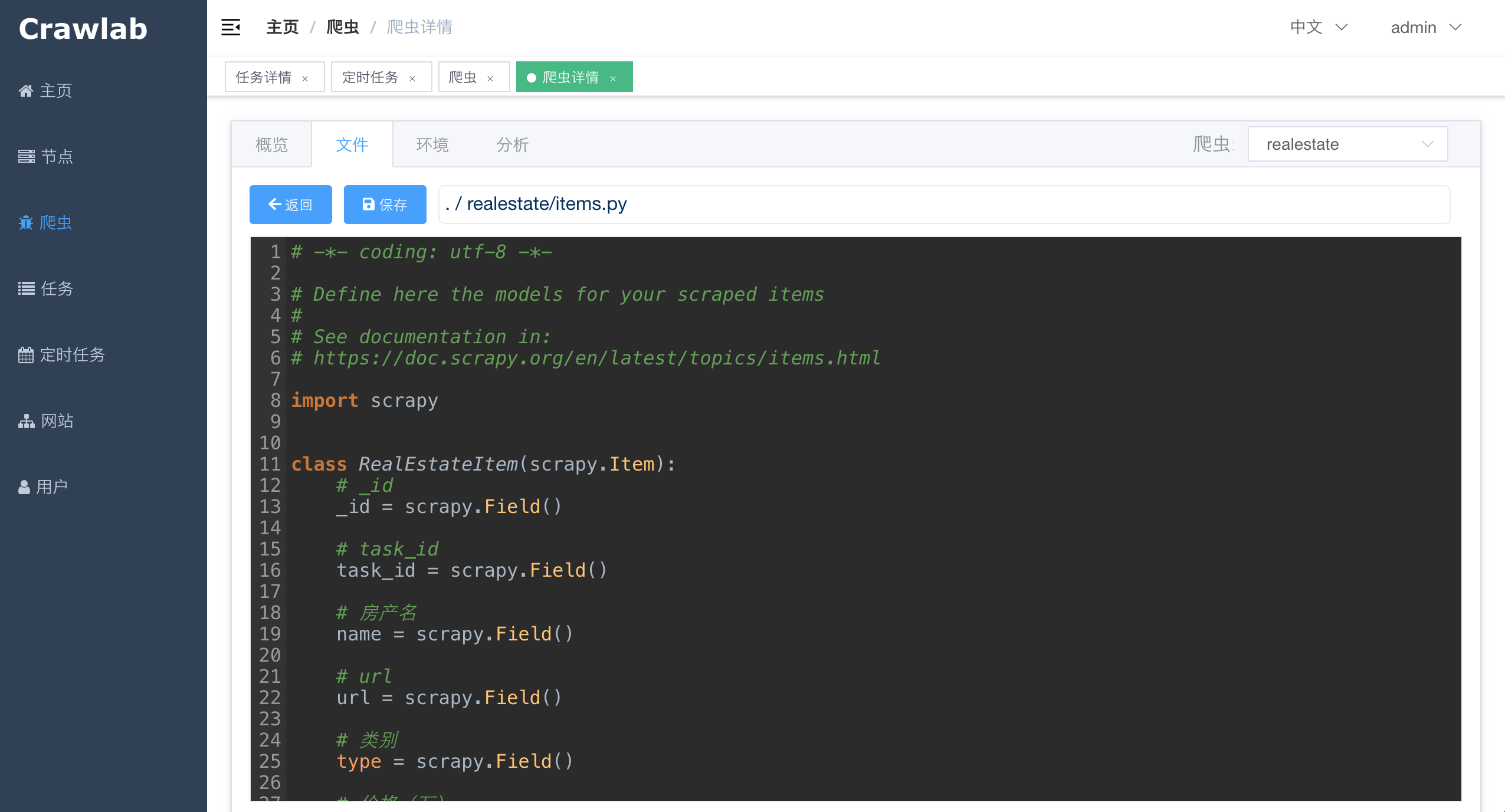

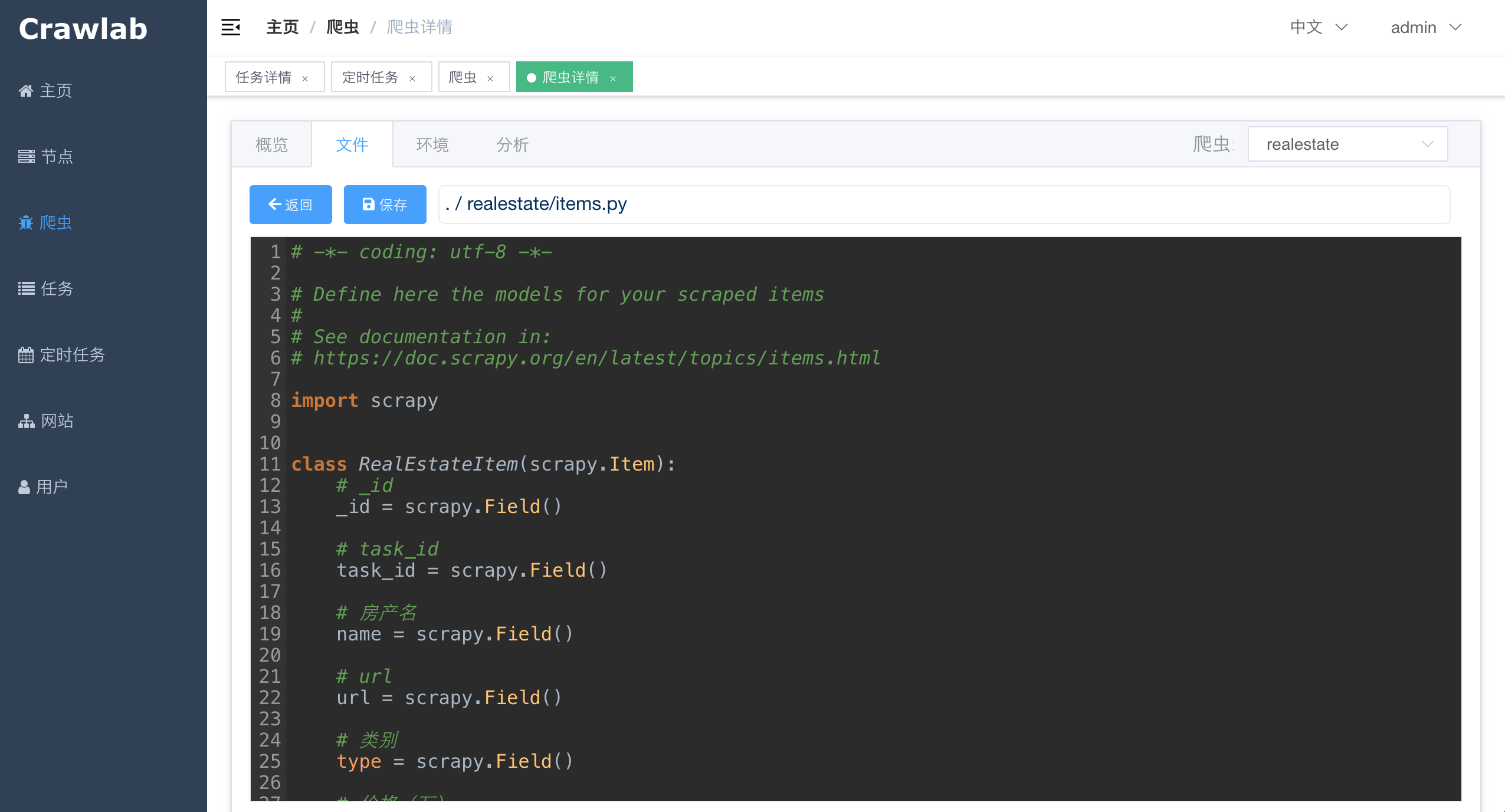

+#### Spider Files

+

+

#### Task Detail - Results

-

+

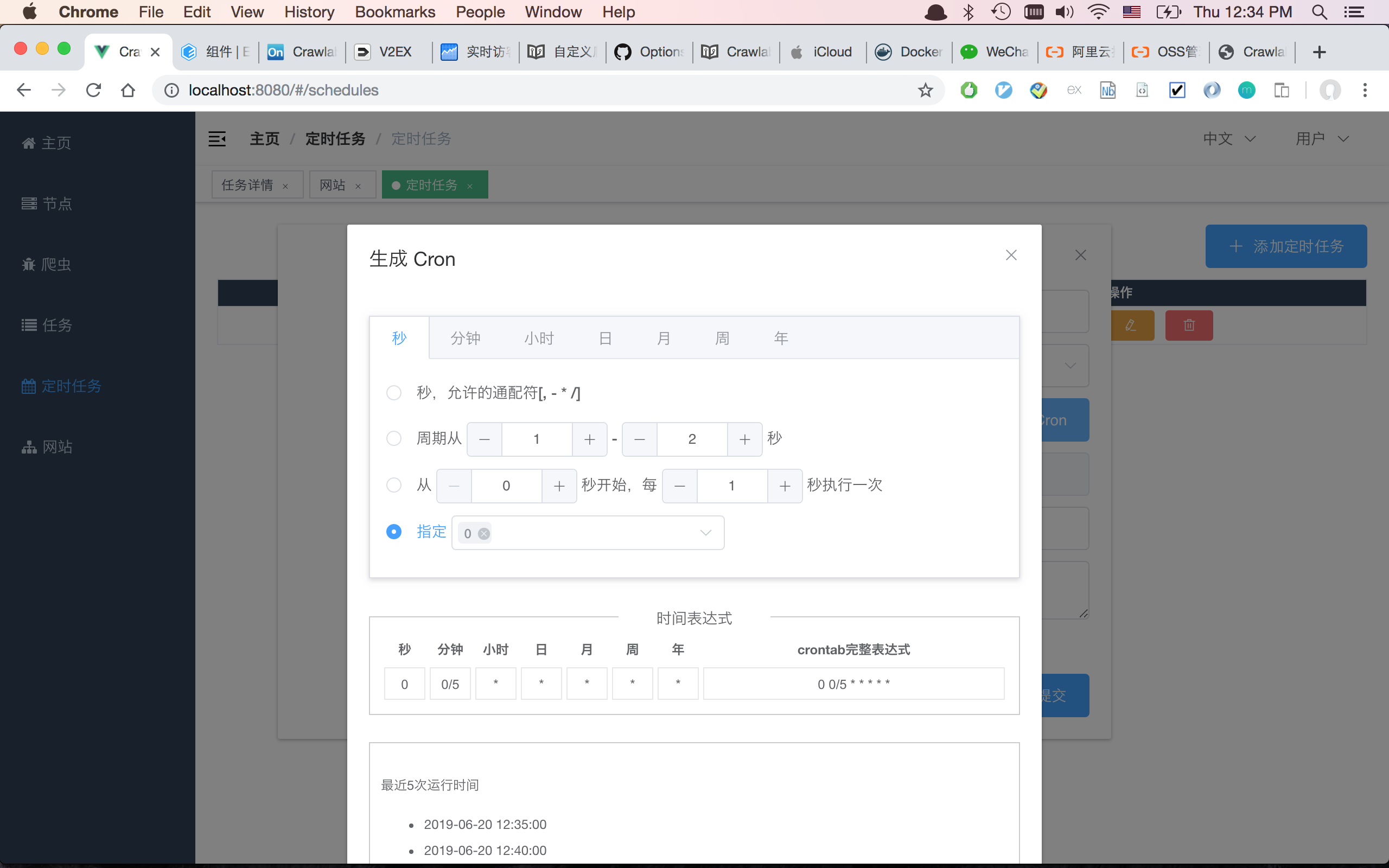

#### Cron Job

-

+

## Architecture

@@ -59,7 +110,7 @@ Crawlab的架构包括了一个主节点(Master Node)和多个工作节点

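The frontend app requests data from the master node. The master node dispatches, schedules and deploys tasks through MongoDB and Redis. Once a worker node receives a task, it runs the spider and stores the results in MongoDB. Compared with the Celery-based versions before `v0.3.0`, the architecture is leaner: the unnecessary node monitoring module Flower has been removed, and node monitoring is now handled mainly by Redis.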

-### 主节点 Master Node

+### Master Node

The master node is the core of the whole Crawlab architecture and serves as Crawlab's central control system.

@@ -68,36 +119,33 @@ Crawlab的架构包括了一个主节点(Master Node)和多个工作节点

2. Worker node management and communication

3. Spider deployment

4. Frontend and API services

+5. Task execution (the master node can also act as a worker node)

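The master node communicates with the frontend app and dispatches spider tasks to worker nodes through Redis. It also synchronizes (deploys) spiders to worker nodes through Redis and MongoDB's GridFS.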

### Worker Node

-The main job of a worker node is to run spider tasks and store the scraped data and logs; it communicates with the master node through Redis PubSub.

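+The main job of a worker node is to run spider tasks and store the scraped data and logs; it communicates with the master node through Redis `PubSub`. By adding worker nodes, Crawlab can scale horizontally, and different spider tasks can be assigned to different nodes.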

-### 爬虫 Spider

+### MongoDB

-Spider source code or configured crawling rules are stored on `App` and need to be deployed to each `worker` node.

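+MongoDB is Crawlab's operational database, storing data on nodes, spiders, tasks, scheduled tasks and so on. In addition, GridFS file storage is the intermediary through which the master node stores spider files and synchronizes them to worker nodes.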

-### 任务 Task

+### Redis

-Tasks are triggered and executed by nodes. Users can view a task's status, logs and scraped results on the task detail page.

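+Redis is a very popular key-value database. In Crawlab it mainly handles data communication between nodes. For example, each node stores its own information in the Redis `nodes` hash via `HSET`, and the master node uses that hash to determine which nodes are online.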
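The node-registration idea in the Redis paragraph can be sketched roughly as follows. This is a minimal illustration only, not the Golang backend's actual logic: a plain dict stands in for the contents of the Redis `nodes` hash, and the `update_ts` field, the JSON encoding and the 60-second timeout are all assumptions made for the example.

```python
import json
import time


def online_nodes(nodes_hash, now=None, timeout=60):
    """Return the sorted keys of nodes whose last update is recent enough.

    nodes_hash stands in for the Redis `nodes` hash: a dict mapping a node
    key to a JSON string. The `update_ts` field and the default timeout
    are hypothetical, chosen only for this sketch.
    """
    now = time.time() if now is None else now
    alive = []
    for key, raw in nodes_hash.items():
        info = json.loads(raw)
        # A node with no timestamp is treated as never having reported in.
        if now - info.get("update_ts", 0) <= timeout:
            alive.append(key)
    return sorted(alive)


if __name__ == "__main__":
    # Hypothetical snapshot of the hash, as a master node might read it.
    snapshot = {
        "node-a": json.dumps({"ip": "192.168.99.100", "update_ts": 1000}),
        "node-b": json.dumps({"ip": "192.168.99.101", "update_ts": 900}),
    }
    print(online_nodes(snapshot, now=1030))
```

Keeping the freshness check in a pure function makes it testable without a running Redis server; a real deployment would read the hash from Redis instead of a dict.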

-### 前端 Frontend

+### Frontend

The frontend is a single-page application based on [Vue-Element-Admin](https://github.com/PanJiaChen/vue-element-admin). It reuses many Element-UI components to support the corresponding views.

-### Flower

-

-A Celery plugin for monitoring Celery nodes.

-

## Integration with Other Frameworks

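-Tasks are implemented with `Popen` from Python's `subprocess` module. The task ID is exposed as the environment variable `CRAWLAB_TASK_ID` in the process that runs the spider task, and is used to link the scraped data to the task.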

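+A spider task is essentially implemented as a shell command. The task ID is exposed as the environment variable `CRAWLAB_TASK_ID` in the process that runs the spider task, and is used to link the scraped data to the task. In addition, `CRAWLAB_COLLECTION` is the name of the collection, passed in by Crawlab, where results are stored.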

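-In your spider program, you need to store the value of `CRAWLAB_TASK_ID` in the database under the key `task_id`. This lets Crawlab associate spider tasks with the scraped data. Currently, Crawlab only supports MongoDB.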

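+In the spider program, store the value of `CRAWLAB_TASK_ID` under the key `task_id`, in the collection named by `CRAWLAB_COLLECTION`. This lets Crawlab associate spider tasks with the scraped data. Currently, Crawlab only supports MongoDB.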
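For a spider that does not use Scrapy, the same convention might look like the sketch below. It only shows how the two environment variables could be read and attached to a scraped item; the helper name `tag_item` is hypothetical, and the actual MongoDB write is left out so the example runs without a database.

```python
import os


def tag_item(item, env=None):
    """Return (collection_name, item_copy_with_task_id).

    `tag_item` is a hypothetical helper for illustration. It reads
    CRAWLAB_TASK_ID and CRAWLAB_COLLECTION from the environment (or from
    the mapping passed as `env`) and adds `task_id` to a copy of the item.
    """
    env = os.environ if env is None else env
    tagged = dict(item)
    tagged["task_id"] = env.get("CRAWLAB_TASK_ID")
    return env.get("CRAWLAB_COLLECTION"), tagged


if __name__ == "__main__":
    collection, doc = tag_item({"title": "example"})
    # A real spider would now insert `doc` into the MongoDB collection
    # named `collection`; that step is omitted here.
    print(collection, doc)
```

Both values are `None` when the script runs outside Crawlab, so a spider may want to fall back to a default collection name in that case.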

-### Scrapy

+### Integration with Scrapy

Below is an example of integrating Crawlab with Scrapy, using the task_id and collection_name passed in by Crawlab.

@@ -135,11 +183,20 @@ Crawlab使用起来很方便,也很通用,可以适用于几乎任何主流

|Framework | Type | Distributed | Frontend | Depends on Scrapyd |

|:---:|:---:|:---:|:---:|:---:|

| [Crawlab](https://github.com/tikazyq/crawlab) | Admin Platform | Y | Y | N

-| [Gerapy](https://github.com/Gerapy/Gerapy) | Admin Platform | Y | Y | Y

-| [SpiderKeeper](https://github.com/DormyMo/SpiderKeeper) | Admin Platform | Y | Y | Y

| [ScrapydWeb](https://github.com/my8100/scrapydweb) | Admin Platform | Y | Y | Y

+| [SpiderKeeper](https://github.com/DormyMo/SpiderKeeper) | Admin Platform | Y | Y | Y

+| [Gerapy](https://github.com/Gerapy/Gerapy) | Admin Platform | Y | Y | Y

| [Scrapyd](https://github.com/scrapy/scrapyd) | Web Service | Y | N | N/A

+## Related Articles

+

+- [爬虫管理平台Crawlab部署指南(Docker and more)](https://juejin.im/post/5d01027a518825142939320f)

+- [[爬虫手记] 我是如何在3分钟内开发完一个爬虫的](https://juejin.im/post/5ceb4342f265da1bc8540660)

+- [手把手教你如何用Crawlab构建技术文章聚合平台(二)](https://juejin.im/post/5c92365d6fb9a070c5510e71)

+- [手把手教你如何用Crawlab构建技术文章聚合平台(一)](https://juejin.im/user/5a1ba6def265da430b7af463/posts)

+

+**Note: v0.3.0 has switched from the Celery-based Python version to the Golang version; see the documentation for deployment instructions.**

+

## Community & Sponsorship

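If you find Crawlab helpful for your daily development or your company, please add the author on WeChat (tikazyq1) and mention "Crawlab"; the author will invite you to the group. Alternatively, you can scan the Alipay QR code below to tip the author, to help upgrade the team collaboration software or buy a coffee.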

diff --git a/backend/database/mongo.go b/backend/database/mongo.go

index ecf3ba22..d1771c1d 100644

--- a/backend/database/mongo.go

+++ b/backend/database/mongo.go

@@ -33,9 +33,17 @@ func InitMongo() error {

var mongoHost = viper.GetString("mongo.host")

var mongoPort = viper.GetString("mongo.port")

var mongoDb = viper.GetString("mongo.db")

+ var mongoUsername = viper.GetString("mongo.username")

+ var mongoPassword = viper.GetString("mongo.password")

if Session == nil {

- sess, err := mgo.Dial("mongodb://" + mongoHost + ":" + mongoPort + "/" + mongoDb)

+ var uri string

+ if mongoUsername == "" {

+ uri = "mongodb://" + mongoHost + ":" + mongoPort + "/" + mongoDb

+ } else {

+ uri = "mongodb://" + mongoUsername + ":" + mongoPassword + "@" + mongoHost + ":" + mongoPort + "/" + mongoDb

+ }

+ sess, err := mgo.Dial(uri)

if err != nil {

return err

}
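The branching in this hunk (no credentials vs. username and password) can be sketched in Python as below. One difference is deliberate and is not part of the patch: the sketch percent-encodes the credentials, since characters such as `@`, `:` or `/` in a password would otherwise change how a MongoDB connection string is parsed. The function name `build_mongo_uri` is hypothetical.

```python
from urllib.parse import quote_plus


def build_mongo_uri(host, port, db, username="", password=""):
    """Build a mongodb:// URI, adding credentials only when a username is set.

    Mirrors the if/else in the hunk above. Percent-encoding the
    credentials with quote_plus is an addition made for this sketch.
    """
    if username == "":
        return f"mongodb://{host}:{port}/{db}"
    user = quote_plus(username)
    pwd = quote_plus(password)
    return f"mongodb://{user}:{pwd}@{host}:{port}/{db}"


if __name__ == "__main__":
    print(build_mongo_uri("127.0.0.1", "27017", "crawlab_test"))
    print(build_mongo_uri("127.0.0.1", "27017", "crawlab_test", "admin", "p@ss:w/rd"))
```

Whether the Go driver used here needs the same escaping is worth checking against its documentation before relying on passwords with reserved characters.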

diff --git a/backend/main.go b/backend/main.go

index 7feb9ffc..b6bb191d 100644

--- a/backend/main.go

+++ b/backend/main.go

@@ -95,8 +95,7 @@ func main() {

// Spiders

app.GET("/spiders", routes.GetSpiderList) // spider list

app.GET("/spiders/:id", routes.GetSpider) // spider detail

- app.PUT("/spiders", routes.PutSpider) // upload spider

- app.POST("/spiders", routes.PublishAllSpiders) // publish all spiders

+ app.POST("/spiders", routes.PutSpider) // upload spider

app.POST("/spiders/:id", routes.PostSpider) // update spider

app.POST("/spiders/:id/publish", routes.PublishSpider) // publish spider

app.DELETE("/spiders/:id", routes.DeleteSpider) // delete spider

diff --git a/backend/services/task.go b/backend/services/task.go

index 4b75a0da..8c0ff8a1 100644

--- a/backend/services/task.go

+++ b/backend/services/task.go

@@ -130,7 +130,7 @@ func ExecuteShellCmd(cmdStr string, cwd string, t model.Task, s model.Spider) (e

// Add task environment variables

for _, env := range s.Envs {

- cmd.Env = append(cmd.Env, env.Name + "=" + env.Value)

+ cmd.Env = append(cmd.Env, env.Name+"="+env.Value)

}

// Start a goroutine to monitor the process

@@ -344,14 +344,16 @@ func ExecuteTask(id int) {

}

// Start a cron executor to count task results

- cronExec := cron.New(cron.WithSeconds())

- _, err = cronExec.AddFunc("*/5 * * * * *", SaveTaskResultCount(t.Id))

- if err != nil {

- log.Errorf(GetWorkerPrefix(id) + err.Error())

- return

+ if spider.Col != "" {

+ cronExec := cron.New(cron.WithSeconds())

+ _, err = cronExec.AddFunc("*/5 * * * * *", SaveTaskResultCount(t.Id))

+ if err != nil {

+ log.Errorf(GetWorkerPrefix(id) + err.Error())

+ return

+ }

+ cronExec.Start()

+ defer cronExec.Stop()

}

- cronExec.Start()

- defer cronExec.Stop()

// Execute the shell command

if err := ExecuteShellCmd(cmd, cwd, t, spider); err != nil {

@@ -360,9 +362,11 @@ func ExecuteTask(id int) {

}

// Update the task result count

- if err := model.UpdateTaskResultCount(t.Id); err != nil {

- log.Errorf(GetWorkerPrefix(id) + err.Error())

- return

+ if spider.Col != "" {

+ if err := model.UpdateTaskResultCount(t.Id); err != nil {

+ log.Errorf(GetWorkerPrefix(id) + err.Error())

+ return

+ }

}

// Finish the process

diff --git a/crawlab/.gitignore b/crawlab/.gitignore

deleted file mode 100644

index ccc81841..00000000

--- a/crawlab/.gitignore

+++ /dev/null

@@ -1,114 +0,0 @@

-.idea/

-

-# Byte-compiled / optimized / DLL files

-__pycache__/

-*.py[cod]

-*$py.class

-

-# C extensions

-*.so

-

-# Distribution / packaging

-.Python

-build/

-develop-eggs/

-dist/

-downloads/

-eggs/

-.eggs/

-lib/

-lib64/

-parts/

-sdist/

-var/

-wheels/

-*.egg-info/

-.installed.cfg

-*.egg

-MANIFEST

-

-# PyInstaller

-# Usually these files are written by a python script from a template

-# before PyInstaller builds the exe, so as to inject date/other infos into it.

-*.manifest

-*.spec

-

-# Installer logs

-pip-log.txt

-pip-delete-this-directory.txt

-

-# Unit test / coverage reports

-htmlcov/

-.tox/

-.coverage

-.coverage.*

-.cache

-nosetests.xml

-coverage.xml

-*.cover

-.hypothesis/

-.pytest_cache/

-

-# Translations

-*.mo

-*.pot

-

-# Django stuff:

-*.log

-local_settings.py

-db.sqlite3

-

-# Flask stuff:

-instance/

-.webassets-cache

-

-# Scrapy stuff:

-.scrapy

-

-# Sphinx documentation

-docs/_build/

-

-# PyBuilder

-target/

-

-# Jupyter Notebook

-.ipynb_checkpoints

-

-# pyenv

-.python-version

-

-# celery beat schedule file

-celerybeat-schedule

-

-# SageMath parsed files

-*.sage.py

-

-# Environments

-.env

-.venv

-env/

-venv/

-ENV/

-env.bak/

-venv.bak/

-

-# Spyder project settings

-.spyderproject

-.spyproject

-

-# Rope project settings

-.ropeproject

-

-# mkdocs documentation

-/site

-

-# mypy

-.mypy_cache/

-

-# node_modules

-node_modules/

-

-# egg-info

-*.egg-info

-

-tmp/

diff --git a/crawlab/__init__.py b/crawlab/__init__.py

deleted file mode 100644

index e69de29b..00000000

diff --git a/crawlab/app.py b/crawlab/app.py

deleted file mode 100644

index 484e9234..00000000

--- a/crawlab/app.py

+++ /dev/null

@@ -1,106 +0,0 @@

-import os

-import sys

-from multiprocessing import Process

-

-from flask import Flask

-from flask_cors import CORS

-from flask_restful import Api

-# from flask_restplus import Api

-

-file_dir = os.path.dirname(os.path.realpath(__file__))

-root_path = os.path.abspath(os.path.join(file_dir, '.'))

-sys.path.append(root_path)

-

-from utils.log import other

-from constants.node import NodeStatus

-from db.manager import db_manager

-from routes.schedules import ScheduleApi

-from tasks.celery import celery_app

-from tasks.scheduler import scheduler

-from config import FLASK_HOST, FLASK_PORT, PROJECT_LOGS_FOLDER

-from routes.sites import SiteApi

-from routes.deploys import DeployApi

-from routes.files import FileApi

-from routes.nodes import NodeApi

-from routes.spiders import SpiderApi, SpiderImportApi, SpiderManageApi

-from routes.stats import StatsApi

-from routes.tasks import TaskApi

-

-# flask app instance

-app = Flask(__name__)

-app.config.from_object('config')

-

-# init flask api instance

-api = Api(app)

-

-# cors support

-CORS(app, supports_credentials=True)

-

-# reference api routes

-api.add_resource(NodeApi,

- '/api/nodes',

- '/api/nodes/',

- '/api/nodes//')

-api.add_resource(SpiderApi,

- '/api/spiders',

- '/api/spiders/',

- '/api/spiders//')

-api.add_resource(SpiderImportApi,

- '/api/spiders/import/')

-api.add_resource(SpiderManageApi,

- '/api/spiders/manage/')

-api.add_resource(TaskApi,

- '/api/tasks',

- '/api/tasks/',

- '/api/tasks//')

-api.add_resource(DeployApi,

- '/api/deploys',

- '/api/deploys/',

- '/api/deploys//')

-api.add_resource(FileApi,

- '/api/files',

- '/api/files/')

-api.add_resource(StatsApi,

- '/api/stats',

- '/api/stats/')

-api.add_resource(ScheduleApi,

- '/api/schedules',

- '/api/schedules/')

-api.add_resource(SiteApi,

- '/api/sites',

- '/api/sites/',

- '/api/sites/get/')

-

-

-def monitor_nodes_status(celery_app):

- def update_nodes_status(event):

- node_id = event.get('hostname')

- db_manager.update_one('nodes', id=node_id, values={

- 'status': NodeStatus.ONLINE

- })

-

- def update_nodes_status_online(event):

- other.info(f"{event}")

-

- with celery_app.connection() as connection:

- recv = celery_app.events.Receiver(connection, handlers={

- 'worker-heartbeat': update_nodes_status,

- # 'worker-online': update_nodes_status_online,

- })

- recv.capture(limit=None, timeout=None, wakeup=True)

-

-

-# run scheduler as a separate process

-scheduler.run()

-

-# monitor node status

-p_monitor = Process(target=monitor_nodes_status, args=(celery_app,))

-p_monitor.start()

-

-# create folder if it does not exist

-if not os.path.exists(PROJECT_LOGS_FOLDER):

- os.makedirs(PROJECT_LOGS_FOLDER)

-

-if __name__ == '__main__':

- # run app instance

- app.run(host=FLASK_HOST, port=FLASK_PORT)

diff --git a/crawlab/config/__init__.py b/crawlab/config/__init__.py

deleted file mode 100644

index 4d2d8d10..00000000

--- a/crawlab/config/__init__.py

+++ /dev/null

@@ -1,3 +0,0 @@

-# encoding: utf-8

-

-from config.config import *

diff --git a/crawlab/config/config.py b/crawlab/config/config.py

deleted file mode 100644

index 749ecdba..00000000

--- a/crawlab/config/config.py

+++ /dev/null

@@ -1,52 +0,0 @@

-import os

-

-BASE_DIR = os.path.dirname(os.path.dirname(os.path.dirname(os.path.abspath(__file__))))

-

-# Spider source code path

-PROJECT_SOURCE_FILE_FOLDER = os.path.join(BASE_DIR, "spiders")

-

-# Spider deployment path

-PROJECT_DEPLOY_FILE_FOLDER = '/var/crawlab'

-

-# Spider log path

-PROJECT_LOGS_FOLDER = '/var/log/crawlab'

-

-# Temp folder for packaging

-PROJECT_TMP_FOLDER = '/tmp'

-

-# MongoDB variables

-MONGO_HOST = '127.0.0.1'

-MONGO_PORT = 27017

-MONGO_USERNAME = None

-MONGO_PASSWORD = None

-MONGO_DB = 'crawlab_test'

-MONGO_AUTH_DB = 'crawlab_test'

-

-# Celery broker URL

-BROKER_URL = 'redis://127.0.0.1:6379/0'

-

-# Celery result backend URL

-if MONGO_USERNAME is not None:

- CELERY_RESULT_BACKEND = f'mongodb://{MONGO_USERNAME}:{MONGO_PASSWORD}@{MONGO_HOST}:{MONGO_PORT}/'

-else:

- CELERY_RESULT_BACKEND = f'mongodb://{MONGO_HOST}:{MONGO_PORT}/'

-

-# Celery MongoDB settings

-CELERY_MONGODB_BACKEND_SETTINGS = {

- 'database': 'crawlab_test',

- 'taskmeta_collection': 'tasks_celery',

-}

-

-# Celery timezone

-CELERY_TIMEZONE = 'Asia/Shanghai'

-

-# Whether to enable UTC

-CELERY_ENABLE_UTC = True

-

-# flower variables

-FLOWER_API_ENDPOINT = 'http://localhost:5555/api'

-

-# Flask variables

-DEBUG = False

-FLASK_HOST = '0.0.0.0'

-FLASK_PORT = 8000

diff --git a/crawlab/constants/__init__.py b/crawlab/constants/__init__.py

deleted file mode 100644

index e69de29b..00000000

diff --git a/crawlab/constants/file.py b/crawlab/constants/file.py

deleted file mode 100644

index 7418936e..00000000

--- a/crawlab/constants/file.py

+++ /dev/null

@@ -1,3 +0,0 @@

-class FileType:

- FILE = 1

- FOLDER = 2

diff --git a/crawlab/constants/lang.py b/crawlab/constants/lang.py

deleted file mode 100644

index b0dfdb4e..00000000

--- a/crawlab/constants/lang.py

+++ /dev/null

@@ -1,4 +0,0 @@

-class LangType:

- PYTHON = 1

- NODE = 2

- GO = 3

diff --git a/crawlab/constants/manage.py b/crawlab/constants/manage.py

deleted file mode 100644

index 1c57837d..00000000

--- a/crawlab/constants/manage.py

+++ /dev/null

@@ -1,6 +0,0 @@

-class ActionType:

- APP = 'app'

- FLOWER = 'flower'

- WORKER = 'worker'

- SCHEDULER = 'scheduler'

- RUN_ALL = 'run_all'

diff --git a/crawlab/constants/node.py b/crawlab/constants/node.py

deleted file mode 100644

index 4cdc3fda..00000000

--- a/crawlab/constants/node.py

+++ /dev/null

@@ -1,3 +0,0 @@

-class NodeStatus:

- ONLINE = 'online'

- OFFLINE = 'offline'

diff --git a/crawlab/constants/spider.py b/crawlab/constants/spider.py

deleted file mode 100644

index 97cbbdf2..00000000

--- a/crawlab/constants/spider.py

+++ /dev/null

@@ -1,44 +0,0 @@

-class SpiderType:

- CONFIGURABLE = 'configurable'

- CUSTOMIZED = 'customized'

-

-

-class LangType:

- PYTHON = 'python'

- JAVASCRIPT = 'javascript'

- JAVA = 'java'

- GO = 'go'

- OTHER = 'other'

-

-

-class CronEnabled:

- ON = 1

- OFF = 0

-

-

-class CrawlType:

- LIST = 'list'

- DETAIL = 'detail'

- LIST_DETAIL = 'list-detail'

-

-

-class QueryType:

- CSS = 'css'

- XPATH = 'xpath'

-

-

-class ExtractType:

- TEXT = 'text'

- ATTRIBUTE = 'attribute'

-

-

-SUFFIX_IGNORE = [

- 'pyc'

-]

-

-FILE_SUFFIX_LANG_MAPPING = {

- 'py': LangType.PYTHON,

- 'js': LangType.JAVASCRIPT,

- 'java': LangType.JAVA,

- 'go': LangType.GO,

-}

diff --git a/crawlab/constants/task.py b/crawlab/constants/task.py

deleted file mode 100644

index 07e169b3..00000000

--- a/crawlab/constants/task.py

+++ /dev/null

@@ -1,8 +0,0 @@

-class TaskStatus:

- PENDING = 'PENDING'

- STARTED = 'STARTED'

- SUCCESS = 'SUCCESS'

- FAILURE = 'FAILURE'

- RETRY = 'RETRY'

- REVOKED = 'REVOKED'

- UNAVAILABLE = 'UNAVAILABLE'

diff --git a/crawlab/db/__init__.py b/crawlab/db/__init__.py

deleted file mode 100644

index e69de29b..00000000

diff --git a/crawlab/db/manager.py b/crawlab/db/manager.py

deleted file mode 100644

index ac157dfb..00000000

--- a/crawlab/db/manager.py

+++ /dev/null

@@ -1,189 +0,0 @@

-from bson import ObjectId

-from pymongo import MongoClient, DESCENDING

-from config import MONGO_HOST, MONGO_PORT, MONGO_DB, MONGO_USERNAME, MONGO_PASSWORD, MONGO_AUTH_DB

-from utils import is_object_id

-

-

-class DbManager(object):

- __doc__ = """

- Database Manager class for handling database CRUD actions.

- """

-

- def __init__(self):

- self.mongo = MongoClient(host=MONGO_HOST,

- port=MONGO_PORT,

- username=MONGO_USERNAME,

- password=MONGO_PASSWORD,

- authSource=MONGO_AUTH_DB or MONGO_DB,

- connect=False)

- self.db = self.mongo[MONGO_DB]

-

- def save(self, col_name: str, item: dict, **kwargs) -> None:

- """

- Save the item in the specified collection

- :param col_name: collection name

- :param item: item object

- """

- col = self.db[col_name]

-

- # in case some fields cannot be saved in MongoDB

- if item.get('stats') is not None:

- item.pop('stats')

-

- return col.save(item, **kwargs)

-

- def remove(self, col_name: str, cond: dict, **kwargs) -> None:

- """

- Remove items given specified condition.

- :param col_name: collection name

- :param cond: condition or filter

- """

- col = self.db[col_name]

- col.remove(cond, **kwargs)

-

- def update(self, col_name: str, cond: dict, values: dict, **kwargs):

- """

- Update items given specified condition.

- :param col_name: collection name

- :param cond: condition or filter

- :param values: values to update

- """

- col = self.db[col_name]

- col.update(cond, {'$set': values}, **kwargs)

-

- def update_one(self, col_name: str, id: str, values: dict, **kwargs):

- """

- Update an item given specified _id

- :param col_name: collection name

- :param id: _id

- :param values: values to update

- """

- col = self.db[col_name]

- _id = id

- if is_object_id(id):

- _id = ObjectId(id)

- # print('UPDATE: _id = "%s", values = %s' % (str(_id), jsonify(values)))

- col.find_one_and_update({'_id': _id}, {'$set': values})

-

- def remove_one(self, col_name: str, id: str, **kwargs):

- """

- Remove an item given specified _id

- :param col_name: collection name

- :param id: _id

- """

- col = self.db[col_name]

- _id = id

- if is_object_id(id):

- _id = ObjectId(id)

- col.remove({'_id': _id})

-

- def list(self, col_name: str, cond: dict, sort_key=None, sort_direction=DESCENDING, skip: int = 0, limit: int = 100,

- **kwargs) -> list:

- """

- Return a list of items given specified condition, sort_key, sort_direction, skip, and limit.

- :param col_name: collection name

- :param cond: condition or filter

- :param sort_key: key to sort

- :param sort_direction: sort direction

- :param skip: skip number

- :param limit: limit number

- """

- if sort_key is None:

- sort_key = '_i'

- col = self.db[col_name]

- data = []

- for item in col.find(cond).sort(sort_key, sort_direction).skip(skip).limit(limit):

- data.append(item)

- return data

-

- def _get(self, col_name: str, cond: dict) -> dict:

- """

- Get an item given specified condition.

- :param col_name: collection name

- :param cond: condition or filter

- """

- col = self.db[col_name]

- return col.find_one(cond)

-

- def get(self, col_name: str, id: (ObjectId, str)) -> dict:

- """

- Get an item given specified _id.

- :param col_name: collection name

- :param id: _id

- """

- if type(id) == ObjectId:

- _id = id

- elif is_object_id(id):

- _id = ObjectId(id)

- else:

- _id = id

- return self._get(col_name=col_name, cond={'_id': _id})

-

- def get_one_by_key(self, col_name: str, key, value) -> dict:

- """

- Get an item given key/value condition.

- :param col_name: collection name

- :param key: key

- :param value: value

- """

- return self._get(col_name=col_name, cond={key: value})

-

- def count(self, col_name: str, cond) -> int:

- """

- Get total count of a collection given specified condition

- :param col_name: collection name

- :param cond: condition or filter

- """

- col = self.db[col_name]

- return col.count(cond)

-

- def get_latest_version(self, spider_id, node_id):

- """

- @deprecated

- """

- col = self.db['deploys']

- for item in col.find({'spider_id': ObjectId(spider_id), 'node_id': node_id}) \

- .sort('version', DESCENDING):

- return item.get('version')

- return None

-

- def get_last_deploy(self, spider_id):

- """

- Get latest deploy for a given spider_id

- """

- col = self.db['deploys']

- for item in col.find({'spider_id': ObjectId(spider_id)}) \

- .sort('finish_ts', DESCENDING):

- return item

- return None

-

- def get_last_task(self, spider_id):

- """

- Get latest deploy for a given spider_id

- """

- col = self.db['tasks']

- for item in col.find({'spider_id': ObjectId(spider_id)}) \

- .sort('create_ts', DESCENDING):

- return item

- return None

-

- def aggregate(self, col_name: str, pipelines, **kwargs):

- """

- Perform MongoDB col.aggregate action to aggregate stats given collection name and pipelines.

- Reference: https://docs.mongodb.com/manual/reference/command/aggregate/

- :param col_name: collection name

- :param pipelines: pipelines

- """

- col = self.db[col_name]

- return col.aggregate(pipelines, **kwargs)

-

- def create_index(self, col_name: str, keys: dict, **kwargs):

- col = self.db[col_name]

- col.create_index(keys=keys, **kwargs)

-

- def distinct(self, col_name: str, key: str, filter: dict):

- col = self.db[col_name]

- return sorted(col.distinct(key, filter))

-

-

-db_manager = DbManager()

diff --git a/crawlab/flower.py b/crawlab/flower.py

deleted file mode 100644

index 818d96f7..00000000

--- a/crawlab/flower.py

+++ /dev/null

@@ -1,20 +0,0 @@

-import os

-import sys

-import subprocess

-

-# make sure the working directory is in system path

-FILE_DIR = os.path.dirname(os.path.realpath(__file__))

-ROOT_PATH = os.path.abspath(os.path.join(FILE_DIR, '..'))

-sys.path.append(ROOT_PATH)

-

-from utils.log import other

-from config import BROKER_URL

-

-if __name__ == '__main__':

- p = subprocess.Popen([sys.executable, '-m', 'celery', 'flower', '-b', BROKER_URL],

- stdout=subprocess.PIPE,

- stderr=subprocess.STDOUT,

- cwd=ROOT_PATH)

- for line in iter(p.stdout.readline, 'b'):

- if line.decode('utf-8') != '':

- other.info(line.decode('utf-8'))

diff --git a/crawlab/requirements.txt b/crawlab/requirements.txt

deleted file mode 100644

index 282c4cc2..00000000

--- a/crawlab/requirements.txt

+++ /dev/null

@@ -1,17 +0,0 @@

-Flask_CSV==1.2.0

-gevent==1.4.0

-requests==2.21.0

-Scrapy==1.6.0

-pymongo==3.7.2

-APScheduler==3.6.0

-coloredlogs==10.0

-Flask_RESTful==0.3.7

-Flask==1.0.2

-lxml==4.3.3

-Flask_Cors==3.0.7

-Werkzeug==0.15.2

-eventlet

-Celery

-Flower

-redis

-gunicorn

diff --git a/crawlab/routes/__init__.py b/crawlab/routes/__init__.py

deleted file mode 100644

index e3b7fa36..00000000

--- a/crawlab/routes/__init__.py

+++ /dev/null

@@ -1,7 +0,0 @@

-# from app import api

-# from routes.deploys import DeployApi

-# from routes.files import FileApi

-# from routes.nodes import NodeApi

-# from routes.spiders import SpiderApi

-# from routes.tasks import TaskApi

-# print(api)

diff --git a/crawlab/routes/base.py b/crawlab/routes/base.py

deleted file mode 100644

index 3bb2c1b0..00000000

--- a/crawlab/routes/base.py

+++ /dev/null

@@ -1,189 +0,0 @@

-from flask_restful import reqparse, Resource

-# from flask_restplus import reqparse, Resource

-

-from db.manager import db_manager

-from utils import jsonify

-

-DEFAULT_ARGS = [

- 'page_num',

- 'page_size',

- 'filter'

-]

-

-

-class BaseApi(Resource):

- """

- Base class for API. All API classes should inherit this class.

- """

- col_name = 'tmp'

- parser = reqparse.RequestParser()

- arguments = []

-

- def __init__(self):

- super(BaseApi).__init__()

- self.parser.add_argument('page_num', type=int)

- self.parser.add_argument('page_size', type=int)

- self.parser.add_argument('filter', type=str)

-

- for arg, type in self.arguments:

- self.parser.add_argument(arg, type=type)

-

- def get(self, id: str = None, action: str = None) -> (dict, tuple):

- """

- GET method for retrieving item information.

- If id is specified and action is not, return the object of the given id;

- If id and action are both specified, execute the given action results of the given id;

- If neither id nor action is specified, return the list of items given the page_size, page_num and filter

- :param id:

- :param action:

- :return:

- """

- # import pdb

- # pdb.set_trace()

- args = self.parser.parse_args()

-

- # action by id

- if action is not None:

- if not hasattr(self, action):

- return {

- 'status': 'ok',

- 'code': 400,

- 'error': 'action "%s" invalid' % action

- }, 400

- return getattr(self, action)(id)

-

- # list items

- elif id is None:

- # filter

- cond = {}

- if args.get('filter') is not None:

- cond = args.filter

- # cond = json.loads(args.filter)

-

- # page number

- page = 1

- if args.get('page_num') is not None:

- page = args.page

- # page = int(args.page)

-

- # page size

- page_size = 10

- if args.get('page_size') is not None:

- page_size = args.page_size

- # page = int(args.page_size)

-

- # TODO: sort functionality

-

- # total count

- total_count = db_manager.count(col_name=self.col_name, cond=cond)

-

- # items

- items = db_manager.list(col_name=self.col_name,

- cond=cond,

- skip=(page - 1) * page_size,

- limit=page_size)

-

- # TODO: getting status for node

-

- return {

- 'status': 'ok',

- 'total_count': total_count,

- 'page_num': page,

- 'page_size': page_size,

- 'items': jsonify(items)

- }

-

- # get item by id

- else:

- return jsonify(db_manager.get(col_name=self.col_name, id=id))

-

- def put(self) -> (dict, tuple):

- """

- PUT method for creating a new item.

- :return:

- """

- args = self.parser.parse_args()

- item = {}

- for k in args.keys():

- if k not in DEFAULT_ARGS:

- item[k] = args.get(k)

- id = db_manager.save(col_name=self.col_name, item=item)

-

- # execute after_update hook

- self.after_update(id)

-

- return jsonify(id)

-

- def update(self, id: str = None) -> (dict, tuple):

- """

- Helper function for update action given the id.

- :param id:

- :return:

- """

- args = self.parser.parse_args()

- item = db_manager.get(col_name=self.col_name, id=id)

- if item is None:

- return {

- 'status': 'ok',

- 'code': 401,

- 'error': 'item not exists'

- }, 401

- values = {}

- for k in args.keys():

- if k not in DEFAULT_ARGS:

- if args.get(k) is not None:

- values[k] = args.get(k)

- item = db_manager.update_one(col_name=self.col_name, id=id, values=values)

-

- # execute after_update hook

- self.after_update(id)

-

- return jsonify(item)

-

- def post(self, id: str = None, action: str = None):

- """

- POST method of the given id for performing an action.

- :param id:

- :param action:

- :return:

- """

- # perform update action if action is not specified

- if action is None:

- return self.update(id)

-

- # if action is not defined in the attributes, return 400 error

- if not hasattr(self, action):

- return {

- 'status': 'ok',

- 'code': 400,

- 'error': 'action "%s" invalid' % action

- }, 400

-

- # perform specified action of given id

- return getattr(self, action)(id)

-

- def delete(self, id: str = None) -> (dict, tuple):

- """

- DELETE method of given id for deleting an item.

- :param id:

- :return:

- """

- # perform delete action

- db_manager.remove_one(col_name=self.col_name, id=id)

-

- # execute after_update hook

- self.after_update(id)

-

- return {

- 'status': 'ok',

- 'message': 'deleted successfully',

- }

-

- def after_update(self, id: str = None):

- """

- This is the after update hook once the update method is performed.

- To be overridden.

- :param id:

- :return:

- """

- pass

diff --git a/crawlab/routes/deploys.py b/crawlab/routes/deploys.py

deleted file mode 100644

index 885173c1..00000000

--- a/crawlab/routes/deploys.py

+++ /dev/null

@@ -1,46 +0,0 @@

-from db.manager import db_manager

-from routes.base import BaseApi

-from utils import jsonify

-

-

-class DeployApi(BaseApi):

- col_name = 'deploys'

-

- arguments = (

- ('spider_id', str),

- ('node_id', str),

- )

-

- def get(self, id: str = None, action: str = None) -> (dict, tuple):

- """

- GET method of DeployAPI.

- :param id: deploy_id

- :param action: action

- """

- # action by id

- if action is not None:

- if not hasattr(self, action):

- return {

- 'status': 'ok',

- 'code': 400,

- 'error': 'action "%s" invalid' % action

- }, 400

- return getattr(self, action)(id)

-

- # get one node

- elif id is not None:

- return jsonify(db_manager.get('deploys', id=id))

-

- # get a list of items

- else:

- items = db_manager.list('deploys', {})

- deploys = []

- for item in items:

- spider_id = item['spider_id']

- spider = db_manager.get('spiders', id=str(spider_id))

- item['spider_name'] = spider['name']

- deploys.append(item)

- return {

- 'status': 'ok',

- 'items': jsonify(deploys)

- }

diff --git a/crawlab/routes/files.py b/crawlab/routes/files.py

deleted file mode 100644

index ef80e20b..00000000

--- a/crawlab/routes/files.py

+++ /dev/null

@@ -1,50 +0,0 @@

-import os

-

-from flask_restful import reqparse, Resource

-

-from utils import jsonify

-from utils.file import get_file_content

-

-

-class FileApi(Resource):

- parser = reqparse.RequestParser()

- arguments = []

-

- def __init__(self):

- super(FileApi).__init__()

- self.parser.add_argument('path', type=str)

-

- def get(self, action=None):

- """

- GET method of FileAPI.

- :param action: action

- """

- args = self.parser.parse_args()

- path = args.get('path')

-

- if action is not None:

- if action == 'getDefaultPath':

- return jsonify({

- 'defaultPath': os.path.abspath(os.path.join(os.path.curdir, 'spiders'))

- })

-

- elif action == 'get_file':

- file_data = get_file_content(path)

- file_data['status'] = 'ok'

- return jsonify(file_data)

-

- else:

- return {}

-

- folders = []

- files = []

- for _path in os.listdir(path):

- if os.path.isfile(os.path.join(path, _path)):

- files.append(_path)

- elif os.path.isdir(os.path.join(path, _path)):

- folders.append(_path)

- return jsonify({

- 'status': 'ok',

- 'files': sorted(files),

- 'folders': sorted(folders),

- })

diff --git a/crawlab/routes/nodes.py b/crawlab/routes/nodes.py

deleted file mode 100644

index 4ab7a4ad..00000000

--- a/crawlab/routes/nodes.py

+++ /dev/null

@@ -1,81 +0,0 @@

-from constants.task import TaskStatus

-from db.manager import db_manager

-from routes.base import BaseApi

-from utils import jsonify

-from utils.node import update_nodes_status

-

-

-class NodeApi(BaseApi):

- col_name = 'nodes'

-

- arguments = (

- ('name', str),

- ('description', str),

- ('ip', str),

- ('port', str),

- )

-

- def get(self, id: str = None, action: str = None) -> (dict, tuple):

- """

- GET method of NodeAPI.

- :param id: item id

- :param action: action

- """

- # action by id

- if action is not None:

- if not hasattr(self, action):

- return {

- 'status': 'ok',

- 'code': 400,

- 'error': 'action "%s" invalid' % action

- }, 400

- return getattr(self, action)(id)

-

- # get one node

- elif id is not None:

- return db_manager.get('nodes', id=id)

-

- # get a list of items

- else:

- # get a list of active nodes from flower and save to db

- update_nodes_status()

-

- # iterate db nodes to update status

- nodes = db_manager.list('nodes', {})

-

- return {

- 'status': 'ok',

- 'items': jsonify(nodes)

- }

-

- def get_deploys(self, id: str) -> (dict, tuple):

- """

- Get a list of latest deploys of given node_id

- :param id: node_id

- """

- items = db_manager.list('deploys', {'node_id': id}, limit=10, sort_key='finish_ts')

- deploys = []

- for item in items:

- spider_id = item['spider_id']

- spider = db_manager.get('spiders', id=str(spider_id))

- item['spider_name'] = spider['name']

- deploys.append(item)

- return {

- 'status': 'ok',

- 'items': jsonify(deploys)

- }

-

- def get_tasks(self, id):

- """

- Get a list of latest tasks of given node_id

- :param id: node_id

- """

- items = db_manager.list('tasks', {'node_id': id}, limit=10, sort_key='create_ts')

- for item in items:

- spider_id = item['spider_id']

- spider = db_manager.get('spiders', id=str(spider_id))

- item['spider_name'] = spider['name']

- return {

- 'status': 'ok',

- 'items': jsonify(items)

- }

diff --git a/crawlab/routes/schedules.py b/crawlab/routes/schedules.py

deleted file mode 100644

index 01db8be1..00000000

--- a/crawlab/routes/schedules.py

+++ /dev/null

@@ -1,25 +0,0 @@

-import json

-

-import requests

-

-from constants.task import TaskStatus

-from db.manager import db_manager

-from routes.base import BaseApi

-from tasks.scheduler import scheduler

-from utils import jsonify

-from utils.spider import get_spider_col_fields

-

-

-class ScheduleApi(BaseApi):

- col_name = 'schedules'

-

- arguments = (

- ('name', str),

- ('description', str),

- ('cron', str),

- ('spider_id', str),

- ('params', str)

- )

-

- def after_update(self, id: str = None):

- scheduler.update()

diff --git a/crawlab/routes/sites.py b/crawlab/routes/sites.py

deleted file mode 100644

index e49dbe37..00000000

--- a/crawlab/routes/sites.py

+++ /dev/null

@@ -1,90 +0,0 @@

-import json

-

-from bson import ObjectId

-from pymongo import ASCENDING

-

-from db.manager import db_manager

-from routes.base import BaseApi

-from utils import jsonify

-

-

-class SiteApi(BaseApi):

- col_name = 'sites'

-

- arguments = (

- ('keyword', str),

- ('main_category', str),

- ('category', str),

- )

-

- def get(self, id: str = None, action: str = None):

- # action by id

- if action is not None:

- if not hasattr(self, action):

- return {

- 'status': 'ok',

- 'code': 400,

- 'error': 'action "%s" invalid' % action

- }, 400

- return getattr(self, action)(id)

-

- elif id is not None:

- site = db_manager.get(col_name=self.col_name, id=id)

- return jsonify(site)

-

- # list tasks

- args = self.parser.parse_args()

- page_size = args.get('page_size') or 10

- page_num = args.get('page_num') or 1

- filter_str = args.get('filter')

- keyword = args.get('keyword')

- filter_ = {}

- if filter_str is not None:

- filter_ = json.loads(filter_str)

- if keyword is not None:

- filter_['$or'] = [

- {'description': {'$regex': keyword}},

- {'name': {'$regex': keyword}},

- {'domain': {'$regex': keyword}}

- ]

-

- items = db_manager.list(

- col_name=self.col_name,

- cond=filter_,

- limit=page_size,

- skip=page_size * (page_num - 1),

- sort_key='rank',

- sort_direction=ASCENDING

- )

-

- sites = []

- for site in items:

- # get spider count

- site['spider_count'] = db_manager.count('spiders', {'site': site['_id']})

-

- sites.append(site)

-

- return {

- 'status': 'ok',

- 'total_count': db_manager.count(self.col_name, filter_),

- 'page_num': page_num,

- 'page_size': page_size,

- 'items': jsonify(sites)

- }

-

- def get_main_category_list(self, id):

- return {

- 'status': 'ok',

- 'items': db_manager.distinct(col_name=self.col_name, key='main_category', filter={})

- }

-

- def get_category_list(self, id):

- args = self.parser.parse_args()

- filter_ = {}

- if args.get('main_category') is not None:

- filter_['main_category'] = args.get('main_category')

- return {

- 'status': 'ok',

- 'items': db_manager.distinct(col_name=self.col_name, key='category',

- filter=filter_)

- }

diff --git a/crawlab/routes/spiders.py b/crawlab/routes/spiders.py

deleted file mode 100644

index e3d897cb..00000000

--- a/crawlab/routes/spiders.py

+++ /dev/null

@@ -1,937 +0,0 @@

-import json

-import os

-import shutil

-import subprocess

-from datetime import datetime

-from random import random

-from urllib.parse import urlparse

-

-import gevent

-import requests

-from bson import ObjectId

-from flask import current_app, request

-from flask_restful import reqparse, Resource

-from lxml import etree

-from werkzeug.datastructures import FileStorage

-

-from config import PROJECT_DEPLOY_FILE_FOLDER, PROJECT_SOURCE_FILE_FOLDER, PROJECT_TMP_FOLDER

-from constants.node import NodeStatus

-from constants.spider import SpiderType, CrawlType, QueryType, ExtractType

-from constants.task import TaskStatus

-from db.manager import db_manager

-from routes.base import BaseApi

-from tasks.scheduler import scheduler

-from tasks.spider import execute_spider, execute_config_spider

-from utils import jsonify

-from utils.deploy import zip_file, unzip_file

-from utils.file import get_file_suffix_stats, get_file_suffix

-from utils.spider import get_lang_by_stats, get_last_n_run_errors_count, get_last_n_day_tasks_count, get_list_page_data, \

- get_detail_page_data, generate_urls

-

-parser = reqparse.RequestParser()

-parser.add_argument('file', type=FileStorage, location='files')

-

-IGNORE_DIRS = [

- '.idea'

-]

-

-

-class SpiderApi(BaseApi):

- col_name = 'spiders'

-

- arguments = (

- # name of spider

- ('name', str),

-

- # execute shell command

- ('cmd', str),

-

- # spider source folder

- ('src', str),

-

- # spider type

- ('type', str),

-

- # spider language

- ('lang', str),

-

- # spider results collection

- ('col', str),

-

- # spider schedule cron

- ('cron', str),

-

- # spider schedule cron enabled

- ('cron_enabled', int),

-

- # spider schedule cron enabled

- ('envs', str),

-

- # spider site

- ('site', str),

-

- ########################

- # Configurable Spider

- ########################

-

- # spider crawl fields for list page

- ('fields', str),

-

- # spider crawl fields for detail page

- ('detail_fields', str),

-

- # spider crawl type

- ('crawl_type', str),

-

- # spider start url

- ('start_url', str),

-

- # url pattern: support generation of urls with patterns

- ('url_pattern', str),

-

- # spider item selector

- ('item_selector', str),

-

- # spider item selector type

- ('item_selector_type', str),

-

- # spider pagination selector

- ('pagination_selector', str),

-

- # spider pagination selector type

- ('pagination_selector_type', str),

-

- # whether to obey robots.txt

- ('obey_robots_txt', bool),

-

- # item threshold to filter out non-relevant list items

- ('item_threshold', int),

- )

-

- def get(self, id=None, action=None):

- """

- GET method of SpiderAPI.

- :param id: spider_id

- :param action: action

- """

- # action by id

- if action is not None:

- if not hasattr(self, action):

- return {

- 'status': 'ok',

- 'code': 400,

- 'error': 'action "%s" invalid' % action

- }, 400

- return getattr(self, action)(id)

-

- # get one node

- elif id is not None:

- spider = db_manager.get('spiders', id=id)

-

- # get deploy

- last_deploy = db_manager.get_last_deploy(spider_id=spider['_id'])

- if last_deploy is not None:

- spider['deploy_ts'] = last_deploy['finish_ts']

-

- return jsonify(spider)

-

- # get a list of items

- else:

- items = []

-

- # get customized spiders

- dirs = os.listdir(PROJECT_SOURCE_FILE_FOLDER)

- for _dir in dirs:

- if _dir in IGNORE_DIRS:

- continue

-

- dir_path = os.path.join(PROJECT_SOURCE_FILE_FOLDER, _dir)

- dir_name = _dir

- spider = db_manager.get_one_by_key('spiders', key='src', value=dir_path)

-

- # new spider

- if spider is None:

- stats = get_file_suffix_stats(dir_path)

- lang = get_lang_by_stats(stats)

- spider_id = db_manager.save('spiders', {

- 'name': dir_name,

- 'src': dir_path,

- 'lang': lang,

- 'suffix_stats': stats,

- 'type': SpiderType.CUSTOMIZED

- })

- spider = db_manager.get('spiders', id=spider_id)

-

- # existing spider

- else:

- # get last deploy

- last_deploy = db_manager.get_last_deploy(spider_id=spider['_id'])

- if last_deploy is not None:

- spider['deploy_ts'] = last_deploy['finish_ts']

-

- # file stats

- stats = get_file_suffix_stats(dir_path)

-

- # language

- lang = get_lang_by_stats(stats)

-

- # spider type

- type_ = SpiderType.CUSTOMIZED

-

- # update spider data

- db_manager.update_one('spiders', id=str(spider['_id']), values={

- 'lang': lang,

- 'type': type_,

- 'suffix_stats': stats,

- })

-

- # append spider

- items.append(spider)

-

- # get configurable spiders

- for spider in db_manager.list('spiders', {'type': SpiderType.CONFIGURABLE}):

- # append spider

- items.append(spider)

-

- # get other info

- for i in range(len(items)):

- spider = items[i]

-

- # get site

- if spider.get('site') is not None:

- site = db_manager.get('sites', spider['site'])

- if site is not None:

- items[i]['site_name'] = site['name']

-

- # get last task

- last_task = db_manager.get_last_task(spider_id=spider['_id'])

- if last_task is not None:

- items[i]['task_ts'] = last_task['create_ts']

-

- # ---------

- # stats

- # ---------

- # last 5-run errors

- items[i]['last_5_errors'] = get_last_n_run_errors_count(spider_id=spider['_id'], n=5)

- items[i]['last_7d_tasks'] = get_last_n_day_tasks_count(spider_id=spider['_id'], n=5)

-

- # sort spiders by _id descending

- items = reversed(sorted(items, key=lambda x: x['_id']))

-

- return {

- 'status': 'ok',

- 'items': jsonify(items)

- }

-

- def delete(self, id: str = None) -> (dict, tuple):

- """

- DELETE method of given id for deleting an spider.

- :param id:

- :return:

- """

- # get spider from db

- spider = db_manager.get(col_name=self.col_name, id=id)

-

- # delete spider folder

- if spider.get('type') == SpiderType.CUSTOMIZED:

- try:

- shutil.rmtree(os.path.abspath(os.path.join(PROJECT_SOURCE_FILE_FOLDER, spider['src'])))

- except Exception as err:

- return {

- 'status': 'ok',

- 'error': str(err)

- }, 500

-

- # perform delete action

- db_manager.remove_one(col_name=self.col_name, id=id)

-

- # remove related tasks

- db_manager.remove(col_name='tasks', cond={'spider_id': spider['_id']})

-

- # remove related schedules

- db_manager.remove(col_name='schedules', cond={'spider_id': spider['_id']})

-

- # execute after_update hook

- self.after_update(id)

-

- return {

- 'status': 'ok',

- 'message': 'deleted successfully',

- }

-

- def crawl(self, id: str) -> (dict, tuple):

- """

- Submit an HTTP request to start a crawl task in the node of given spider_id.

- @deprecated

- :param id: spider_id

- """

- args = self.parser.parse_args()

- node_id = args.get('node_id')

-

- if node_id is None:

- return {

- 'code': 400,

- 'status': 400,

- 'error': 'node_id cannot be empty'

- }, 400

-

- # get node from db

- node = db_manager.get('nodes', id=node_id)

-

- # validate ip and port

- if node.get('ip') is None or node.get('port') is None:

- return {

- 'code': 400,

- 'status': 'ok',

- 'error': 'node ip and port should not be empty'

- }, 400

-

- # dispatch crawl task

- res = requests.get('http://%s:%s/api/spiders/%s/on_crawl?node_id=%s' % (

- node.get('ip'),

- node.get('port'),

- id,

- node_id

- ))

- data = json.loads(res.content.decode('utf-8'))

- return {

- 'code': res.status_code,

- 'status': 'ok',

- 'error': data.get('error'),

- 'task': data.get('task')

- }

-

- def on_crawl(self, id: str) -> (dict, tuple):

- """

- Start a crawl task.

- :param id: spider_id

- :return:

- """

- args = self.parser.parse_args()

- params = args.get('params')

-

- spider = db_manager.get('spiders', id=ObjectId(id))

-

- # determine execute function

- if spider['type'] == SpiderType.CONFIGURABLE:

- # configurable spider

- exec_func = execute_config_spider

- else:

- # customized spider

- exec_func = execute_spider

-

- # trigger an asynchronous job

- job = exec_func.delay(id, params)

-

- # create a new task

- db_manager.save('tasks', {

- '_id': job.id,

- 'spider_id': ObjectId(id),

- 'cmd': spider.get('cmd'),

- 'params': params,

- 'create_ts': datetime.utcnow(),

- 'status': TaskStatus.PENDING

- })

-

- return {

- 'code': 200,

- 'status': 'ok',

- 'task': {

- 'id': job.id,

- 'status': job.status

- }

- }

-

- def deploy(self, id: str) -> (dict, tuple):

- """

- Submit HTTP requests to deploy the given spider to all nodes.

- :param id:

- :return:

- """

- spider = db_manager.get('spiders', id=id)

- nodes = db_manager.list('nodes', {'status': NodeStatus.ONLINE})

-

- for node in nodes:

- node_id = node['_id']

-

- output_file_name = '%s_%s.zip' % (

- datetime.now().strftime('%Y%m%d%H%M%S'),

- str(random())[2:12]

- )

- output_file_path = os.path.join(PROJECT_TMP_FOLDER, output_file_name)

-

- # zip source folder to zip file

- zip_file(source_dir=spider['src'],

- output_filename=output_file_path)

-

- # upload to api

- files = {'file': open(output_file_path, 'rb')}

- r = requests.post('http://%s:%s/api/spiders/%s/deploy_file?node_id=%s' % (

- node.get('ip'),

- node.get('port'),

- id,

- node_id,

- ), files=files)

-

- # TODO: checkpoint for errors

-

- return {

- 'code': 200,

- 'status': 'ok',

- 'message': 'deploy success'

- }

-

- def deploy_file(self, id: str = None) -> (dict, tuple):

- """

- Receive HTTP request of deploys and unzip zip files and copy to the destination directories.

- :param id: spider_id

- """

- args = parser.parse_args()

- node_id = request.args.get('node_id')

- f = args.file

-

- if get_file_suffix(f.filename) != 'zip':

- return {

- 'status': 'ok',

- 'error': 'file type mismatch'

- }, 400

-

- # save zip file on temp folder

- file_path = '%s/%s' % (PROJECT_TMP_FOLDER, f.filename)

- with open(file_path, 'wb') as fw:

- fw.write(f.stream.read())

-

- # unzip zip file

- dir_path = file_path.replace('.zip', '')

- if os.path.exists(dir_path):

- shutil.rmtree(dir_path)

- unzip_file(file_path, dir_path)

-

- # get spider and version

- spider = db_manager.get(col_name=self.col_name, id=id)

- if spider is None:

- return None, 400

-

- # make source / destination

- src = os.path.join(dir_path, os.listdir(dir_path)[0])

- dst = os.path.join(PROJECT_DEPLOY_FILE_FOLDER, str(spider.get('_id')))

-

- # logging info

- current_app.logger.info('src: %s' % src)

- current_app.logger.info('dst: %s' % dst)

-

- # remove if the target folder exists

- if os.path.exists(dst):

- shutil.rmtree(dst)

-

- # copy from source to destination

- shutil.copytree(src=src, dst=dst)

-

- # save to db

- # TODO: task management for deployment

- db_manager.save('deploys', {

- 'spider_id': ObjectId(id),

- 'node_id': node_id,

- 'finish_ts': datetime.utcnow()

- })

-

- return {

- 'code': 200,

- 'status': 'ok',

- 'message': 'deploy success'

- }

-

- def get_deploys(self, id: str) -> (dict, tuple):

- """

- Get a list of latest deploys of given spider_id

- :param id: spider_id

- """

- items = db_manager.list('deploys', cond={'spider_id': ObjectId(id)}, limit=10, sort_key='finish_ts')

- deploys = []

- for item in items:

- spider_id = item['spider_id']

- spider = db_manager.get('spiders', id=str(spider_id))

- item['spider_name'] = spider['name']

- deploys.append(item)

- return {

- 'status': 'ok',

- 'items': jsonify(deploys)

- }

-

- def get_tasks(self, id: str) -> (dict, tuple):

- """

- Get a list of latest tasks of given spider_id

- :param id:

- """

- items = db_manager.list('tasks', cond={'spider_id': ObjectId(id)}, limit=10, sort_key='create_ts')

- for item in items:

- spider_id = item['spider_id']

- spider = db_manager.get('spiders', id=str(spider_id))

- item['spider_name'] = spider['name']

- if item.get('status') is None:

- item['status'] = TaskStatus.UNAVAILABLE

- return {

- 'status': 'ok',

- 'items': jsonify(items)

- }

-

- def after_update(self, id: str = None) -> None:

- """

- After each spider is updated, update the cron scheduler correspondingly.

- :param id: spider_id

- """

- scheduler.update()

-

- def update_envs(self, id: str):

- """

- Update environment variables

- :param id: spider_id

- """

- args = self.parser.parse_args()

- envs = json.loads(args.envs)

- db_manager.update_one(col_name='spiders', id=id, values={'envs': envs})

-

- def update_fields(self, id: str):

- """

- Update list page fields variables for configurable spiders

- :param id: spider_id

- """

- args = self.parser.parse_args()

- fields = json.loads(args.fields)

- db_manager.update_one(col_name='spiders', id=id, values={'fields': fields})

-

- def update_detail_fields(self, id: str):

- """

- Update detail page fields variables for configurable spiders

- :param id: spider_id

- """

- args = self.parser.parse_args()

- detail_fields = json.loads(args.detail_fields)

- db_manager.update_one(col_name='spiders', id=id, values={'detail_fields': detail_fields})

-

- @staticmethod

- def _get_html(spider) -> etree.Element:

- if spider['type'] != SpiderType.CONFIGURABLE:

- return {

- 'status': 'ok',

- 'error': 'type %s is invalid' % spider['type']

- }, 400

-

- if spider.get('start_url') is None:

- return {

- 'status': 'ok',

- 'error': 'start_url should not be empty'

- }, 400

-

- try:

- r = None

- for url in generate_urls(spider['start_url']):

- r = requests.get(url, headers={

- 'user-agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_10_5) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/74.0.3729.169 Safari/537.36'

- })

- break

- except Exception as err:

- return {

- 'status': 'ok',

- 'error': 'connection error'

- }, 500

-

- if not r:

- return {

- 'status': 'ok',

- 'error': 'response is not returned'

- }, 500

-

- if r and r.status_code != 200:

- return {

- 'status': 'ok',

- 'error': 'status code is not 200, but %s' % r.status_code

- }, r.status_code

-

- # get html parse tree

- try:

- sel = etree.HTML(r.content.decode('utf-8'))

- except Exception as err:

- sel = etree.HTML(r.content)

-

- # remove unnecessary tags

- unnecessary_tags = [

- 'script'

- ]

- for t in unnecessary_tags:

- etree.strip_tags(sel, t)

-

- return sel

-

- @staticmethod

- def _get_children(sel):

- return [tag for tag in sel.getchildren() if type(tag) != etree._Comment]

-

- @staticmethod

- def _get_text_child_tags(sel):

- tags = []

- for tag in sel.iter():

- if type(tag) != etree._Comment and tag.text is not None and tag.text.strip() != '':

- tags.append(tag)

- return tags

-

- @staticmethod

- def _get_a_child_tags(sel):

- tags = []

- for tag in sel.iter():

- if tag.tag == 'a':

- if tag.get('href') is not None and not tag.get('href').startswith('#') and not tag.get(

- 'href').startswith('javascript'):

- tags.append(tag)

-

- return tags

-

- @staticmethod

- def _get_next_page_tag(sel):

- next_page_text_list = [

- '下一页',

- '下页',

- 'next page',

- 'next',

- '>'

- ]

- for tag in sel.iter():

- if tag.text is not None and tag.text.lower().strip() in next_page_text_list:

- return tag

- return None

-

- def preview_crawl(self, id: str):

- spider = db_manager.get(col_name='spiders', id=id)

-

- # get html parse tree

- sel = self._get_html(spider)

-

- # when error happens, return

- if type(sel) == type(tuple):

- return sel

-

- # parse fields

- if spider['crawl_type'] == CrawlType.LIST:

- if spider.get('item_selector') is None:

- return {

- 'status': 'ok',

- 'error': 'item_selector should not be empty'

- }, 400

-

- data = get_list_page_data(spider, sel)[:10]

-

- return {

- 'status': 'ok',

- 'items': data

- }

-

- elif spider['crawl_type'] == CrawlType.DETAIL:

- # TODO: detail page preview

- pass

-

- elif spider['crawl_type'] == CrawlType.LIST_DETAIL:

- data = get_list_page_data(spider, sel)[:10]

-

- ev_list = []

- for idx, d in enumerate(data):

- for f in spider['fields']:

- if f.get('is_detail'):

- url = d.get(f['name'])

- if url is not None:

- if not url.startswith('http') and not url.startswith('//'):

- u = urlparse(spider['start_url'])

- url = f'{u.scheme}://{u.netloc}{url}'

- ev_list.append(gevent.spawn(get_detail_page_data, url, spider, idx, data))

- break

-

- gevent.joinall(ev_list)

-

- return {

- 'status': 'ok',

- 'items': data

- }

-

- def extract_fields(self, id: str):

- """

- Extract list fields from a web page

- :param id:

- :return:

- """

- spider = db_manager.get(col_name='spiders', id=id)

-

- # get html parse tree

- sel = self._get_html(spider)

-

- # when error happens, return

- if type(sel) == tuple:

- return sel

-

- list_tag_list = []

- threshold = spider.get('item_threshold') or 10

- # iterate all child nodes in a top-down direction

- for tag in sel.iter():

- # get child tags

- child_tags = self._get_children(tag)

-

- if len(child_tags) < threshold:

- # if number of child tags is below threshold, skip

- continue

- else:

- # have one or more child tags

- child_tags_set = set(map(lambda x: x.tag, child_tags))

-

- # if there are more than 1 tag names, skip

- if len(child_tags_set) > 1:

- continue

-

- # add as list tag

- list_tag_list.append(tag)

-

- # find the list tag with the most child text tags

- max_tag = None

- max_num = 0

- for tag in list_tag_list:

- _child_text_tags = self._get_text_child_tags(self._get_children(tag)[0])

- if len(_child_text_tags) > max_num:

- max_tag = tag

- max_num = len(_child_text_tags)

-

- # get list item selector

- item_selector = None

- item_selector_type = 'css'

- if max_tag.get('id') is not None:

- item_selector = f'#{max_tag.get("id")} > {self._get_children(max_tag)[0].tag}'

- elif max_tag.get('class') is not None:

- cls_str = '.'.join([x for x in max_tag.get("class").split(' ') if x != ''])

- if len(sel.cssselect(f'.{cls_str}')) == 1:

- item_selector = f'.{cls_str} > {self._get_children(max_tag)[0].tag}'

- else:

- item_selector = max_tag.getroottree().getpath(max_tag)

- item_selector_type = 'xpath'

-

- # get list fields

- fields = []

- if item_selector is not None:

- first_tag = self._get_children(max_tag)[0]

- for i, tag in enumerate(self._get_text_child_tags(first_tag)):

- el_list = first_tag.cssselect(f'{tag.tag}')

- if len(el_list) == 1:

- fields.append({

- 'name': f'field{i + 1}',

- 'type': 'css',

- 'extract_type': 'text',

- 'query': f'{tag.tag}',

- })

- elif tag.get('class') is not None:

- cls_str = '.'.join([x for x in tag.get("class").split(' ') if x != ''])

- if len(tag.cssselect(f'{tag.tag}.{cls_str}')) == 1:

- fields.append({

- 'name': f'field{i + 1}',

- 'type': 'css',

- 'extract_type': 'text',

- 'query': f'{tag.tag}.{cls_str}',

- })

- else:

- for j, el in enumerate(el_list):

- if tag == el:

- fields.append({

- 'name': f'field{i + 1}',

- 'type': 'css',

- 'extract_type': 'text',

- 'query': f'{tag.tag}:nth-of-type({j + 1})',

- })

-

- for i, tag in enumerate(self._get_a_child_tags(self._get_children(max_tag)[0])):

- # if the tag is <a>, extract its href

- if tag.get('class') is not None:

- cls_str = '.'.join([x for x in tag.get("class").split(' ') if x != ''])

- fields.append({

- 'name': f'field{i + 1}_url',

- 'type': 'css',

- 'extract_type': 'attribute',

- 'attribute': 'href',

- 'query': f'{tag.tag}.{cls_str}',

- })

-

- # get pagination tag

- pagination_selector = None

- pagination_tag = self._get_next_page_tag(sel)

- if pagination_tag is not None:

- if pagination_tag.get('id') is not None:

- pagination_selector = f'#{pagination_tag.get("id")}'

- elif pagination_tag.get('class') is not None and len(sel.cssselect(f'.{pagination_tag.get("id")}')) == 1:

- pagination_selector = f'.{pagination_tag.get("id")}'

-

- return {

- 'status': 'ok',

- 'item_selector': item_selector,

- 'item_selector_type': item_selector_type,

- 'pagination_selector': pagination_selector,

- 'fields': fields

- }

-

-

-class SpiderImportApi(Resource):

- __doc__ = """

- API for importing spiders from external resources including Github, Gitlab, and subversion (WIP)

- """

- parser = reqparse.RequestParser()

- arguments = [

- ('url', str)

- ]

-

- def __init__(self):

- super(SpiderImportApi).__init__()

- for arg, type in self.arguments:

- self.parser.add_argument(arg, type=type)

-

- def post(self, platform: str = None) -> (dict, tuple):

- if platform is None:

- return {

- 'status': 'ok',

- 'code': 404,

- 'error': 'platform invalid'

- }, 404

-

- if not hasattr(self, platform):

- return {

- 'status': 'ok',

- 'code': 400,

- 'error': 'platform "%s" invalid' % platform

- }, 400

-

- return getattr(self, platform)()

-

- def github(self) -> None:

- """

- Import Github API

- """

- self._git()

-

- def gitlab(self) -> None:

- """

- Import Gitlab API

- """

- self._git()

-

- def _git(self):

- """

- Helper method to perform a git import (basically "git clone" method).

- """

- args = self.parser.parse_args()

- url = args.get('url')

- if url is None:

- return {

- 'status': 'ok',

- 'code': 400,

- 'error': 'url should not be empty'

- }, 400

-

- try:

- p = subprocess.Popen(['git', 'clone', url], cwd=PROJECT_SOURCE_FILE_FOLDER)

- _stdout, _stderr = p.communicate()

- except Exception as err:

- return {

- 'status': 'ok',

- 'code': 500,

- 'error': str(err)

- }, 500

-

- return {

- 'status': 'ok',

- 'message': 'success'

- }

-

-

-class SpiderManageApi(Resource):

- parser = reqparse.RequestParser()

- arguments = [

- ('url', str)

- ]

-

- def post(self, action: str) -> (dict, tuple):

- """

- POST method for SpiderManageAPI.

- :param action:

- """

- if not hasattr(self, action):

- return {

- 'status': 'ok',

- 'code': 400,

- 'error': 'action "%s" invalid' % action

- }, 400

-

- return getattr(self, action)()

-

- def deploy_all(self) -> (dict, tuple):

- """

- Deploy all spiders to all nodes.

- """

- # active nodes

- nodes = db_manager.list('nodes', {'status': NodeStatus.ONLINE})

-

- # all spiders

- spiders = db_manager.list('spiders', {'cmd': {'$exists': True}})

-

- # iterate all nodes

- for node in nodes:

- node_id = node['_id']

- for spider in spiders:

- spider_id = spider['_id']

- spider_src = spider['src']

-

- output_file_name = '%s_%s.zip' % (

- datetime.now().strftime('%Y%m%d%H%M%S'),

- str(random())[2:12]

- )

- output_file_path = os.path.join(PROJECT_TMP_FOLDER, output_file_name)

-

- # zip source folder to zip file

- zip_file(source_dir=spider_src,

- output_filename=output_file_path)

-

- # upload to api

- files = {'file': open(output_file_path, 'rb')}

- r = requests.post('http://%s:%s/api/spiders/%s/deploy_file?node_id=%s' % (

- node.get('ip'),

- node.get('port'),

- spider_id,

- node_id,

- ), files=files)

-

- return {

- 'status': 'ok',

- 'message': 'success'

- }

-

- def upload(self):

- f = request.files['file']

-

- if get_file_suffix(f.filename) != 'zip':

- return {

- 'status': 'ok',

- 'error': 'file type mismatch'

- }, 400

-

- # save zip file on temp folder

- file_path = '%s/%s' % (PROJECT_TMP_FOLDER, f.filename)

- with open(file_path, 'wb') as fw:

- fw.write(f.stream.read())

-

- # unzip zip file

- dir_path = file_path.replace('.zip', '')

- if os.path.exists(dir_path):

- shutil.rmtree(dir_path)

- unzip_file(file_path, dir_path)

-

- # copy to source folder

- output_path = os.path.join(PROJECT_SOURCE_FILE_FOLDER, f.filename.replace('.zip', ''))

- print(output_path)

- if os.path.exists(output_path):

- shutil.rmtree(output_path)

- shutil.copytree(dir_path, output_path)

-

- return {

- 'status': 'ok',

- 'message': 'success'

- }

diff --git a/crawlab/routes/stats.py b/crawlab/routes/stats.py

deleted file mode 100644

index fe43e7a9..00000000

--- a/crawlab/routes/stats.py

+++ /dev/null

@@ -1,235 +0,0 @@

-import os

-from collections import defaultdict

-from datetime import datetime, timedelta

-

-from flask_restful import reqparse, Resource

-

-from constants.task import TaskStatus

-from db.manager import db_manager

-from routes.base import BaseApi

-from utils import jsonify

-

-

-class StatsApi(BaseApi):

- arguments = [

- ['spider_id', str],

- ]

-

- def get(self, action: str = None) -> (dict, tuple):

- """

- GET method of StatsApi.

- :param action: action

- """

- # action

- if action is not None:

- if not hasattr(self, action):

- return {

- 'status': 'ok',

- 'code': 400,

- 'error': 'action "%s" invalid' % action

- }, 400

- return getattr(self, action)()

-

- else:

- return {}

-

- def get_home_stats(self):

- """

- Get stats for home page

- """

- # overview stats

- task_count = db_manager.count('tasks', {})

- spider_count = db_manager.count('spiders', {})

- node_count = db_manager.count('nodes', {})

- deploy_count = db_manager.count('deploys', {})

-

- # daily stats

- cur = db_manager.aggregate('tasks', [

- {

- '$project': {

- 'date': {

- '$dateToString': {

- 'format': '%Y-%m-%d',

- 'date': '$create_ts'

- }

- }

- }

- },

- {

- '$group': {

- '_id': '$date',

- 'count': {

- '$sum': 1

- }

- }

- },

- {

- '$sort': {

- '_id': 1

- }

- }

- ])

- date_cache = {}

- for item in cur:

- date_cache[item['_id']] = item['count']

- start_date = datetime.now() - timedelta(31)

- end_date = datetime.now() - timedelta(1)

- date = start_date

- daily_tasks = []

- while date < end_date:

- date = date + timedelta(1)

- date_str = date.strftime('%Y-%m-%d')

- daily_tasks.append({

- 'date': date_str,

- 'count': date_cache.get(date_str) or 0,

- })

-

- return {

- 'status': 'ok',

- 'overview_stats': {

- 'task_count': task_count,

- 'spider_count': spider_count,

- 'node_count': node_count,

- 'deploy_count': deploy_count,

- },

- 'daily_tasks': daily_tasks

- }

-

- def get_spider_stats(self):

- args = self.parser.parse_args()

- spider_id = args.get('spider_id')

- spider = db_manager.get('spiders', id=spider_id)

- tasks = db_manager.list(

- col_name='tasks',

- cond={

- 'spider_id': spider['_id'],

- 'create_ts': {

- '$gte': datetime.now() - timedelta(30)

- }

- },

- limit=9999999

- )

-

- # task count

- task_count = len(tasks)

-

- # calculate task count stats

- task_count_by_status = defaultdict(int)

- task_count_by_node = defaultdict(int)

- total_seconds = 0

- for task in tasks:

- task_count_by_status[task['status']] += 1

- task_count_by_node[task.get('node_id')] += 1

- if task['status'] == TaskStatus.SUCCESS and task.get('finish_ts'):

- duration = (task['finish_ts'] - task['create_ts']).total_seconds()

- total_seconds += duration

-

- # task count by node

- task_count_by_node_ = []

- for status, value in task_count_by_node.items():

- task_count_by_node_.append({

- 'name': status,

- 'value': value

- })

-

- # task count by status

- task_count_by_status_ = []

- for status, value in task_count_by_status.items():

- task_count_by_status_.append({

- 'name': status,

- 'value': value

- })

-

- # success rate

- success_rate = task_count_by_status[TaskStatus.SUCCESS] / task_count

-

- # average duration

- avg_duration = total_seconds / task_count

-

- # calculate task count by date

- cur = db_manager.aggregate('tasks', [

- {

- '$match': {

- 'spider_id': spider['_id']

- }

- },

- {

- '$project': {

- 'date': {

- '$dateToString': {

- 'format': '%Y-%m-%d',

- 'date': '$create_ts'

- }

- },

- 'duration': {

- '$subtract': [

- '$finish_ts',